Large language models (LLMs) are the main kind of text-handling AIs, and they're popping up everywhere. ChatGPT is by far the most famous tool that uses an LLM—it's powered by a specially tuned version of OpenAI's GPT models. But there are lots of other chatbots and text generators—including everything from Google Gemini and Anthropic's Claude to Writesonic and Jasper—that are built on top of LLMs.

LLMs have been simmering in research labs since the late 2010s, but after the release of ChatGPT (which showcased the power of GPT), they've burst out of the lab and into the real world.

Some LLMs have been in development for years. Others have been quickly spun up to catch the latest hype cycle. And yet more are open research tools. The first generations of large multimodal models (LMMs), which are able to handle other input and output modalities, like images, audio, and video, as well as text, are also starting to be widely available—which complicates things even more. So here, I'll break down some of the most important LLMs on the scene right now.

The best LLMs in 2024

There are dozens of major LLMs, and hundreds that are arguably significant for some reason or other. Listing them all would be nearly impossible, and in any case, it would be out of date within days because of how quickly LLMs are being developed. (I'm updating this list a few months after it was first published, and there are already new versions of multiple models to talk about, as well as at least one new one to add.)

Take the word "best" with a grain of salt here: I've tried to narrow things down by offering a list of the most significant, interesting, and popular LLMs (and LMMs), not necessarily the ones that outperform on benchmarks (though most of these do). I've also mostly focused on LLMs that you can use—rather than ones that are the subjects of super interesting research papers—since we like to keep things practical around here.

One last thing before diving in: a lot of AI-powered apps don't list what LLMs they rely on. For some, we can guess, or it's clear from their marketing materials, but for lots of them, we just don't know. That's why you'll see "Undisclosed" fairly often in the table below: it just means we don't know of any major apps that use the LLM, though it's possible some do.


| LLM | Developer | Popular apps that use it | Access |
|---|---|---|---|
| GPT | OpenAI | Microsoft, Duolingo, Stripe, Zapier, Dropbox, ChatGPT | API |
| Gemini | Google | Gemini chatbot, some features on other Google apps like Docs and Gmail | API |
| Gemma | Google | Undisclosed | Open |
| Llama 3 | Meta | AI features in Meta apps, Meta AI chatbot | Open |
| Vicuna | LMSYS Org | Chatbot Arena | Open |
| Claude 3 | Anthropic | Slack, Notion, Zoom | API |
| Stable Beluga | Stability AI | Undisclosed | Open |
| StableLM 2 | Stability AI | Undisclosed | Open |
| Coral | Cohere | HyperWrite, Jasper, Notion, LongShot | API |
| Falcon | Technology Innovation Institute | Undisclosed | Open |
| DBRX | Databricks and Mosaic | Undisclosed | Open |
| Mixtral 8x7B and 8x22B | Mistral AI | Undisclosed | Open |
| XGen-7B | Salesforce | Undisclosed | Open |
| Grok | xAI | Grok chatbot | Chatbot and open |

What is an LLM?

An LLM, or large language model, is a general-purpose AI text generator. It's what's behind the scenes of all AI chatbots and AI writing generators.

LLMs are supercharged auto-complete. Stripped of fancy interfaces and other workarounds, what they do is take a prompt and generate an answer using a string of plausible follow-on text. The chatbots built on top of LLMs aren't looking for keywords so they can answer with a canned response—instead, they're doing their best to understand what's being asked and reply appropriately.
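To make the "supercharged auto-complete" idea concrete, here's a deliberately tiny Python sketch. It's a toy under heavy assumptions, not how any real LLM works: instead of a neural network over tokens, it simply counts which word follows which in a one-line training text (a bigram model), then keeps appending the most likely next word. The names `train_bigrams` and `autocomplete` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Repeatedly append the most frequent follow-on word (greedy decoding)."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in the training text
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


model = train_bigrams("the cat sat on the mat . the cat ate the fish .")
print(autocomplete(model, "the cat", max_words=4))  # the cat sat on the cat
```

The loop of predicting the next piece of text, appending it, and predicting again is the part that carries over to real LLMs; what they replace is the prediction step, swapping simple word counts for a neural network trained on vastly more text. The output also shows how quickly a model with very little context drifts into plausible-sounding nonsense.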

This is why LLMs have really taken off: the same models (with or without a bit of extra training) can be used to respond to customer queries, write marketing materials, summarize meeting notes, and do a whole lot more.

But LLMs can only work with text, which is why LMMs are starting to crop up: they can incorporate images, handwritten notes, audio, video, and more. While not as readily available as LLMs, they have the potential to offer a lot more real-world functionality.

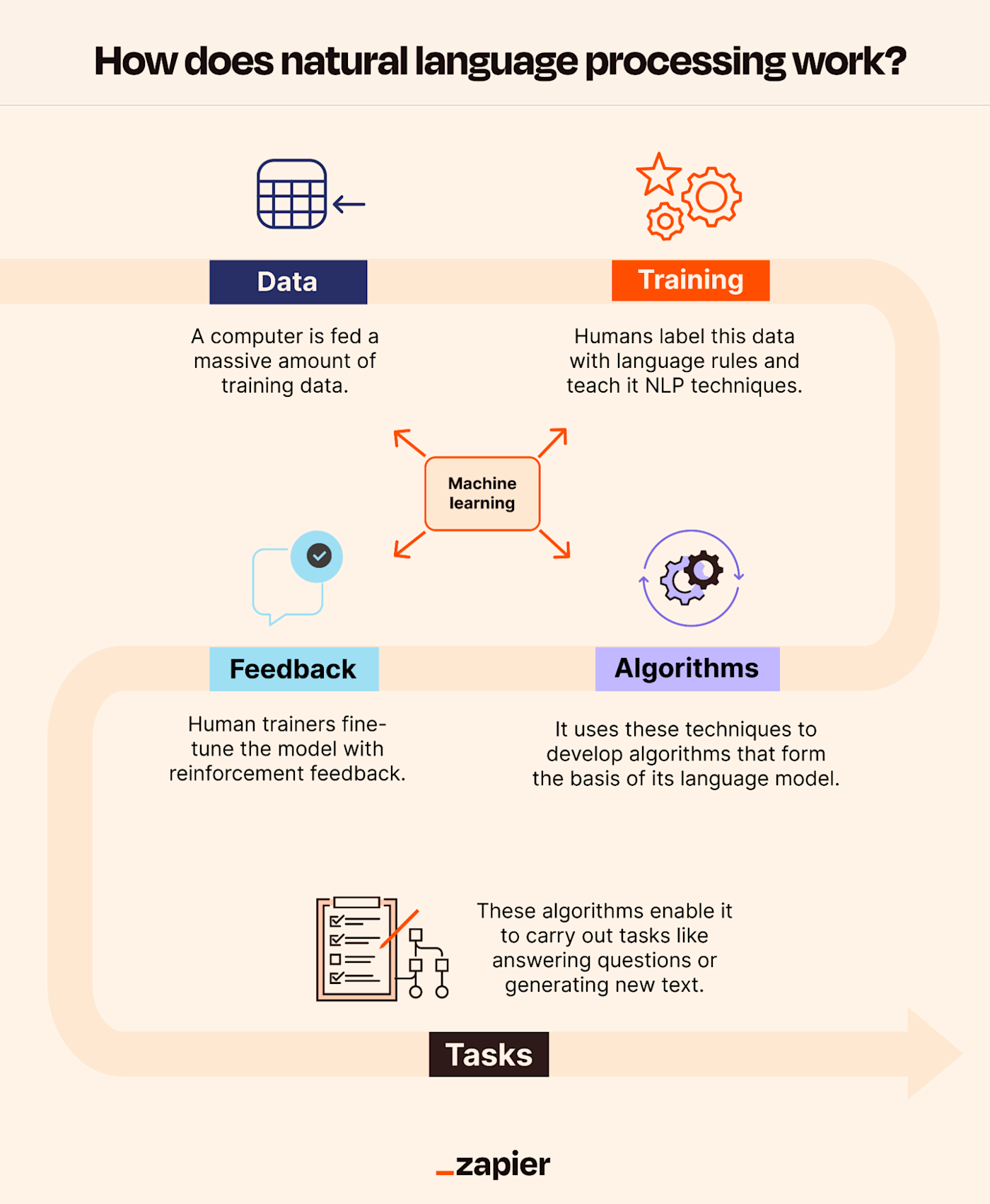

How do LLMs work?

Early LLMs, like GPT-1, would fall apart and start to generate nonsense after a few sentences, but today's LLMs, like GPT-4, can generate thousands of words that all make sense.

To get to this point, LLMs were trained on huge corpora of data. The specifics vary a little between LLMs, depending on how careful the developers are to fully acquire the rights to the materials they're using, but as a general rule, you can assume they've been trained on something like the entire public internet plus a vast library of published books. This is why LLMs can generate text that sounds so authoritative on such a wide variety of subjects.

From this training data, LLMs are able to model the relationships between different words (or really, fragments of words called tokens) using high-dimensional vectors. This is where things get very complicated and mathy, but the basics are that every individual token gets a unique ID, each ID is mapped to a vector (called an embedding), and tokens with similar meanings end up with similar vectors. These embeddings are fed into a neural network, a kind of multi-layered algorithm loosely inspired by how the human brain works, and that network is at the core of every LLM.
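Here's a minimal sketch of those two ideas, tokens as IDs and embeddings as vectors, using a hypothetical seven-piece vocabulary and made-up three-dimensional embeddings. Real tokenizers (for example, ones based on byte-pair encoding) learn tens of thousands of pieces from data, and real embeddings are learned during training and have thousands of dimensions. `VOCAB`, `EMBEDDINGS`, and `tokenize` are illustrative names only.

```python
import math

# Hypothetical toy vocabulary mapping word pieces to token IDs.
VOCAB = {"un": 0, "believ": 1, "able": 2, "apple": 3, "pie": 4, "mac": 5, "ipad": 6}


def tokenize(word: str) -> list[int]:
    """Greedy longest-match split of a word into known pieces, returned as token IDs."""
    ids, start = [], 0
    while start < len(word):
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                start = end
                break
        else:
            raise ValueError(f"no token covers {word[start:]!r}")
    return ids


# Made-up embeddings: similar concepts get vectors pointing in similar directions.
EMBEDDINGS = {
    "mac": [0.9, 0.1, 0.0],
    "ipad": [0.8, 0.2, 0.1],
    "pie": [0.1, 0.9, 0.3],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


print(tokenize("unbelievable"))  # [0, 1, 2]
print(cosine(EMBEDDINGS["mac"], EMBEDDINGS["ipad"]))  # high: both are Apple devices
print(cosine(EMBEDDINGS["mac"], EMBEDDINGS["pie"]))  # low: unrelated concepts
```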

The neural network has an input layer, an output layer, and multiple hidden layers, each with multiple nodes. Every connection between nodes has a weight, and together those weights determine what words should follow on from the input. For example, if the input string contains the word "Apple," the neural network has to decide whether to follow up with something like "Mac" or "iPad," something like "pie" or "crumble," or something else entirely. When we talk about how many parameters an LLM has, we're counting those weights (plus a smaller number of values called biases), and that number grows with the number of layers and nodes in the network. In general, the more parameters, the more complex the text a model is able to understand and generate.
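A minimal sketch of that structure, with made-up sizes: 4 inputs, two hidden layers of 8 nodes, and 4 outputs, one per hypothetical follow-on word for "Apple." The weights are random (untrained), so the probabilities it prints are meaningless. The point is the shape and the parameter count: each fully connected layer contributes one weight per input-output connection plus one bias per output node. Real LLMs are transformers with additional layer types (such as attention) and billions of parameters.

```python
import math
import random

# Hypothetical follow-on tokens for the input "Apple".
CANDIDATES = ["Mac", "iPad", "pie", "crumble"]


def make_layer(n_in: int, n_out: int, rng: random.Random):
    """One fully connected layer: a weight for every input-output pair, a bias per output."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def count_parameters(layer_sizes: list[int]) -> int:
    """Weights (n_in * n_out) plus biases (n_out), summed over consecutive layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def forward(x: list[float], layers) -> list[float]:
    """Pass x through each layer; hidden layers apply ReLU, the output layer returns raw scores."""
    for i, (weights, biases) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
sizes = [4, 8, 8, len(CANDIDATES)]  # input layer, two hidden layers, output layer
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

stored = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
print(stored, count_parameters(sizes))  # 148 148

encoded_apple = [0.5, -0.2, 0.1, 0.9]  # stand-in for an embedding of "Apple"
for token, p in zip(CANDIDATES, softmax(forward(encoded_apple, layers))):
    print(f"{token}: {p:.2f}")
```

The arithmetic is (4×8 + 8) + (8×8 + 8) + (8×4 + 4) = 40 + 72 + 36 = 148, which shows why adding layers or widening them drives the parameter count up so quickly.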

LMMs are even more complex because they also have to incorporate data from additional modalities, but they're typically trained and structured in much the same way.

Of course, an AI model trained on the open internet with little to no direction sounds like the stuff of nightmares. And it probably wouldn't be very useful either, so at this point, LLMs undergo further training and fine-tuning to guide them toward generating safe and useful responses. One of the major ways this works is by adjusting the network's weights, though there are other aspects to it too.
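Here's a deliberately simplified sketch of what "adjusting the weights" means: three hypothetical candidate responses, each with a score, and a few steps of gradient descent on cross-entropy loss that nudge the scores toward the preferred response. In this toy, the scores themselves stand in for the model's weights; in a real LLM the same kind of gradient flows back into billions of weights, and production fine-tuning involves much more than this.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fine_tune_step(scores: list[float], target: int, learning_rate: float = 0.5) -> list[float]:
    """One gradient-descent step on the cross-entropy loss for the preferred response.

    With softmax outputs, the gradient of the loss with respect to score i is
    p_i - 1 for the target and p_i for everything else.
    """
    probs = softmax(scores)
    return [s - learning_rate * (p - (1.0 if i == target else 0.0))
            for i, (s, p) in enumerate(zip(scores, probs))]


# Hypothetical responses and scores standing in for a pre-trained model's preferences.
responses = ["helpful answer", "rude answer", "made-up answer"]
scores = [1.0, 1.2, 0.8]  # slightly prefers the rude answer before fine-tuning
initial_probs = softmax(scores)

for _ in range(5):
    scores = fine_tune_step(scores, target=0)
final_probs = softmax(scores)

for text, before, after in zip(responses, initial_probs, final_probs):
    print(f"{text}: {before:.2f} -> {after:.2f}")
```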

All this is to say that while LLMs are black boxes, what's going on inside them isn't magic. Once you understand a little about how they work, it's easy to see why they're so good at answering certain kinds of questions. It's also easy to understand why they tend to make up (or hallucinate) random things.

For example, take questions like these:

What bones does the femur connect to?

What currency does the USA use?

What is the tallest mountain in the world?

These are easy for LLMs because the text they were trained on almost certainly answers them many times over, so the resulting neural network is strongly predisposed to respond correctly.

Then look at questions like these:

What year did Margot Robbie win an Oscar for Barbie?

What weighs more, a ton of feathers or a ton of feathers?

Why did China join the European Union?

You're far more likely to get something weird with these. The neural network will still generate follow-on text, but because the questions are tricky or rest on a false premise, that text is much less likely to be correct.
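A minimal sketch of why a trick question still gets an answer: generation picks a token from a probability distribution, and there's always some token to pick. The two distributions below are invented for illustration and don't come from any real model: one sharply peaked, like a well-covered fact, and one nearly flat, like a false-premise question. Entropy, measured in bits, is a standard way to quantify how spread out a distribution is.

```python
import math
import random


def entropy(probs) -> float:
    """Shannon entropy in bits: low means one option dominates, high means it's close to a guess."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Made-up next-token distributions, for illustration only.
confident = {"Everest": 0.97, "K2": 0.02, "Kangchenjunga": 0.01}
uncertain = {"2024": 0.26, "2023": 0.25, "never": 0.25, "2022": 0.24}

rng = random.Random(42)
for name, dist in [("tallest mountain", confident), ("Margot Robbie's Oscar", uncertain)]:
    # Sampling always returns some token, whether or not the distribution is confident.
    token = rng.choices(list(dist), weights=list(dist.values()))[0]
    print(f"{name}: {token!r} (entropy {entropy(dist.values()):.2f} bits)")
```

Nothing in the sampling step itself says "I don't know": in the flat case, the correct answer ("never") is just one option among several that are about equally likely.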

What can LLMs be used for?

LLMs are powerful mostly because they generalize to so many different situations and uses. The same core LLM (sometimes with a bit of fine-tuning) can be used to do dozens of different tasks. While everything they do is based around generating text, the specific ways they're prompted to do it change what features they appear to have.

Here are some of the tasks LLMs are commonly used for:

General-purpose chatbots (like ChatGPT and Google Gemini)

Customer service chatbots that are trained on your business's docs and data

Translating text from one language to another

Converting text into computer code, or code from one programming language into another

Generating social media posts, blog posts, and other marketing copy

Sentiment analysis

Moderating content

Correcting and editing writing

Data analysis

And hundreds of other things. We're only in the early days of the current AI revolution.
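Much of that versatility comes down to how the request is worded. The sketch below wraps the same input text in different task instructions; the templates and the `build_prompt` helper are hypothetical, and the code doesn't call any model. In practice, you'd send the resulting string to whichever LLM API you use.

```python
# Hypothetical prompt templates: the wording is illustrative, not taken from any product.
TASK_TEMPLATES = {
    "translate": "Translate the following text into {language}:\n\n{text}",
    "summarize": "Summarize these meeting notes in three bullet points:\n\n{text}",
    "sentiment": ("Is the sentiment of this review positive, negative, or neutral? "
                  "Answer with one word.\n\n{text}"),
}


def build_prompt(task: str, text: str, **options: str) -> str:
    """Wrap the user's text in task-specific instructions for the same underlying model."""
    return TASK_TEMPLATES[task].format(text=text, **options)


print(build_prompt("translate", "Where is the train station?", language="French"))
print(build_prompt("sentiment", "The battery died after two days."))
```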

But there are also plenty of things that LLMs can't do that other kinds of AI models can. A few examples:

Interpret images

Generate images

Convert files between different formats

Search the web

Reliably perform math and other logical operations

Of course, some LLMs and chatbots appear to do some of these things. But in most cases, there's another AI service stepping in to assist—or you're actually using an LMM.
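One common pattern behind another service "stepping in" is routing: ordinary software decides which tool should handle a request. The sketch below assumes a very simple rule (anything that looks like pure arithmetic goes to an exact calculator, everything else to the model). `ask_llm` is a hypothetical placeholder rather than a real API, and many real systems instead let the model itself decide when to call a tool.

```python
import ast
import operator
import re

# Arithmetic operators the calculator is allowed to evaluate.
OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
       ast.Div: operator.truediv, ast.USub: operator.neg}

# Only digits, whitespace, decimal points, + - * /, and parentheses.
ARITHMETIC = re.compile(r"^[\d\s.+\-*/()]+$")


def calculate(expr: str) -> float:
    """Evaluate +, -, *, / arithmetic exactly, by walking the parse tree (no eval())."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.operand))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expr, mode="eval"))


def ask_llm(prompt: str) -> str:
    """Hypothetical placeholder for a call to a language model."""
    return f"[LLM response to: {prompt}]"


def answer(question: str) -> str:
    # Route pure arithmetic to the calculator; send everything else to the model.
    if ARITHMETIC.match(question):
        return str(calculate(question))
    return ask_llm(question)


print(answer("12345 * 6789"))  # 83810205
print(answer("What currency does the USA use?"))
```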

With all that context, let's move on to the LLMs themselves.

The best LLMs in 2024

GPT

Developer: OpenAI

Parameters: More than 175 billion (likely trillions)

Access: API

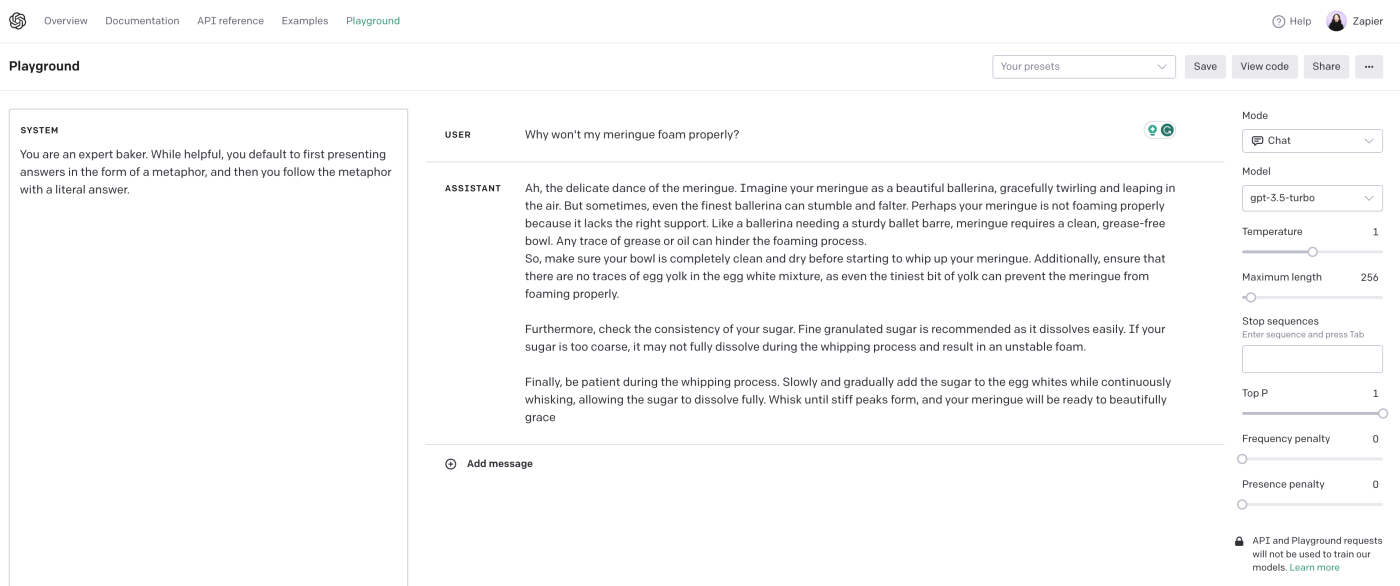

OpenAI's Generative Pre-trained Transformer (GPT) models kickstarted the latest AI hype cycle. There are three main models currently available: GPT-3.5-turbo, GPT-4, and GPT-4 Turbo. There's also a new multimodal version called GPT-4o. All the different versions of GPT are general-purpose AI models with an API, and they're used by a diverse range of companies, including Microsoft, Duolingo, Stripe, Descript, Dropbox, and Zapier, to power countless different tools. Still, ChatGPT is probably the most popular demo of its powers.

You can also connect Zapier to GPT or ChatGPT, so you can use GPT straight from the other apps in your tech stack. Here's more about how to automate ChatGPT, or you can get started with one of these pre-made workflows.

Start a conversation with ChatGPT when a prompt is posted in a particular Slack channel

Create email copy with ChatGPT from new Gmail emails and save as drafts in Gmail

Generate conversations in ChatGPT with new emails in Gmail

Gemini

Developer: Google

Parameters: Nano available in 1.8 billion and 3.25 billion versions; others unknown

Access: API

Google Gemini is a family of AI models from Google. The three models—Gemini Nano, Gemini Pro, and Gemini Ultra—are designed to operate on different devices, from smartphones to dedicated servers. While capable of generating text like an LLM, the Gemini models are also natively able to handle images, audio, video, code, and other kinds of information.

Gemini Pro also powers AI features throughout Google's apps, like Docs and Gmail, as well as Google's chatbot, which is confusingly also called Gemini (formerly Bard). Gemini 1.5 Pro is available to developers through Google AI Studio or Vertex AI, and Gemini Nano and Ultra are due out later in 2024.

With Zapier's Google Vertex AI and Google AI Studio integrations, you can access Gemini from all the apps you use at work. Here are a few examples to get you started.

Send prompts to Google Vertex AI from Google Sheets and save the responses

Start a conversation with Google Vertex AI when a prompt is posted in a particular Slack channel

Send prompts in Google AI Studio (Gemini) for new or updated rows in Google Sheets

Label incoming emails automatically with Google AI Studio (Gemini)

Google Gemma

Developer: Google

Parameters: 2 billion and 7 billion

Access: Open

Google Gemma is a family of open AI models from Google based on the same research and technology it used to develop Gemini. It's available in two sizes: 2 billion parameters and 7 billion parameters.

Llama 3

Developer: Meta

Parameters: 8 billion, 70 billion, and 400 billion (unreleased)

Access: Open

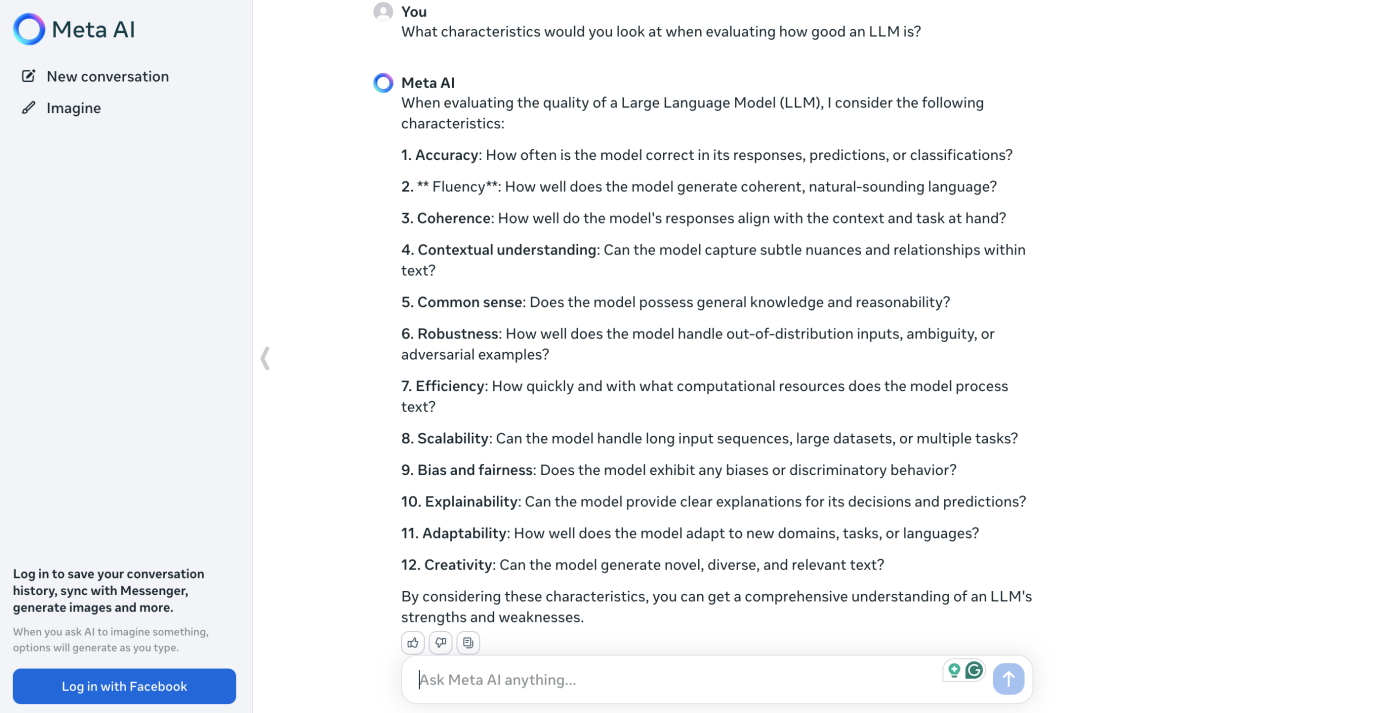

Llama 3 is a family of open LLMs from Meta, the parent company of Facebook and Instagram. In addition to powering most AI features throughout Meta's apps, it's one of the most popular and powerful open LLMs, and you can download and run the model yourself (the code is on GitHub). Because it's free for most research and commercial uses, a lot of other LLMs use Llama 3 as a base.

There are 8 billion and 70 billion parameter versions available now, and a 400 billion parameter version is still in training. Meta's previous model family, Llama 2, is still available in 7 billion, 13 billion, and 70 billion parameter versions.

Vicuna

Developer: LMSYS Org

Parameters: 7 billion, 13 billion, and 33 billion

Access: Open

Vicuna is an open chatbot built off Meta's Llama LLM. It's widely used in AI research and as part of Chatbot Arena, a chatbot benchmark operated by LMSYS.

Claude 3

Developer: Anthropic

Parameters: Unknown

Access: API

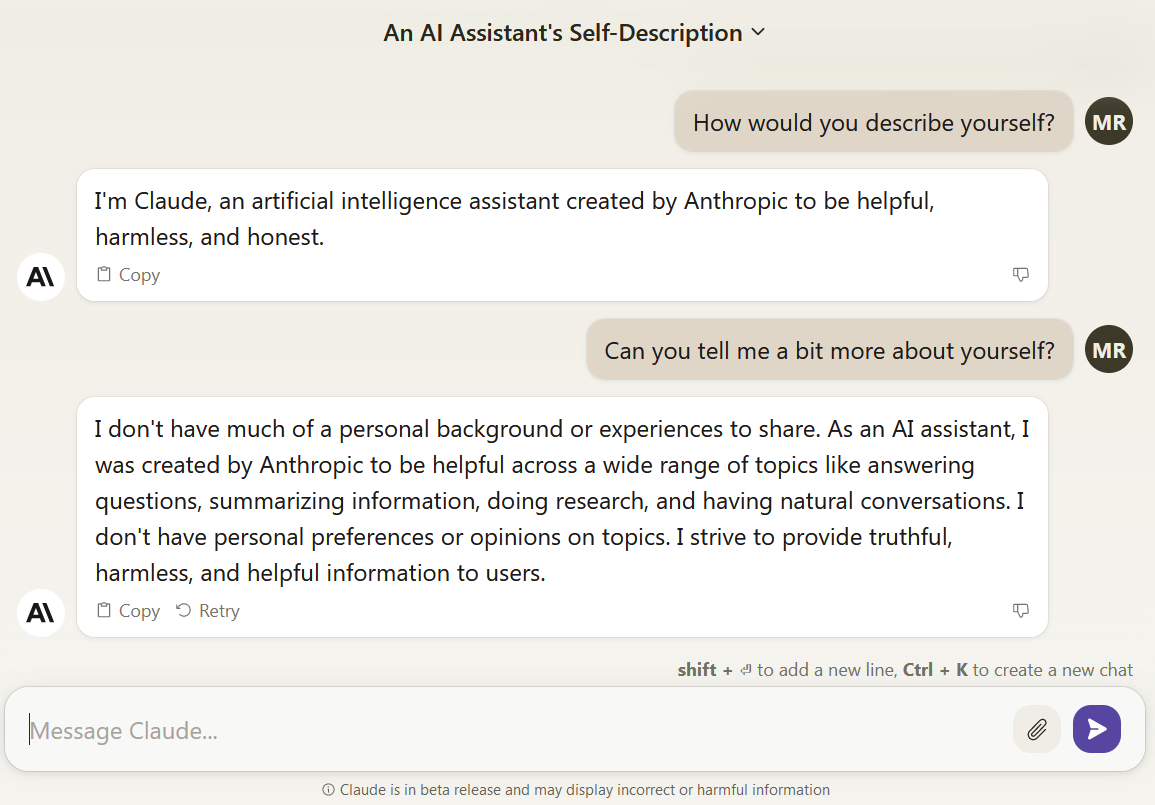

Claude 3 is arguably one of the most important competitors to GPT. Its three models—Haiku, Sonnet, and Opus—are designed to be helpful, honest, harmless, and crucially, safe for enterprise customers to use. As a result, companies like Slack, Notion, and Zoom have all partnered with Anthropic.

Like all the other proprietary LLMs, Claude 3 is only available as an API, though it can be further trained on your data and fine-tuned to respond how you need. You can also connect Claude to Zapier so you can automate Claude from all your other apps. Here are some pre-made workflows to get you started.

Write AI-generated email responses with Claude and store in Gmail

Generate an AI-analysis of Google Form responses and store in Google Sheets

Start conversation in Anthropic (Claude) for every new labeled email in Gmail

Stable Beluga and StableLM 2

Developer: Stability AI

Parameters: 1.6 billion, 7 billion, 12 billion, 13 billion, and 70 billion

Access: Open

Stability AI is the group behind Stable Diffusion, one of the best AI image generators. They've also released a handful of open LLMs based on Llama, including Stable Beluga and StableLM 2, although they're nowhere near as popular as the image generator.

Coral

Developer: Cohere

Parameters: Unknown

Access: API

Like Claude 3, Cohere's Coral LLM is designed for enterprise users. It similarly offers an API and allows organizations to train versions of its model on their own data, so it can accurately respond to specific queries from employees and customers.

Falcon

Developer: Technology Innovation Institute

Parameters: 1.3 billion, 7.5 billion, 40 billion, and 180 billion

Access: Open

Falcon is a family of open LLMs that have consistently performed well in the various AI benchmarks. It has models with up to 180 billion parameters and can outperform older models like GPT-3.5 in some tasks. The smaller models, like Falcon 7B and 40B, are released under a permissive Apache 2.0 license, so they're suitable for commercial and research use; Falcon 180B uses TII's own license, which allows commercial use with some restrictions.

DBRX

Developer: Databricks and Mosaic

Parameters: 132 billion

Access: Open

Databricks' DBRX LLM is the successor to Mosaic's MPT-7B and MPT-30B LLMs. It's one of the most powerful open LLMs. Interestingly, it's not built on top of Meta's Llama model, unlike a lot of other open models.

DBRX matches or surpasses previous-generation closed LLMs like GPT-3.5 on most benchmarks, despite being available under an open license.

Mixtral 8x7B and 8x22B

Developer: Mistral

Parameters: 46.7 billion and 141 billion

Access: Open

Mistral's Mixtral 8x7B and 8x22B models use a sparse mixture-of-experts design: at each layer, a router sends every token to just two of eight sub-networks (called experts). Because only a fraction of their parameters are active for any given token (about 12.9 billion for 8x7B and 39 billion for 8x22B), they can run faster or on less powerful hardware than their total size suggests, and they're still able to beat other models like Llama 2 and GPT-3.5 in some benchmarks. They're also released under an Apache 2.0 license.
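Mixtral's architecture is known as a sparse mixture of experts. The minimal sketch below shows top-2 routing under simplified assumptions: the eight "experts" are toy functions that just scale their input, and the router weights are made up, whereas in Mixtral each expert is a feed-forward block inside every layer of the network. Because the experts share the rest of the model, the name 8x7B doesn't mean 8 × 7 = 56 billion parameters; Mistral reports about 46.7 billion in total, of which roughly 12.9 billion are used per token.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, router_weights, experts, top_k=2):
    """Send x to the top_k highest-scoring experts and blend their outputs."""
    # The router scores every expert with a cheap dot product...
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # ...then normalizes the scores of just the chosen experts into mixing weights.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only the chosen experts run; the rest are skipped
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Hypothetical experts: expert k simply scales its input by k + 1.
experts = [lambda x, k=k: [(k + 1) * v for v in x] for k in range(8)]
router_weights = [[0.1 * k, 0.0] for k in range(8)]  # made-up router weights

out, chosen = moe_layer([1.0, 2.0], router_weights, experts)
print(chosen)  # [7, 6]
print(out)
```

Only two of the eight experts do any work for this input, which is where the speed and hardware savings come from: the model stores all the experts' parameters but computes with just a fraction of them per token.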

Mistral has also released a more direct GPT competitor called Mistral Large that's available through cloud computing platforms.

XGen-7B

Developer: Salesforce

Parameters: 7 billion

Access: Open

Salesforce's XGen-7B isn't an especially powerful or popular open model—it performs about as well as other open models with seven billion parameters. But I still think it's worth including because it highlights how many large tech companies have AI and machine learning departments that can just develop and launch their own LLMs.

Grok

Developer: xAI

Parameters: Unknown

Access: Chatbot and open

Grok, an AI model and chatbot trained on data from X (formerly Twitter), doesn't really warrant a place on this list on its own merits, as it's neither very popular nor particularly good. Still, I'm listing it here because it was developed by xAI, the AI company founded by Elon Musk. While it might not be making waves in the AI scene, it's still getting plenty of media coverage, so it's worth knowing it exists.

Why are there so many LLMs?

Until a year or two back, LLMs were limited to research labs and tech demos at AI conferences. Now, they're powering countless apps and chatbots, and there are hundreds of different models available that you can run yourself (if you have the computer skills). How did we get here?

Well, there are a few factors in play. Some of the big ones are:

With GPT-3 and ChatGPT, OpenAI demonstrated that AI research had reached the point where it could be used to build practical tools—so lots of other companies started doing the same.

LLMs take a lot of computing power to train, but it can be done in a matter of weeks or months.

There are lots of open source models that can be retrained or adapted into new models without the need to develop a whole new model.

There's a lot of money being thrown at AI companies, so there are big incentives for anyone with the skills and knowledge to develop any kind of LLM to do so.

What to expect from LLMs in the future

I think we're going to see a lot more LLMs in the near future, especially from major tech companies. Amazon, IBM, Intel, and NVIDIA all have LLMs under development, in testing, or available for customers to use. They're not as buzzy as the models I listed above, nor are regular people ever likely to use them directly, but I think it's reasonable to expect large enterprises to start deploying them widely, both internally and for things like customer support.

I also think we're going to see a lot more efficient LLMs tailored to run on smartphones and other lightweight devices. Google has already hinted at this with Gemini Nano, which runs some features on the Google Pixel 8 Pro. Developments like Mistral's Mixtral 8x22B demonstrate techniques that enable smaller LLMs to compete efficiently with larger ones.

The other big thing that's coming is large multimodal models or LMMs. These combine text generation with other modalities, like images and audio, so you can ask a chatbot what's going on in an image or have it respond with audio. GPT-4o and Google's Gemini models are two of the first LMMs that are likely to be widely deployed, but we're definitely going to see more.

Other than that, who can tell? Three years ago, I definitely didn't think we'd have powerful AIs like ChatGPT available for free. Maybe in a few years, we'll have artificial general intelligence (AGI).


This article was originally published in January 2024. The most recent update was in May 2024.