Ask an AI chatbot a question, or ask an AI tool to perform a task, and its response will hopefully be helpful. Sometimes, though, it'll be completely made up. When this happens, it's called a "hallucination," and it's one of the biggest problems with every kind of AI tool.

You've almost certainly seen a few headlines where an AI model has gone a little bit rogue, so let's look at what you need to know about AI hallucinations and some steps you can take to minimize them. (Though, really, that's something that needs to be done platform-side for the best results.)

What are AI hallucinations?

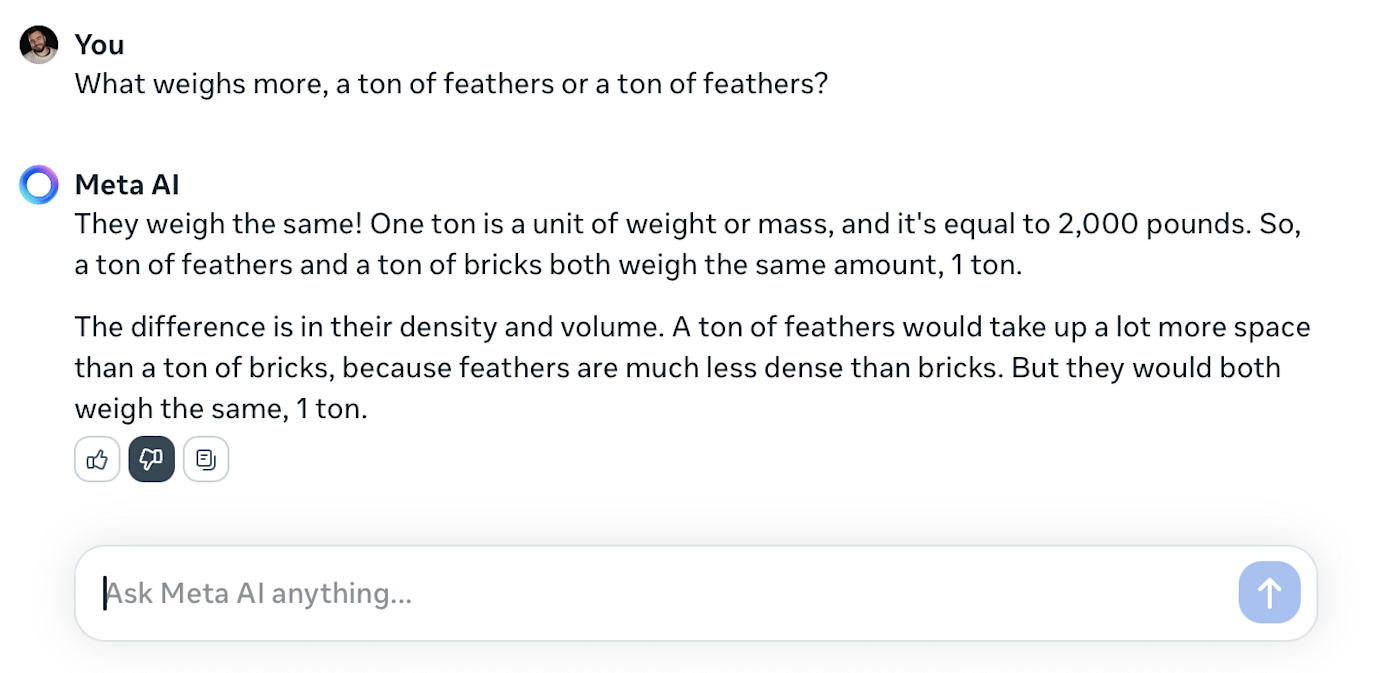

An AI hallucination is when an AI model generates incorrect or misleading information but presents it as if it were a fact. There's some debate about what counts as hallucination and whether it's even the appropriate word for what happens when an AI model fabricates information, but for our purposes, we'll consider it a hallucination any time an AI responds incorrectly to a prompt that it should be able to respond correctly to.

The problem is that the large language models (LLMs) and large multimodal models (LMMs) that underlie AI text-generating tools and chatbots like ChatGPT don't really know anything. They're designed to predict the string of text that most plausibly follows your prompt, whatever that prompt happens to be. If they don't know the actual answer, they can just as easily produce plausible-sounding nonsense that fits the bill.

Think of LLMs as really, really fancy autocomplete. They're just trying to predict the next few sentences and paragraphs. They don't have any internal concept of truth or factual accuracy, and they can't reliably reason or apply logic to their responses. If it's words you want, it's words you'll get.

This is fundamental to how LLMs work. The only reason ChatGPT knows that 1+1=2 is that it has seen that far more often in its training data than 1+1=3 or 1+1=4. (And even then, ChatGPT is mostly able to solve math problems because it recognizes them as math problems and hands them off to a calculator function.)
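To make the "fancy autocomplete" idea concrete, here's a deliberately tiny Python sketch. It's a bigram model (it only looks at the previous word), which is vastly simpler than a real LLM, and the corpus and function names are made up for illustration. It still shows the key behavior: the model picks whatever continuation it saw most often, and it answers just as confidently when the result is wrong.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word (hypothetical toy model)."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def complete(model: dict[str, Counter], prompt: str) -> Optional[str]:
    """Predict the next word using only the last word of the prompt."""
    last_word = prompt.split()[-1]
    if last_word not in model:
        return None  # Never seen this word, so there's nothing to predict
    # Pick the most frequent follower; there's no notion of "true" or "false" here
    return model[last_word].most_common(1)[0][0]


# A made-up "training set": "1 + 1 = 2" appears more often than "1 + 1 = 3"
corpus = ["1 + 1 = 2", "1 + 1 = 2", "1 + 1 = 3"]
model = train_bigrams(corpus)

print(complete(model, "1 + 1 ="))  # → 2 (correct, because it's the most common pattern)
print(complete(model, "2 + 2 ="))  # → 2 (confidently wrong: a tiny "hallucination")
```

The model never checks whether "2 + 2 = 2" is true; it only knows that "2" usually follows "=".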

So, it's best to think of AI hallucinations as an unavoidable byproduct of an LLM trying to respond to your prompt with an appropriate string of text.

What causes AI hallucinations?

AI hallucinations can occur for several reasons, including:

Insufficient, outdated, or low-quality training data. An AI model is only as good as the data it's trained on. If the AI tool doesn't understand your prompt or doesn't have sufficient information, it'll rely on the limited dataset it's been trained on to generate a response—even if it's inaccurate.

Bad data retrieval. Increasingly, AI tools can pull in additional data from external sources, but they can't really fact-check it. This is how Google's AI Overviews ended up recommending that people put glue on pizza.

Overfitting. When an AI model is trained on a limited dataset, it may memorize the inputs and their expected outputs. This leaves it unable to generalize effectively to new data, resulting in AI hallucinations.

Use of idioms or slang expressions. If a prompt contains an idiom or slang expression that the AI model hasn't been trained on, it may lead to nonsensical outputs.

Adversarial attacks. Prompts that are deliberately designed to confuse the AI can cause it to produce AI hallucinations.
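Overfitting is easiest to see in an extreme case: a "model" that simply memorizes its training examples. The Python sketch below is hypothetical (real models don't literally store a lookup table), but it shows the failure mode: a perfect score on the data it was trained on, and a confident guess for anything new.

```python
from collections import Counter

# Tiny, made-up training set: numbers labeled "even" or "odd"
training_data = [(2, "even"), (4, "even"), (6, "even"), (3, "odd")]


def train_memorizer(examples):
    """'Learn' by memorizing every input/output pair exactly."""
    lookup = dict(examples)
    # For unseen inputs, fall back to the most common label in the training set
    fallback = Counter(label for _, label in examples).most_common(1)[0][0]
    return lookup, fallback


def predict(model, x):
    """Return the memorized label, or the fallback guess for unseen inputs."""
    lookup, fallback = model
    return lookup.get(x, fallback)


def accuracy(model, examples):
    """Fraction of examples the model labels correctly."""
    correct = sum(predict(model, x) == label for x, label in examples)
    return correct / len(examples)


model = train_memorizer(training_data)
new_data = [(7, "odd"), (8, "even"), (9, "odd")]

print(accuracy(model, training_data))  # → 1.0 on the data it memorized
print(accuracy(model, new_data))  # → 0.3333333333333333: it never learned the even/odd rule
```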

But really, hallucinations are a side effect of how modern AI systems are designed and trained. Even with the best training data and the clearest possible instructions, there's still a non-zero chance that the AI model will respond with a hallucination. Hallucinations also affect every kind of generative AI model, whether they're creating images or text.

We're focusing on text here because it's the most pernicious and widespread kind of hallucination. When DALL·E 3 doesn't generate the right image, you can probably see it straight away. When ChatGPT gets something wrong, it might not be as easy to spot.

AI hallucination examples

Hallucination is a pretty broad problem with AI. It can range from simple errors to dramatic failures in reasoning. Here are some of the kinds of things that you're likely to find AIs hallucinating (or at least the kinds of things referred to as hallucinations):

Completely made-up facts, references, and other details. For example, ChatGPT regularly suggests I've written articles for publications that I haven't, and multiple lawyers have gotten into trouble for filing paperwork with made-up case law.

Repeating errors, satire, or lies from other sources as fact. This is where the glue-on-pizza advice and the assertion that Barack Obama is Muslim came from.

Failing to provide the full context or all the information you need. For example, when asked which mushrooms are safe to eat, AI tools rarely list poisonous mushrooms as edible, but they often give too little detail about how to properly identify any particular one. If you just follow the AI's advice, you may mistakenly eat a poisonous mushroom.

Misinterpreting your original prompt and then responding as though that misreading were correct. I've been trying to get ChatGPT to solve a Sudoku for me for months, and it always misreads the starting puzzle and then fails to solve it as a result.

Why are AI hallucinations a problem?

AI hallucinations are part of a growing list of ethical concerns about AI. Aside from misleading people with factually inaccurate information and eroding user trust, hallucinations can perpetuate biases or cause other harmful consequences if taken at face value.

For companies, they can also cause costly problems. An Air Canada support chatbot hallucinated a policy, and the airline ended up losing a court case over it. While the penalties involved were only a few hundred dollars, the legal fees and reputational damage presumably cost significantly more.

All this to say that, despite how far it's come, AI still has a long way to go before it can be considered a reliable replacement for humans in a lot of tasks. Even simple things like content research or writing social media posts need to be carefully overseen.

Can you prevent AI hallucinations?

AI hallucinations are impossible to prevent entirely. They're an unfortunate side effect of the way modern AI models work. That said, there are things that can be done to minimize them as much as possible.

The most effective ways to minimize hallucinations are on the backend. I'll share some tips for getting the most accurate information out of chatbots and other AI tools, but really, the reason ChatGPT doesn't make up random French kings very frequently anymore is that OpenAI has spent a lot of time and resources adding guardrails to stop it from hallucinating.

Retrieval augmented generation (RAG)

One of the most powerful tools available right now is retrieval augmented generation (RAG). Essentially, the AI model is given access to a database containing the accurate information it needs to do its job. For example, RAG is being used to build AI tools that can cite actual case law (rather than making it up) or answer customer queries using information from your help docs. It's how tools like Jasper can accurately use information about you or your company, and how custom GPTs can be given knowledge references.

While RAG can't fully prevent hallucination—and employing it properly has its own complexities—it's one of the most widely used techniques at the moment.
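Here's a minimal Python sketch of the retrieval half of RAG, assuming a tiny in-memory list of help-doc snippets and simple keyword overlap for scoring. Production systems typically use embeddings and a vector database instead, and the documents, function names, and prompt wording here are all hypothetical. The resulting prompt would then be sent to whichever LLM you're using; that call is left out rather than guessing at a specific API.

```python
import re

# Hypothetical help-doc snippets standing in for a company knowledge base
HELP_DOCS = [
    "Refunds are available within 30 days of purchase.",
    "Our support team is available Monday to Friday.",
    "Bereavement fares must be requested before travel.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Ground the model by pasting the retrieved text into the prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs, k))
    return (
        "Answer using only the context below. "
        "If the answer isn't in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


print(build_prompt("Within how many days can I get a refund after purchase?", HELP_DOCS))
```

The instruction to answer only from the supplied context is what gives the model a way out other than inventing a policy; it reduces hallucinations but, as noted above, doesn't eliminate them.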

Preventing AI hallucinations with prompt engineering

While it's important that developers build AI tools in ways that minimize hallucinations, there are still ways you can make AI models more accurate when you interact with them, based on how you prompt the AI.

Don't use foundation models to do things they aren't trained to do. ChatGPT is a general-purpose chatbot trained on a wide range of content. It's not designed for specific uses like citing case law or conducting a scientific literature review. While it will often give you an answer, it's likely to be a pretty bad answer. Instead, find an AI tool designed for the task and use it.

Provide information, references, data, and anything else you can. Relatedly, whatever kind of AI tool you're using, provide it with as much context as you can. With chatbots like ChatGPT and Claude, you can upload documents and other files for the AI to use; with other tools, you can create an entire RAG database for it to pull from.

Fact-check important outputs. While most AI tools will work well enough for non-critical tasks, if you're asking Meta AI if a certain mushroom is safe to eat, double-check the results on a mycology website.

Use custom instructions and controls. Depending on the AI tool you're using, you may be able to set custom instructions or adjust controls like the temperature to change how it responds (a lower temperature generally makes responses more predictable). Even if there are no set controls, you can add something like "Be helpful!" to your prompt to steer how the AI responds.
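Temperature is easier to reason about with numbers. In the standard approach, the model assigns a score (a logit) to every possible next token, and those scores are divided by the temperature before being turned into probabilities with a softmax. The Python sketch below uses three made-up candidate tokens and scores; exact details vary between providers, so treat it as an illustration of the general idea.

```python
import math


def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {token: score / temperature for token, score in logits.items()}
    # Subtract the max before exponentiating for numerical stability
    max_score = max(scaled.values())
    exps = {token: math.exp(s - max_score) for token, s in scaled.items()}
    total = sum(exps.values())
    return {token: e / total for token, e in exps.items()}


# Made-up scores for the next token after "1 + 1 ="
logits = {"2": 3.0, "3": 1.0, "4": 0.5}

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {token: round(p, 3) for token, p in probs.items()})
```

At a low temperature nearly all the probability lands on the top-scoring token, so output is more predictable; higher temperatures spread probability to less likely tokens, which makes output more varied and, for factual questions, more likely to stray.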

Ask the AI to double-check its results. Particularly for logic or multimodal tasks, if you're unsure if the AI has things right, you can ask it to double-check or confirm its results.

Employ chain-of-thought, least-to-most, and few-shot prompts. Some ways of prompting LLMs are more effective than others. If you're asking an AI to work through a logic puzzle, ask it to explain its reasoning at every step or break the problem down into its constituent pieces and solve them one at a time. If you can, give any AI model a few examples of what you want it to do. All these prompt engineering techniques have been shown to get more accurate results from LLMs.
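As a rough illustration, here's a Python helper that assembles a few-shot, chain-of-thought prompt as plain text. The example questions, the worked reasoning, and the `build_few_shot_prompt` name are all made up; the point is the structure: a few worked examples that show their reasoning, followed by the new question in the same format.

```python
# Hypothetical worked examples: each shows its reasoning before the answer
EXAMPLES = [
    {
        "question": "A box holds 12 eggs. How many eggs are in 3 boxes?",
        "reasoning": "Each box has 12 eggs. 3 boxes x 12 eggs = 36 eggs.",
        "answer": "36",
    },
    {
        "question": "Sam has 20 dollars and spends 7. How much is left?",
        "reasoning": "Start with 20. Spending 7 leaves 20 - 7 = 13.",
        "answer": "13",
    },
]


def build_few_shot_prompt(question: str, examples: list[dict[str, str]]) -> str:
    """Combine worked examples and a new question into one prompt."""
    parts = ["Solve each problem. Explain your reasoning step by step, then give the answer."]
    for ex in examples:
        parts.append(
            f"Q: {ex['question']}\n"
            f"Reasoning: {ex['reasoning']}\n"
            f"A: {ex['answer']}"
        )
    # End with the new question and an open "Reasoning:" line for the model to continue
    parts.append(f"Q: {question}\nReasoning:")
    return "\n\n".join(parts)


print(build_few_shot_prompt("A train travels 60 km per hour for 4 hours. How far does it go?", EXAMPLES))
```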

Give clear, single-step prompts. While techniques like chain-of-thought prompting can make LLMs more effective at working through complex problems, you'll often have better results if you just give direct prompts that only require one logical operation. That way, there's less opportunity for the AI to hallucinate or go wrong.

Limit the AI's possible outcomes. Similarly, if you give the AI an either-or choice, a few examples of how you want it to respond, or ask it to format its results in a certain way, you're likely to get better outputs with fewer hallucinations.
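Limiting outcomes also makes responses easy to check automatically. The Python sketch below assumes you've asked the model to reply with exactly one word from a fixed set (say, "yes" or "no"); the `parse_choice` helper is hypothetical. Anything outside the allowed set is flagged for a human instead of being trusted.

```python
from typing import Optional


def parse_choice(reply: str, allowed: set[str]) -> Optional[str]:
    """Return the normalized reply if it's one of the allowed options, else None."""
    # Ignore case, surrounding whitespace, and trailing punctuation like "Yes."
    normalized = reply.strip().strip(".!").lower()
    return normalized if normalized in allowed else None


ALLOWED = {"yes", "no"}

for reply in ["Yes.", "  no ", "Probably yes, but it depends on the species."]:
    choice = parse_choice(reply, ALLOWED)
    if choice is None:
        print(f"Flag for human review: {reply!r}")
    else:
        print(f"Accepted: {choice}")
```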

Finally: expect hallucinations. No matter how careful you are, expect any AI tool you use to occasionally just get weird. If you expect the occasional hallucination or wild response, you can catch them before your AI chatbot gets your company taken to court.

Verify, verify, verify

To put it simply, AI is a bit overzealous with its storytelling. While AI research companies like OpenAI are keenly aware of the problems with hallucinations and are developing new models trained with even more human feedback, AI is still very likely to fall into a comedy of errors.

So whether you're using AI to write code, problem solve, or carry out research, refining your prompts using the above techniques can help it do its job better—but you'll still need to verify each and every one of its outputs.


This article was originally published in April 2023 by Elena Alston and has also had contributions from Jessica Lau. The most recent update was in July 2024.