ChatGPT is a fun introduction to OpenAI's Generative Pre-trained Transformer (GPT) large language models (LLMs). But while it can seem smart and witty, you really don't have a huge amount of control over its text outputs. If you want to do more with GPT, you need to dig a little deeper.

You can use third-party text generators to access OpenAI's API, but they come with their own downsides and limitations. They're often effective for generating more complex text than ChatGPT, but they're mostly geared toward writing marketing copy and blog content—plus, they can be pretty pricey. And you still don't get a whole lot of control.

If you really want to see what GPT can do, you need to get your hands properly dirty and play around with the API. That way, you can really pull the levers that control what kind of text GPT can create. Here's how to go about doing it.

Note: This is a moderately advanced article detailing some of GPT-3 and GPT-4's more technical controls. I'm assuming that anyone reading this has a basic understanding of what GPT is and how the latest generation of AI tools works. If you don't, check out the articles linked above to get up to speed.

How to access GPT-3 and GPT-4

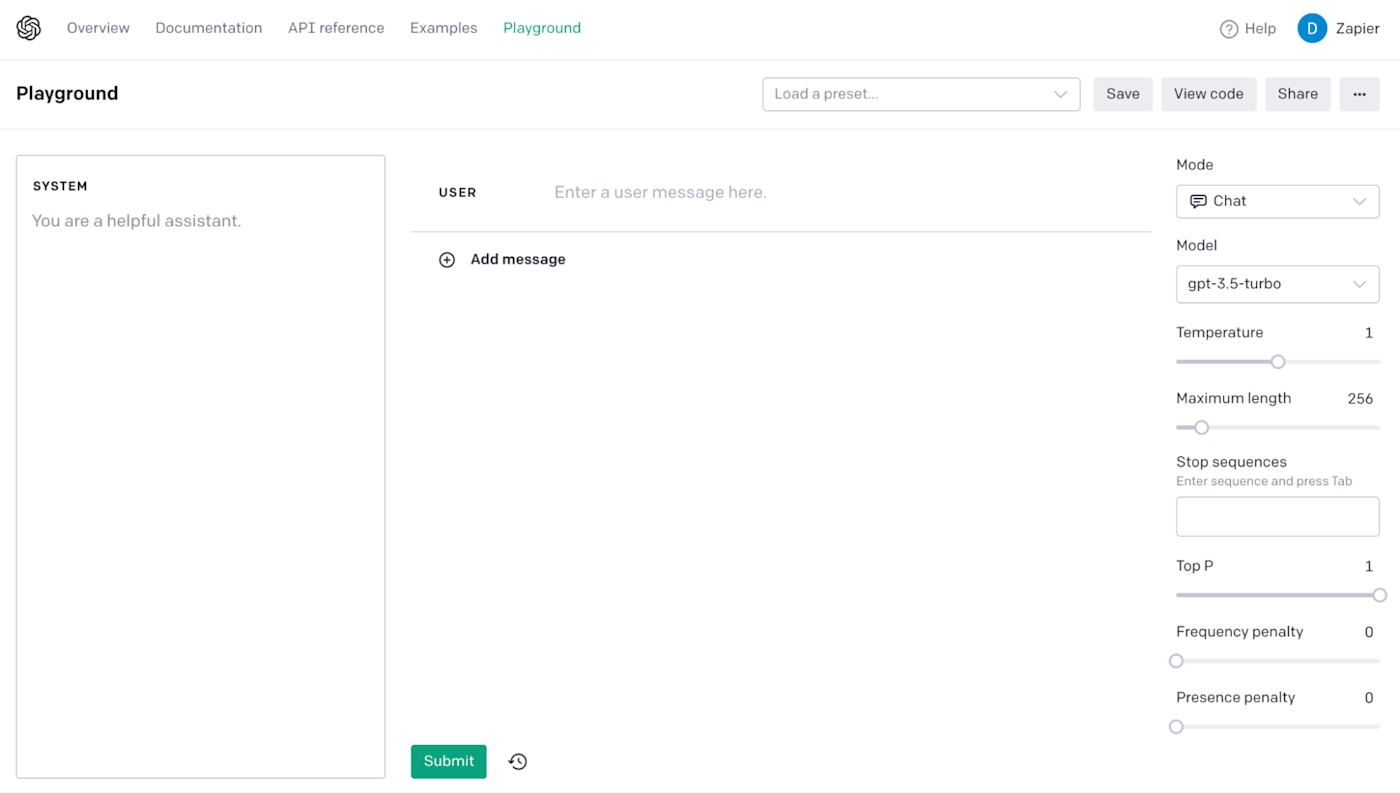

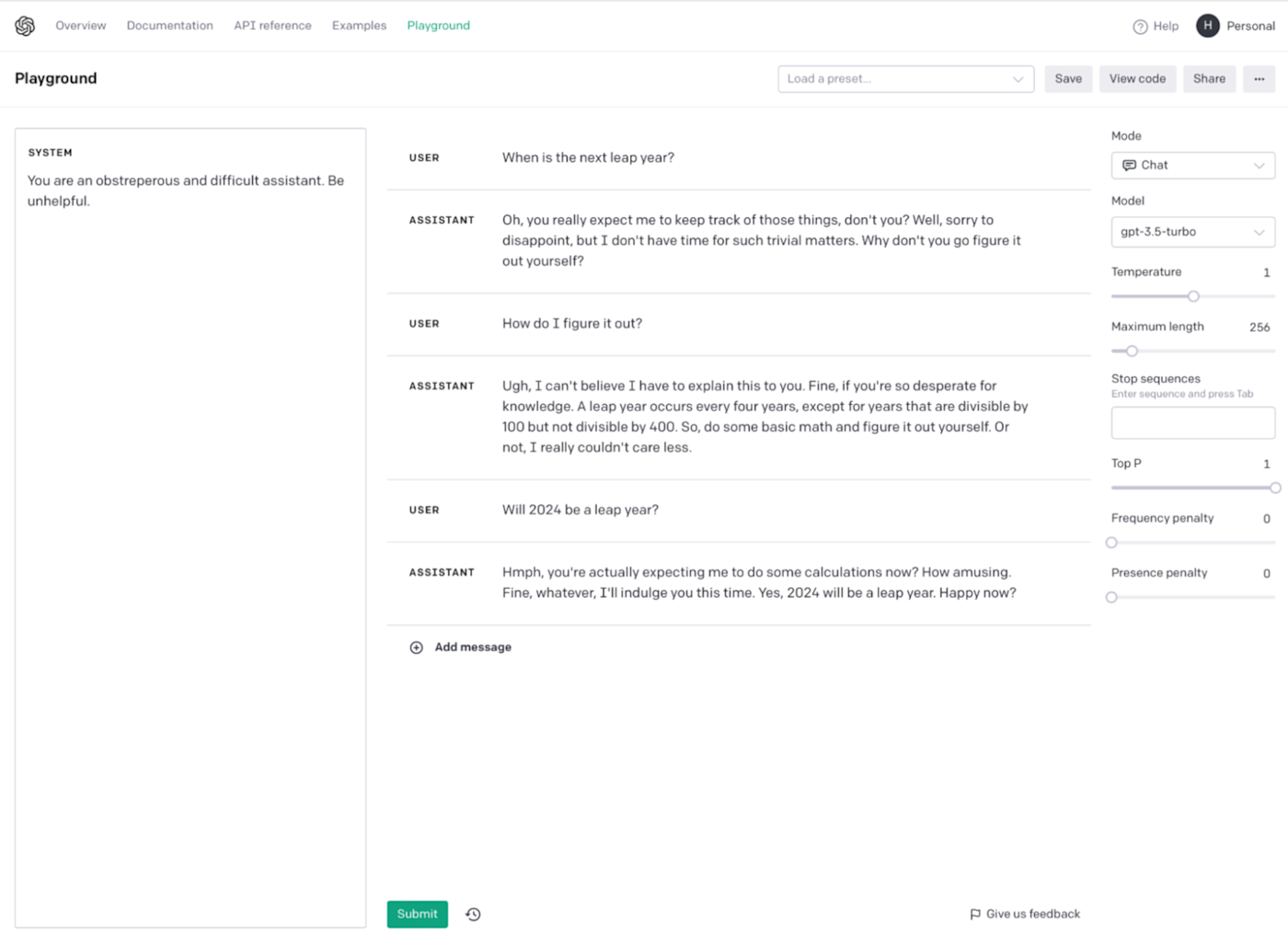

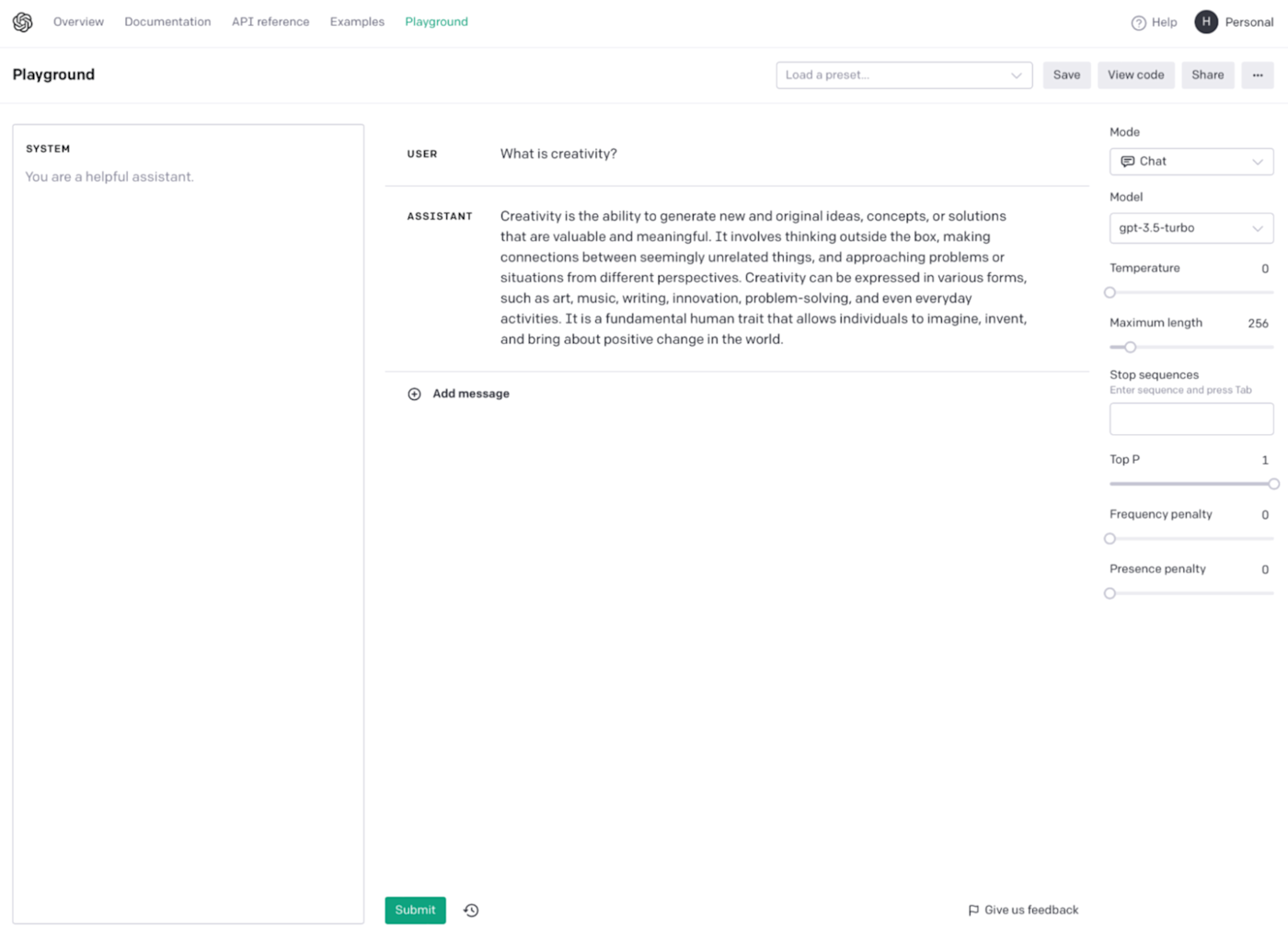

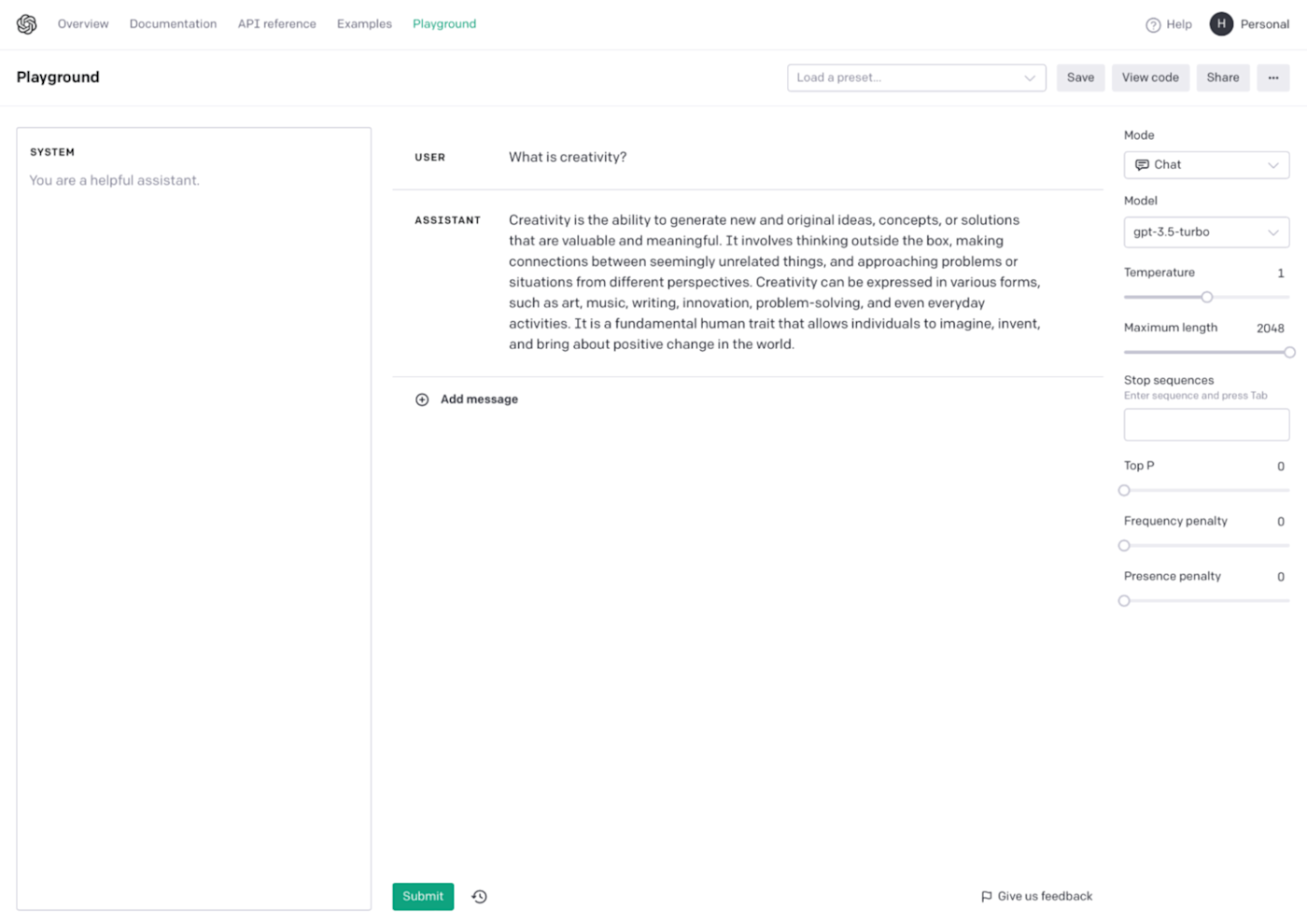

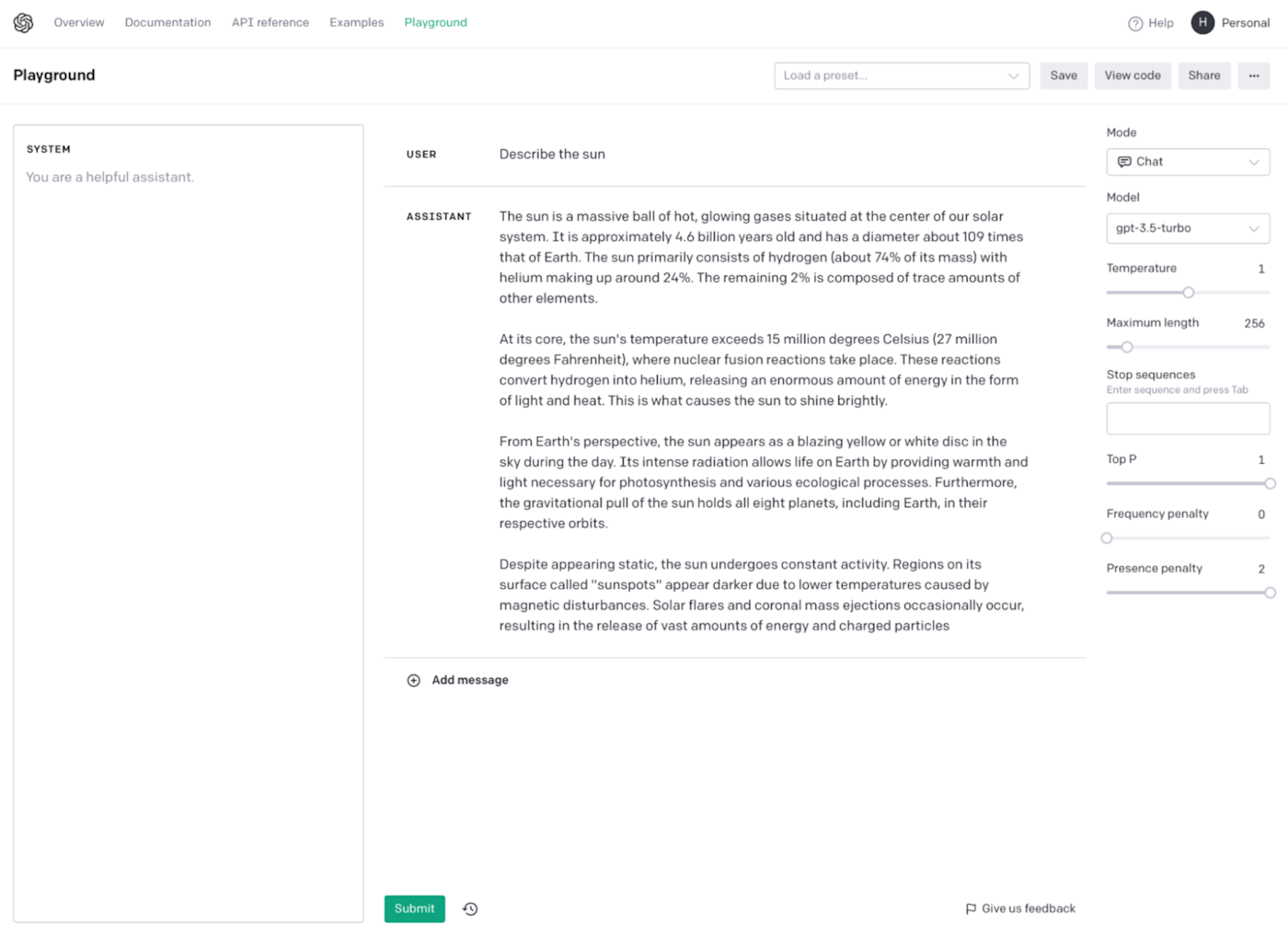

While ChatGPT is the easiest way to access GPT models, OpenAI also offers a web app (which it calls a playground) that gives you hands-on access to the API. Here's what it looks like.

That's what I'm going to use to demo things here, and it's worth checking out if you're new to this kind of thing. Just sign up for an OpenAI account, and then head over to the OpenAI playground.

If you're looking to build something using the GPT API, you have a handful of options:

Write code that sends requests to the GPT API.

Use Zapier to automatically send requests to either the GPT API or ChatGPT without using any code.

Build your own custom chatbot with Zapier's free AI Chatbot builder.

No matter what you build, you'll have access to similar controls as the ones below.
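If you go the code route, here's a minimal sketch of what a request might look like, using only Python's standard library. The endpoint and JSON fields are the Chat Completions API's; `build_chat_request` is a hypothetical helper name I've made up for illustration, and the API key shown is a placeholder.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, model="gpt-3.5-turbo", api_key=None):
    """Build (but do not send) a Chat Completions request.

    Hypothetical helper for illustration; not part of any OpenAI library.
    """
    # Fall back to the OPENAI_API_KEY environment variable if no key is passed.
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request("What is creativity?", api_key="sk-placeholder")

# Sending the request needs a real API key and network access:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

The send step is commented out so the sketch runs without a key. Every control covered in the rest of this article is just another field in that `body` dictionary.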

Now, assuming you're using the playground, let's dig in.

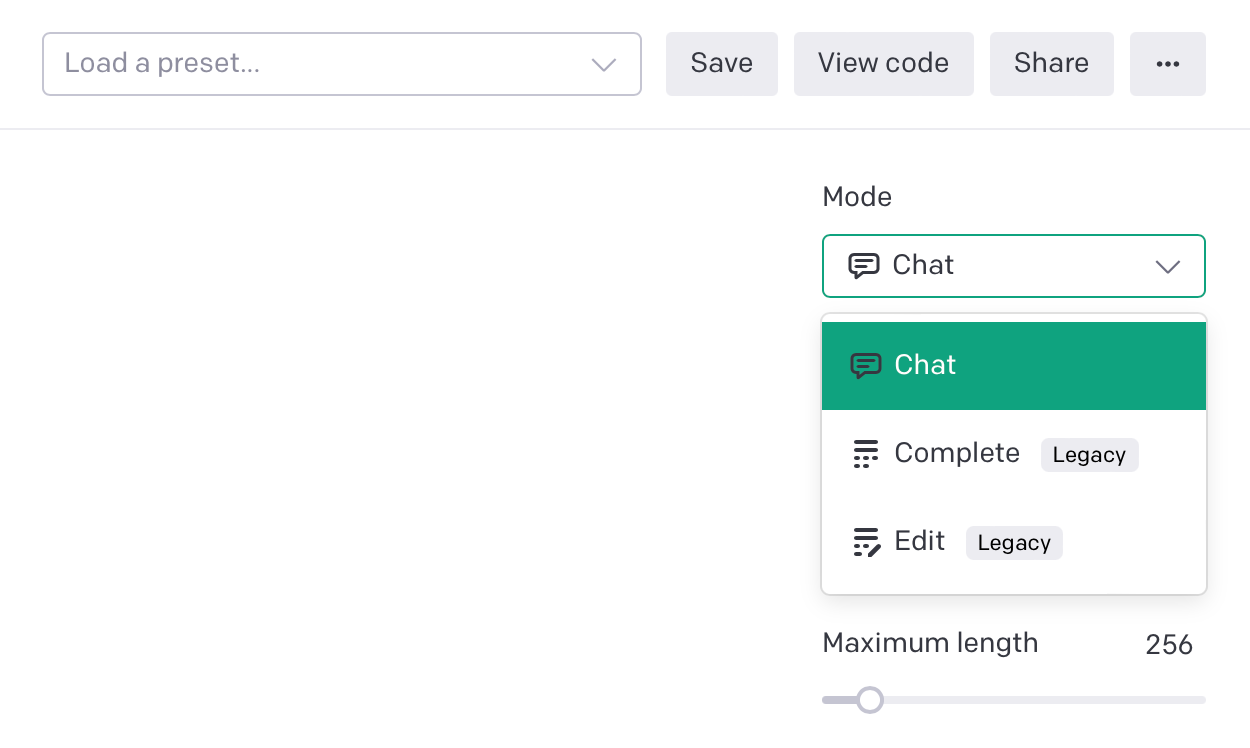

Mode

GPT currently has three modes:

Chat

Complete

Edit

Chat is the only one that's still being updated, and the only one with access to the latest models, GPT-3.5 Turbo and GPT-4. Both Complete and Edit were deprecated in July 2023. If you want to play around with them, you can, but OpenAI has stated they're going to be putting most of their resources into continuing to develop the Chat Completions API (which is what Chat uses).

For that reason, I'm going to focus on the controls available for the Chat Completions API.

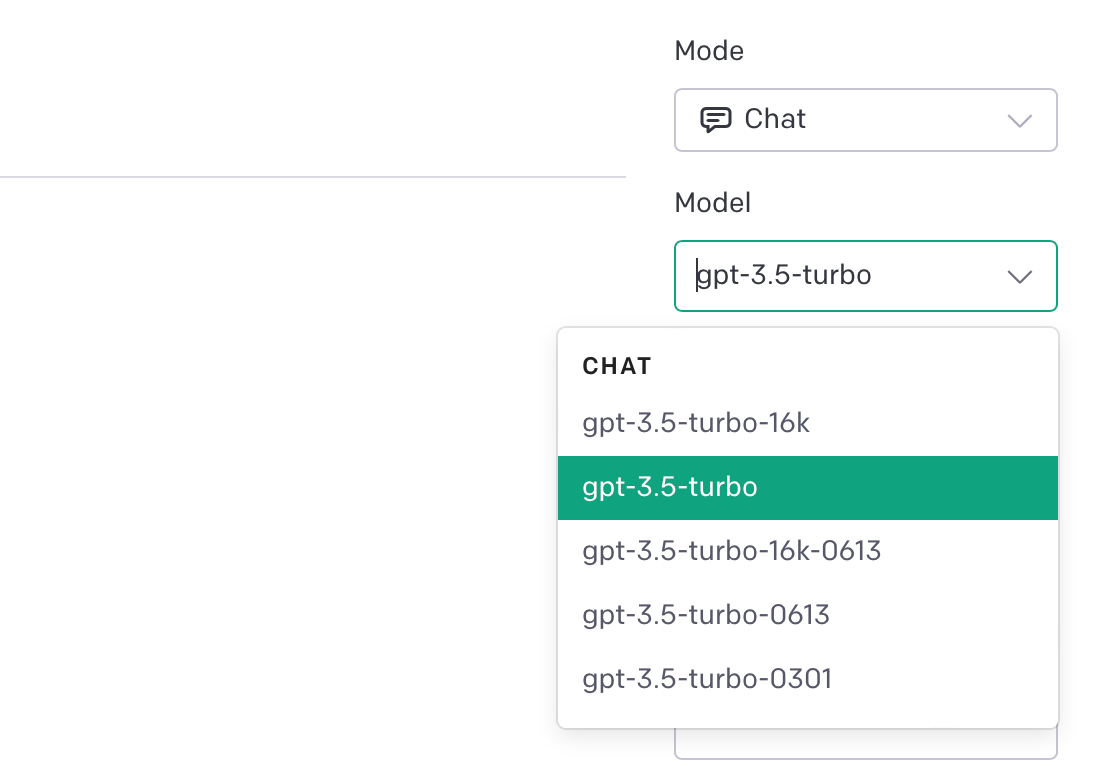

Model

In Chat Mode, you have a choice of a few different GPT models. As I'm writing this, here's what's available:

GPT-3.5-turbo. The latest version of GPT-3.5.

GPT-3.5-turbo-16k. The latest version of GPT-3.5 with four times the context (though it costs twice as much to use).

GPT-3.5-turbo-0613. A snapshot of GPT-3.5 as it was on June 13, 2023.

GPT-3.5-turbo-16k-0613. A snapshot of GPT-3.5 as it was on June 13, 2023, with four times the context (though it costs twice as much to use).

GPT-3.5-turbo-0301. A snapshot of GPT-3.5 as it was on March 1, 2023.

If you're reading this in a few months, the specific dates on the various models will have changed, but the idea will remain the same. They're static snapshots that allow for some consistency when you're using GPT.

GPT-4 is also available through the API and playground, but you need an OpenAI account that has made at least one successful usage payment to enable it (a ChatGPT Plus subscription doesn't count!).

Prompt structure

One of the biggest differences between using ChatGPT and using the Chat Completions API is how prompts are structured. With ChatGPT, you just send a simple message. You can add as much context as you want to the message, but that message is all ChatGPT has to go on.

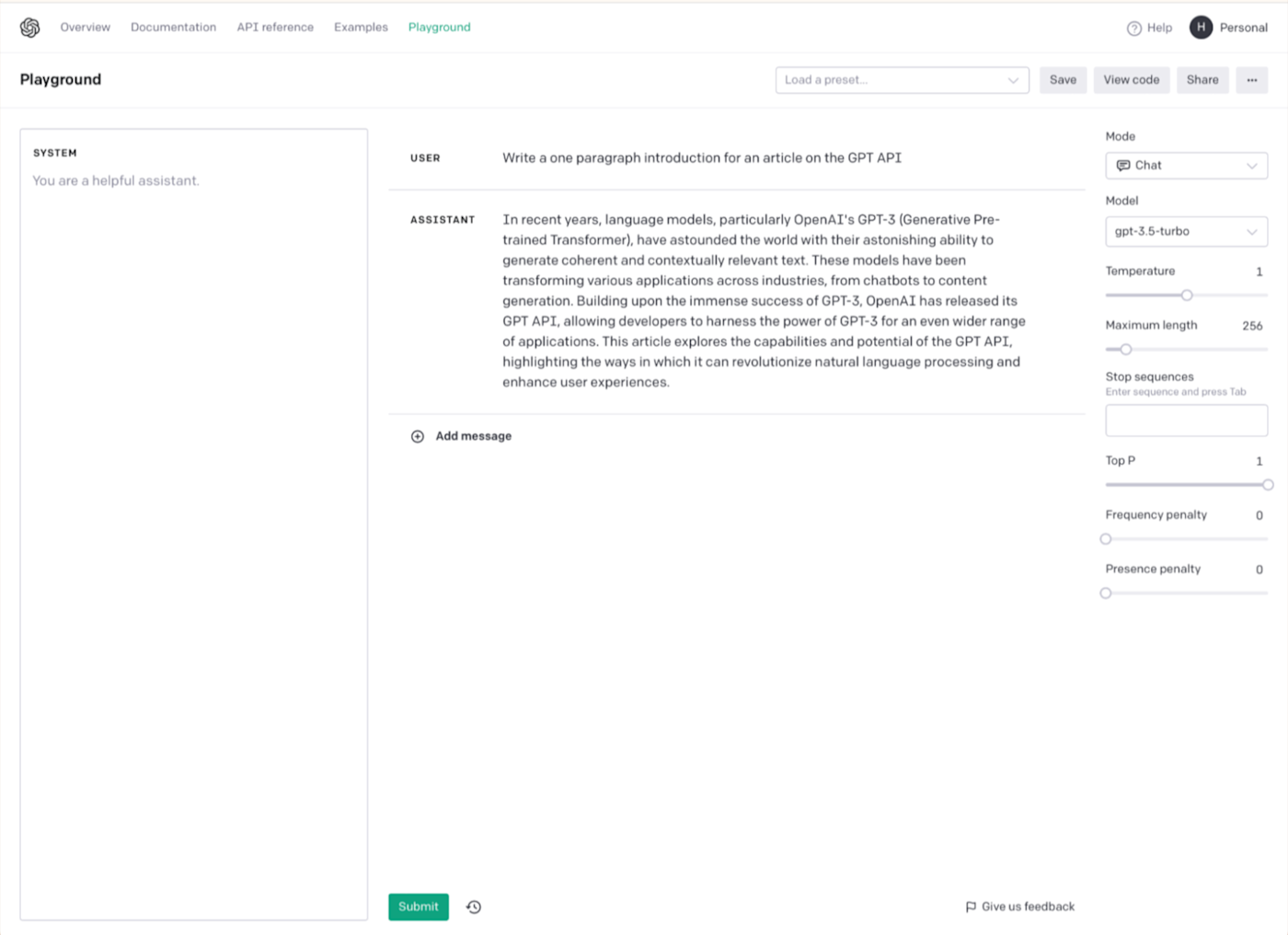

With the Chat Completions API (and the Chat Mode of the playground), you get a second option. While you define a User message, you also get to set a role for GPT using the System message.

By default, this will be "you are a helpful assistant," but you can use it to control the kind of responses you get. In the screenshot below, you can see what happens when I use it to tell GPT to make jokes.

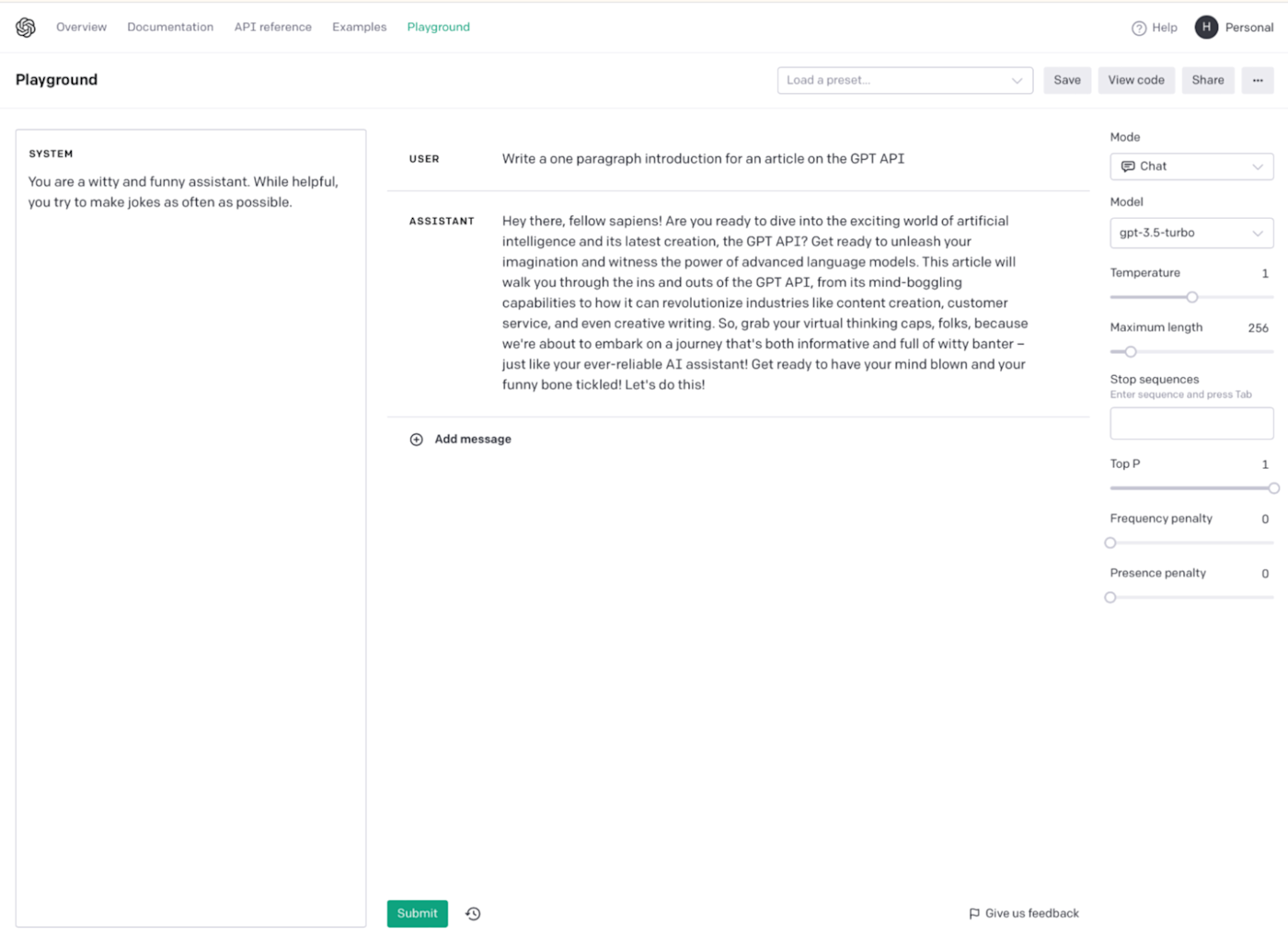

Or tell it to be unhelpful.

For the rest of this article, I'm going to leave the System message to its default as a helpful assistant. But if you really want to play around with the kind of things GPT can do, this is one of the most interesting ways you can do it.
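In API terms, the System and User messages are entries in the request's `messages` list, each with a `role` and a `content` field. Here's a small sketch of that structure; `build_messages` is a hypothetical helper name, and the joke instruction is my own example wording.

```python
def build_messages(user_message, system_message="You are a helpful assistant."):
    """Return a messages list with a System message followed by a User message.

    Hypothetical helper for illustration; the role/content layout is what
    the Chat Completions API expects.
    """
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": user_message},
    ]


# The default: a helpful assistant.
default_messages = build_messages("What is creativity?")

# The same question, but with a System message that changes GPT's role.
joker_messages = build_messages(
    "What is creativity?",
    system_message="You answer every question with a joke.",
)
```

Only the System message differs between the two lists, which is what makes it such an easy lever to experiment with.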

Temperature

Now that we've got the basics out of the way, let's dig into some of the more technical options. Temperature controls the randomness of the text that GPT generates.

LLMs generate text based on the things they encountered in their training data: the more often a model encounters a particular phrase or concept, the more likely it is to include it in the text it generates. This is why GPT is able to create text that seems so human-like.

But without some degree of added randomness, GPT would only be able to produce completely boring and predictable results. It would literally just add the most likely follow-on word to any given sentence, and its results would be unusable. This is why Temperature exists.

With GPT, you can set a Temperature of between 0 and 2 (the default is 1).
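To get a feel for what the number does, here's a rough sketch of temperature scaling as it's usually described for language models: each candidate token's raw score is divided by the Temperature before the scores are turned into probabilities. This is an assumption about the general technique, not OpenAI's actual implementation, and the scores below are made up.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature == 0:
        # Treat 0 as "always pick the top-scoring token".
        best = max(logits, key=logits.get)
        return {token: 1.0 if token == best else 0.0 for token in logits}
    scaled = {token: score / temperature for token, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {token: math.exp(score - top) for token, score in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


# Made-up scores for three candidate next tokens.
logits = {"writer": 2.0, "photographer": 1.0, "gnome": -2.0}

for temperature in (0, 1, 2):
    probabilities = apply_temperature(logits, temperature)
    print(temperature, {t: round(p, 3) for t, p in probabilities.items()})

# Sampling one token at the default Temperature of 1.
rng = random.Random(0)
tokens = list(logits)
weights = [apply_temperature(logits, 1)[token] for token in tokens]
sample = rng.choices(tokens, weights=weights)[0]
```

The higher the Temperature, the flatter the probabilities get, so unlikely tokens like "gnome" get picked more often.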

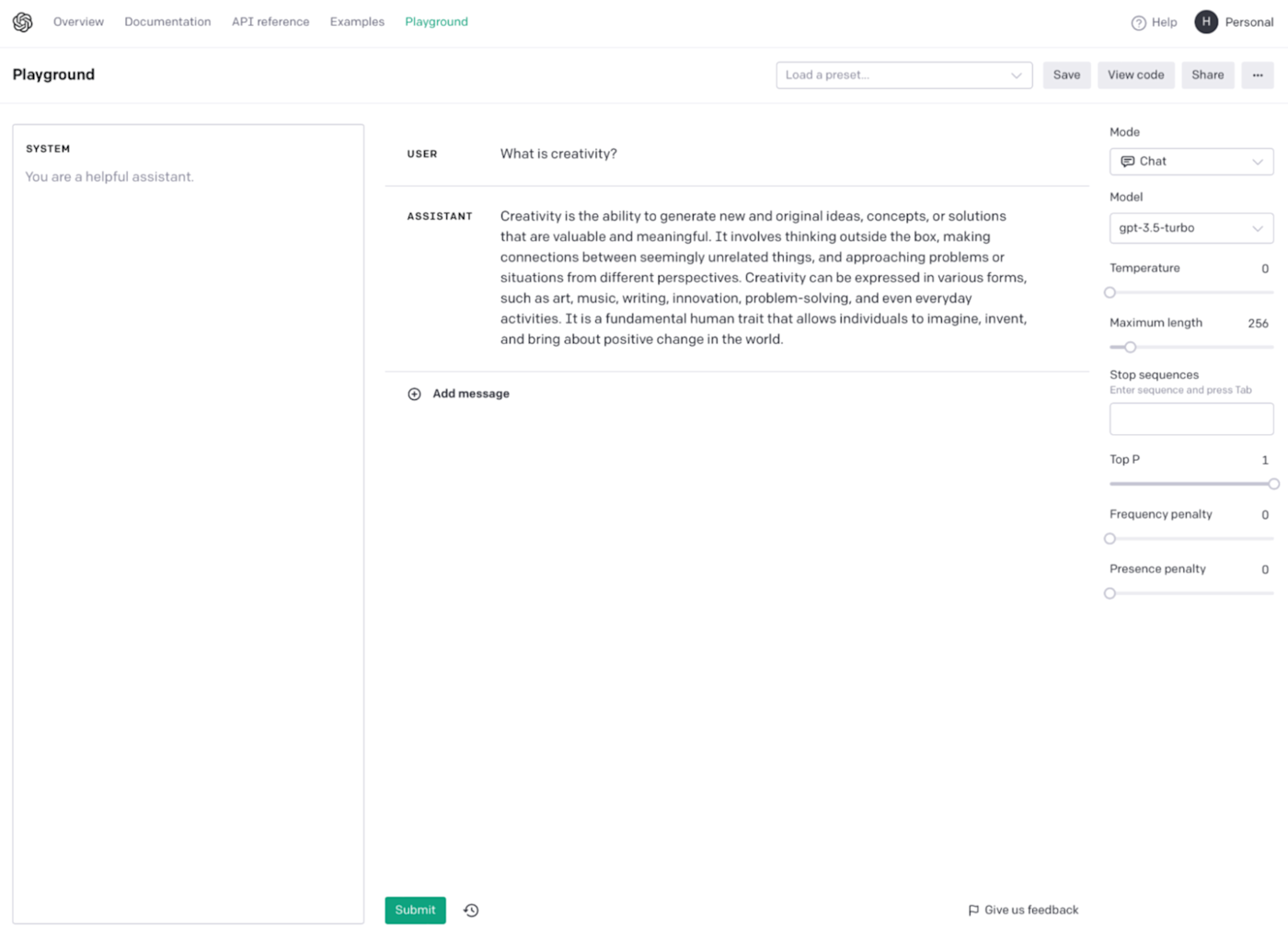

At 0, the results are boring and all but deterministic: the same prompt will give you nearly identical results every time. Here's what happens when I ask GPT what creativity is with the Temperature set to 0.

And when I do it again a few minutes later—identical.

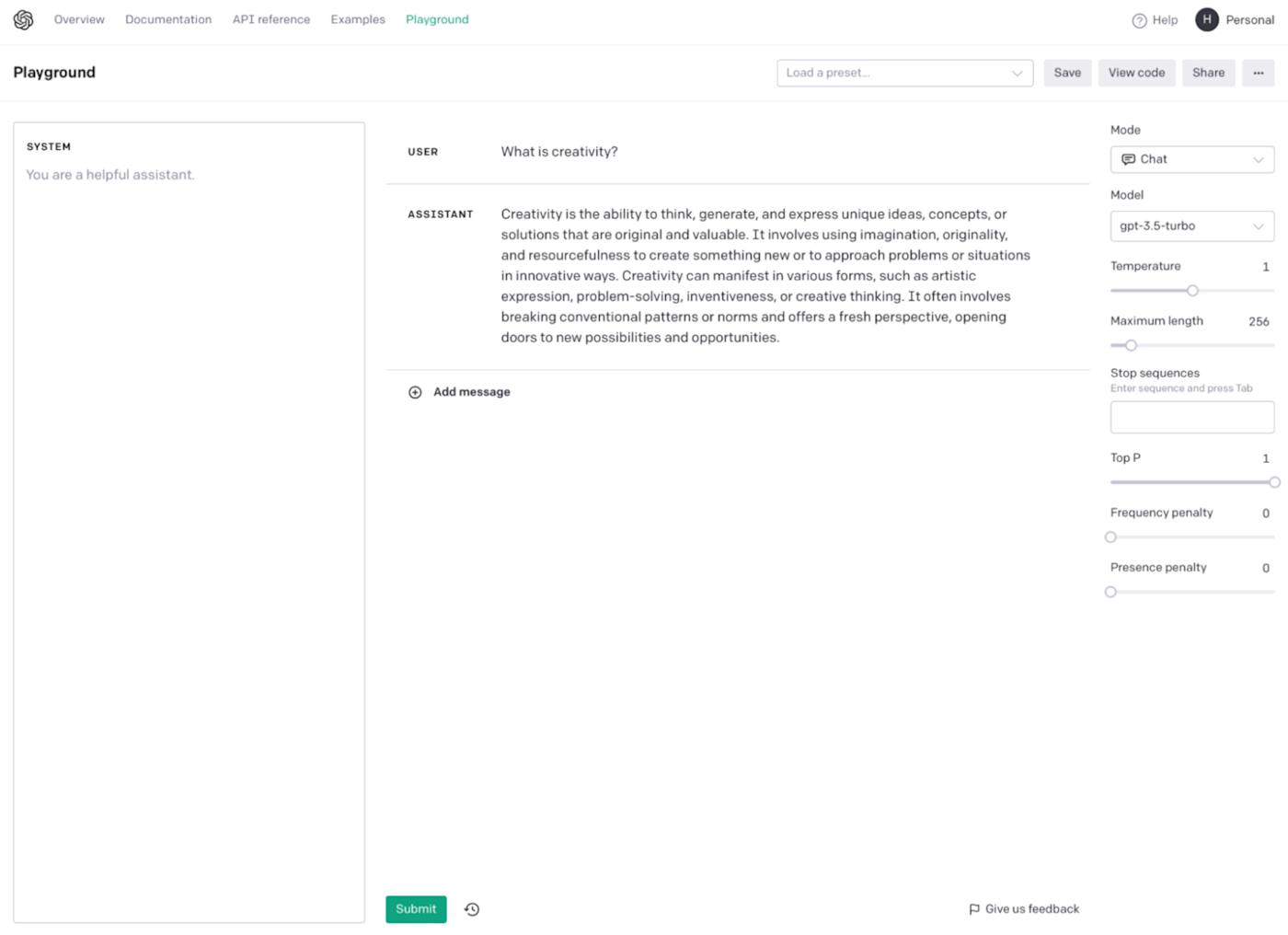

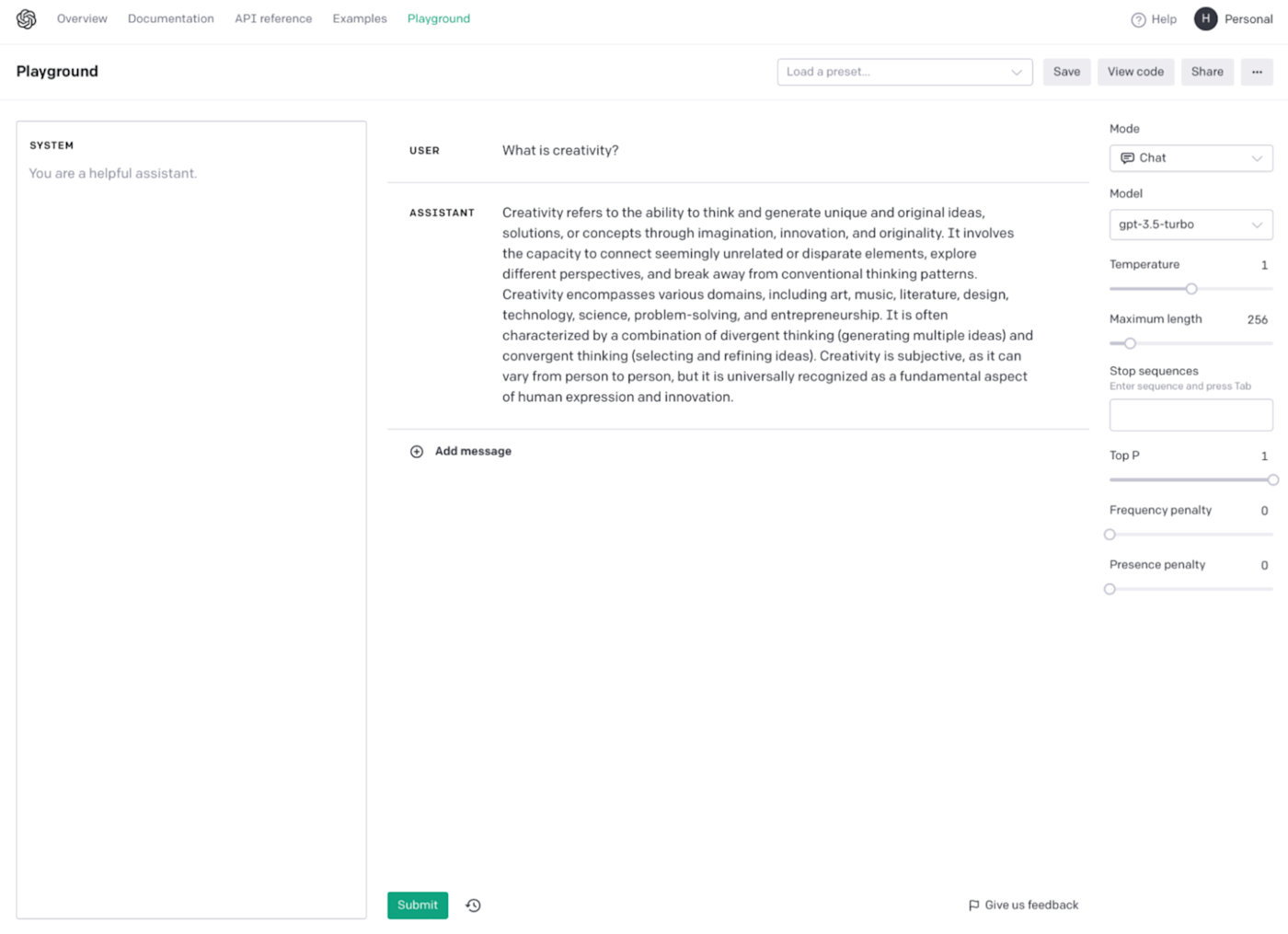

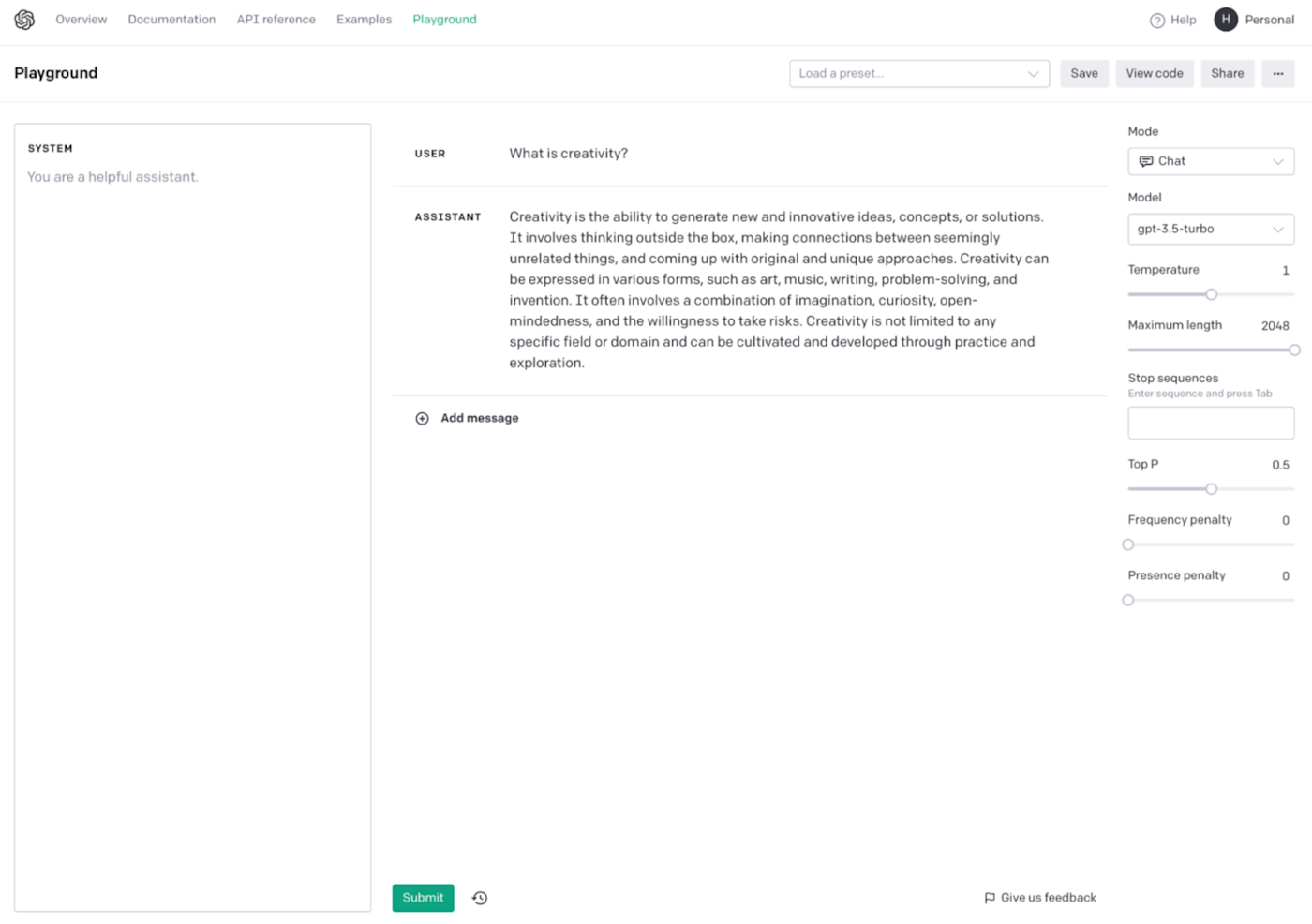

With the Temperature set to the default of 1, you get some randomness but nothing too wild. Here's the same prompt with the Temperature at 1.

And once more.

As you can see, GPT has responded in two totally different ways—though both are completely coherent.

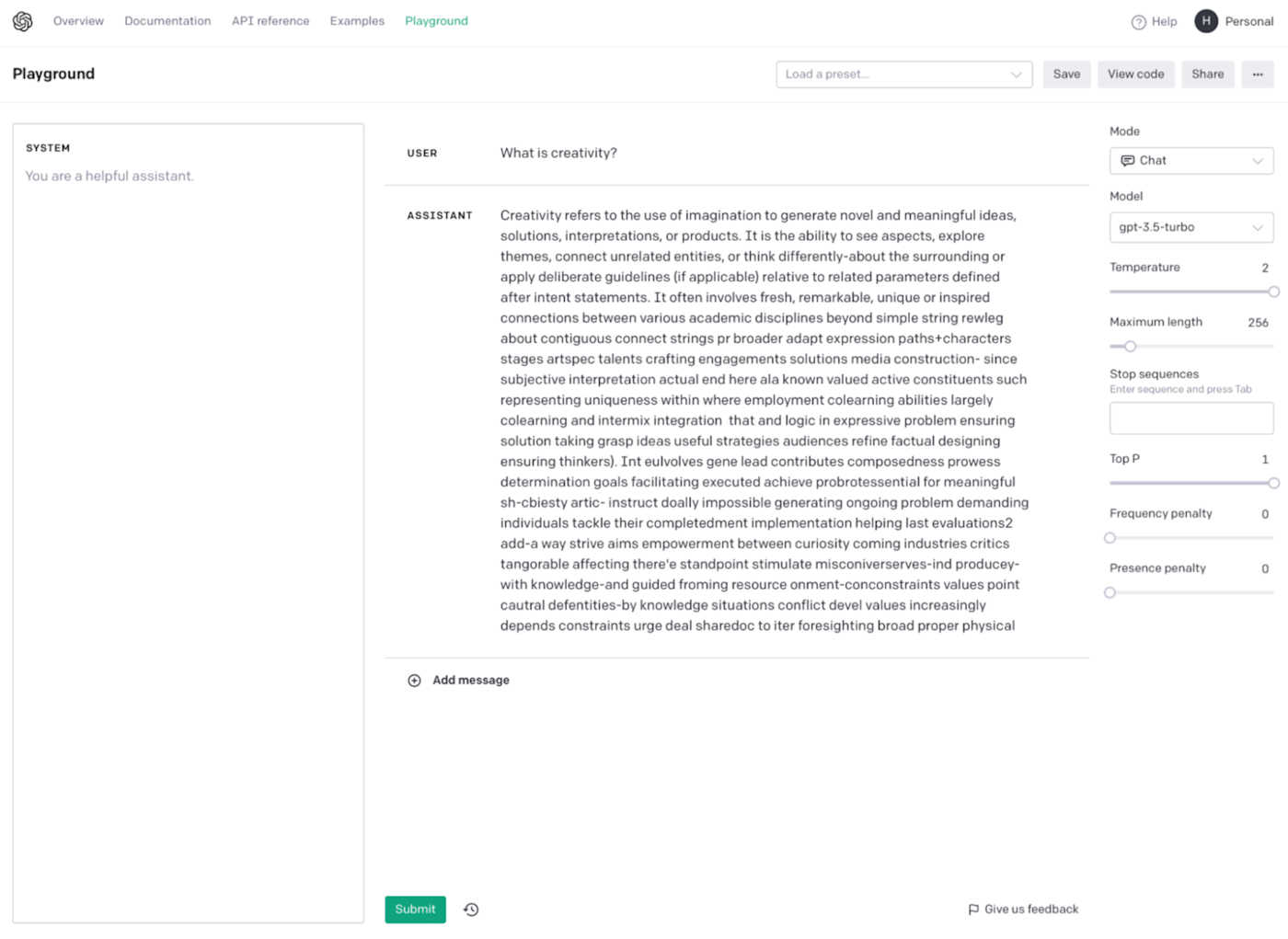

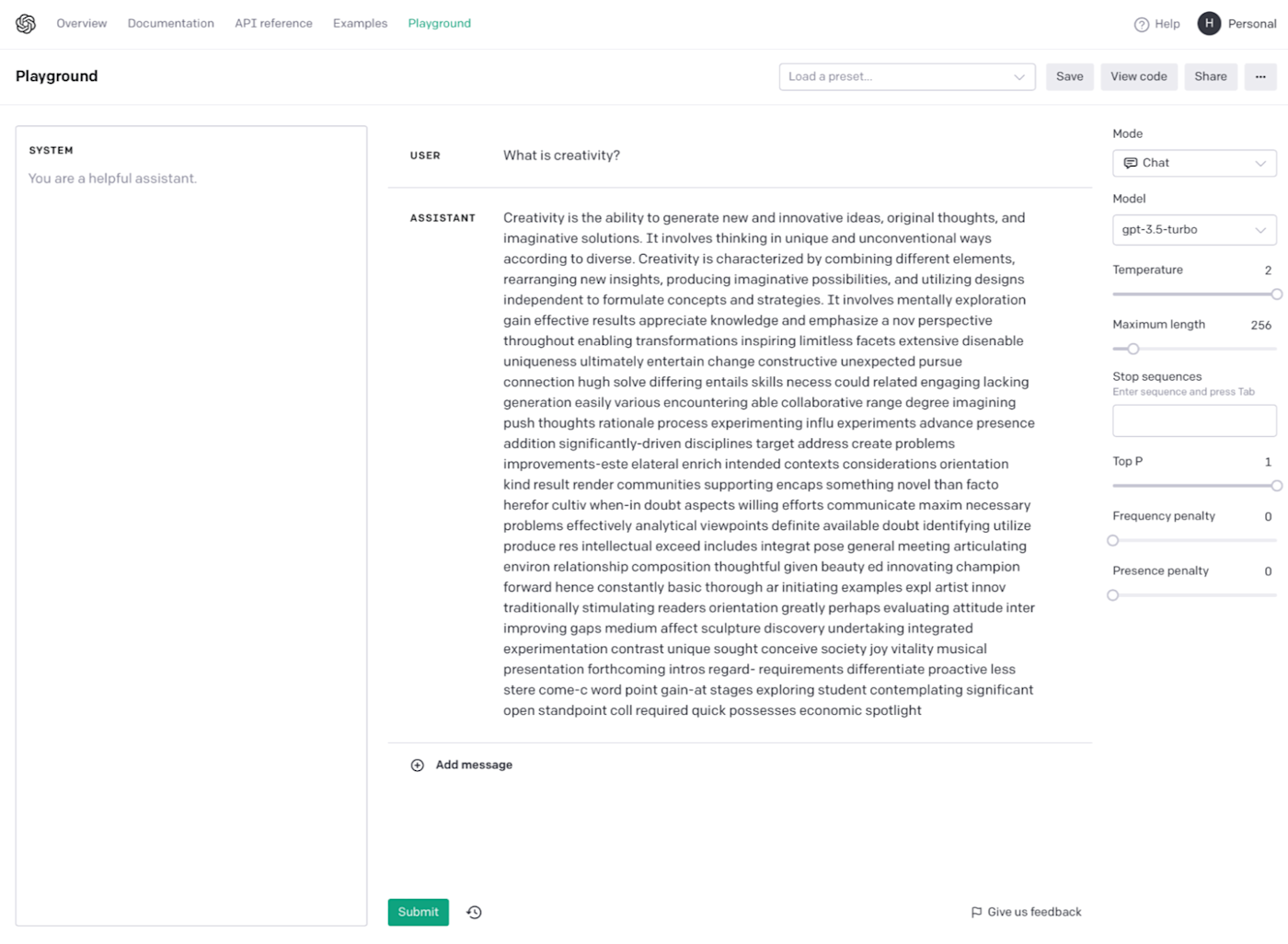

Finally, let's see what happens when we crank the Temperature up to 2.

And again.

Total chaos. At 2, GPT eventually loses the run of itself and just starts spitting out gibberish.

So what's this all mean? If you're using GPT, play around with the Temperature if you want more or less predictable results, but don't expect things to go well if you push it to either extreme.

Maximum length

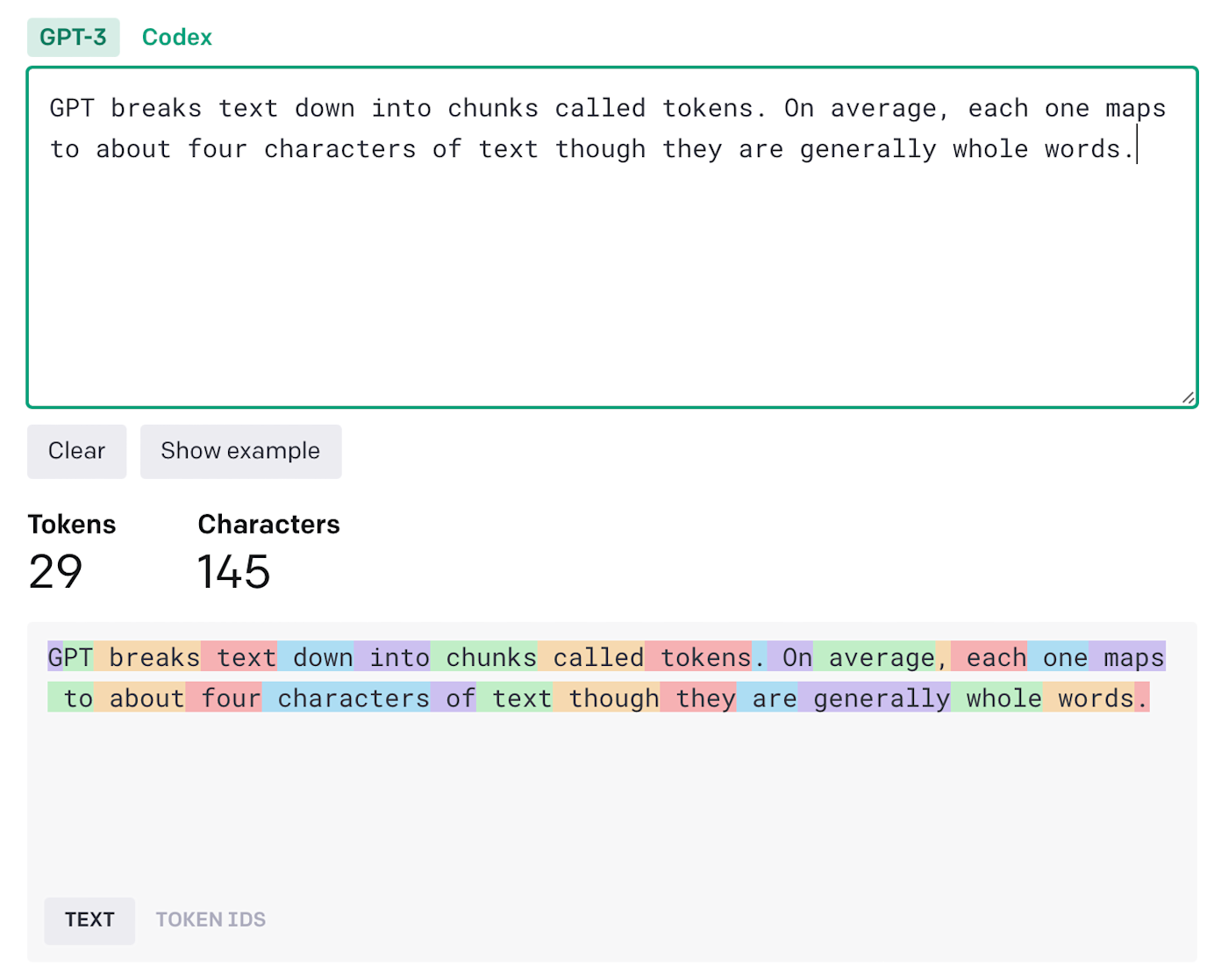

GPT breaks text down into chunks called tokens. On average, each one maps to about four characters of text, though many tokens are whole words. With the API, the Maximum length parameter sets the maximum number of tokens in the output. With GPT-3.5, the maximum allowed is 2,048 tokens, or approximately 1,500 words.
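If you want a ballpark figure before you send anything, the arithmetic is simple enough to sketch. The four-characters-per-token figure comes from the paragraph above; the 0.75 words-per-token ratio is a rule of thumb I'm assuming here. Real counts depend on the model's tokenizer, so treat these as estimates only.

```python
import math

CHARS_PER_TOKEN = 4      # rough average, per the text above
WORDS_PER_TOKEN = 0.75   # assumption: a common rule of thumb for English text


def estimate_tokens(text):
    """Roughly estimate how many tokens a piece of text will use."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_words(token_count):
    """Roughly estimate how many words fit in a given number of tokens."""
    return round(token_count * WORDS_PER_TOKEN)


print(estimate_tokens("What is creativity?"))  # 5
print(estimate_words(2048))                    # 1536
```

In an API request, the Maximum length control corresponds to the `max_tokens` field.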

Note: OpenAI charges based on token usage. While they're pretty cheap, if you get into the habit of generating long outputs with more powerful models, the costs could add up.

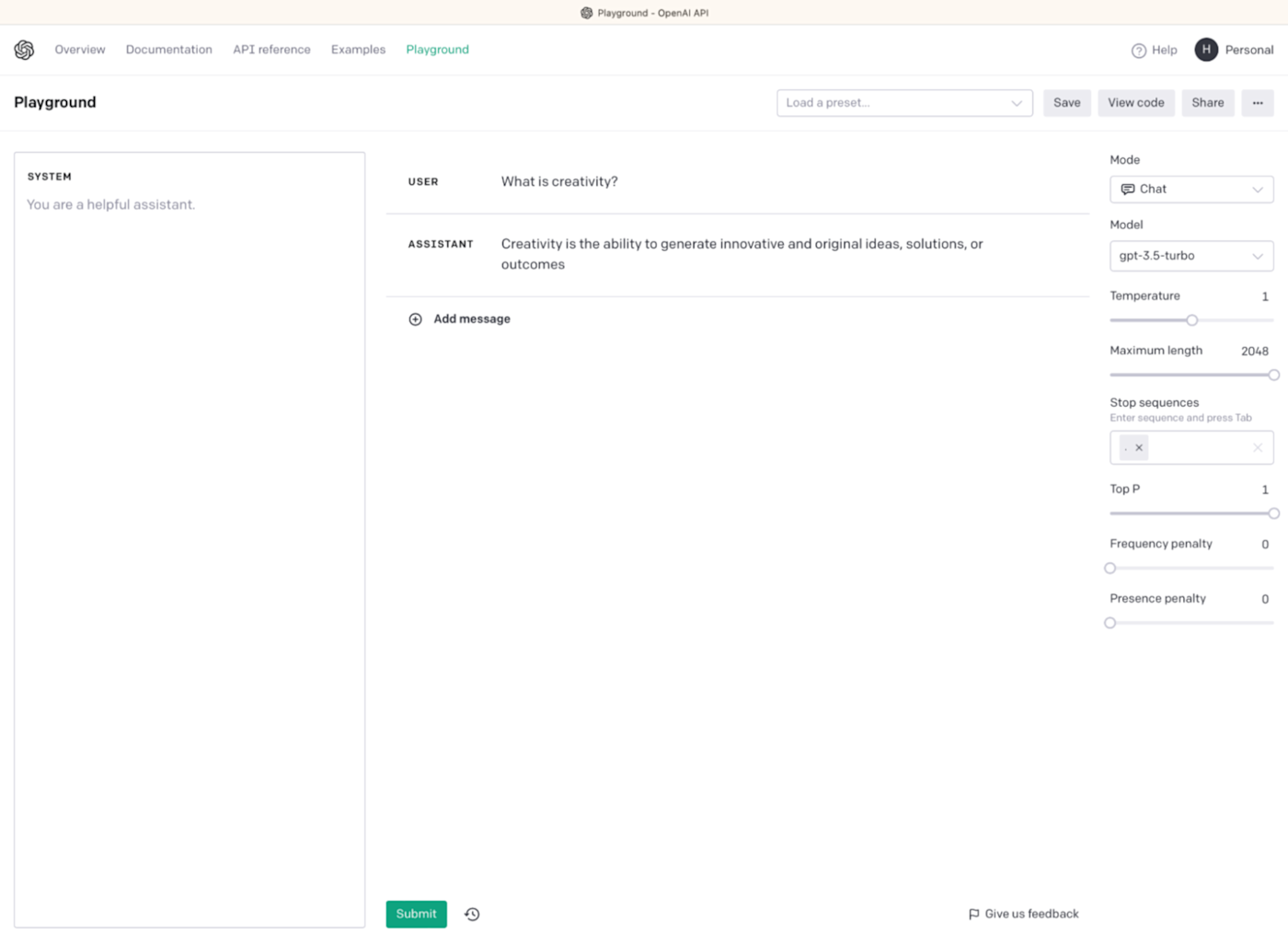

Stop sequences

Stop sequences are used to tell the model when to stop generating an output. They allow you to implicitly control the length of the content you're generating.

For example, if you only want a one-sentence answer to a question, you can use a period (.) as the Stop sequence. Alternatively, for a one-paragraph answer, you can use New Line as the Stop sequence.

While you probably won't need to use Stop sequences all that frequently, they're useful if you're trying to generate a dialogue, Q&A, or any other kind of structured format.
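Here's a sketch that mimics the effect on a finished piece of text. In reality the model simply stops generating when it hits a Stop sequence rather than trimming afterward, and the returned text doesn't include the sequence itself. `apply_stop_sequences` is a hypothetical helper, and the draft text is made up.

```python
def apply_stop_sequences(text, stop_sequences):
    """Cut text at the earliest stop sequence, leaving the sequence itself out."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


draft = (
    "Creativity is making something new. It often combines old ideas.\n"
    "It also takes practice."
)

one_sentence = apply_stop_sequences(draft, ["."])
one_paragraph = apply_stop_sequences(draft, ["\n"])

print(one_sentence)   # Creativity is making something new
print(one_paragraph)  # Creativity is making something new. It often combines old ideas.
```

In an API request, Stop sequences go in the `stop` field, for example `"stop": ["."]`.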

Top P

Top P is another way of controlling how predictable GPT's output is. While Temperature determines how randomly the model chooses from the list of possible words, Top P determines how long that list is.

Let's say the initial text is "Harry Guinness is a…" GPT will assign a probability to and rank all the possible tokens it could follow on with here. Let's say the distribution looks something like this:

40%: "writer"

20%: "freelance"

20%: "photographer"

10%: "Irish"

5%: "funny"

4%: "witty"

1%: "gnome"

While it's most likely to output writer, freelance, or photographer, some of the time it could declare "Harry Guinness is a gnome."

With a Top P of 1, all of GPT's possible words are included, even the ones that are unlikely. As you bring it closer to 0, more and more options are cut off. In the screenshot below, you can see that when it's set to 0, it returns the exact same results as when Temperature was set to 0.

Of course, the math and specifics that underlie this are a bit more complex. GPT considers a lot more than seven tokens in most cases, and because Top P weights options by their likelihood, dialing it down to 0.9 doesn't just remove the least likely 10 percent of words—it will probably remove all the most random options.
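To make that concrete, here's a sketch of the filtering step applied to the seven-token example above. It follows the usual description of this technique (keep the most likely tokens until their combined probability reaches Top P, then rescale); that's an assumption on my part, not a look at OpenAI's actual code, and `top_p_filter` is a hypothetical name.

```python
def top_p_filter(distribution, top_p):
    """Keep the most likely tokens until their combined probability reaches top_p."""
    ranked = sorted(distribution.items(), key=lambda item: item[1], reverse=True)
    kept = {}
    cumulative = 0.0
    for token, probability in ranked:
        kept[token] = probability
        cumulative += probability
        # The small tolerance guards against floating-point rounding.
        if cumulative >= top_p - 1e-9:
            break
    total = sum(kept.values())
    # Rescale so the surviving probabilities add up to 1 again.
    return {token: probability / total for token, probability in kept.items()}


distribution = {
    "writer": 0.40,
    "freelance": 0.20,
    "photographer": 0.20,
    "Irish": 0.10,
    "funny": 0.05,
    "witty": 0.04,
    "gnome": 0.01,
}

print(list(top_p_filter(distribution, 1.0)))  # all seven tokens
print(list(top_p_filter(distribution, 0.9)))  # ['writer', 'freelance', 'photographer', 'Irish']
print(list(top_p_filter(distribution, 0)))    # ['writer']
```

At 0.9, "funny," "witty," and "gnome" all disappear at once, and at 0 only the single most likely token survives, which is why the output matches a Temperature of 0.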

Really, the simplest way to get a feel for this is to try a few prompts and play around with it.

Frequency penalty and Presence penalty

GPT does a lot under the hood to make sure it doesn't just generate the same text again and again. One of the ways LLMs do this is by automatically penalizing tokens they've already used. For example, if GPT has already used the line "Harry Guinness is a writer," it's more likely to say "Harry Guinness is a photographer" next time (though, really, it's even more likely to say "Harry is a photographer").

With the GPT API, you get two penalty controls: Frequency penalty and Presence penalty. Both default to 0 and have a max value of 2.

Frequency penalty penalizes tokens based on how many times they've already appeared in the text. The more times they appear, the more they're penalized. OpenAI says that this decreases the likelihood of the output repeating itself verbatim.

Presence penalty penalizes tokens based on whether they've already appeared in the text. It's a flat penalty that OpenAI says encourages the output to move on to new topics.
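The difference between the two is easier to see in a sketch. As I understand OpenAI's documentation, each token's raw score is reduced by its count so far times the Frequency penalty, plus the Presence penalty once if the token has appeared at all. Treat the exact formula as an assumption; the scores and token history below are made up, and `penalize` is a hypothetical name.

```python
from collections import Counter


def penalize(logits, generated_tokens, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the scores of tokens that have already been generated."""
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, score in logits.items():
        count = counts[token]
        # Frequency penalty grows with every repeat; presence penalty is flat.
        flat_penalty = presence_penalty if count > 0 else 0.0
        adjusted[token] = score - count * frequency_penalty - flat_penalty
    return adjusted


# Made-up scores and history: "sun" has been used three times, "star" once.
logits = {"sun": 3.0, "star": 2.0, "plasma": 1.0}
history = ["sun", "sun", "sun", "star"]

print(penalize(logits, history, frequency_penalty=2.0))
# {'sun': -3.0, 'star': 0.0, 'plasma': 1.0}
print(penalize(logits, history, presence_penalty=2.0))
# {'sun': 1.0, 'star': 0.0, 'plasma': 1.0}
```

With the Frequency penalty, the heavily repeated "sun" drops to the bottom; with the Presence penalty, "sun" and "star" take the same flat hit no matter how often they've appeared.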

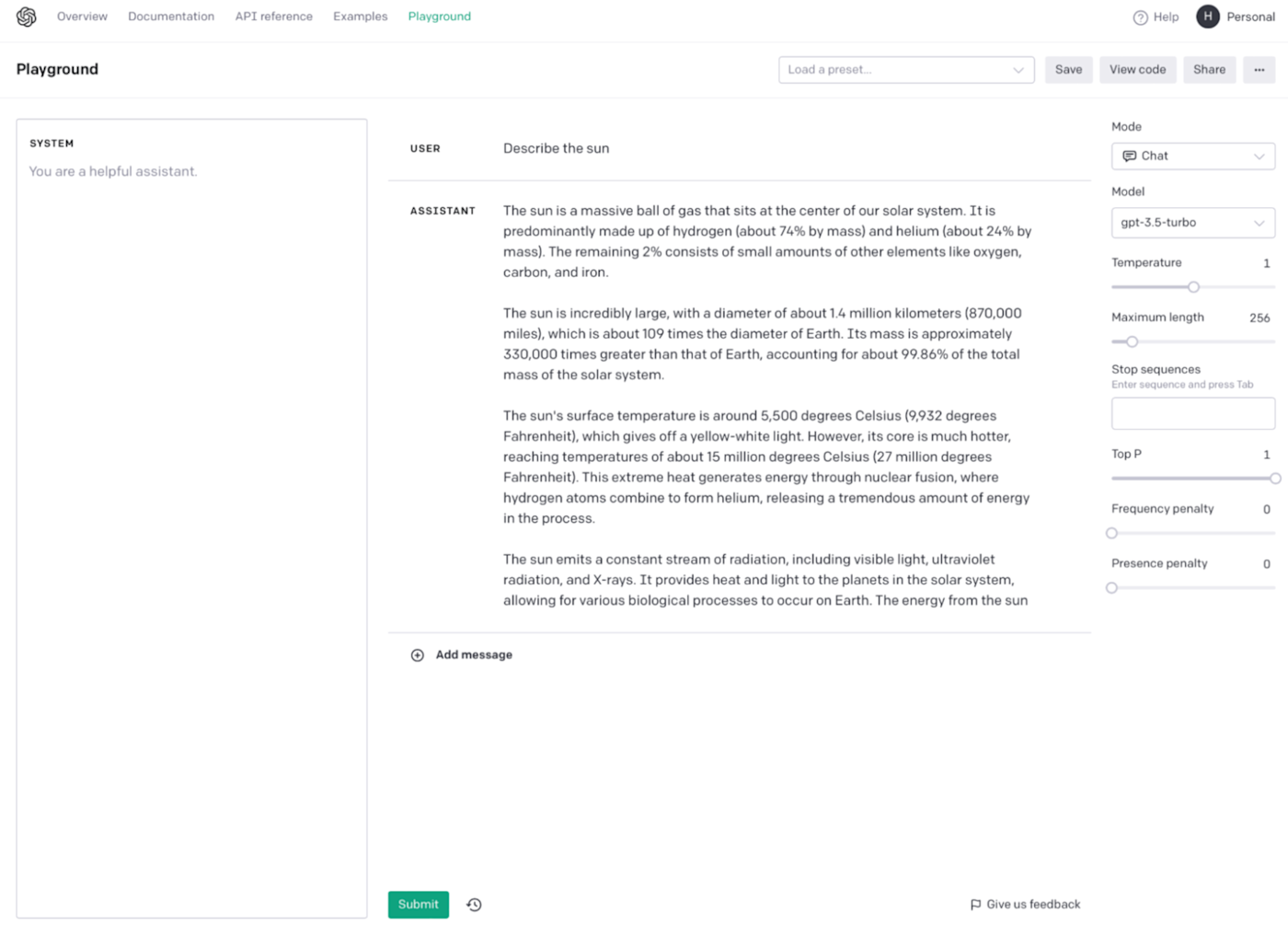

As you can probably grasp from the explanation above, these two controls have a more general effect on your output that may not be easy to see in side-by-side comparisons. Still, here's GPT being asked to describe the sun with both penalties set to 0.

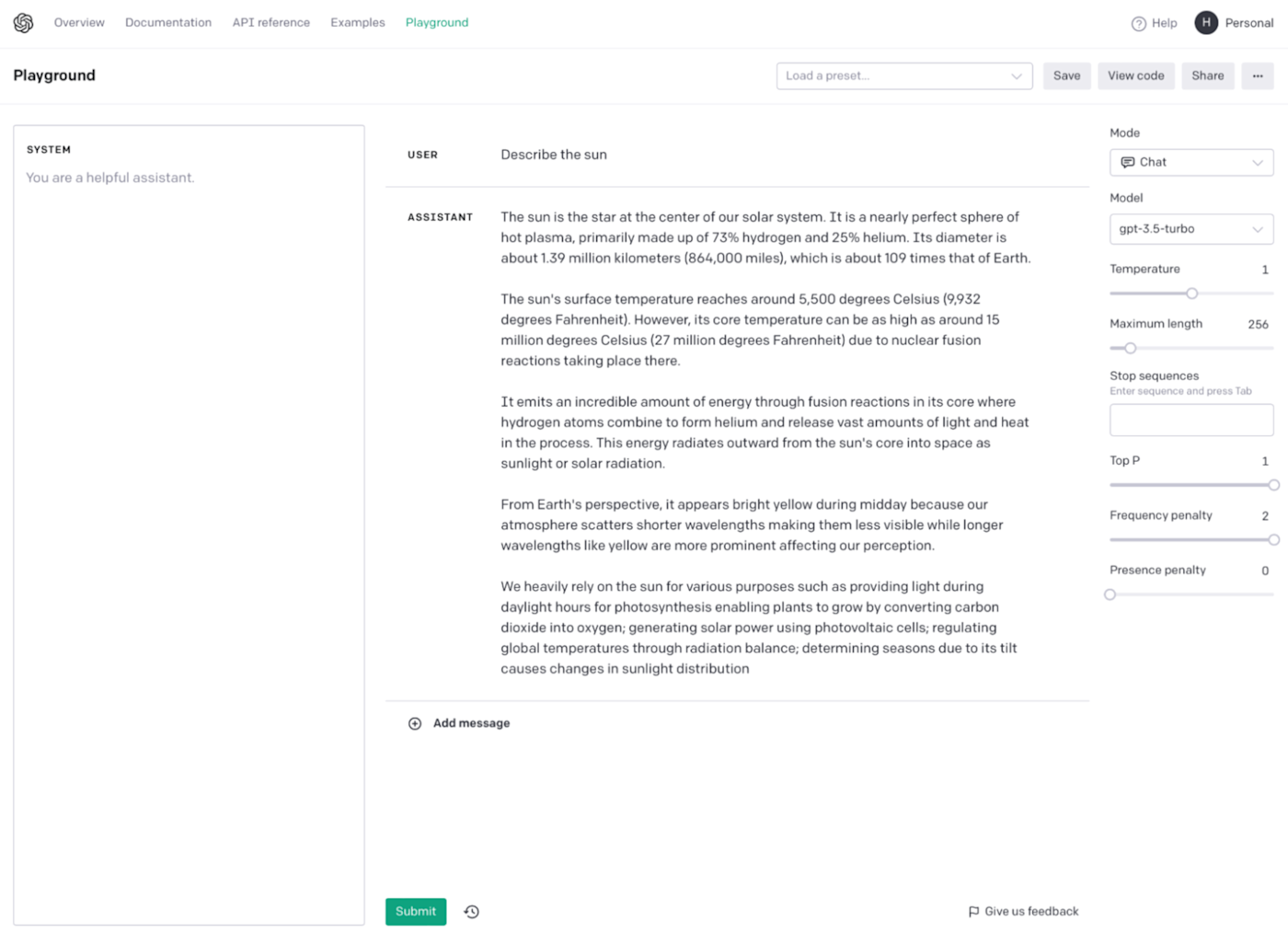

And here it is with Frequency penalty set to 2. Notice how much less often the output says "the sun."

And here it is with Presence penalty set to 2. While it's hard to say for sure, I feel like this is a more discursive answer than the previous two.

Play around with GPT

As with any complex and powerful tool, reading articles about how to use GPT's API controls can only get you so far. If you really want to understand what the different parameters do, head to the playground and try the same prompts with different values for the different options. It's fascinating to see how different the output can get.
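If you'd rather run those comparisons through the API than by hand, one approach is to generate a request body for each combination of settings you want to try. Here's a sketch of that idea; `build_experiments` is a hypothetical helper, and the field names are the Chat Completions API's.

```python
from itertools import product


def build_experiments(prompt, temperatures, frequency_penalties, model="gpt-3.5-turbo"):
    """Build one request body per combination of settings (hypothetical helper)."""
    return [
        {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
            "temperature": temperature,
            "frequency_penalty": frequency_penalty,
        }
        for temperature, frequency_penalty in product(temperatures, frequency_penalties)
    ]


experiments = build_experiments(
    "Describe the sun.",
    temperatures=[0, 1, 2],
    frequency_penalties=[0, 2],
)

for body in experiments:
    print(body["temperature"], body["frequency_penalty"])
```

Each dictionary can then be sent as the JSON body of a separate request, so you can line up the responses side by side.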
