AI is the big new thing, and already, folks are coming up with countless ways they can use it. Some of these ideas, though, are downright ludicrous.

While AI-powered text generation tools like ChatGPT, Copy.ai, and Jasper are incredibly impressive, their usefulness and practicality are very easy to oversell. I've seen plenty of "suggestions" in viral blog posts and Twitter threads that don't actually work but look like they do because the generated text seems plausible. So, if you're thinking about adding an AI content generator to your workflow, there are a few things you should keep in mind.

I'm going to use ChatGPT as an example here, but these concerns apply to any AI text generator. Most of them are built on the same underlying language model, GPT-3. GPT-4 was recently released, but for now it isn't widely available and costs significantly more to use, so many people using AI text generators will likely stick with GPT-3 for the foreseeable future to keep costs down.

Either way, I'm only using ChatGPT to make things simpler—and so you can test my work. (I'll also look at how GPT-4 improves things.) Rest assured, though: you can make the same mistakes with any tool you like.

How do AI text generators work?

The main reason generative AI is far from reliable is that it isn't really giving you information; it's giving you words. How ChatGPT works is worth reading about if you want to dive deep, but the basic idea is that, when given a prompt, it generates the text that its neural network predicts is the best fit, based on all its training data.

But while GPT-3 and GPT-4 are good at predicting a plausible next word and producing grammatically correct sentences, that doesn't mean they can overcome the limits of the information they were trained on. This is what has led to some of their issues with bias, and it's also why we need to be careful about relying on GPT in certain circumstances.
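To make "predicting a plausible next word" a little more concrete, here's a deliberately tiny sketch in Python. It's a toy bigram model that just counts which word follows which, not how GPT works internally (GPT uses a large neural network), and the three-sentence corpus is made up for illustration. Still, it shows the same failure mode: by stitching together fragments it has seen, the model produces a fluent sentence that appears nowhere in its training data and happens to be false.

```python
from collections import Counter, defaultdict

# A made-up, three-sentence "training corpus" (real models see vastly more text).
CORPUS = " ".join([
    "VLT took the first exoplanet picture .",
    "the first exoplanet picture was blurry .",
    "JWST took the sharpest infrared picture .",
])


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model: dict[str, Counter], start: str, max_words: int = 10) -> str:
    """Greedily append the most frequent follow-on word until a period."""
    words = [start]
    for _ in range(max_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # never saw this word followed by anything, so stop
        next_word = candidates.most_common(1)[0][0]
        words.append(next_word)
        if next_word == ".":
            break
    return " ".join(words)


model = train_bigrams(CORPUS)
print(generate(model, "JWST"))  # fluent, but not a sentence from the corpus
```

Every individual word pair in the output was seen during training, which is why the result reads smoothly. Nothing in the model checks whether the sentence as a whole is true.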

Now that we're all up to speed on AI content generators, let's dive in.

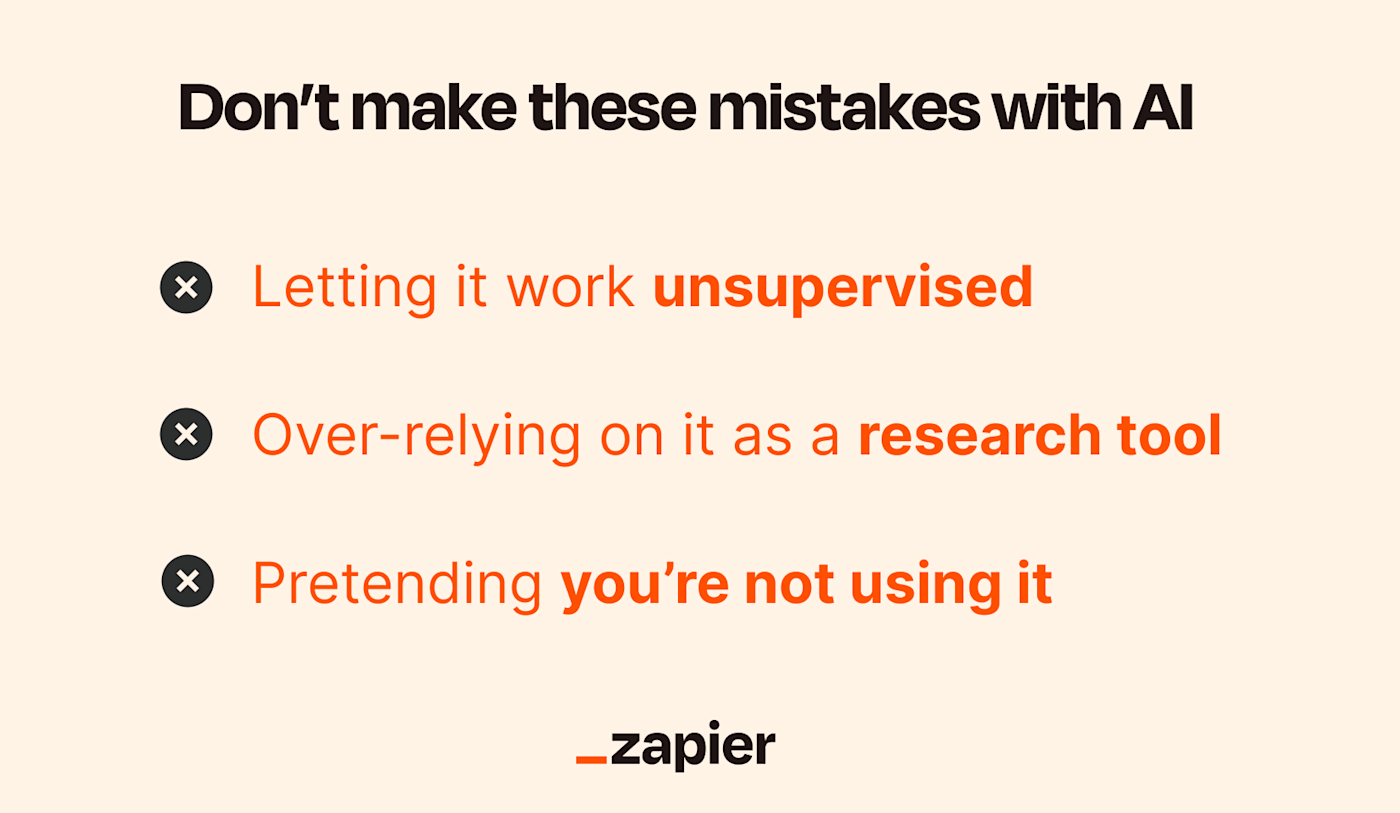

Letting AI work unsupervised

The toughest thing about ChatGPT, other GPT-powered tools, and even Google's newly unveiled Bard is just how plausible almost everything they say sounds. That's definitely a feature, but it's often also a major bug.

Google demonstrated this nicely in its big launch demo of Bard. One of the suggested prompts was: "What new discoveries from the James Webb Space Telescope can I tell my 9 year old about?" In response, Bard offered three suggestions, one of which said: "JWST took the very first pictures of a planet outside of our solar system." While that sounds exactly like the kind of thing a space telescope would do, it's not true: the European Southern Observatory's Very Large Telescope (VLT) took the first image of an exoplanet in 2004.

CNET fared even worse. Of 77 AI-written finance stories it quietly published on its website, it had to issue corrections for 41, including basic explainers like "What is Compound Interest?" and "Does a Home Equity Loan Affect Private Mortgage Insurance?" The articles were supposedly "assisted by an AI engine and reviewed, fact-checked and edited by our editorial staff." Clearly, that review didn't catch the errors.

The problem extends to marketing too. There are plenty of ways AI can fit into a content generation pipeline, but if you credulously publish whatever the bots create, you're very likely to publish factual mistakes. This is especially true when you're writing about a new product, service, or tool that won't be well represented in the training data. At launch, Bing's AI features claimed that a pet hair vacuum cleaner had a 16-foot cord, even though it's a handheld model. If you ask it to describe the product you're trying to sell, expect it to make up plausible but completely fictional features.

All this should serve as a warning not to let AI-powered tools work away unsupervised. OpenAI says GPT-4 is better at not making things up, but it still warns users that it can, and that folks should continue to be careful in high-stakes situations.

Over-relying on it as a research tool

From the start, ChatGPT has been heralded as an alternative to search—and, in particular, Google. Its short summary answers are clearly presented, super coherent, and not laden down with ads. It's why Microsoft is adding it to Bing.

And while ChatGPT (and Bing) are good at summarizing relatively simple information and responding to clear fact-based questions, they present every answer with authority and certainty. It's easy to convince yourself that what they're saying must be correct, even if it isn't.

Now, full credit to OpenAI here. This used to be a bigger problem with ChatGPT, and the AI has already improved a lot. When I tested it a few months ago, I asked for a list of popular blogs that covered remote work, and its suggestions were terrible. Of the five, three had dead links, one was the marketing site for a psychic, and one was a remote work job site.

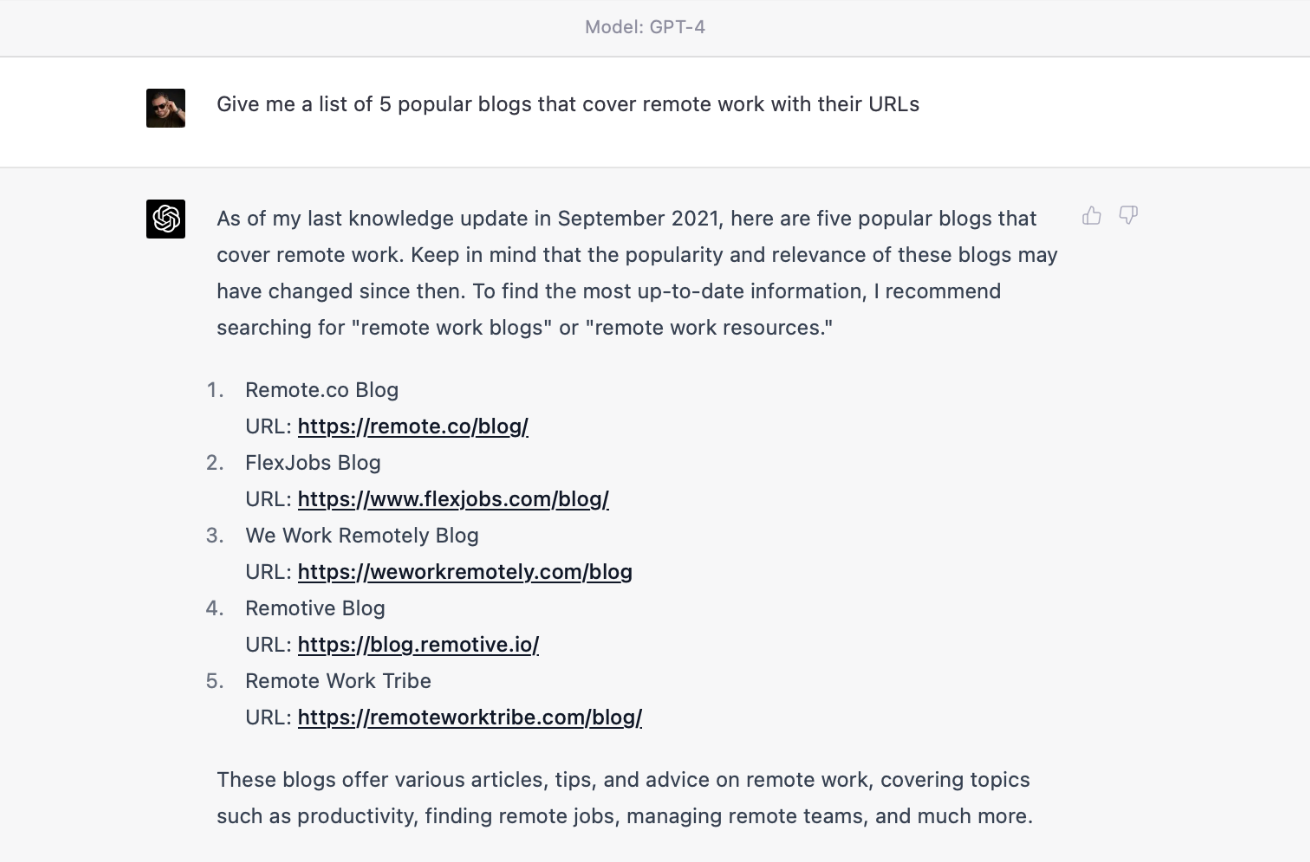

When I tested it again with GPT-3, its suggestions were a bit better (no psychics, at least), but there were still a few problems.

The first three links returned 404 errors, even though Remote.co, Zapier, and FlexJobs all have blogs. The Remote Revolution doesn't seem to exist, at least not at that URL. The Buffer link works, though it redirects to a different URL and points to a roundup blog post rather than a blog about remote work.

And even with GPT-4, ChatGPT's suggestions were a mixed bag.

Only the first URL 404s now, but the list is really four job sites and a psychic's blog, and ChatGPT itself recommends I use Google instead.

Regardless of which model I used, ChatGPT clearly didn't do very well here. Sure, the list is presented clearly, but as GPT-4 itself says, I would get much better results just by Googling "remote work blogs." At least then, all the URLs would be accurate, and Google would have identified the best or most trustworthy ones and ranked them near the top.

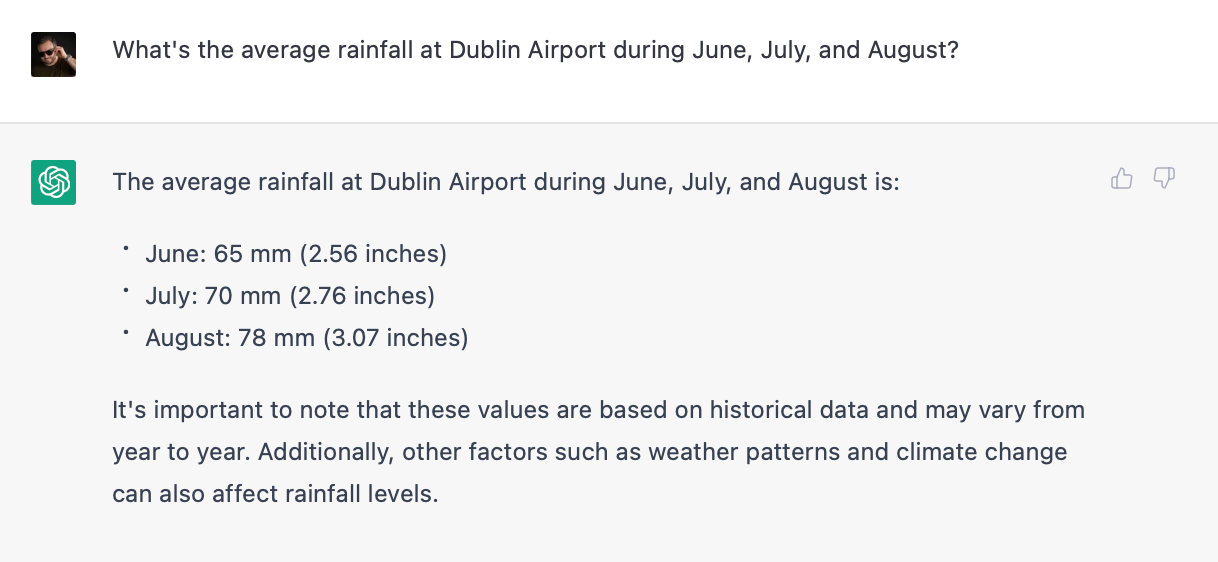

And what about questions with clear factual answers? When I asked ChatGPT with GPT-3 for the average rainfall in Dublin during June, July, and August, it gave me answers that were close to the official values, but still wrong.

Again, this is information that's easy to find online with a quick Google search (you might not even have to click, depending on how you phrase it), but ChatGPT is happy to present the wrong answers concisely, coherently, and authoritatively. The only way to know that it's lied to you is to go and check the details yourself.

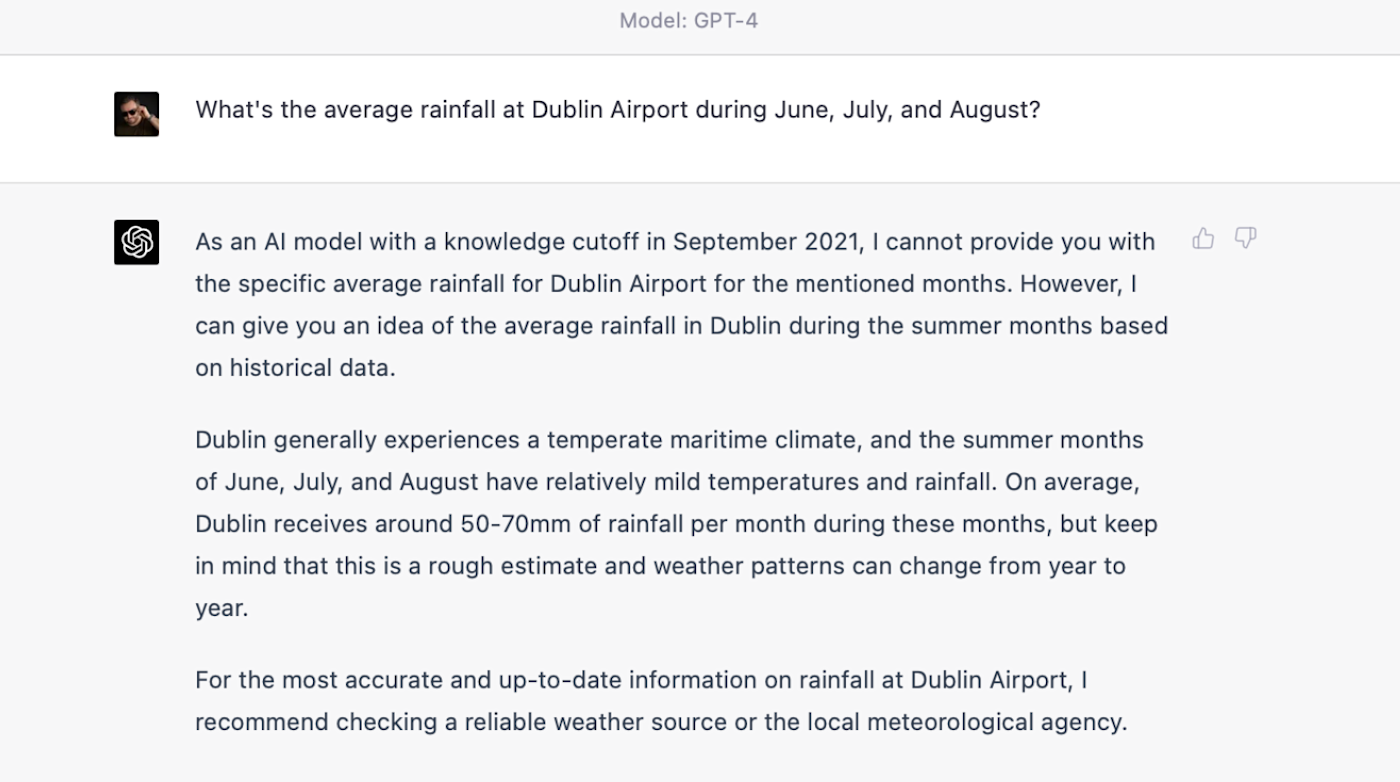

Interestingly, with GPT-4, ChatGPT takes a very different approach: it refuses to answer and recommends I check the local weather service.

All this is to say that AI-powered tools are in no way a replacement for existing research tools, at least not yet. Sure, they can be useful for generating a few ideas, but they're likely to lead you astray if you aren't careful. And it's very interesting that GPT-4 recognizes this fact and refuses to answer.

Pretending you're not using it

While the previous issues have more to do with the mismatch between what AI tools can actually do right now and what they appear to be able to do, this one is more of a general ethical concern.

Like a lot of people working in the tech space, I'm excited about what AI-powered tools will be capable of over the next few years, and I'm keen to see where they can fit into my workflow. But it's not OK to go all-in on AI without letting your clients, customers, or readers know.

Other than where it's clearly flagged, I wrote this article. That's what I'm paid to do, and it's what my contract says I have to do. If you're going to use AI tools for anything more than generating bad jokes, be transparent about it. Otherwise, like what happened with CNET, things could get embarrassing.

How should you use AI?

Right now, it feels like we're at an inflection point. AI is almost certainly going to be an important part of many professional workflows going forward, but exactly how is still up in the air. GPT-powered tools absolutely shouldn't be left to do their own thing, and other large language models don't seem capable of it yet either. That means they're more likely to be useful for speeding up simple tasks.

With that in mind, here are some things you can do right now with AI:

With Zapier, you can connect ChatGPT to thousands of other apps to bring AI into all your business-critical workflows.