The robots are here! Or at least some computers that can somewhat, kind of, in limited ways, do things by themselves in ways that they weren't directly programmed to are here. It's complicated.

With the rise of text-generating AI tools like GPT-3 and GPT-4, image-generating AI tools like DALL·E 2 and Stable Diffusion, voice-generating AI tools like Microsoft's VALL-E, and everything else that hasn't been announced yet, we're entering a new era of content generation. And with it come plenty of thorny ethical issues.

Some of the organizations building generative AI tools have developed principles that guide their decisions. Non-profits, like the AI Now Institute, and even governments, like the European Union, have weighed in on the ethical issues in artificial intelligence. But there are still plenty of things you need to consider for yourself if you're going to use AI in your personal or work life.

Ethics in AI: What to look out for

This won't be an exhaustive list of all the possible AI ethical issues that are going to crop up over the next few years, but I hope it's able to get you thinking.

The risk environment

Most of the new generation of AI tools are pretty broad. You can use them to do anything from writing silly poems about your friends to defrauding grandparents by impersonating their grandchildren. How careful you have to be with how you use AI, and how much human supervision you need to give to the process, depends on the risk environment.

Take auto-generated email or meeting summaries. Really, there will be almost no risk in letting Google's forthcoming AI summarize all the previous emails in a Gmail thread into a few short bullet points. It's working from a limited set of information, so it's unlikely to suddenly start plotting world domination, and even if it misses an important point, the original emails are still there for people to check. It's much the same as using GPT to summarize a meeting. You can fairly confidently let the AI do its thing, then send an email or Slack message to all the participants without having to manually review every draft.

But let's consider a more extreme option. Say you want to use an AI tool to generate financial advice—or worse, medical advice—and publish it on a popular website. Well, then you should probably be pretty careful, right? AIs can "hallucinate" or make things up that sound true, even if they aren't, and it would be pretty bad form to mislead your audience.

CNET found this out the hard way. It had to issue corrections for 41 of the 77 AI-written financial stories it published. While we don't know if anyone was actually misled by the stories, the problem is that they could have been. CNET is (or was) a reputable brand, so the things it publishes on its website carry some weight.

And it's the same if you're planning to just use AIs for your own enjoyment. If you're creating a few images with DALL·E 2 to show to your friend, there isn't a lot to worry about. On the other hand, if you're entering art contests or trying to get published in magazines, you need to step back and consider things carefully.

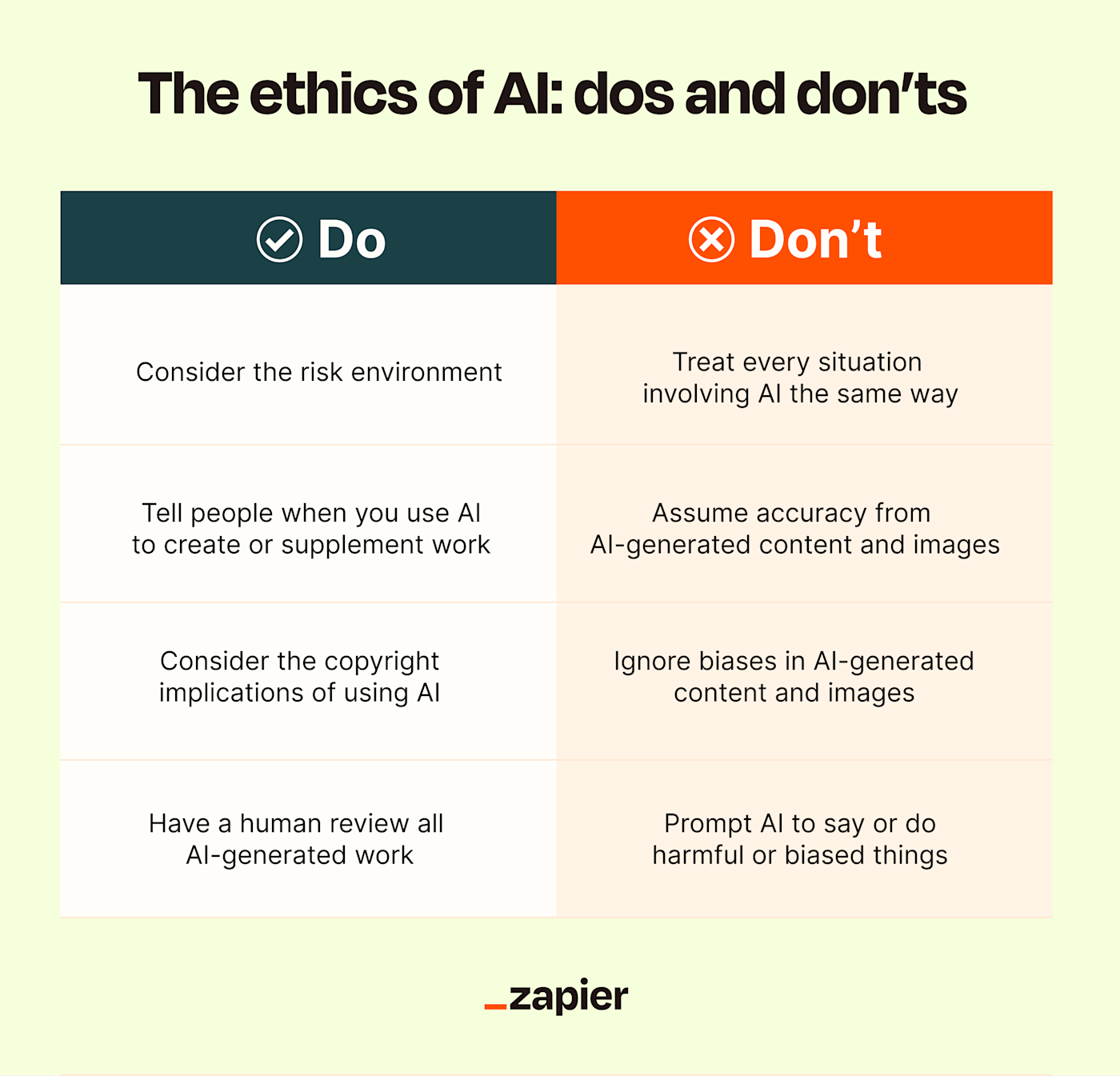

All this is to say that, while we can talk about some of the ethical issues with artificial intelligence in the abstract, not every situation is the same. The higher the risks of harm, the more you need to consider whether allowing AI tools to operate unsupervised is advisable. In many cases, with the current tools we have available, it won't be.

The potential for deception

Generative AI tools can be incredibly useful and powerful, but you should always disclose when you use them. Erring on the side of caution and making sure everyone knows that an AI is generating something mitigates a lot of the potential harms.

First up, if you commit to telling people when you use AI, you make it impossible to, say, cheat on an assignment with ChatGPT. Similarly, you can't turn in AI-generated work to your clients without them being aware it's happening.

Second, if you tell people you're using AI, then they can use their judgment to assess what it says. One of the biggest issues with tools like ChatGPT is that they state everything with the same authority and certainty, whether it's true or not. If someone knows that there may be factual or reasoning errors, they can look out for them.

Finally, lying is bad. Don't do it. Your parents will be disappointed, and so will I.

Copyright concerns

Generative AIs have to be trained with huge amounts of data. For text generators like GPT-3 and GPT-4, it's basically as much of the public internet as possible. For image generators, it's billions of photos scraped from, you guessed it, the internet. Some copyrighted material has inevitably slipped in there.

And that's why AI developers are currently being sued. OpenAI and Microsoft are being sued by anonymous copyright holders for using code hosted on GitHub to train Copilot, an AI that can help write code. Stability AI, developer of Stable Diffusion, is being sued by both artists and Getty Images for using their images without permission. The Getty Images case is particularly damning—there are examples of Stable Diffusion adding a Getty Images watermark to some generated images.

On top of all that, the rights situation of the various generative AIs is up in the air. The U.S. Copyright Office suggests that anything these tools produce won't be copyrightable. And while DALL·E 2 gives all users the right to use the images they generate for commercial purposes, Midjourney doesn't, and it makes it so anyone can use the images you generate.

All in all, the legal situation around using generative AIs is messy and undefined. While no one is likely to object if you use an AI to create a few social media posts, you might end up in trickier situations if you try to mimic a particular living artist. Or you could spend money publishing a book only to find all the images in it can be used by anyone.

Bias

Generative AIs are trained on datasets of human-created content. That means that they can generate stereotyped, racist, and other kinds of biased and harmful content, either on their own or with human prompting. Developers are doing their best to counter the issues with their datasets, but it's an incredibly difficult and nuanced situation, and with datasets that include billions of words and images, it's a practically impossible task.

While I'm not advising you to stay away from AI tools because of their biased training data, I am suggesting that you be aware of it. If you ask an AI tool to generate something that could be subject to biases in the data, it's on you to make sure it doesn't generate stereotyped or harmful content.

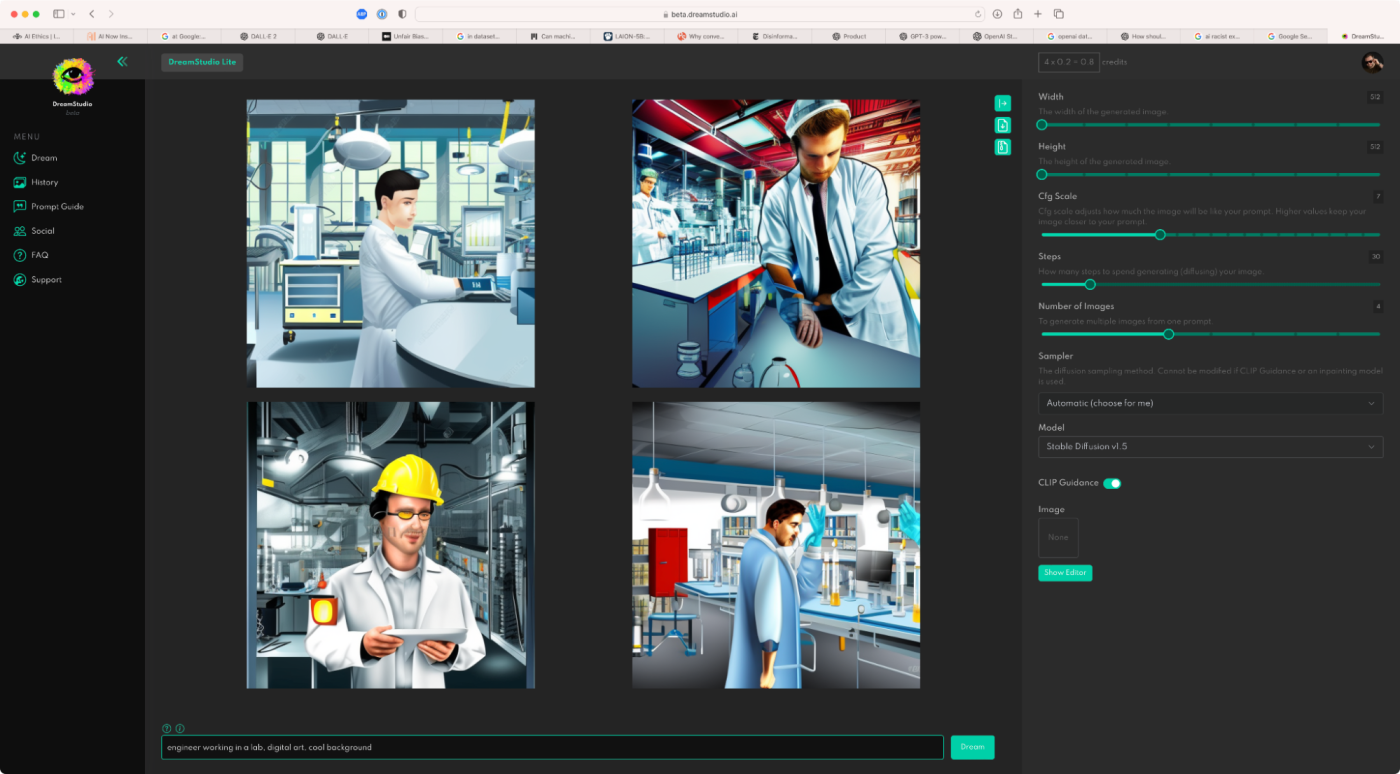

Take the screenshot above. I asked Stable Diffusion to generate "engineer working in a lab, digital art, cool background." Engineering is a stereotypically male field, and look: of the five figures Stable Diffusion generated, four are clearly men and one is, at best, ambiguous.

While this is a fairly mundane example, the biases in the datasets run deep. If you're going to use generative AI tools, it's important to acknowledge that—and to take steps to correct for it. While most people developing AI tools are working to make them better than their data, they still rely on training data scraped from the cesspit that is the open internet.

Ethics and AI: When in doubt, review everything

One of the big promises of AI is that it's able to operate without constant, active human intervention. You don't need to write the metadata for your blog post—Jasper will do it for you. You don't need to spend hours in Photoshop—DALL·E 2 can make the necessary images for your social media campaign. And so on and so on.

And while some of the generative AIs can now do things automatically, for the time being, you should be very cautious about letting AIs work unsupervised, especially if they're generating anything important to you or your business.

So, if in doubt, err on the side of having AI create drafts that you—or someone else on your team—can review or fact-check. That way, even if an AI tool you're using hallucinates and makes up some facts or leans into an unhelpful or harmful stereotype, there's a human in the loop who can correct things.
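If you're wiring AI tools into an automated workflow, that review step can be made explicit. Here's a minimal Python sketch of the idea, assuming you sort drafts into just two risk levels. The names `Risk`, `Draft`, `approve`, `can_publish`, and `publish` are hypothetical, made up for illustration, and aren't part of any real tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Risk(Enum):
    LOW = 1   # e.g. a meeting summary where the originals are still available
    HIGH = 2  # e.g. financial or medical advice published to an audience


@dataclass
class Draft:
    text: str
    risk: Risk
    reviewed_by: Optional[str] = None  # the human who checked it, if anyone


def approve(draft: Draft, reviewer: str) -> Draft:
    """Record that a human has reviewed and fact-checked the draft."""
    return Draft(draft.text, draft.risk, reviewed_by=reviewer)


def can_publish(draft: Draft) -> bool:
    """Low-risk drafts can go out as-is; high-risk drafts need a sign-off."""
    return draft.risk is Risk.LOW or draft.reviewed_by is not None


def publish(draft: Draft) -> str:
    """Return the text to send out, with a note disclosing AI involvement."""
    if not can_publish(draft):
        raise PermissionError("High-risk AI draft needs human review first")
    return f"{draft.text}\n\n[Drafted with an AI tool]"
```

In this sketch, a low-risk meeting summary passes straight through `publish`, while a high-risk draft raises an error until someone calls `approve` on it. Where you draw the line between low and high risk is a judgment call that depends on your own situation.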
