It's hard to tell what's real on the internet anymore.

In early 2023, an AI-generated photo of the Pope in a snazzy puffer jacket duped millions, including celebrities, until it was sadly debunked. And there's plenty more where that came from. The rise of readily available, free text and image generation tools has inundated the web with artificial content—from AI-written Buzzfeed quizzes that promise to compose a rom-com about you in 30 seconds to the dozens of news websites put together entirely with AI.

And those are the tamest examples. There have reportedly been deepfake ads from presidential candidates, and what appeared to be a picture of an explosion at the Pentagon went viral on Twitter, leading to a brief dip in the stock market until the Department of Defense confirmed that the image was fake.

Europe's law enforcement agency expects as much as 90% of the internet to be synthetically generated by 2026. Most of it won't carry a disclaimer either, and you can't always expect the DoD to come to the rescue.

Why is detecting AI-generated content so hard?

Generative AI models are trained on mountains of existing human work, like written text and photos, so that they can mimic our behavior. Their whole point is to achieve human-level fluency, and they grow more sophisticated at it over time, so much so that it's close to impossible for an untrained eye to tell AI-generated content from the human-made kind, one study concluded. Other research found that participants trusted AI-generated faces more than real ones and believed fake news articles were credible 66% of the time.

Building a detection system that can keep up with AI's rapid progress is a challenge that has stumped researchers for years. Though some efforts have been successful to an extent with novel methods, such as looking for how robotic a piece of text is and exploiting geometric irregularities in manipulated faces, each loses its effectiveness when a new and better generative AI tool is released.

AI detection tools are also usually easy to evade: while a detection algorithm engineered by Nvidia could flag AI-generated images, its efficacy would drop dramatically whenever the image was even slightly resized. Most of these tools are designed to spot the mistakes AI leaves behind while synthesizing an artificial photo and can't yet detect when a real picture is edited or tweaked with AI. Similarly, paraphrasing an AI-generated text is often enough to break a detector.

"We show both theoretically and empirically, that these state-of-the-art detectors cannot reliably detect LLM outputs in practical scenarios," wrote an author of a recent University of Maryland report.

Why is it important to build effective tools for AI content detection?

Generative AI tech lowers the cost of disinformation and enables bad actors to quickly build false narratives, as was evident in the aftermath of the Pentagon turmoil. With an AI chatbot like ChatGPT and a text-to-image generator like DALL·E 2, one can synthesize fake articles, faces, and pictures within minutes. Experts and governments fear it could be weaponized, for instance, as a means of spreading alarmingly convincing conspiracy theories at scale.

Plus, the absence of a reliable AI detection tool leaves room for false positives. One Texas professor, for example, threatened to fail his entire class after he ran their assignments through ChatGPT and the chatbot told him the students had used AI to do their homework, even when they hadn't.

In day-to-day activities as well, a tool that helps us consistently spot artificial content is now indispensable. Whether it's a piece of information you've just come across on your social timeline, or a suspicious text you got with a profile picture of someone you know, you'll need an AI detection tool at every step of the way to verify data and identities.

How to detect AI-generated content

Though none of the available AI detection tools so far are foolproof, there are a few you can turn to every now and then when you're not sure if the text you're reading or the media you're looking at is created by a bot.

Hugging Face

The open source AI community Hugging Face has a free tool that lets you instantly recognize AI images. All you have to do is upload the picture, and in seconds, the web app will tell you the likelihood of it being produced by a machine or a human. It's trained on a large sample of images labeled as "artificial" and "human," so there's a chance its efficacy may drop as AI creation services improve.

The tool's accuracy is hit and miss at best. When I ran the viral Pope-in-a-puffer-jacket picture and another DALL·E 2 creation depicting a teddy bear skateboarding in Times Square through it, Hugging Face correctly labeled both as artificial. But it struggled against media generated from other platforms, like neural.love. For an AI-generated photo of the Eiffel Tower on the moon, it told me there was a 57% chance it was made by a human. 🤔

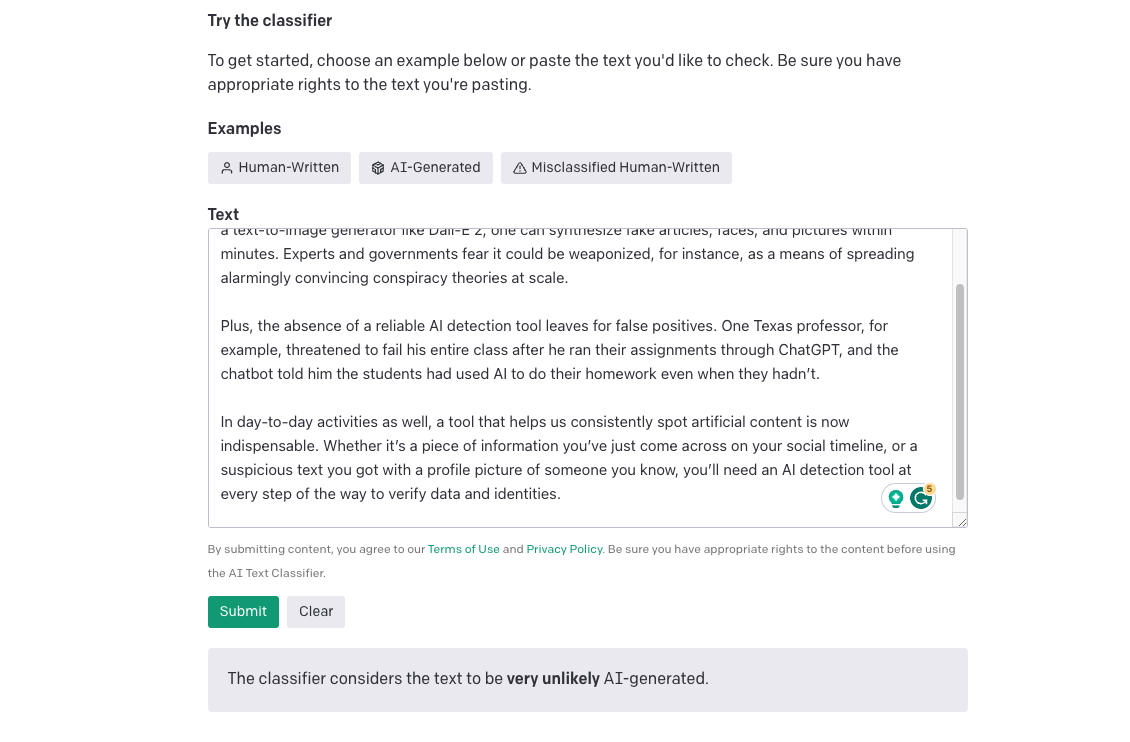

OpenAI's AI Text Classifier

The Microsoft-backed research firm behind the viral ChatGPT bot also offers a tool to detect AI-written text, plainly called the AI Text Classifier. Similar to Hugging Face, OpenAI fed its detector a huge corpus of pre-labeled text, some written by humans and some by machines, until it could tell the difference on its own.

It needs a minimum of 1,000 characters to function and can spot AI-written text not just from ChatGPT but also from other generators like Google Bard. Once you submit your text, it hedges its answer: based on how confident it feels, it will label the document as "very unlikely," "unlikely," "unclear if it is," "possibly," or "likely AI-generated."

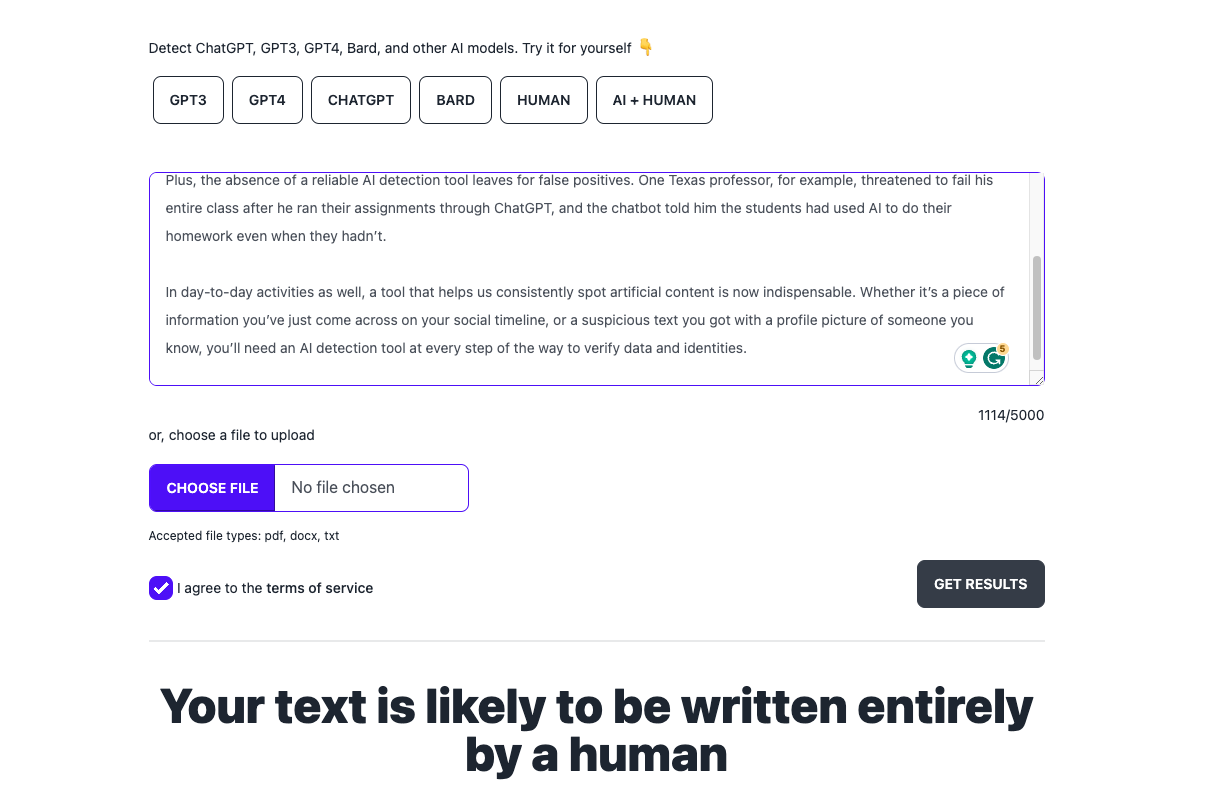
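To make the labeling step concrete, here is a minimal Python sketch of how a confidence score could be bucketed into those five labels. Everything in it is an assumption for illustration: the `CUTOFFS` values, the `label_for` helper, and the idea that the score is a single number between 0 and 1 are hypothetical, and the classifier's real internals may look nothing like this.

```python
from bisect import bisect_right

# Hypothetical cutoffs between the five labels (illustrative values only).
CUTOFFS = [0.10, 0.45, 0.90, 0.98]

LABELS = [
    "very unlikely AI-generated",
    "unlikely AI-generated",
    "unclear if it is AI-generated",
    "possibly AI-generated",
    "likely AI-generated",
]


def label_for(score: float) -> str:
    """Map a score in [0, 1] to one of the five hedged labels."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    # bisect_right counts how many cutoffs the score has reached or passed,
    # which is exactly the index of the matching label.
    return LABELS[bisect_right(CUTOFFS, score)]


if __name__ == "__main__":
    for s in (0.05, 0.50, 0.99):
        print(s, "->", label_for(s))
```

The wide middle band is the point of the design: a detector that only answers "AI" or "human" has to guess on borderline text, while one with an "unclear" bucket can decline to commit.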

GPTZero

Developed by a Princeton grad, GPTZero is another AI-generated text detector that's mainly built for professors who want to know if the essays their students are turning in are authored by ChatGPT. It evaluates them on two parameters.

The first is perplexity: if the submission is familiar to GPTZero, which is trained on more or less the same data as AI bots, it's more likely to be machine-generated.

The second is burstiness: GPTZero sifts the content for how uniform it is. AI sentences are more robotic in their rhythm, whereas humans write with a greater variance in sentence length.
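The sentence-length idea is simple enough to try yourself. The Python sketch below is a rough illustration, not GPTZero's actual method: the function names, the naive sentence splitter, and the choice of metric (standard deviation of sentence length divided by the mean) are all assumptions made for this example.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Return the word count of each sentence, using a naive splitter."""
    # Split wherever ., ! or ? is followed by whitespace. This will trip
    # over abbreviations like "Dr. Smith", which is fine for a sketch.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Standard deviation of sentence length divided by the mean.

    Values near 0 mean every sentence is about the same length;
    larger values mean the rhythm is more uneven.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure any variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


if __name__ == "__main__":
    uniform = "The cat sat down. The dog ran off. The bird flew away."
    varied = (
        "Stop. The storm rolled in over the hills before anyone "
        "had time to close the windows. We ran."
    )
    print(length_variation(uniform))
    print(length_variation(varied))
```

A low score alone proves nothing, since plenty of people write in even, measured sentences. That is one reason a real detector would combine a signal like this with others rather than rely on it by itself.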

What's next for AI content detection?

Detection tools can spot AI-generated text and media with reasonable accuracy for now. But their efficacy will remain a moving target: tech companies like OpenAI and Google are already gearing up to roll out next-gen AI architectures that will make the synthetic images and text from their chatbots and text-to-image generators far more realistic. Plus, for detection tools to be truly effective, they'll need to become more accessible and be integrated into the websites we frequent most (like social media).

Until that happens, hoaxes will keep getting more creative—and people will continue to fall for them.
