Artificial intelligence (AI) models are computer programs and algorithms that have been trained on large datasets so that they can understand the patterns and relationships in the data and then make predictions or decisions when presented with new data.

Image identification models are one of the easiest to understand: the AI model is trained on millions or billions of image-text pairs. If there's a photo of a dog, the text says dog; if there's a photo of a parrot, the text says parrot; and so on, and so on. After all the training is complete, when the model is presented with a new image, it should be able to identify a dog or a parrot or anything else in its dataset even if it hasn't seen that exact image before.

All AI models have similar ideas and techniques underpinning them, though it might not always be as obvious or easy to understand. And what's going on under the hood isn't always clear even to AI experts.

I've been writing about AI since long before ChatGPT existed, but I'm not an AI researcher. So this article isn't going to be a technical explanation of the last three decades of AI research. Instead, I'm going to make an incredibly varied and complex subject as simple and understandable as possible. This means I'll be talking in generalities and doing away with some of the nuance—please keep that in mind as you read. If you want to dig into the more technical side of things, I'll link to some resources at the end.

Now, let's dig in.

Table of contents:

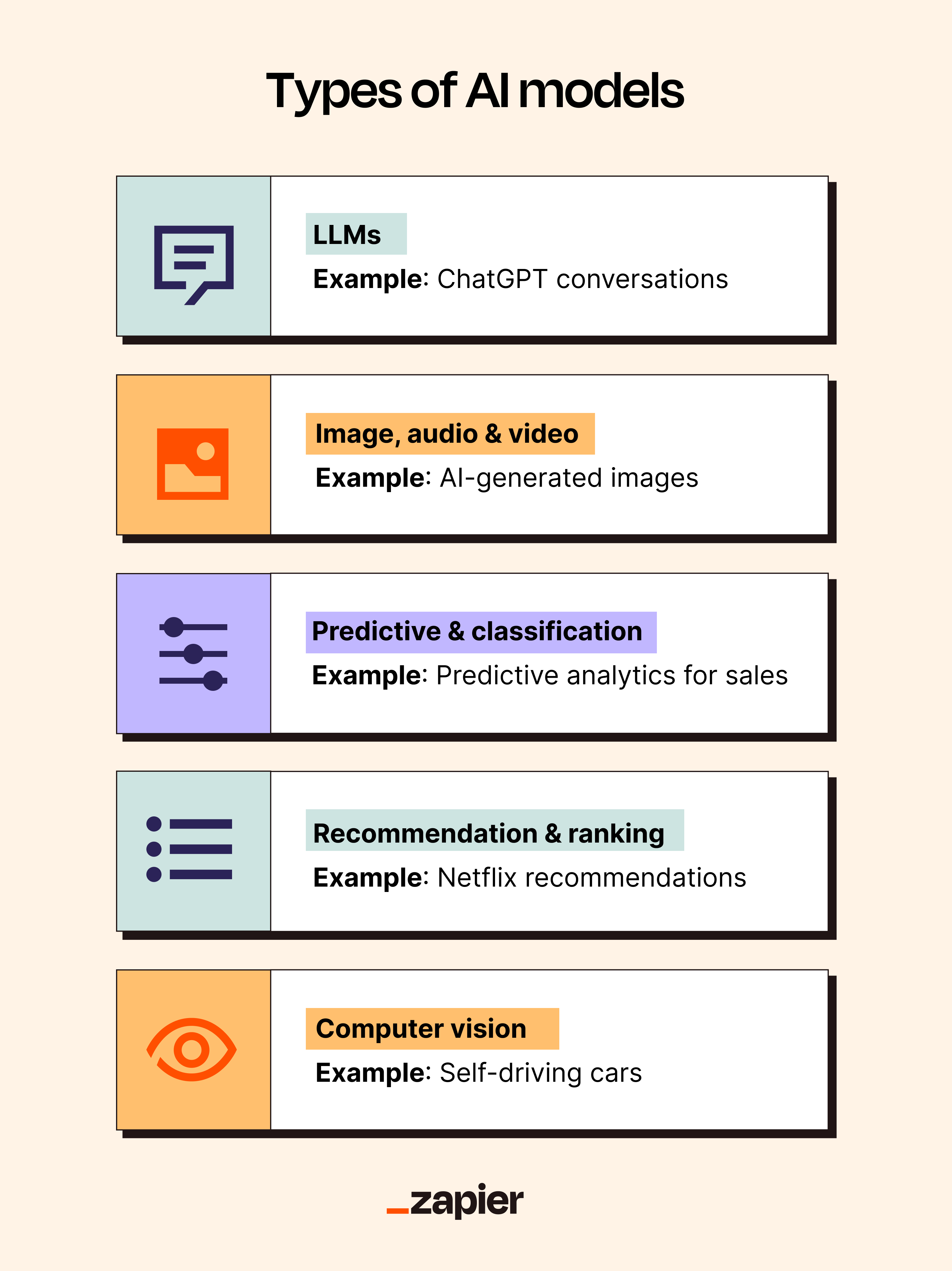

Types of AI models at a glance

Most AI products combine multiple AI models. ChatGPT, for example, uses the GPT-5 family of models to generate text, but it also uses GPT Image 1 to create images and other models to help decide how to handle each request.

Really, when it comes down to it, AI models are trained on data to make predictions, classifications, or decisions. If there's some kind of training phase, the chances are the algorithm counts as AI—or at least can be described that way.

| Type of AI model | Description | Examples |
|---|---|---|
| Large language models (LLMs) | Power modern chatbots and AI assistants; variants include multimodal models, coding models, small models, and reasoning models | GPT models, Claude models (Haiku, Sonnet, Opus), Google Gemini models |
| Image, audio, and video generation models | Generate images, audio, or video from text and/or image inputs | GPT Image 1, Google Nano Banana, OpenAI Sora 2 |
| Predictive and classification models | Traditional ML models used to make predictions or categorize inputs | Spam filters, fraud detection models, demand forecasting, logistics planning systems |
| Recommendation and ranking models | Learn from behavioral data to predict what a user will want next | Netflix/YouTube recommendations, Spotify Discover Weekly, Amazon product suggestions, social media feeds |
| Computer vision models | Allow machines to perceive and interpret images or real-world environments | Models for robotics, autonomous vehicles, image recognition systems |
| Medical diagnostic models | Trained on medical datasets (X-rays, MRIs, clinical data) to help diagnose conditions | Radiology diagnostic models, imaging-based classifiers |
| Agentic/tool-use models | LLM-based systems capable of multi-step reasoning, taking actions, and using external tools to accomplish goals | OpenAI Computer-Using Agent |
| Speech-to-text models | Convert spoken audio to text; greatly improved with recent LLM techniques | GPT-4o Transcribe |

Large language models

Large language models (LLMs) are one of the most important types of AI models right now. They're the AI models that power ChatGPT, Google's AI search, and pretty much every modern AI chatbot. If someone is talking about AI models, there's a good chance they mean LLMs.

LLMs use something called the transformer architecture, which was one of the biggest breakthroughs of the past decade. I explained it a bit in this article on how ChatGPT works.

At their most basic, LLMs are trained on massive corpora of text so that they can predict the next bit of text in a sequence. They've read an enormous number of books, so when they see "It was a dark and…" they know the next bit of text is probably "stormy night." Of course, they're also trained on countless other data sources, like the open internet, scientific papers, movie scripts, private data, and anything else you can think of.
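If you want to see the "predict what comes next" idea in code, here's a deliberately tiny sketch in Python. It's a bigram counter, not a transformer: it only looks at the single previous word and picks whichever word most often followed it in its (made-up) training text. Real LLMs use billions of learned parameters and far more context, but the basic pattern of learning from text and then predicting the next piece is the same. The function names and the toy corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the word that most often followed `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# A hypothetical, very small "training set".
corpus = (
    "it was a dark and stormy night . "
    "the night was dark and stormy . "
    "it was a dark and quiet night ."
)
model = train_bigrams(corpus)

print(predict_next(model, "and"))   # stormy
print(predict_next(model, "dark"))  # and
```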

Things get more complicated because LLMs break text into tokens and represent each one as a vector in a multidimensional space that encodes its meaning, so they're a lot more powerful than a simple autocomplete tool. They're able to understand from context whether the follow-up to "Apple" should be "pie," "iPad," "stock price," "bottom jeans," or anything in between.
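Here's a rough illustration of how meaning-as-vectors lets context steer a prediction. The three-dimensional vectors below are hand-picked and hypothetical (real models learn vectors with hundreds or thousands of dimensions), and averaging the context words and comparing with cosine similarity is a big simplification of what a transformer actually does. Still, it shows why "apple" next to baking words lands closer to "pie" than to "iPad."

```python
import math

# Hypothetical "meaning" vectors with three dimensions: [food, tech, finance].
EMBEDDINGS = {
    "apple":       [0.4, 0.4, 0.3],  # ambiguous on its own
    "pie":         [0.9, 0.0, 0.1],
    "ipad":        [0.0, 0.9, 0.2],
    "stock price": [0.0, 0.3, 0.9],
    "baking":      [0.8, 0.1, 0.0],
    "oven":        [0.7, 0.2, 0.0],
    "tablet":      [0.0, 0.9, 0.1],
    "investor":    [0.0, 0.2, 0.9],
}


def cosine(a, b):
    """Cosine similarity: close to 1 when two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def context_vector(words):
    """Average the vectors of the surrounding words into one context vector."""
    vectors = [EMBEDDINGS[w] for w in words]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def best_follow_up(context_words, candidates):
    """Pick the candidate whose vector is most similar to the context."""
    ctx = context_vector(context_words)
    return max(candidates, key=lambda c: cosine(ctx, EMBEDDINGS[c]))


options = ["pie", "ipad", "stock price"]
print(best_follow_up(["apple", "baking", "oven"], options))  # pie
print(best_follow_up(["apple", "tablet"], options))          # ipad
print(best_follow_up(["apple", "investor"], options))        # stock price
```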

Of course, ChatGPT isn't just regurgitating the most likely text from its training data. There's additional training, lots of instructions, and whole extra algorithmic layers built in so that it can operate as a useful tool. All those trappings obscure it, but underneath, the LLM is still trying to predict what text should come next.

Types of AI language models

LLMs are now a huge category of AI models. Some of the related models are:

Large multimodal models (LMMs): LMMs, or multimodal LLMs, are also trained on other kinds of data, like audio and images.

Coding models: Computer code is just text with a strict syntax and structure. There are lots of LLMs trained specifically to write it.

Small language models (SLMs): Small language models are like LLMs but smaller and less powerful.

Reasoning models: These are LLMs that have been trained to use multi-step reasoning to work through harder problems and give better answers.

LLM examples

There are hundreds of major LLMs now. I compiled a list of some of the best LLMs, but if you were to only know three, these are the ones:

OpenAI's GPT models: GPT-3 kicked off the latest AI boom. GPT-5 is the latest generation.

Anthropic's Claude models: Anthropic is one of OpenAI's biggest competitors. Its models have names like Haiku, Sonnet, and Opus.

Google's Gemini models: Despite developing some of the most important concepts in AI, Google was a bit slow off the mark with actually releasing usable LLMs. Over the past two years, it's fixed that with Gemini.

Image generation models and video generation models

While LLMs generate text, image and video models generate visual content. For the sake of clarity, I'm going to focus on image models, but video models are based on the same ideas.

Most image models work in one of three ways:

They can take a text prompt and generate an image

They can take an image prompt and generate an image

They can take both a text and an image prompt and generate an image

In all cases, some combination of text and images is your input, and an image is the output.

Like all AI models, image and video models are trained on massive quantities of relevant data. For image models, we're talking about billions upon billions of images. For video models, it's millions of hours of footage. Both text and images are mapped into a shared multidimensional space, which allows the model to learn visual concepts and how they relate to language.

To actually generate the resulting image, these models typically use a technique called diffusion: they start with a field of randomly generated noise and then remove that noise over many steps, gradually shaping it into an image that matches the prompt. There are other ways to go about it too.

Types of image and video generation models

Here are some of the related kinds of models:

Video models: Instead of still images, these generate video and sometimes even audio. The techniques are similar, though with a great deal of additional complexity.

Autoregressive transformer models: Rather than refining noise like diffusion models, these generate an image piece by piece, much as an LLM generates text one token at a time. The technique isn't yet as popular as diffusion and is a lot slower.

Audio models: These models generate audio using similar techniques.

Examples of image and video generation models

While there are fewer visual generative models than there are LLMs, it's still a rapidly growing category. Here are the top ones to know right now:

OpenAI's GPT Image 1: OpenAI's DALL·E 2 was the first wildly successful image model. GPT Image 1 is the latest model in that line.

Google's Nano Banana: Despite the fun name, Google's image model is one of the best around.

OpenAI's Sora 2: A state-of-the-art video model that also generates accompanying audio.

Predictive models and classification models

While generative AI models like LLMs and image models dominate the headlines, they aren't the most widely used AI models. Predictive and classification models based on traditional machine learning (ML) techniques are far more common.

These models quietly power everything from your email spam filter and your bank's fraud detection systems to global logistics planning, supply-chain optimization, and demand forecasting. Right now, plenty of cargo ships are headed to a particular port because an ML algorithm suggested it.

These models are trained to predict or classify based on their training data. Depending on their purpose, techniques like linear or logistic regression, decision trees, and random forests are used to take input data and make a prediction or decision. For example, for every email you receive, an ML model is asked to decide whether or not it's spam.
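Here's what logistic regression, one of the techniques mentioned above, might look like for a toy spam filter in Python. The three features (counts of "free," "winner," and exclamation marks) and the five training emails are invented for illustration; a production spam filter would use far more data, much richer features, and an ML library rather than a hand-rolled training loop. The model learns one weight per feature, and the sigmoid function turns the weighted sum into a probability that the email is spam.

```python
import math


def features(email):
    """Turn an email into a few hypothetical numeric features."""
    text = email.lower()
    return [
        1.0,                       # bias term
        float(text.count("free")),
        float(text.count("winner")),
        float(text.count("!")),
    ]


def sigmoid(z):
    """Squash any number into the range (0, 1) without overflowing."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def spam_probability(weights, email):
    x = features(email)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)))


def train(emails, labels, lr=0.1, epochs=500):
    """Fit logistic-regression weights with per-example (stochastic) gradient descent."""
    weights = [0.0] * len(features(emails[0]))
    for _ in range(epochs):
        for email, label in zip(emails, labels):
            x = features(email)
            error = spam_probability(weights, email) - label  # gradient of log loss w.r.t. the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights


# Hypothetical labeled training data: 1 = spam, 0 = not spam.
emails = [
    "You are a WINNER! Claim your FREE prize now!!!",
    "Free free free offer, act now!",
    "Meeting moved to 3pm, see agenda attached",
    "Can you review my pull request today?",
    "Lunch tomorrow?",
]
labels = [1, 1, 0, 0, 0]
weights = train(emails, labels)

print(spam_probability(weights, "Free prize for our WINNER!!! Claim your free gift now!") > 0.5)  # True
print(spam_probability(weights, "Notes from today's meeting") > 0.5)                             # False
```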

Recommendation models and ranking models

Recommendation and ranking models are another widely used class of AI models that most people interact with every day, often without realizing it. Rather than generating new content, these models decide what to show you, and in what order.

Recommendation models learn from large amounts of historical data—what people click, watch, buy, skip, or ignore—to predict what you're most likely to engage with next. Ranking models take those predictions and sort the available options so that the most relevant suggestions appear first.

This should sound pretty familiar: it's how Netflix and YouTube recommend what you watch next, how Spotify creates its Discover Weekly playlists, how Amazon populates its suggested products boxes, and how news feeds and social media timelines get filled.

Recommendation and ranking models often rely on traditional machine learning techniques alongside newer deep learning approaches for training models on massive quantities of data. Before LLMs took over the spotlight, these big data models were the ones getting headlines.
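A bare-bones version of the recommend-then-rank idea fits in a few lines of Python. This is a simple form of user-based collaborative filtering over hypothetical viewing histories: other users who share more of your history count for more, the shows they watched that you haven't become candidates, and a final sort turns those scores into a ranked list. Real systems at Netflix or Spotify are vastly more sophisticated, so treat this purely as an illustration of the two steps.

```python
from collections import Counter

# Hypothetical viewing histories: user -> set of shows they watched.
HISTORY = {
    "ana":   {"Dark", "Stranger Things", "Black Mirror"},
    "ben":   {"Dark", "Black Mirror", "Severance"},
    "chloe": {"Stranger Things", "The Crown"},
    "dev":   {"Dark", "Severance"},
}


def recommend(user, history, top_n=3):
    """Score unseen shows using similar users' histories, then rank them."""
    seen = history[user]
    scores = Counter()

    # Recommendation step: predict how much the user will like each unseen show.
    for other, items in history.items():
        if other == user:
            continue
        overlap = len(seen & items)  # how similar this user's taste is
        if overlap == 0:
            continue
        for item in items - seen:    # only suggest shows the user hasn't watched
            scores[item] += overlap

    # Ranking step: highest score first, ties broken alphabetically.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:top_n]]


print(recommend("dev", HISTORY))    # ['Black Mirror', 'Stranger Things']
print(recommend("chloe", HISTORY))  # ['Black Mirror', 'Dark']
```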

Other types of AI models

This has obviously been far from an exhaustive exploration of AI models, and there are a few other types I haven't covered in detail but want to highlight:

Computer vision models: These AI models allow computers to see and assess the real world. They're incredibly important in fields like robotics and autonomous vehicles.

Medical diagnostic models: Some AI models are trained on medical data to help make diagnoses, like assessing X-rays and MRIs. They're becoming more popular and effective, but deploying them at scale raises legal and liability issues.

Agentic and tool-use models: Based on LLMs, these models are able to pursue multi-step goals and decide what tools to use and when. For example, you can ask one to "find me the best TV I can buy locally," and it should search the web, use your location to find a nearby store, and use a browser to find a TV that's in stock.

Speech-to-text models: These AI models transcribe spoken words or recorded audio to text; they've significantly improved as a result of the developments in LLMs.
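The agentic loop described above for the TV example (pick a tool, use it, look at the result, decide again) can be sketched in Python. Everything here is hypothetical: the three tools return canned data, and `fake_model` is a hard-coded stand-in for the LLM that, in a real agent, would read the goal and the results so far and choose the next action itself. The point is the shape of the loop, not the decision-making.

```python
# Hypothetical tools with canned results. A real agent would call a search API,
# a maps service, a browser, and so on.
def search_web(query):
    return ["Acme OLED 55", "Budget LED 50"]  # pretend these are ranked best-first


def find_nearby_store(location):
    return f"Electronics store near {location}"


def check_stock(store, product):
    return product == "Acme OLED 55"


TOOLS = {
    "search_web": search_web,
    "find_nearby_store": find_nearby_store,
    "check_stock": check_stock,
}


def fake_model(goal, memory):
    """Stand-in for an LLM: returns (tool_name, args) or ("finish", answer)."""
    if "candidates" not in memory:
        return "search_web", (goal,)
    if "store" not in memory:
        return "find_nearby_store", (memory["location"],)
    for product in memory["candidates"]:
        if product not in memory["stock"]:
            return "check_stock", (memory["store"], product)
    in_stock = [p for p in memory["candidates"] if memory["stock"][p]]
    return "finish", in_stock[0] if in_stock else None


def run_agent(goal, location, max_steps=10):
    """Let the 'model' choose tools until it finishes or runs out of steps."""
    memory = {"location": location, "stock": {}}
    for _ in range(max_steps):
        action, args = fake_model(goal, memory)
        if action == "finish":
            return args
        result = TOOLS[action](*args)
        # Record each tool's result so the next decision can use it.
        if action == "search_web":
            memory["candidates"] = result
        elif action == "find_nearby_store":
            memory["store"] = result
        elif action == "check_stock":
            memory["stock"][args[1]] = result
    return None


print(run_agent("best TV I can buy locally", "Springfield"))  # Acme OLED 55
```

The `max_steps` cap is a simple safeguard: a real model can get stuck repeating actions, so agent loops usually stop after a fixed number of steps.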

A few more resources to understand AI models

I've tried to keep things as broadly accessible as possible here, so I've had to skimp on a lot of the fun technical details. If you want to look deeper into what AI is and how it works, here are some resources that I rely on:

IBM has a great website with moderately technical explainers on major AI concepts.

Similarly, Google's Machine Learning Glossary is a great resource.

Simon Willison is one of the leading writers on LLMs.

DeepLearning.AI is a membership site, but the courses are very good if you're interested in learning to use AI.

The Illustrated Transformer is the best accessible explanation of transformers I've seen.

Or, if you're more concerned with using AI and less concerned about the technical side of how it works, the Zapier blog has hundreds of articles on AI apps, AI automation, and how to put AI to work in your day-to-day.
