A/B testing. The phrase itself sounds complicated and time-consuming—something that can really wait for another day. But it doesn't need to be that way. Modern email marketing apps are often equipped with features that make A/B testing (also called split testing) as simple as scheduling a campaign or customizing a template.

Though there are all sorts of A/B tests you can run—mainly on the pages of your website—this post focuses on tweaking what ends up in your customers' inboxes. Let's examine the basics of A/B testing: how it works, guidelines to follow, and email characteristics to test.

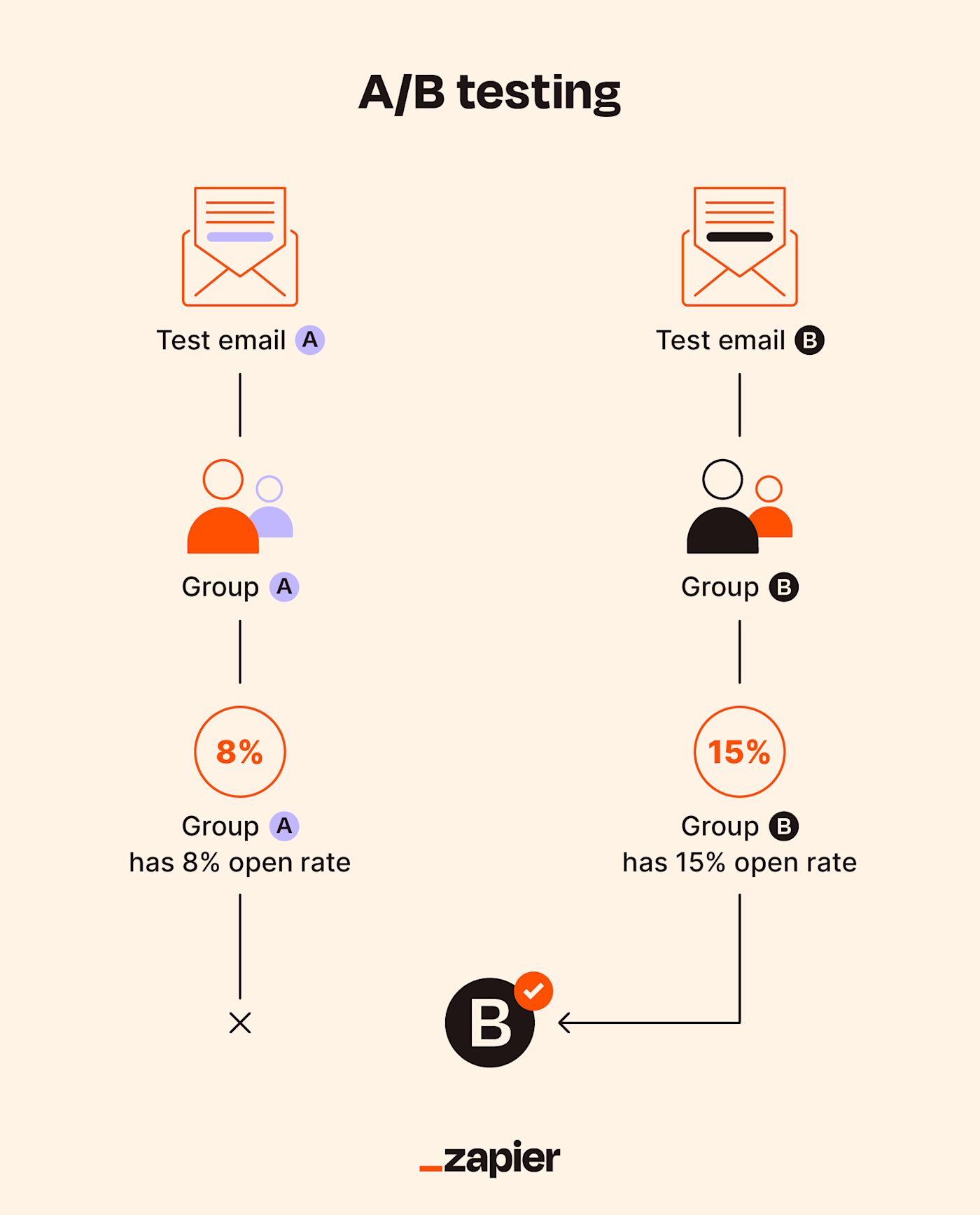

What is A/B testing?

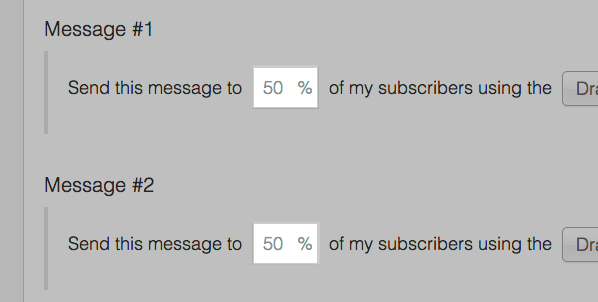

A/B testing email campaigns involves sending two different versions of an email to two randomly segmented halves of the same list to measure how one change affects performance. This change is usually in the subject line but can also be in the body of the email.

Here's an example.

Pretend you own a restaurant—the Chatterbox Café—and after a few months of flat revenue, you're tempted to change up your menu to see if it has an impact on sales. You create a new menu where instead of just text, you include five pictures of your highest-priced items. That's the only change—everything else about the menu stays the same. You then split your café down the middle—patrons on one side get the new menu, and everyone else gets the old menu.

After two days, you tally up the revenue and compare the two sides, pleasantly finding that the menus with the five pictures increased the average table ticket by 15%. You have a winner. Next stop: the copy machine.

Benefits of A/B testing

The main benefit of A/B testing is knowledge: you learn which specific element of your email works and to what degree it works. Consider this:

If you change multiple elements across multiple emails, you can't isolate which changes account for which improvements (or reductions) in performance.

If you change one element but don't split test by comparing it to a control group (randomized recipients from the same list receiving an unchanged email), then you won't know whether a shift in performance can really be attributed to the change in your email.

A/B testing allows you to identify specific improvements, so you can apply them across the board, learn from them, and continue to hone your campaigns.

Tips for setting up an A/B email test

Let's start with an honest admission: before I dug into the A/B testing features provided by email marketing software, I fully expected I'd be manually setting up, executing, and analyzing A/B tests. In reality, A/B testing just takes applying an extra bit of text to your email body copy, using an extra subject line field, or setting up a second email and marking it as variant "B." Once you do that, your email marketing service takes care of the rest.

But setting up the campaign in your platform of choice is the easy part. Here's how to figure out what to change, what to measure, and how to conceptualize your test to get the best possible results.

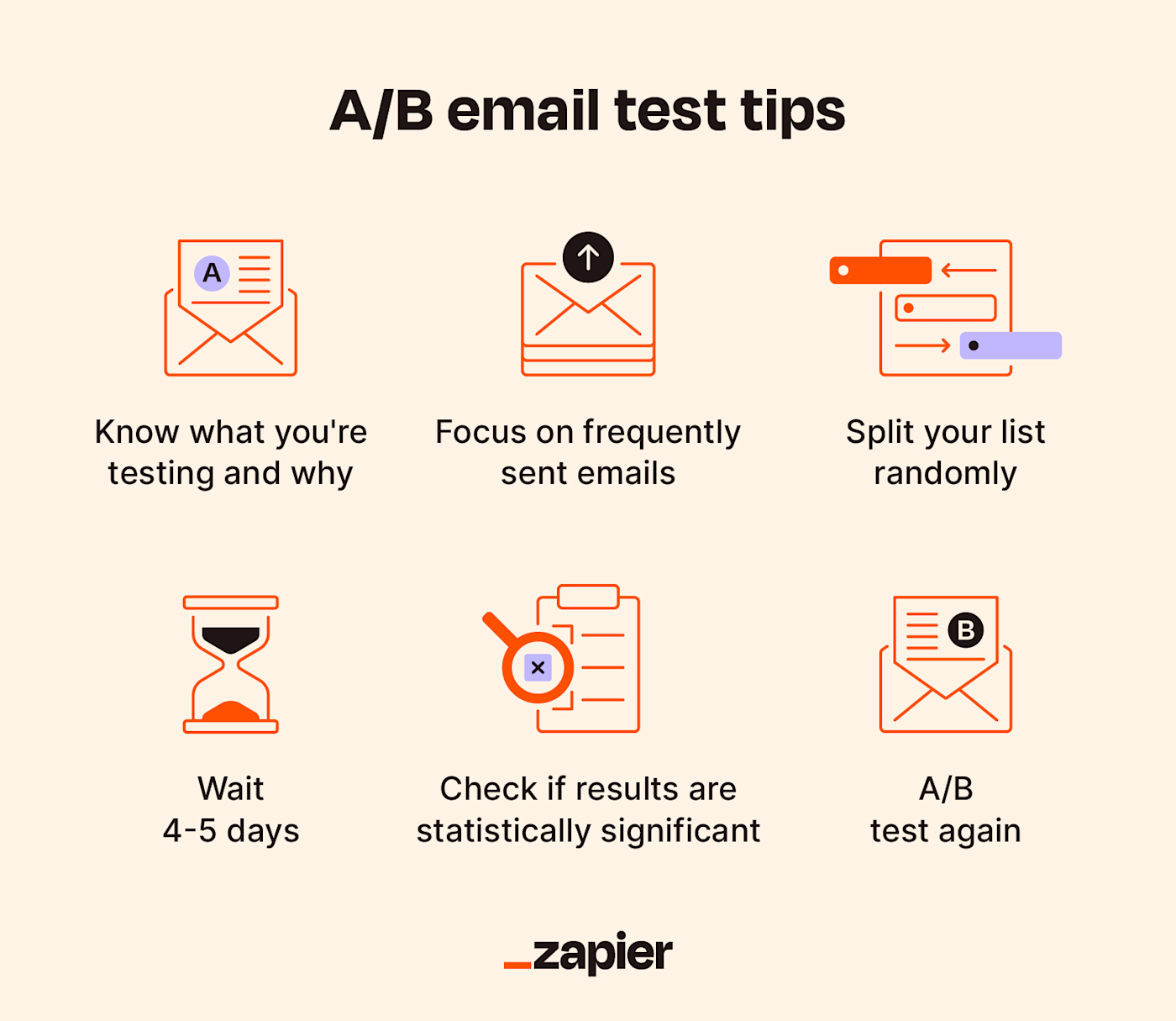

Know what you're testing and why

What are you trying to improve about your marketing emails? Ask yourself that simple question before proceeding.

At Zapier, we'd like to increase the click-through rate on our blog newsletter emails and amp up the open and click-through rates on our new user onboarding emails. If we hit those goals, we'd likely see more unique pageviews on our blog and a higher level of engagement with our app. So, in our case:

What: Increase open and click-through rates

Why: To increase engagement

Focus on frequently sent emails

You want to A/B test your "Happy Holidays!" email? Think again: you'll be waiting 12 months until you can put your results to good use. A/B testing works best for perfecting frequently sent emails—think:

Blog newsletters

New user onboarding emails

Error alerts

Automated follow-up funnels

We send millions of those emails weekly; if we can increase the open or click rate by just 1%, that's a major win. The key challenge with frequent emails is making sure your sample sizes are large enough to support the inference you're trying to make. To that end, Evan Miller offers a helpful calculator to find your minimum sample size per group.
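If you'd like a rough sense of the numbers before opening a calculator, here's a minimal Python sketch of one standard sample-size approximation for comparing two rates. The function name, the 5% significance level, and the 80% power default are assumptions made for illustration, and online calculators may use slightly different formulas, so expect small differences in the results.

```python
from math import ceil, sqrt
from statistics import NormalDist


def min_sample_size_per_group(baseline_rate, min_detectable_lift,
                              alpha=0.05, power=0.80):
    """Approximate recipients needed in EACH group (two-sided test).

    baseline_rate: current open or click rate, e.g. 0.20 for 20%
    min_detectable_lift: smallest absolute change worth detecting,
        e.g. 0.01 for one percentage point
    alpha and power are illustrative defaults, not values from this post.
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical example: 20% open rate, hoping to detect a 1-point change
needed = min_sample_size_per_group(0.20, 0.01)
print(f"Recipients needed per group: {needed:,}")
```

With those example inputs, the answer lands at roughly 25,600 recipients per group. The smaller the change you want to detect, the more recipients you need.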

Split your list randomly

If your email marketing software doesn't offer A/B testing, or you want to spin up a manual test on your own, remember to split your subscribers randomly. One way to do this is to download your list as a CSV, add a column of random numbers in Excel (the RAND function works), and sort by that column. You could also arrange the list in alphabetical order and slice it up from there, but that isn't truly random, so treat it as a fallback. Luckily, most email marketing apps will handle this step for you.
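For a manual test, a few lines of Python can do the shuffling instead of a spreadsheet. This is a minimal sketch: the `split_ab` function and the sample addresses are hypothetical, and in practice you'd load the addresses from your exported CSV.

```python
import random


def split_ab(subscribers, seed=None):
    """Shuffle a list of subscribers and cut it into two halves.

    Passing a seed makes the split repeatable, which helps if you need
    to reconstruct later who received which version.
    """
    shuffled = list(subscribers)          # copy, so the original is untouched
    random.Random(seed).shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical addresses, for illustration only
emails = [f"subscriber{i}@example.com" for i in range(10)]
group_a, group_b = split_ab(emails, seed=42)
print(len(group_a), len(group_b))
```

Every subscriber ends up in exactly one group, and neither group depends on signup date, name, or any other ordering in the original file.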

Wait 4-5 days

In our experience, a single email's effect declines sharply over time, petering out around day four or five after it's sent. If an email has had no effect after five days, it's unlikely to ever make a meaningful difference. Waiting four to five days before calling the test is a good rule; check any earlier, and you might undermine your experiment. (Zapier data scientist Christopher Peters recommends waiting a "representative time": however long you'd be willing to wait for results.)

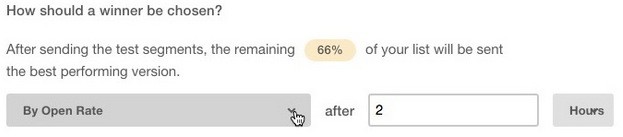

Check if results are statistically significant

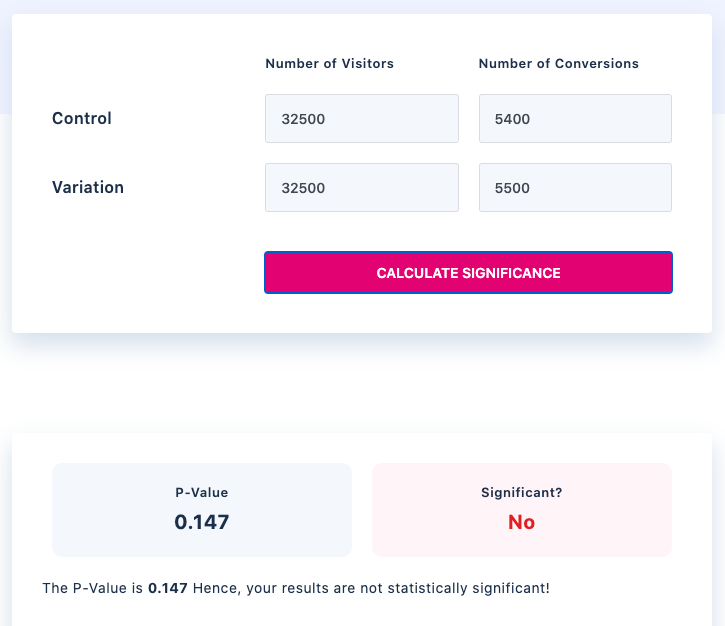

You dispatch your A/B test to your 65,000 subscribers, and it comes back that 5,400 of them opened the "A" version and 5,500 of them opened the "B" version. B's the winner, right? Not so fast. Just as news reporters have a tough time calling a political race on election night, you too should be slow to call a winner in your A/B test. An easy way to check if your results are statistically significant is to use a free calculator like the one from Visual Website Optimizer.
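To see why 100 extra opens isn't enough to call it, here's a minimal sketch of a two-proportion z-test, one common way to run this kind of check (not necessarily the method any particular calculator uses). It assumes the 65,000 subscribers were split evenly, 32,500 per version; the function name is made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Return (z, two-sided p-value) for the difference in open rates."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled rate: the open rate if both versions performed identically
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Assumes an even split of the 65,000-subscriber list
z, p_value = two_proportion_z_test(5400, 32500, 5500, 32500)
print(f"z = {z:.2f}, p-value = {p_value:.2f}")
```

Under that assumption, the p-value comes out at about 0.29, far above the conventional 0.05 cutoff. A gap this small could easily be chance, so B hasn't earned the win yet.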

A/B test again

Customer behavior never stops changing, and neither should your A/B tests. If you've increased your click-through rate, challenge yourself to spike your open rate. If you bumped your open rate with a better subject line, consider changing up the message preview. If your message preview is perfected, change up the sender.

Out of testing ideas? That's what we'll cover next.

10 things to A/B test for

Now that you know how to A/B test, it's time to explore the possibilities. You can optimize just about every element of a marketing email with real user data through split testing. Here are some of the most useful elements to turn your attention to.

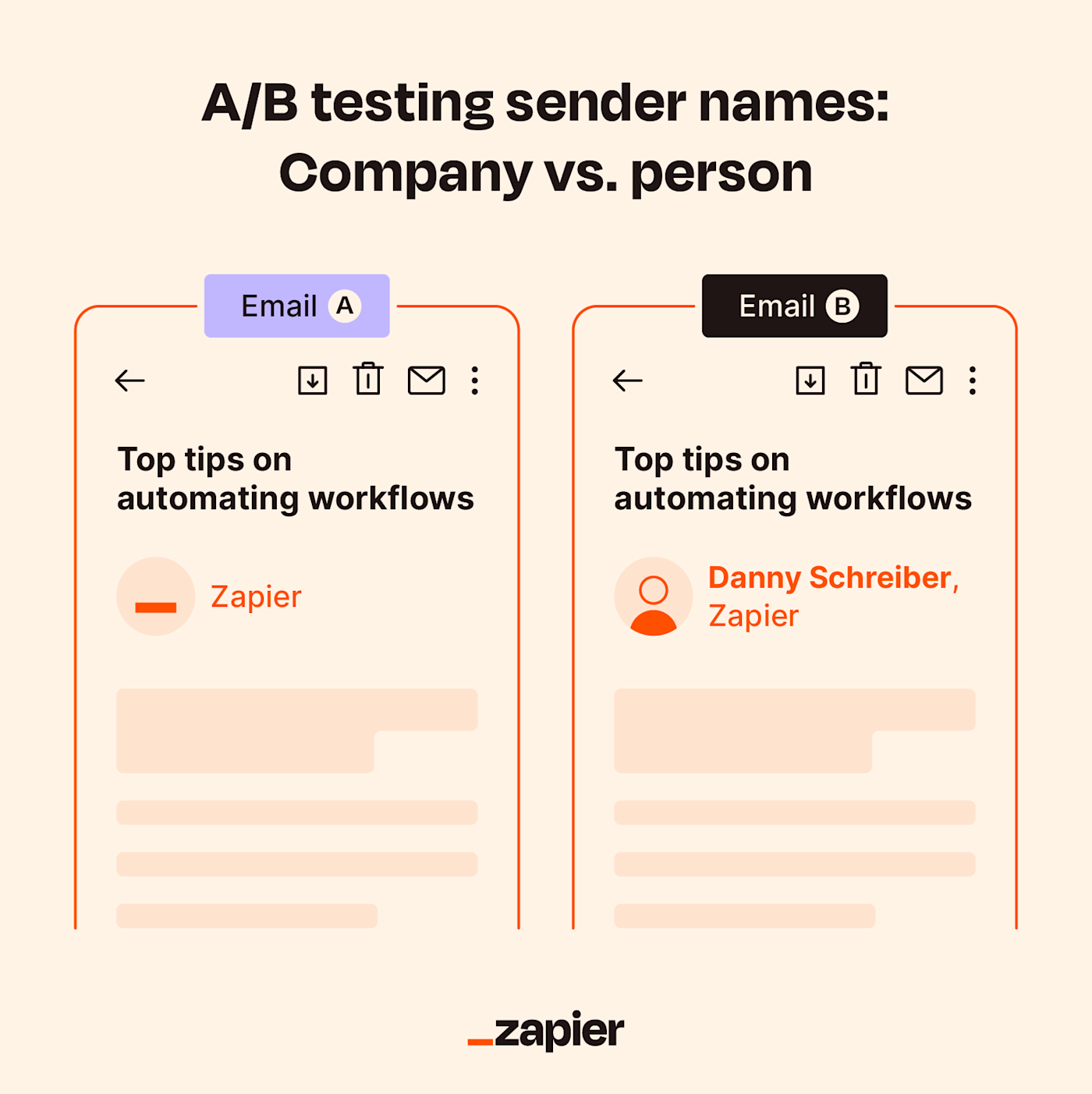

1. From name / sender name

Who would you rather get an email from: a company or a person?

Zapier saw a higher email open rate after sending a campaign using a team member's name as the from name—"Deb at Zapier"—versus the company name alone. That may or may not prove to be true for your list, so here's what an A/B test of the from name could look like for you:

Company: Zapier

Company newsletter: Zapier Blog

Company department: Zapier Content Team

Team member full name: Deb Tennen

Team member full name and title: Deb Tennen, Managing Editor

Team member name and company: Deb Tennen (Zapier)

Team member first name only: Deb

Team member first name and company: Deb at Zapier

2. Subject line

Does this seem like a good subject line length?

According to an oft-cited Marketo study, those 47 characters are right around the ideal length of 41. But hitting a character count is just a small part of the art of subject line composition. Since the attitudes and behaviors of your particular list may vary, consider A/B testing for these factors:

Length: "Longer email subject lines work better than shorter, here's why" vs. "Longer email subject lines win"

Simple vs. detailed: "Get to the point" vs. "See the A/B test that proves once and for all shorter emails are better"

Answer vs. question: "Red call to action buttons work better than blue buttons" vs. "Which works better: red or blue call to action buttons?"

Casual vs. urgent: "Save 50% off your subscription" vs. "6 hours left to save 50%"

Negative vs. positive: "10 habits that kill productivity" vs. "10 ways to boost your productivity"

Numbers: "9 ways to delight your customers" vs. "How to delight your customers"

Punctuation: "Thanks for signing up" vs. "Thanks for signing up!"

Symbols and emoji: "How to delight your customers" vs. "How to delight your customers 😊"

Company name: "Five new features for you" vs. "Five new Zapier features for you"

Team member name: "A message from our CEO" vs. "A message from our CEO, Wade Foster"

Customer name: "Welcome to Zapier!" vs. "Welcome to Zapier, Lloyd Christmas!"

Capitalization: "How Zapier writes copy that converts" vs. "How Zapier Writes Copy That Converts" vs. "how zapier writes copy that converts"

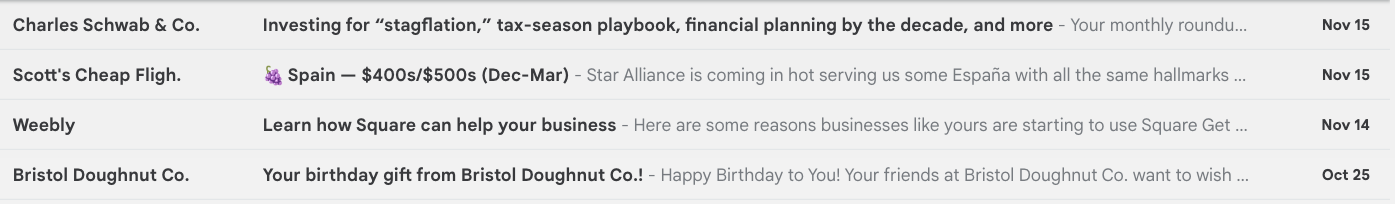

3. Message preview / preheader

The message preview is an easy-to-overlook part of an email campaign, one that I'll admit we've missed making the most of on several occasions. The little snippet of text that shows in your inbox—also called the email preheader—is often customizable using your email marketing client.

A/B testing this could be cumbersome since it might require manual testing, but it will be worth the effort. Think to yourself: how often do you read that little piece of copy to help decide if a new newsletter is worth reading or not?

Here are a few variations you can test:

Copy from the first line of the email: "Hello there, welcome to Zapier! You're joining thousands of people who use our tool to automate time-consuming tasks…"

Short summary: "Welcome to Zapier! I'm excited to show you how you can start automating your work."

Call to action: "Here are 101 ways you can start automating your work."

4. Plain text

Sometimes simple is refreshing. That can be the case when you get an email in plain-text format rather than a flashy, over-designed newsletter. The thinking here is that plain-text emails feel like something you would get from a friend. They can also be easier to load and display for some users, reducing the risk that important links or information might not show up right.

In either case, it can absolutely be worth testing.

5. Subject line or salutation personalization

Hey reader! If I knew your name, I'd have inserted it there. Unfortunately, I don't, which is one reason I've been cautious about personalizing emails in the past: not all subscribers supply their first and last names.

Whether you've got the goods on your subscribers' names or not, here are some salutation structures you can try out:

Recipient's first and last name: "Welcome to the Zapier Premium Plan, Daniel Rose!"

First name only: "Hey Daniel"

Persona or role: "Why marketers read less"

Terms that resonate with your audience: "The automation solution for explosive efficiency"

"You": "How you can be more productive today" vs. "How to be more productive today"

6. Body copy

"Cut the length of your email copy in half. Now cut it in half again."

That's Morgan Brown's top-voted reply on a GrowthHackers thread soliciting marketers' favorite pieces of advice. It shouldn't be a surprise. It's a safe bet that Brown's users, like the rest of us, don't often care for long emails. In the Automata marketing email above, there are just a few short sentences of copy. None of them takes up more than two lines, and the CTA at the end stands out as the shortest line with visual pop from the hyperlink and all-caps coupon code.

So what else can you do to your email body copy beyond length to start A/B testing it? How about formatting shifts like:

Shorter versus longer

Bullet points

Numbered list

Question and answer format

Only one sentence and a call to action

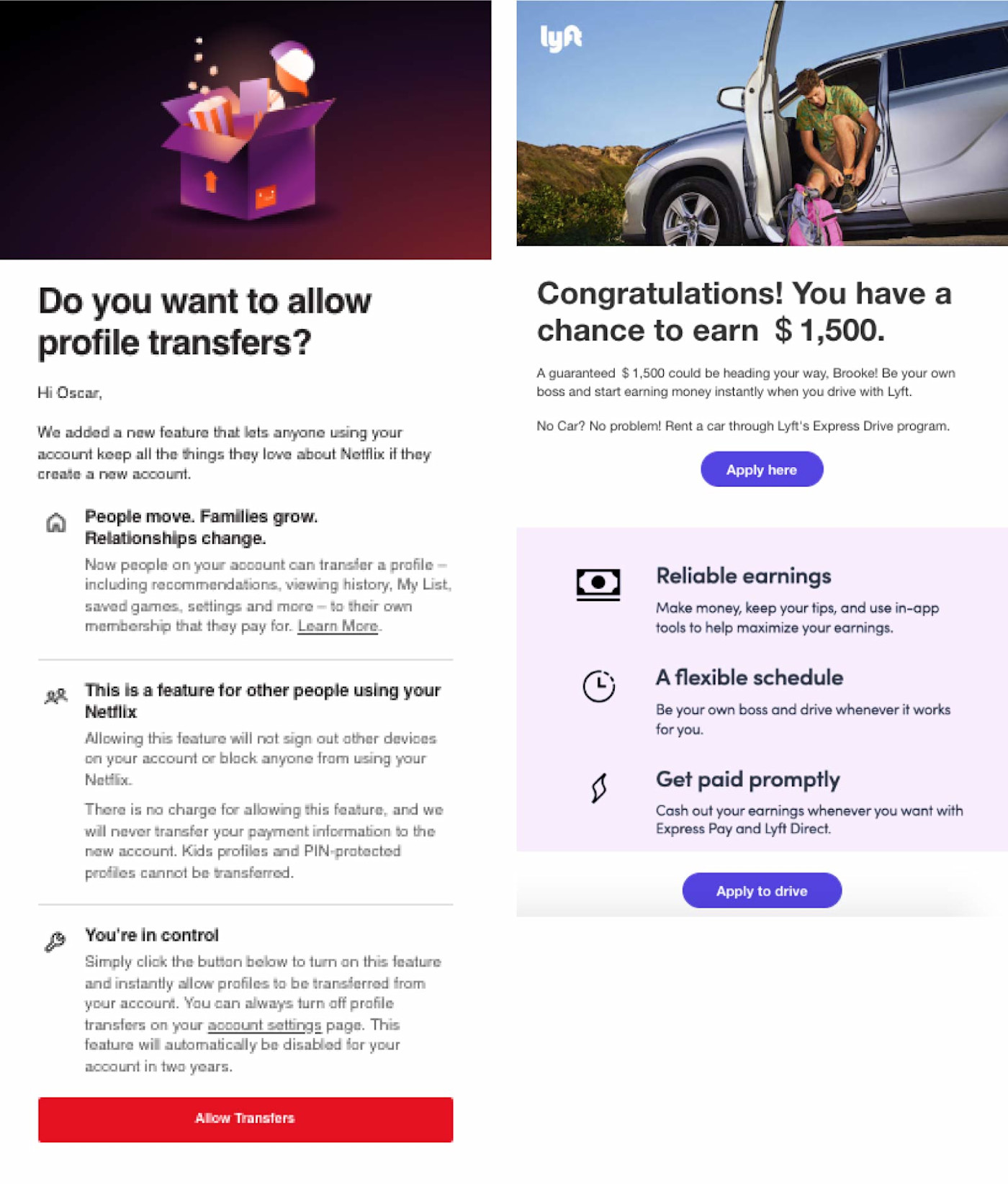

7. Images

Which of the above emails draws you in more?

These two examples of marketing emails are theoretically fairly similar, with three main points and simple icons in the body and a header image at the top. The Netflix email on the left, however, includes a fairly generic illustrated header image and supplements the body icons with fairly extensive text copy. The Lyft email on the right uses a stock image with a person and includes very minimal text copy and slightly larger body icons.

Whichever approach you take to images, be sure to use common file types like JPG or GIF to give your images the best chance of showing up in your target inboxes (and don't forget about the alt text just in case they don't). In the meantime, here are some image variations to consider testing:

One versus multiple images

Text on images

Screenshots of a video

Calls to action incorporated in the image versus calls to action separate from images

Animated GIFs

8. Design and layout

Three to four seconds. That's it. That's the length of time you have to grab a customer's attention when they open up your email. So what better way to grab it than with a design that appeals to their eye? Moreover, your design needs to be ready to be viewed on mobile, too. So testing responsive design is almost a must; larger text and clearer calls to action are good places to start.

9. Call to action (CTA)

Try Zapier now! Or… Try Zapier now! Or, maybe… Start automating your work!

Which one do you want to click? That's your goal here—see how many different ways you can write a call to action. Not only write it, but present it, too: should it be a text link or a button? Should it go at the top of the email, the bottom, or both? Try testing out these aspects of your CTAs:

Shorter vs. longer: "Go!" vs. "Start Automating Your Web Apps!"

Capitalization: "Try the new feature" vs. "Try The New Feature" vs. "try the new feature" vs. "TRY THE NEW FEATURE"

Punctuation: "Get started" vs. "Get started!"

Text formatting: "See for yourself" in plain, bold, italic, or underlined text

Size: Test the size of fonts, buttons, or both

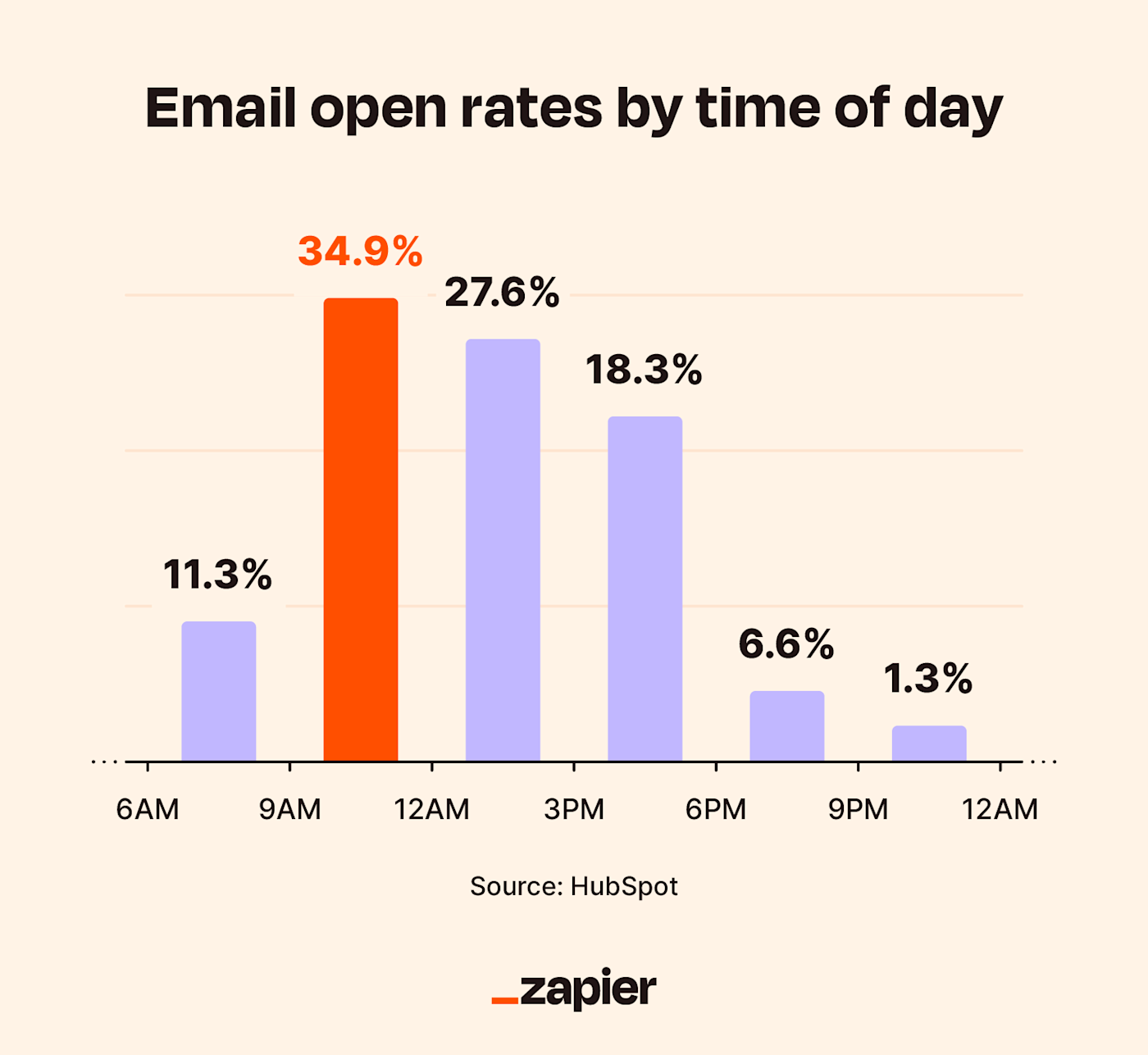

10. Delivery day and time

What if you received the Zapier blog newsletter every Sunday night at 8 p.m. EST? Would you be more or less likely to open that message and click the link to read our latest post?

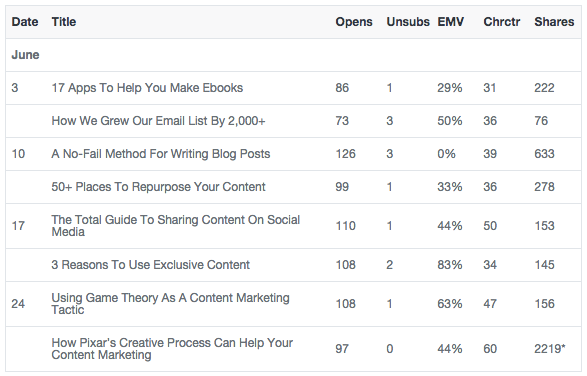

If you listen to the stats, Tuesday is not only the most popular day to send emails but also the day with the highest open and click-through rates. If you follow your gut instead, consider your own email browsing habits. When are you most likely to check in on non-essential emails? This could give you a clue into when people in your particular industry are likely to do the same.

Testing for the optimal day and time is hard—especially as online businesses cater to customers and subscribers around the globe—but the question of when it's best to send emails continues to plague marketers. The truth is, it probably varies by industry, demographic, and individual.

A/B testing for day of the week and time of day can help you get to the bottom of it for your particular list.

Next: Automate your email marketing campaigns

So you've A/B tested your email to kingdom come and know the perfect subject line, formatting, CTA, and send times. Now you're ready to take your marketing to the next level with email marketing automation that ensures the right messages go to the right contacts at the right time—with no extra effort on your part.