Shadow AI is the use of any AI tools without official approval, oversight, or governance.

I'm a big "ask for forgiveness, not permission" girl. Like the time my partner said we could discuss adopting a dog in a year, so I submitted an application that night and told him we were bringing home a dog in two weeks.

That instinct—to just go for it and deal with the fallout later—is fine when the stakes are relatively low. But when the stakes are high, that same instinct can create bigger problems than a few surprise chew toys on the living room floor.

That's exactly what's happening with shadow AI. Seventy percent of employees report working without AI policies, guidance, or clarity, leaving them to experiment in the shadows—putting security, productivity, and trust at risk.

Here's everything you need to know about shadow AI: what it is, why it happens, and ways to mitigate the risks.


What is shadow AI?

Shadow AI refers to the use of artificial intelligence tools inside an organization without official approval, oversight, or governance.

This isn't hypothetical—it's already happening. One report shows that employees are using AI three times more than executives expect, even at companies that have officially "banned" the tools. That's not just a gap—it's a liability waiting to surface.

Examples of shadow AI

Shadow AI isn't about employees going rogue; it's about employees being resourceful. Still, without the right structures in place, those shortcuts can backfire. Here are a few categories of tools where shadow AI tends to pop up:

Generative AI tools. Marketing or comms teams might use tools like ChatGPT to draft copy, brainstorm campaign ideas, or summarize reports. This creates risk because sensitive data can be pasted into public models, and the outputs may include inaccuracies or biased language.

AI chatbots and assistants. Customer support reps or HR teams lean on chatbots for quick answers to common questions. Handy, yes—but if those bots aren't connected to approved systems, they can give out outdated or incorrect information, creating confusion and, sometimes, compliance issues.

Predictive analytics software. Finance or operations teams might experiment with AI dashboards to predict revenue or model supply chain needs, which is helpful when planning out your next quarter. But without appropriate oversight, teams end up making decisions based on unvetted data and models, and sensitive financial data can flow into tools that have never passed a security review.

Creative AI tools. Design or content teams might use AI to generate images, mockups, or video snippets. The catch: many of these tools don't meet enterprise security standards, and the outputs can raise copyright or brand compliance issues.

What causes shadow AI?

Shadow AI usually pops up as a means to fill in gaps. And those gaps show up in three main ways.

Leadership lag

Nearly every company is investing in AI, yet only 1% say they've reached AI maturity. Leaders want to move carefully, which makes sense: new technology brings unknowns, and no one wants to create risks by rushing in. But while leadership is still drafting roadmaps, employees aren't waiting. They fill the void by testing tools on their own to keep work moving.

Tool sprawl

When there isn't a centralized approach, employees gravitate toward whatever AI apps feel helpful in the moment. Marketing might test out a copy generator, sales might pick up a forecasting add-on, and design might play with AI image tools. Each on its own seems harmless, but together they create a patchwork of subscriptions, overlapping functionality, and unmonitored data flows. What starts as resourcefulness can quickly snowball into security gaps, compliance risks, and a tech stack that's expensive and difficult to untangle.

Skills gap

Even when strong use cases are identified—like an AI chatbot to triage support tickets or a workflow to summarize research—most teams don't have the technical expertise or bandwidth to deploy AI securely and at scale. That bottleneck leaves employees in a tough spot: they see opportunities to save time, but they don't have the support to pursue them through official channels.

When formal resources aren't available, employees turn to tools they can access themselves.

What are the risks of shadow AI?

Shadow AI isn't just a governance headache—it carries real risks that grow bigger the longer they go unchecked. Here are some of the key concerns of shadow AI.

Security and compliance exposure

Picture this: someone pastes a customer record into ChatGPT to speed up a support email. Handy in the moment, but that single action can expose sensitive data to systems outside your security framework. Multiply that by dozens of employees experimenting on their own, and the risks escalate quickly—everything from data leaks to regulatory fines to damage to customer trust. Gartner predicts that by 2027, 40% of AI-related data breaches will come from improper use. That's a number no legal or security team wants on their plate.

Operational inefficiency

On paper, shadow AI looks scrappy and resourceful—teams finding quick fixes to move faster. In practice, it often creates duplication, ballooning "shelfware" costs, and tools that don't scale across departments. One team's chatbot, another's AI writing tool, and a third's analytics plugin all start stacking up until the tech stack looks like a junk drawer: full of useful things, but impossible to keep organized.

Bottlenecks in IT

Ironically, shadow AI makes IT's job harder, not easier. As employees bypass formal processes to adopt their own tools, IT inherits the fallout: fragmented systems, inconsistent data, and mounting security reviews. Instead of focusing on innovation or scaling official AI programs, technical teams end up firefighting—a backlog of troubleshooting requests and half-integrated tools that were never designed to work together.

Competitive disadvantage

AI isn't just about efficiency; it's a competitive lever. Companies with clear strategies are embedding AI safely into their core workflows, giving them faster execution and a stronger edge. Organizations that let shadow AI run unchecked risk the opposite: scattered experiments that never connect, slower growth, and room for competitors to leap ahead. In a race this fast, falling behind even a little can make it hard to catch up.

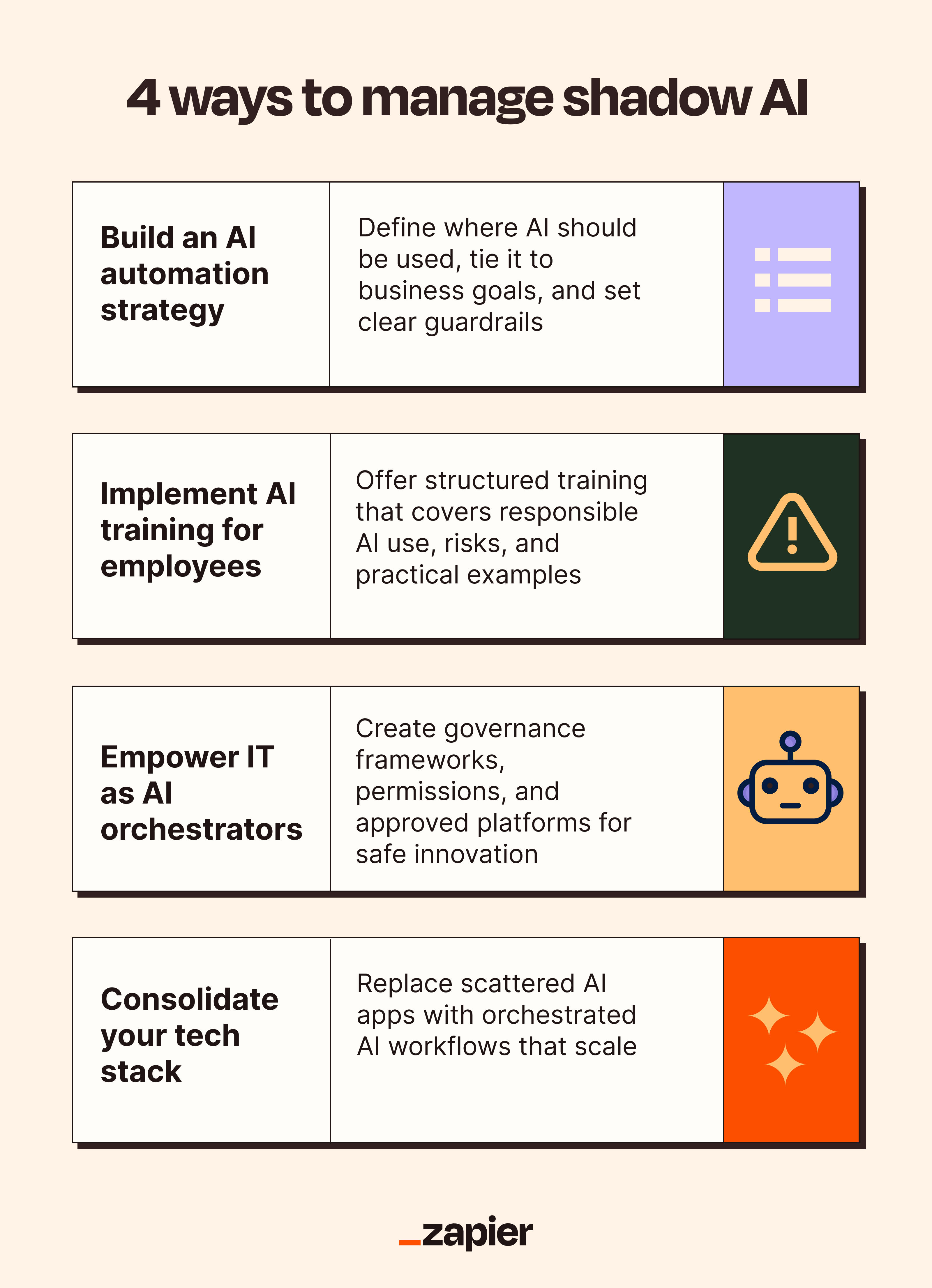

4 ways to manage the risks of shadow AI

Managing shadow AI isn't about hitting the brakes on innovation—it's about building the guardrails that let your teams move fast without creating risk. Here are key steps you can take to manage the risks of shadow AI.

1. Build an AI strategy

Policies on their own aren't enough. A true strategy defines where AI should be used, how it ties to business goals, and what guardrails are in place. Start by identifying high-impact use cases for AI and documenting them. Then give employees clear guidelines for experimenting responsibly, so AI adoption is intentional, not accidental.

At Zapier, we created a central AI enablement hub for self-serve learning, and our legal team detailed AI use guidelines for safety and clarity.

2. Implement AI training for employees

Employees want more direction, but most aren't getting it. Half say they'd like formal AI training, yet nearly half of companies offer none. Build structured programs that go beyond tool tutorials—show teams how to use AI responsibly, highlight risks like data privacy, and provide examples of effective use cases. Well-trained employees turn ad-hoc experimentation into scalable, business-aligned results.

When we rolled out AI internally at Zapier, we didn't just tell people to "use AI." We ran company-wide hackathons, built a central knowledge hub, and held regular office hours so teams could ask questions and swap best practices. Our AI playbook helped us reach almost universal adoption across the company—because when people feel supported, they use AI with confidence.

3. Empower IT as AI orchestrators

Shadow AI often grows when IT is positioned as a gatekeeper instead of a partner. Flip that script: let IT lead by creating governance frameworks, setting permissions, and providing approved platforms that give employees safe ways to innovate. This approach keeps sensitive data secure while ensuring innovation happens in the open, not in the shadows.

With Zapier, IT teams get a single platform where every team can connect AI models, apps, and workflows. This means IT teams aren't forced to say "no" to innovation. Instead, they can offer a safe way to do it. It's a win-win: employees get the tools they need, and IT gets the oversight it requires.
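To make "setting permissions" and "providing approved platforms" a bit more concrete, here's a minimal Python sketch of an allowlist check IT might run before data is sent to an AI tool. It's an illustration under stated assumptions, not a Zapier feature: the four sensitivity levels, the tool names, and the `check_request` helper are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical sensitivity levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # e.g. customer records, payment data


@dataclass(frozen=True)
class ApprovedTool:
    name: str
    max_data_class: DataClass  # most sensitive data this tool may receive


# Hypothetical allowlist maintained by IT; the tool names are placeholders.
APPROVED_TOOLS = {
    "internal-llm-gateway": ApprovedTool("internal-llm-gateway", DataClass.RESTRICTED),
    "vendor-copy-assistant": ApprovedTool("vendor-copy-assistant", DataClass.INTERNAL),
}


def check_request(tool_name: str, data_class: DataClass) -> tuple[bool, str]:
    """Return (allowed, reason) for sending data of a given class to a tool."""
    tool = APPROVED_TOOLS.get(tool_name)
    if tool is None:
        # Unknown tools get pointed at the review process instead of a bare "no".
        return False, f"{tool_name} is not on the approved list; request a review"
    if data_class > tool.max_data_class:
        return False, (
            f"{tool_name} is approved only up to {tool.max_data_class.name}, "
            f"not {data_class.name}"
        )
    return True, "allowed"


if __name__ == "__main__":
    print(check_request("vendor-copy-assistant", DataClass.PUBLIC))
    print(check_request("vendor-copy-assistant", DataClass.RESTRICTED))
    print(check_request("random-chatbot", DataClass.PUBLIC))
```

Because the levels are an `IntEnum`, the permission check is a single comparison, and a tool that isn't on the list gets a path to approval rather than a dead end, which fits the idea of IT as a partner rather than a gatekeeper.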

4. Consolidate your tech stack

A bloated toolset doesn't just cost money; it creates risk. Companies now spend more than $3,000 per employee per year on SaaS tools, much of which goes underutilized. By consolidating overlapping apps and centralizing AI capabilities into orchestrated workflows, you'll cut costs, reduce complexity, and ensure teams are working with tools that scale across the business.
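As a rough illustration of where consolidation might start, the sketch below groups a subscription inventory by capability and flags any capability covered by more than one tool. The `Subscription` fields, capability labels, and sample figures are hypothetical; a real inventory might come from finance or SSO records.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Subscription:
    tool: str
    team: str
    capability: str  # what the tool is used for, e.g. "copywriting"
    annual_cost_usd: float


def find_overlaps(subs: list[Subscription]) -> dict[str, list[Subscription]]:
    """Group subscriptions by capability; keep capabilities served by 2+ distinct tools."""
    by_capability: dict[str, list[Subscription]] = defaultdict(list)
    for sub in subs:
        by_capability[sub.capability].append(sub)
    return {
        capability: items
        for capability, items in by_capability.items()
        if len({s.tool for s in items}) > 1
    }


def overlap_spend(overlaps: dict[str, list[Subscription]]) -> float:
    """Total annual cost of every subscription in an overlapping capability."""
    return sum(s.annual_cost_usd for items in overlaps.values() for s in items)


if __name__ == "__main__":
    # Hypothetical inventory for illustration only.
    inventory = [
        Subscription("CopyToolA", "marketing", "copywriting", 1200.0),
        Subscription("CopyToolB", "sales", "copywriting", 900.0),
        Subscription("ImageToolC", "design", "image generation", 600.0),
    ]
    overlaps = find_overlaps(inventory)
    for capability, items in overlaps.items():
        print(capability, sorted(s.tool for s in items))
    print("Spend in overlapping categories:", overlap_spend(overlaps))
```

"Overlap" here only means two tools claim the same capability. Whether to consolidate still depends on a security review and on whether one tool can actually cover both teams' needs.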

Zapier connects with thousands of apps and has built-in AI tools, which lets you consolidate this way. Instead of scattered, single-purpose AI apps, you get one platform that securely connects the tools you already use and embeds AI directly into those workflows. For example, you can build an AI-powered customer support workflow that pulls new tickets from Zendesk, uses AI to summarize each issue, and then routes it to Slack or Salesforce, with no extra point solutions required.
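Here's the logic of that support workflow as a plain-Python sketch, assuming a ticket has already been pulled from Zendesk. It doesn't use the Zapier, Zendesk, Slack, or Salesforce APIs: `summarize` is a stand-in for the AI step, and the routing keywords and destination strings are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Ticket:
    id: int
    subject: str
    body: str


def summarize(ticket: Ticket, max_words: int = 20) -> str:
    """Stand-in for the AI summarization step.

    A real workflow would call an approved model here; this stub simply
    truncates the body so the sketch runs without any external service.
    """
    words = ticket.body.split()
    summary = " ".join(words[:max_words])
    return summary + ("..." if len(words) > max_words else "")


# Hypothetical routing rules, checked in order; the first match wins.
ROUTES = [
    ({"outage", "down", "urgent"}, "slack:#support-escalations"),
    ({"invoice", "renewal", "contract"}, "salesforce:account-team"),
]
DEFAULT_ROUTE = "slack:#support-queue"


def route(ticket: Ticket) -> str:
    """Pick a destination by matching whole words in the subject and body."""
    words = set(re.findall(r"[a-z]+", f"{ticket.subject} {ticket.body}".lower()))
    for keywords, destination in ROUTES:
        if keywords & words:
            return destination
    return DEFAULT_ROUTE


def triage(ticket: Ticket) -> dict:
    """Combine the summarize and route steps into one record to post downstream."""
    return {
        "ticket_id": ticket.id,
        "summary": summarize(ticket),
        "destination": route(ticket),
    }


if __name__ == "__main__":
    ticket = Ticket(101, "Dashboard down", "Our analytics dashboard has been down since 9am.")
    print(triage(ticket))
```

Routing matches whole words rather than substrings, so a ticket about a "download" doesn't trip the "down" escalation rule.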

Here are a few templates to get you started.

Automate personalized coaching for your sales team using this AI-powered call analysis template.

Improve your IT support with AI-powered responses, automatic ticket prioritization, and knowledge base updates.

Shift from shadow AI to strategic AI

The real question isn't whether your teams are using AI—they are. It's whether you'll give them the structure, tools, and guardrails they need to move from scattered experiments to scalable impact. With a clear AI strategy, shadow AI stops being a liability and becomes an advantage: secure, orchestrated, and aligned with your business goals.

Zapier is the leader in AI orchestration, giving teams a single platform to innovate safely. Instead of chasing one-off tools or worrying about compliance gaps, you can build AI-powered systems that are visible, auditable, and scalable across your organization.
