Your AI agent knows your product inside-out. You've written detailed prompts, uploaded your docs, and tested it dozens of times. Then a customer asks about pricing, and the agent quotes last quarter's rates. Or it recommends a demo to someone who's been paying you for three years. Or it cheerfully offers a discount code that expired two weeks ago.

The frustrating part: the correct information was right there in your prompt. Line 347, clearly stated. The AI just... ignored it.

Researchers have a name for this: "lost in the middle." LLMs show a U-shaped performance curve: they use information at the beginning and end of an input reliably, while performance drops by more than 30% for anything buried in the middle. That carefully crafted rule you added after your last customer complaint? Good chance the model never sees it.

Wharton's Generative AI Labs found something similar when they tested prompts the way scientists test hypotheses, running each question 100 times instead of once. At strict accuracy levels, most conditions "barely outperform random guessing." The outputs looked good individually. They just weren't reliable.

Your prompt is probably fine. But prompt engineering has become one layer in a larger stack, and teams building AI that actually works in production now spend equal or more time on what surrounds the prompt: the context.


What is context engineering?

The LangChain team paraphrases Andrej Karpathy, who helped build Tesla's AI and co-founded OpenAI: "LLMs are like a new kind of operating system. The LLM is the CPU, the context window is the RAM."

I've found a simpler way to explain this to my marketing friends who glaze over at "tokens" and "inference."


Your AI is an employee. The context window is their desk. Whatever's on the desk right now—the customer file on the screen, the campaign brief they printed out, the brand guide shoved in a drawer—that's what they can work with. Desks fill up. So does an AI's memory.

Your prompt is the sticky note you handed them this morning. Important, sure. But the stack of folders on their desk? The CRM they have open? That's what determines whether they actually help the customer or fumble around asking questions the customer already answered.

Anthropic wrote up their approach to context engineering in 2025. Their definition: "the set of strategies for curating and maintaining the optimal set of tokens during LLM inference." Translation: give your AI the right information, in the right format, at the right time. Not more. Just what it needs.

The evolution: From chatbots to context-aware agents

Before diving into the how, let's understand where we are in the AI adoption curve.

Phase 1 was copy-paste ChatGPT. Marketers discovered they could paste customer emails into a chat window and get draft replies. Exciting, but every session started from zero.

Phase 2 was custom GPTs and assistants. You could pre-load instructions and documents. Better. But the context was frozen. No live connection to what was happening in your business.

Phase 3 is agentic AI. Agents that take actions, not just generate text. They update your CRM, create tickets, send emails, make decisions. This power requires a new discipline: you can't give an agent instructions and hope. You have to architect its knowledge.

Most marketers are stuck in Phase 1 or 2. The ones pulling ahead are building for Phase 3.

The context gap: Why your prompts aren't working

When an AI misbehaves, the instinct is to add more rules. The prompt grows. 200 lines. 400 lines. 500+. More instructions should mean better behavior.

It doesn't work that way.

The middle gets ignored

The "lost in the middle" finding comes from Stanford researchers. Chroma's 2025 "Context Rot" study goes further. They tested a range of models, including GPT-4.1, Claude 4, Gemini 2.5, and Qwen3, and found that "models do not use their context uniformly; instead, their performance grows increasingly unreliable as input length grows." They discovered this while studying agent learning: in multi-turn conversations where the whole window gets passed in, the token count explodes, and instructions clearly present in context get ignored anyway.

Static vs. live information

A mega-prompt is frozen. You wrote it last month. Since then, pricing changed, a customer opened a support ticket, Marketing launched a new campaign this morning. The prompt doesn't know any of that. It can only contain what existed when you wrote it.

Context window limits

Every model has a context window. Claude's is 200K tokens, GPT-5 is 400K, Gemini 3 Pro goes up to 1M. Big numbers. But research shows most models start getting unreliable well before they hit those limits.

In practice, your mega-prompt takes up space, conversation history piles on, documents get loaded. It adds up fast. Hit the limit and older information gets pushed out. The AI forgets things it knew ten minutes ago.

Here's what surprised me when I started building production agents: Manus, an AI agent company, found that their agents consume about 100 input tokens for every 1 output token. On a complex task with ~50 tool calls, that's roughly 50,000 tokens of context being processed just to generate 500 tokens of response. Most of that context is tool outputs, conversation history, and retrieved documents piling up.
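Here's that arithmetic as a toy loop, just to show where the tokens come from. It is not how Manus measures anything: it assumes every tool call and tool result stays in context forever, and the token sizes are made-up numbers picked to land on the 50,000-token figure.

```python
def simulate_context(steps: int, system_prompt_tokens: int,
                     call_tokens: int, result_tokens: int) -> list[int]:
    """Context size after each step of a toy agent loop.

    Each step, the model emits a short tool call (its output) and the tool
    returns a result; both are appended to the context and never removed.
    """
    context = system_prompt_tokens
    sizes = []
    for _ in range(steps):
        context += call_tokens + result_tokens
        sizes.append(context)
    return sizes


# Hypothetical sizes: 2,000-token system prompt, 10-token tool calls,
# 950-token tool results, 50 tool calls.
sizes = simulate_context(steps=50, system_prompt_tokens=2_000,
                         call_tokens=10, result_tokens=950)
output_tokens = 50 * 10
print(sizes[-1])                        # 50000 tokens of context
print(sizes[-1] // output_tokens)       # 100 context tokens per output token
print(round(50 * 950 / sizes[-1], 2))   # 0.95 of the context is tool results
```

Change the tool-result size and watch the ratio move: the model's own output barely matters, because the context is mostly what the tools sent back.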

How context fails

Context doesn't just run out. It fails in specific, predictable ways. Drew Breunig identified four failure modes worth knowing:

Context poisoning: An early error or hallucination gets into context and compounds. The AI references wrong information repeatedly because it's "in the record." Once context is poisoned, each subsequent decision builds on the mistake.

Context distraction: Irrelevant information drowns out relevant information. You loaded ten documents, but only one matters for this question. The AI attends to everything, relevant or not.

Context confusion: The model can't figure out which pieces of context apply to the current situation. You have pricing rules for enterprise and SMB customers in the same context. The AI mixes them up.

Context clash: Contradictory information exists in context. Last month's pricing and this month's pricing are both there. Old campaign rules and new ones. The AI has to pick, and it might pick wrong.

When your agent misbehaves, these categories help diagnose the problem. Is it poisoning (bad data got in early)? Distraction (too much irrelevant stuff)? Confusion (can't tell what applies)? Or clash (contradictory info)?
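Clash is the easiest of the four to check mechanically. A minimal sketch, assuming your context entries can be tagged with a key (the keys, values, and sources below are hypothetical): group entries by key and flag any key that carries more than one value.

```python
from collections import defaultdict


def find_clashes(entries: list[dict]) -> dict[str, list[str]]:
    """Report context keys that carry more than one distinct value.

    Only catches exact-key clashes; it can't spot two differently worded
    rules that contradict each other.
    """
    values_by_key: dict[str, set[str]] = defaultdict(set)
    for entry in entries:
        values_by_key[entry["key"]].add(entry["value"])
    return {key: sorted(values)
            for key, values in values_by_key.items() if len(values) > 1}


# Hypothetical context entries pulled from two sources.
context = [
    {"key": "pro_price_monthly", "value": "$45", "source": "last quarter's sales deck"},
    {"key": "pro_price_monthly", "value": "$49", "source": "pricing table"},
    {"key": "trial_length_days", "value": "14", "source": "pricing table"},
]
print(find_clashes(context))  # {'pro_price_monthly': ['$45', '$49']}
```

Poisoning, distraction, and confusion are harder to catch with a script; they usually show up when you read transcripts.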

What AI actually needs: Context engineering vs. prompt engineering

Most people treat AI like a very literal employee and try to fix problems with more instructions. More rules, more examples, more edge cases. But I've come to think the issue runs deeper: AI needs better information architecture, not just better wording.

Prompt engineering asks "what should I say?" Context engineering asks "what should I know?" Prompts are static, written once, frozen. Context is live, pulled in real-time based on who's asking and what they need. A prompt is text. Context is a data system.

Think about onboarding a new hire. You don't hand them a 50-page handbook and say "memorize this before every call." You give them CRM access, point them to the knowledge base, share the style guide with real examples. You make sure they know what campaign is running this week and which situations to escalate.

Context engineering does the same thing for AI.

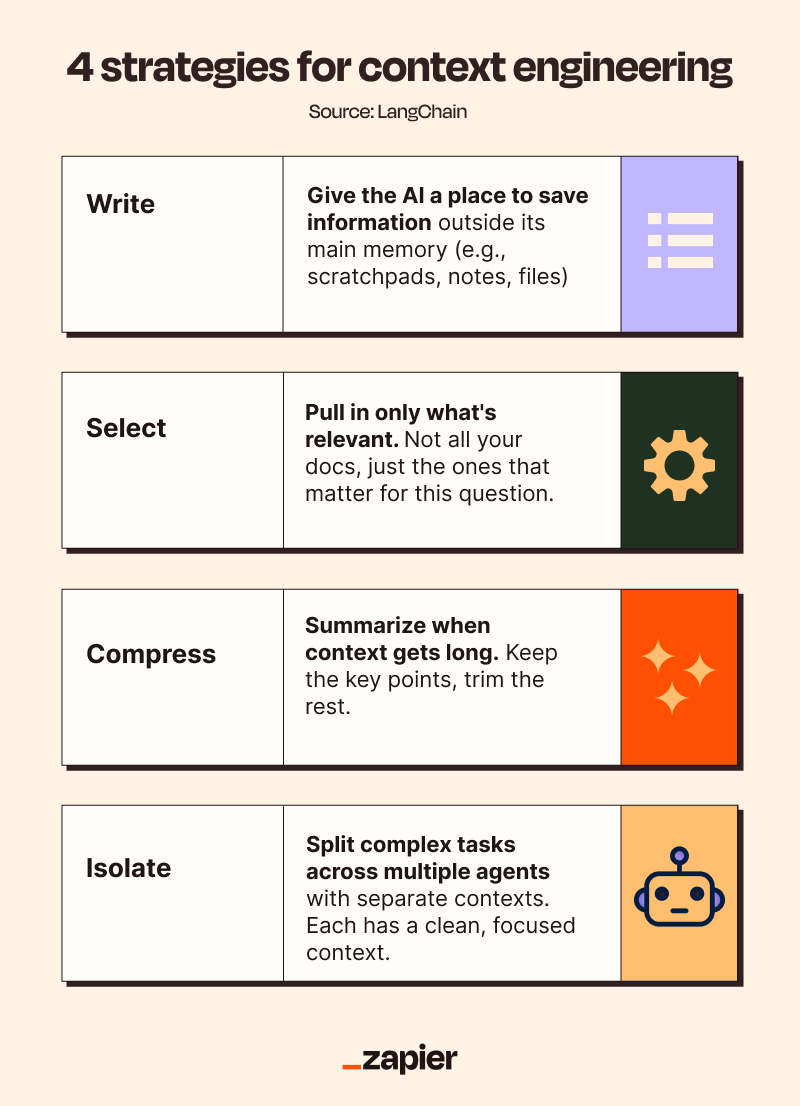

The 4 strategies

LangChain's framework breaks context engineering into four strategies. I find this useful for thinking about what your system actually needs:

Write: Give the AI a place to save information outside its main memory. Scratchpads, notes, files. This way it doesn't have to keep everything in its head.

Select: Pull in only what's relevant. Not all your docs, just the ones that matter for this question. Not every customer field, just the ones that help right now.

Compress: Summarize when context gets long. A conversation that's been going for 20 turns doesn't need all 20 turns in full. Keep the key points, trim the rest.

Isolate: Split complex tasks across multiple agents with separate contexts. One agent researches, another writes, a third reviews. Each has a clean, focused context instead of one agent drowning in everything.
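To make Write and Compress concrete, here's a minimal sketch of an agent's working memory. It's an illustration, not LangChain's API: the class and method names are hypothetical, and the "summarizer" is a stand-in that keeps the first sentence of each old turn, where a real system would ask a model to write the summary. Select and Isolate aren't covered here.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    # Write: notes the agent saves outside the conversation itself.
    scratchpad: dict[str, str] = field(default_factory=dict)
    turns: list[str] = field(default_factory=list)

    def write_note(self, key: str, note: str) -> None:
        self.scratchpad[key] = note

    def add_turn(self, text: str) -> None:
        self.turns.append(text)

    def compressed_history(self, keep_last: int = 4) -> list[str]:
        """Compress: recent turns stay verbatim, older ones collapse into one line."""
        if len(self.turns) <= keep_last:
            return list(self.turns)
        cut = len(self.turns) - keep_last
        older, recent = self.turns[:cut], self.turns[cut:]
        # Stand-in summarizer: keep the first sentence of each older turn.
        summary = " / ".join(turn.split(".")[0] for turn in older)
        return [f"Summary of {len(older)} earlier turns: {summary}"] + recent


memory = AgentMemory()
memory.write_note("customer_goal", "Evaluating the API integration.")
for i in range(1, 7):
    memory.add_turn(f"Turn {i}. Details the model may not need later.")
history = memory.compressed_history(keep_last=2)
print(len(history))  # 3
print(history[0])    # Summary of 4 earlier turns: Turn 1 / Turn 2 / Turn 3 / Turn 4
```

The scratchpad note survives no matter how aggressively the history gets compressed, which is the point of writing it down.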

In practice, most teams lean heavily on Select. That's where a tool like Zapier Tables comes in: giving your agent access to structured context it can pull from selectively rather than loading everything at once.

Context is finite. It has diminishing returns. Anthropic's engineering team puts it well: "good context engineering means finding the smallest possible set of high-signal tokens that maximize the likelihood of some desired outcome." Recent academic research confirms this: strategic selection of relevant information consistently outperforms dumping in everything you have. More context isn't always better context.

There's a difference between what you store and what the model sees. Your database can hold terabytes. But at any given moment, the AI should only see what matters for this conversation.

Don't dump information upfront. Let the AI reach for it. Customer details when a customer asks. Product specs when features come up. Not everything, all the time.

And the one that took me longest to learn: 500 tokens of the right stuff beats 50,000 tokens of everything you have.
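Here's "smallest set of high-signal tokens" as a rough sketch: score each snippet by word overlap with the question, drop anything irrelevant, then keep the best snippets until a token budget runs out. The word-overlap scoring, stopword list, and one-token-per-word estimate are all crude stand-ins (real systems typically use embeddings and a real tokenizer), and the snippets are made up.

```python
import re

STOPWORDS = {"a", "an", "the", "is", "are", "for", "of", "or", "by", "per", "what"}


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def estimate_tokens(text: str) -> int:
    # Crude stand-in: about one token per word. Real tokenizers count differently.
    return len(text.split())


def select_context(question: str, snippets: list[str], budget_tokens: int) -> list[str]:
    """Greedily keep the most relevant snippets that fit the token budget."""
    q = words(question)
    scored = [(len(q & words(s)), s) for s in snippets]
    scored = [pair for pair in scored if pair[0] > 0]    # no overlap, no seat
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    chosen, used = [], 0
    for _, snippet in scored:
        cost = estimate_tokens(snippet)
        if used + cost <= budget_tokens:
            chosen.append(snippet)
            used += cost
    return chosen


snippets = [
    "Pro plan pricing: $49 per month or $470 per year.",
    "Our office dog is named Biscuit.",
    "Enterprise pricing is quoted per contract by sales.",
    "API rate limits are 100 requests per minute.",
]
question = "What is the pricing for the Pro plan?"
print(select_context(question, snippets, budget_tokens=12))
# ['Pro plan pricing: $49 per month or $470 per year.']
```

Raise the budget to 20 and the enterprise pricing line comes along too; the dog and the rate limits never make it in.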

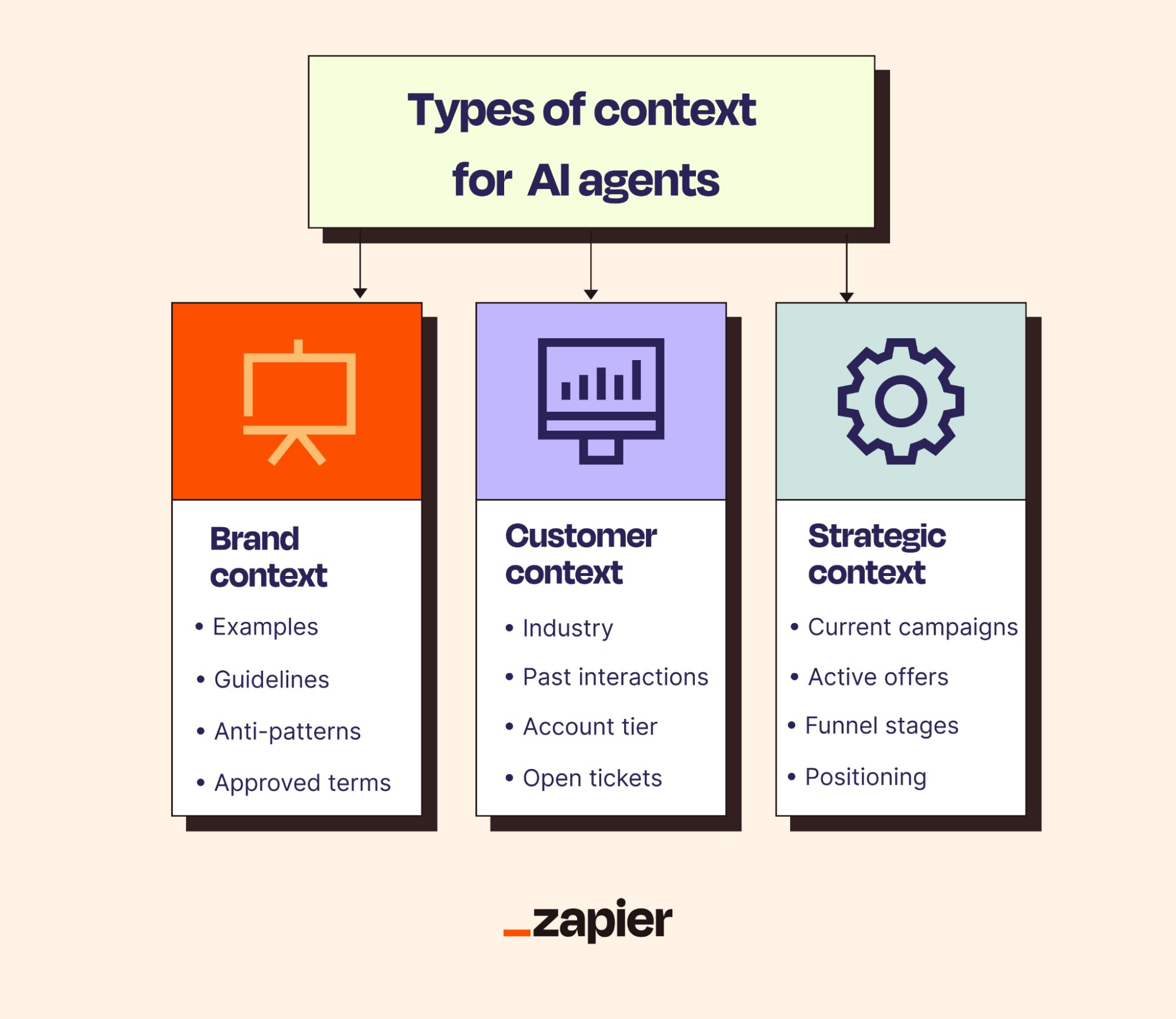

3 types of context that matter

To build agents that actually work, you need three types of context. Think of them as databases your AI always has access to.

Brand context: who you are

This is your AI's personality. The rules, voice, and boundaries that make responses sound like you instead of generic ChatGPT.

Most marketers miss something here: you cannot invent a brand persona by writing creative prompts. Research shows LLM-generated personas contain systematic biases: positivity bias, idealized profiles, skewed viewpoints.

So extract, don't invent. Take your best-performing emails, your highest-rated support responses, your most-shared social posts. Feed those to the AI as examples. Let brand context come from what you've already done well, not what you imagine your brand should sound like.

Brand context includes voice guidelines ("direct and confident, not salesy"), anti-patterns ("never say synergy, ever"), approved terminology (especially product names people get wrong), and topics that are off-limits like competitor names or unannounced features. I'd also add ten to twenty real responses that nailed the tone, plus escalation rules: when to hand off to a human, what promises the AI should never make.

Customer context: who they are

This one changes with every conversation.

Without customer context, every interaction starts from zero. The AI asks "What industry are you in?" when the customer already told you twice. With customer context, your AI can say: "Last time we spoke, you were evaluating our API integration. Did you get a chance to review the documentation I sent?"

That sentence requires memory. Memory is what separates an assistant from a chatbot that makes customers repeat themselves. What goes in here?

Company info and account tier (so it knows whether to pitch enterprise features)

Industry (so it uses relevant examples)

Whether they have open tickets (so it doesn't cheerfully ask "how can I help?" when they're mid-crisis)

Purchase history and past conversations (so they never have to repeat themselves)

Where they are in the funnel (so the AI adjusts how deep to go on product details)

Strategic context: what you're trying to achieve

In my experience, marketers almost always forget this one.

Your AI doesn't know it's Q1. It doesn't know you're pushing annual plans or that your goal this quarter is demos, not free trials. Unless you tell it.

This layer holds:

Current campaigns (so the agent knows what pricing benefits to mention)

Active offers (so it knows which discounts are real)

Rules for different funnel stages and your conversion goals

Competitive positioning

The kind of stuff that changes quarter to quarter and shapes what the AI should actually be pushing.

How they work together

A customer asks: "What makes you different from [Competitor]?"

Brand context says never name competitors directly. Customer context shows they're an enterprise trial user in fintech. Strategic context indicates the current push is compliance features.

Result: a response highlighting compliance capabilities (relevant to fintech), mentioning enterprise-grade security (relevant to their tier), positioning against competitors without naming them. All in your brand voice.

I don't think any prompt engineering trick achieves this. It requires architecture.
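Stripped to its core, that architecture is three separate sources merged into one block of text before the model sees the question. The sketch below assumes hypothetical field names and layout; in Zapier Agents the agent pulls from its knowledge sources itself rather than running code like this.

```python
def assemble_context(brand_rules: list[str], customer: dict, strategy: dict) -> str:
    """Merge the three context layers into one block of text for the model."""
    lines = ["Brand rules:"]
    lines += [f"- {rule}" for rule in brand_rules]
    lines += [
        "Customer:",
        f"- Tier: {customer['tier']}",
        f"- Industry: {customer['industry']}",
        "Current priorities:",
        f"- Focus: {strategy['focus']}",
    ]
    return "\n".join(lines)


context = assemble_context(
    brand_rules=["Never name competitors directly.", "Direct and confident, not salesy."],
    customer={"tier": "Enterprise trial", "industry": "Fintech"},
    strategy={"focus": "Compliance features"},
)
print(context)
```

Each layer can be updated on its own schedule (brand rarely, customer every conversation, strategy every quarter) without touching the others.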

Building it with Zapier

Context engineering requires three things:

A place to store structured context

AI that can pull from it on demand

Connections to your existing tools

Zapier fits this well. Tables is a no-code database with built-in AI fields. Agents can access Tables as knowledge sources, pulling context live. And with 8,000+ integrations, your CRM, support tools, and marketing platforms can sync into your context layer.

Before diving in, familiarize yourself with how to use Zapier Tables and how to use Zapier Agents.

Step 1: Brand context table

Create a Zapier Table called "Brand context" with these fields:

| Field | Type | Purpose |
|---|---|---|
| Category | Dropdown | Voice, anti-pattern, terminology, boundaries, examples, escalation |
| Rule | Long text | The actual guideline |
| Priority | Number | 1 (critical) to 5 (nice-to-have) |
| Good example | Long text | What this looks like done right |
| Active | Checkbox | Toggle rules on/off |

Sample entries:

| Category | Rule | Priority |
|---|---|---|
| Voice | Use "we" instead of "I" when representing the company | 1 |
| Voice | Be direct: most responses should be under 150 words | 2 |
| Anti-pattern | Never use buzzwords like "synergy" or "leverage" | 1 |
| Terminology | Our product is "Acme Platform" not "Acme Tool" | 1 |
| Boundaries | Never promise delivery dates for feature requests | 1 |
| Escalation | Always offer human handoff for billing disputes | 1 |

Use Priority strategically. Your agent can filter to Priority 1 items only, keeping context lean.
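The filter itself is simple. Here it is as a sketch, assuming rows shaped like the Brand context table (one dict per row, keyed by field name), for example from an export. In Zapier Agents you'd express this in the agent's instructions rather than in code; this just makes the logic explicit.

```python
def lean_brand_rules(rows: list[dict], max_priority: int = 1) -> list[str]:
    """Active rules at or above the priority cutoff (1 = critical), most critical first."""
    keep = [row for row in rows if row["Active"] and row["Priority"] <= max_priority]
    keep.sort(key=lambda row: row["Priority"])
    return [row["Rule"] for row in keep]


rows = [
    {"Category": "Voice", "Rule": 'Use "we" instead of "I"', "Priority": 1, "Active": True},
    {"Category": "Voice", "Rule": "Keep most responses under 150 words", "Priority": 2, "Active": True},
    {"Category": "Terminology", "Rule": 'Say "Acme Platform", not "Acme Tool"', "Priority": 1, "Active": False},
]
print(lean_brand_rules(rows))  # ['Use "we" instead of "I"']
print(lean_brand_rules(rows, max_priority=2))
```

The inactive terminology rule never shows up at any cutoff, which is what the Active checkbox is for.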

Step 2: Customer context table

Create a "Customer profiles" table that syncs with your CRM. Include company name, contact info, account tier, industry, funnel stage, open tickets, last interaction.

Add an AI field for automatic enrichment: "Based on this customer data, write a 2-sentence summary a customer success agent should know before any interaction."

This generates a briefing for every customer. No separate workflow needed.

Step 3: Strategic context table

Create a "Campaign context" table with active offers, target segments, key messages, offer codes, and funnel stage rules. Update this weekly or when campaigns change.
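This is where the expired-discount problem gets solved: only offers whose dates include today should ever reach the agent. A minimal sketch, assuming you add hypothetical "Starts" and "Ends" date columns to the table (the offer codes below are made up too):

```python
from datetime import date


def active_offer_codes(offers: list[dict], today: date) -> list[str]:
    """Offer codes whose start and end dates include today (inclusive)."""
    return [o["code"] for o in offers if o["starts"] <= today <= o["ends"]]


offers = [
    {"code": "WINTER20", "starts": date(2025, 1, 1), "ends": date(2025, 1, 31)},
    {"code": "ANNUAL15", "starts": date(2025, 1, 15), "ends": date(2025, 3, 31)},
]
print(active_offer_codes(offers, today=date(2025, 2, 14)))  # ['ANNUAL15']
```

With dates on every row, nobody has to remember to untick an expired offer; it simply stops qualifying.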

Step 4: Build the agent

Create a pod called "Customer operations."

Create an agent named "Customer success agent," and add your three tables as knowledge sources.

Write instructions for how to use context:

Instructions

Before every response:

1. Look up the customer in "Customer profiles."

2. Read their AI summary.

3. Check "Brand context" for voice guidelines (Priority 1 first).

4. Check "Campaign context" for relevant offers.

Escalate for:

- Customers mentioning "cancel" or showing frustration

- Billing disputes over $500

- Requests outside your authority

Configure Human-in-the-Loop for sensitive actions.

Add actions: update CRM, create tickets, send emails.
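The escalation rules in those instructions are plain language, which is how Zapier Agents consumes them. If you want to write test cases for them, or run a pre-check in your own tooling, it helps to spell the same rules out as a function. A sketch, where the frustration phrase list is a hypothetical starting point and keyword matching is deliberately crude:

```python
from typing import Optional

# Hypothetical phrase list; tune it against your own support transcripts.
FRUSTRATION_PHRASES = ("frustrated", "for hours", "ridiculous", "waste of time")


def escalation_reasons(message: str,
                       billing_dispute_amount: Optional[float] = None,
                       within_authority: bool = True) -> list[str]:
    """Return why a conversation should go to a human (empty list = no escalation)."""
    text = message.lower()
    reasons = []
    if "cancel" in text:
        reasons.append("mentions cancel")
    if any(phrase in text for phrase in FRUSTRATION_PHRASES):
        reasons.append("shows frustration")
    if billing_dispute_amount is not None and billing_dispute_amount > 500:
        reasons.append("billing dispute over $500")
    if not within_authority:
        reasons.append("outside the agent's authority")
    return reasons


print(escalation_reasons("I want to cancel"))  # ['mentions cancel']
print(escalation_reasons("Wrong invoice", billing_dispute_amount=750))  # ['billing dispute over $500']
```

Returning reasons instead of a plain yes/no makes it easy to see which rule fired when you review a handoff.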

Step 5: Test

Before going live, run through these scenarios:

| Test query | What should happen |
|---|---|
| "What's your pricing?" | Checks customer tier, pulls active campaign offers |
| "How do you compare to [competitor]?" | Follows competitor mention rules, emphasizes differentiators |
| "Can I get a discount?" | Checks tier, current offers, provides code or escalates |
| "I want to cancel" | Triggers escalation path, offers human connection |

Run each query 3-5 times. Watch for consistency, appropriate personalization (does it know their tier?), adherence to brand boundaries, correct campaign info, and proper escalation.

When your agent goes off-brand, ask: "What would a human have needed to know?" Add that to your Tables.
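If you can call your agent programmatically, scripting the repeated runs beats eyeballing them. A minimal sketch: the `ask` callable is a hypothetical stand-in for however you reach your agent, and the check is a simple "does the answer contain the expected fact" test rather than exact string matching, since wording will vary between runs.

```python
from itertools import cycle
from typing import Callable


def pass_rate(ask: Callable[[str], str], query: str, must_include: str, runs: int = 5) -> float:
    """Fraction of runs whose answer contains the expected fact (case-insensitive)."""
    passes = sum(must_include.lower() in ask(query).lower() for _ in range(runs))
    return passes / runs


# Stand-in agent that drifts on one run out of three. Swap in a real call to your agent.
canned = cycle(["Pro is $49/month.", "Pro is $49/month.", "Pro is $45/month."])


def fake_agent(query: str) -> str:
    return next(canned)


rate = pass_rate(fake_agent, "What's your pricing?", must_include="$49", runs=3)
print(f"{rate:.2f}")  # 0.67
```

Anything below 1.0 on a fact like pricing is a signal to go looking for a clash or a stale row.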

From scattered to context-engineered

Two pictures.

Before: Scattered

Brand guidelines live in a PDF somewhere in Google Drive. Customer data is in HubSpot, synced maybe. Product specs are in a Notion wiki that hasn't been touched in months. Campaign details are buried in Slack threads. Pricing is in a sales deck from last quarter. AI instructions get copy-pasted into ChatGPT fresh every morning.

The symptoms are predictable. Every AI session starts from zero. Brand voice varies depending on who wrote the prompt. Customers repeat themselves because there's no memory. You spend more time prompting than the AI saves you. Different team members get completely different outputs for the same question.

The prompts aren't the issue. The information architecture underneath them is.

After: Context-engineered

Zapier Tables becomes the single place where brand, customer, and strategic context lives. Zapier Agents pull from those Tables, knowing what to load and when. Chatbots use the same knowledge sources. Zaps keep everything synced from your CRM, support tools, and campaign platforms.

You end up with an agent that knows your business like a two-year employee. Brand voice enforced automatically, customer context pulled live, strategic priorities shaping every response.

Advanced patterns

The voice library

Instead of describing your voice, show it. Create a table with 20-50 examples of ideal responses:

| Scenario | Customer says | Ideal response |
|---|---|---|
| Pricing (trial) | "How much?" | "Your trial gives you full Pro access. When ready, Pro is $49/month or $470/year." |
| Frustrated | "I've been trying for hours" | "I hear you. Hours of troubleshooting is exhausting. Let's fix this now. Can you tell me..." |
| Competitor question | "Why should I pick you over [Competitor]?" | "For teams like yours, here's what sets us apart: [specific differentiator]..." |

Configure your agent to retrieve relevant examples before responding. When it sees a pricing question, it pulls the pricing example. When it detects frustration, it pulls the frustrated customer example. AI learns better from examples than rules.
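Here's the retrieval idea as a rough sketch. The library entries come from the table above; the scenario keywords are hypothetical, and keyword matching is a crude stand-in for whatever retrieval your agent platform actually does (embedding search would be more robust).

```python
# Voice library keyed by scenario, using the examples from the table above.
VOICE_LIBRARY = {
    "pricing": "Your trial gives you full Pro access. When ready, Pro is $49/month or $470/year.",
    "frustrated": "I hear you. Hours of troubleshooting is exhausting. Let's fix this now.",
    "competitor": "For teams like yours, here's what sets us apart: [specific differentiator]...",
}

# Hypothetical keyword triggers per scenario.
SCENARIO_KEYWORDS = {
    "pricing": ("how much", "price", "pricing", "cost"),
    "frustrated": ("for hours", "frustrat", "still broken", "ridiculous"),
    "competitor": ("pick you over", "compared to", "versus", "competitor"),
}


def examples_for(message: str) -> list[str]:
    """Return the ideal responses whose scenario keywords appear in the message."""
    text = message.lower()
    return [VOICE_LIBRARY[scenario]
            for scenario, keywords in SCENARIO_KEYWORDS.items()
            if any(keyword in text for keyword in keywords)]


print(examples_for("How much?"))
print(examples_for("I've been trying for hours"))
```

Only the matching examples go into context, so a library of 50 examples costs you one or two per response, not all 50.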

Progressive loading

Not every interaction needs all context. This is one of the most practical context engineering techniques, and production teams call it "just-in-time" context loading.

The logic: start lean, expand as needed. If your context starts small, the AI processes faster and costs less. When the conversation requires more information, pull it in then—not before.

Always load: top five voice rules, active campaigns, escalation triggers.

Load on lookup: full customer profile, AI summary, recent history.

Load on topic: product specs when features come up, pricing when costs are discussed.

Well-structured, cacheable context can cost 10x less to process than context assembled differently for each request. Progressive loading helps here: the stuff you always load (voice rules, escalation triggers) stays the same across requests and can be cached, as long as it sits at the start of the context, because prompt caches match on identical prefixes. The stuff you load on demand is smaller and cheaper to process fresh.
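A minimal sketch of the three tiers, with the ordering that keeps caching possible: always-load content first and identical every time, then customer details, then topic docs. All the strings are hypothetical placeholders, and the substring check for topics is a crude stand-in for real intent detection.

```python
from typing import Optional

# Always load: small, identical on every request, so it can be cached as a prefix.
ALWAYS_LOAD = (
    "Voice: direct and confident, not salesy.",
    "Campaign: Q1 push is annual plans.",
    "Escalate: cancellations and billing disputes over $500.",
)

# Load on topic: pulled in only when the conversation touches the topic.
TOPIC_DOCS = {
    "pricing": "Pricing: Pro is $49/month or $470/year.",
    "feature": "Product specs: placeholder feature list.",
}


def progressive_context(message: str, customer_summary: Optional[str] = None) -> str:
    parts = list(ALWAYS_LOAD)                # stable prefix first
    if customer_summary is not None:         # load on lookup
        parts.append(f"Customer: {customer_summary}")
    text = message.lower()
    for topic, doc in TOPIC_DOCS.items():    # load on topic
        if topic in text:
            parts.append(doc)
    return "\n".join(parts)


prefix = "\n".join(ALWAYS_LOAD)
small = progressive_context("Hi, quick question")
large = progressive_context("What's the pricing for Pro?", customer_summary="Enterprise trial, fintech")
print(small == prefix)           # True
print(large.startswith(prefix))  # True
```

Both requests share the same opening lines, which is exactly what a prefix cache needs; putting the customer summary first would break that.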

Keep context fresh

Set up Zaps to sync automatically.

| Trigger | Action |
|---|---|
| Support ticket closes (Zendesk) | Update customer notes |
| New campaign launches (HubSpot) | Add to "Campaign context" |
| Deal stage changes (Salesforce) | Update funnel stage |

If pricing changes, your agent should know within an hour, not next week when someone remembers to update a prompt.
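The Zaps do the syncing, but it's worth checking that they're still firing. A sketch of a freshness check, assuming you record a hypothetical last-synced timestamp per table (say, a column your Zaps update on every run):

```python
from datetime import datetime, timedelta


def stale_tables(last_synced: dict[str, datetime], now: datetime,
                 max_age: timedelta = timedelta(hours=1)) -> list[str]:
    """Names of context tables whose last sync is older than max_age."""
    return sorted(name for name, synced_at in last_synced.items()
                  if now - synced_at > max_age)


now = datetime(2025, 3, 3, 12, 0)
synced = {
    "Campaign context": datetime(2025, 3, 3, 11, 30),
    "Customer profiles": datetime(2025, 3, 3, 9, 0),
}
stale = stale_tables(synced, now)
print(stale)  # ['Customer profiles']
```

A one-hour threshold matches the goal above; tables that legitimately change less often (brand context, say) can get a longer `max_age`.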

Internal agents and external chatbots

Use Zapier Agents for internal tasks (research, drafting, analysis) and Zapier Chatbots for customer-facing interactions. Both pull from the same context tables. This keeps things consistent while separating concerns (internal agents can take actions you'd never expose to customers).

What this makes possible

Your best salesperson has good instincts but can't remember every detail of every customer interaction. Your best support rep knows the product cold but might miss last week's pricing change. Your best marketer understands the brand voice intuitively but might not know what campaign is running in APAC.

A context-engineered agent doesn't have these blind spots. Instant access to every brand guideline, product detail, and policy. The customer's complete history before saying hello. Today's campaigns across every region. The right information, when it's needed.

A prospect reaches out at 2 a.m. asking about enterprise pricing. The agent knows they're in fintech (customer context), knows enterprise accounts get custom quotes (brand context), knows Q1's goal is annual contracts (strategic context). It doesn't quote the wrong price. It doesn't offer a free trial when it should offer a demo. It handles the inquiry the way your best salesperson would, except your best salesperson is asleep.

Your next steps

Audit your current state. Where does your brand, customer, and strategic context live today?

Start with brand context. One Zapier Table with your top 10 voice guidelines. This is usually what's missing when people ask "why does our AI sound so generic?"

Add customer context. Sync your CRM to a "Customer profiles" table with one AI field.

Build a simple agent. One Zapier Agent pulling from both tables.

Iterate based on failures. Every weird response signals missing context.

The models will keep getting smarter. Context windows will keep getting larger. But the marketers who win will be the ones who master context, not just prompts.

Remember that agent quoting last quarter's pricing? With context engineering, it pulls the current rate from your "Campaign context" table before every response. The one recommending demos to existing customers? It checks your "Customer profiles" table first and sees they've been paying you for three years. The expired discount code? It only surfaces offers that are actually active.

The prompts didn't change much. The architecture around them did.
