Hallucinations on long documents. Formulaic writing. Code that doesn't run. If your AI app is doing any of this, you don't need better prompting: you need Claude. With its extended context window, nuanced writing that's easy to guide, and strong reasoning capabilities for technical challenges, it feels like a friendly junior worker tuned for collaboration. And with its Constitutional AI training, it keeps safety at the top of the list.

There are two ways to integrate Claude into your apps and internal tools. The easiest path is via Zapier: you can send data from over 8,000 work apps to Claude and get the results wherever you need them. But if you're building a new product or working on a custom integration, you can connect to the Claude API directly for complete control over requests and responses. This guide walks you through getting your API key, making your first successful call, and customizing Claude's behavior for your specific use case.


What is the Claude API?

The Claude API lets you integrate Claude's AI capabilities into your apps and internal tools. Send Claude a prompt via an API call, and it returns a response you can use however you need—whether that's answering customer questions, analyzing documents, generating content, or powering complex decision-making logic—all without users needing to visit Claude.ai.

Claude API models

Claude offers three model tiers—currently all in version 4.5—with increasing intelligence, capabilities, and cost:

Haiku: Fastest and most cost-effective. Best for straightforward tasks like content moderation or data extraction where speed matters more than reasoning.

Sonnet: Balanced intelligence and speed. Handles most workloads including coding or data analysis.

Opus: Most intelligent and capable. Excels at creative writing, complex problem-solving, and writing code.

All models support the same core features below, but higher-tier models produce more accurate, nuanced, and contextually appropriate responses.

What can you do with the Claude API?

| Category | Features |
|---|---|
| Generative and multimodal | Messages API: Send prompts and get text responses for chat apps, content generation, and analysis; Vision: Analyze images alongside text prompts; Document understanding: Extract information from and summarize PDFs; Citations: Get answers with references to source documents; Extended thinking: See Claude's reasoning process before the final answer; Structured outputs: Receive responses in guaranteed JSON format |
| Agents and actions | Tool use: Let Claude call functions and APIs in your application; Web search: Claude can fetch current information from the web; Code execution: Run code in a secure sandbox; Computer use (beta): Automate desktop tasks with mouse and keyboard actions; Agent Skills: Package reusable instructions, scripts, and resources for consistent agent behavior; MCP integration: Connect Claude to external tools and data sources |
| Data, retrieval, and optimization | Files API: Upload documents once and reuse them across multiple requests; Prompt caching: Reduce costs and latency by caching repeated prompt elements; Message batches: Process large volumes of requests asynchronously at lower cost |
| Quality and safety | Content moderation: Screen user content for safety issues; Evaluation tools: Test and measure your AI application's performance |

What the Claude API can't do: It doesn't generate images, audio, or video—if you need to create visual or audio content, you'll need to use a different service. Claude also doesn't offer fine-tuning through the API, but you can customize behavior via system prompts and Agent Skills. For embeddings, Anthropic recommends using Voyage AI, which is optimized to work with Claude.

Claude API pricing

The Claude API uses a prepaid, pay-as-you-go model. Every time you send a request to Anthropic's servers, you pay based on the number of tokens in the prompt (input) and in the response (output). The prices below are in US dollars per million tokens (roughly 750,000 words).

| Model | Input (per million tokens) | Output (per million tokens) |
|---|---|---|
| Claude Opus 4.5 (best) | $5 | $25 |
| Claude Sonnet 4.5 (balanced) | $3 | $15 |
| Claude Haiku 4.5 (economical and fast) | $1 | $5 |

Full pricing information is available on the models & pricing page in the Claude Docs.
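To see how per-million pricing translates into the cost of a single call, here's a minimal Python sketch. The `estimate_cost` helper and the model labels used as dictionary keys are hypothetical names chosen for illustration. The prices are copied from the table above, so update them if Anthropic changes its pricing.

```python
# Prices in US dollars per million tokens, copied from the pricing table above.
# The keys are illustrative labels; check the models & pricing page for current figures.
PRICES_PER_MILLION = {
    "claude-opus-4-5": {"input": 5.00, "output": 25.00},
    "claude-sonnet-4-5": {"input": 3.00, "output": 15.00},
    "claude-haiku-4-5": {"input": 1.00, "output": 5.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in dollars of a single request."""
    prices = PRICES_PER_MILLION[model]
    input_cost = input_tokens * prices["input"] / 1_000_000
    output_cost = output_tokens * prices["output"] / 1_000_000
    return input_cost + output_cost


# A 2,000-token prompt with a 500-token answer on Sonnet 4.5:
# 2,000 x $3 / 1M = $0.006 for input, plus 500 x $15 / 1M = $0.0075 for output.
print(f"${estimate_cost('claude-sonnet-4-5', 2_000, 500):.4f}")  # prints $0.0135
```

Output tokens cost five times as much as input tokens on every tier, so capping response length is usually the quickest way to keep costs down.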

Keep in mind:

Credits are non-refundable and expire one year after they're purchased if you don't use them.

Credits are consumed every time you send a successful request to the Claude API or when you use the Workbench to try out new prompts.

As soon as you run out of credits, you won't get any more responses, and every feature in your apps that relies on the API will stop working. You can set up auto-reload to keep your account funded.

Use Zapier to connect to Claude

Before we walk through exactly how API calls work, let's explore a faster option if you need Claude working with your apps right now. Zapier is an automation platform that connects over 8,000 apps without code, Claude models included. It handles all the technical plumbing for you, so you don't have to worry about requests and responses.

Zapier is like a massive API switchboard. Instead of spending hours working through Google Sheets' API documentation, figuring out how to list Slack messages, or formatting your data so your CRM's API accepts it, you can rely on Zapier, which has already done that work for you.

The platform keeps all its integrations up to date, each handling the specific quirks and requirements of that service. This means that you can focus on what you want to build rather than on how to connect and run everything.

And because it's a no-code tool, your non-technical teams can build their own logic, automations, and internal tools with Zapier. Your engineers can audit workflows, set permissions, and spot which automations are good candidates for deeper integration, while freeing up more of their time for the big problems.

Here are some pre-built workflows (called Zaps) that show what Zapier and Claude are capable of.

Generate an AI-analysis of Google Form responses and store in Google Sheets

Write AI-generated email responses with Claude and store in Gmail

Create LinkedIn posts with Claude and post to LinkedIn

Create AI-generated social media posts with Claude

You can also use Zapier MCP to trigger these kinds of workflows while you're chatting with Claude, so you never even have to leave the chatbot. Learn more about how to automate Claude with Zapier and how to use Claude with Zapier MCP.

Zapier is the most connected AI orchestration platform, integrating with thousands of apps from partners like Google, Salesforce, and Microsoft. Use forms, data tables, and logic to build secure, automated, AI-powered systems for your business-critical workflows across your organization's technology stack.

How to set up Claude API connections

Grab a coffee, turn off distractions, and let's make some magic. You can complete this tutorial in about 30 minutes. Don't worry if you can't do it in one sitting: each step is numbered so you can easily come back and finish.

Part 1: Preparation

Part 2: Calling the Claude API

Part 3: Building with the Claude API

Step 1: Before you begin

Before we jump in, here's what you need to have and know to make it to the end quickly:

A basic understanding of what an API is—read Zapier's guide on how to use an API for a refresher.

A grasp of JSON fundamentals. JSON is the format used for API request bodies. If you get lost, ChatGPT can help you spot errors or fix formatting.

15 to 30 minutes of focused time.

A credit card to buy Claude API credits (minimum $5).

Optional: a Postman account (free) for following along and making your first calls.

Step 2: Create an Anthropic account

(2.1) First, go to the Anthropic console and create a developer account.

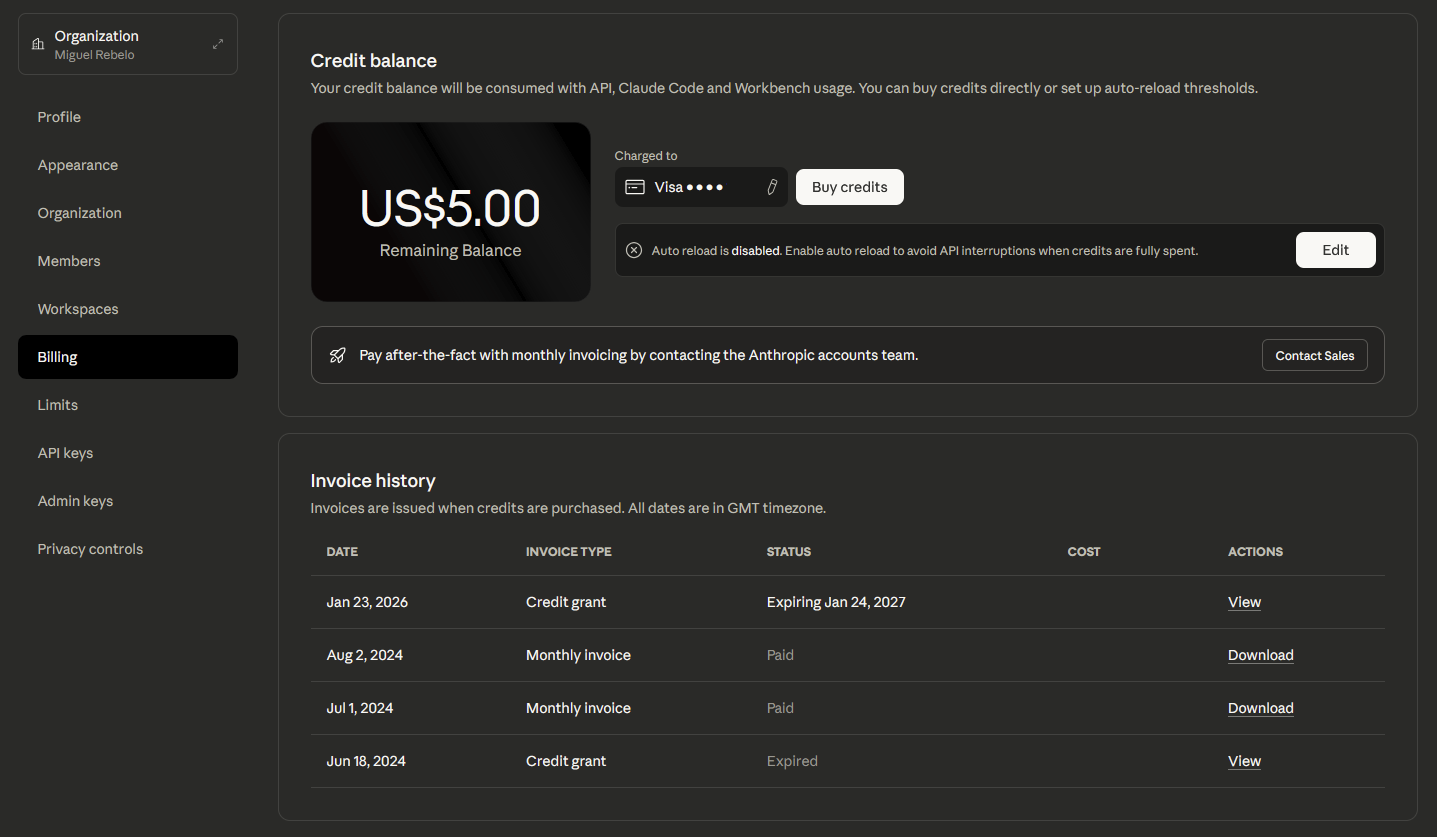

Step 3: Add credits to your account

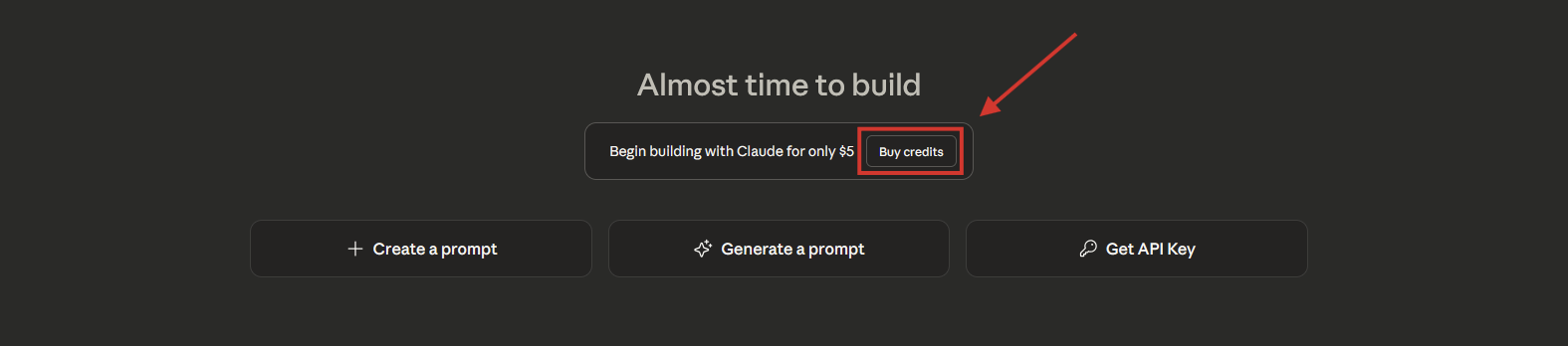

(3.1) Click the Buy credits button at the top of the screen. If it's not there, expand the left side menu, and head to Settings > Billing.

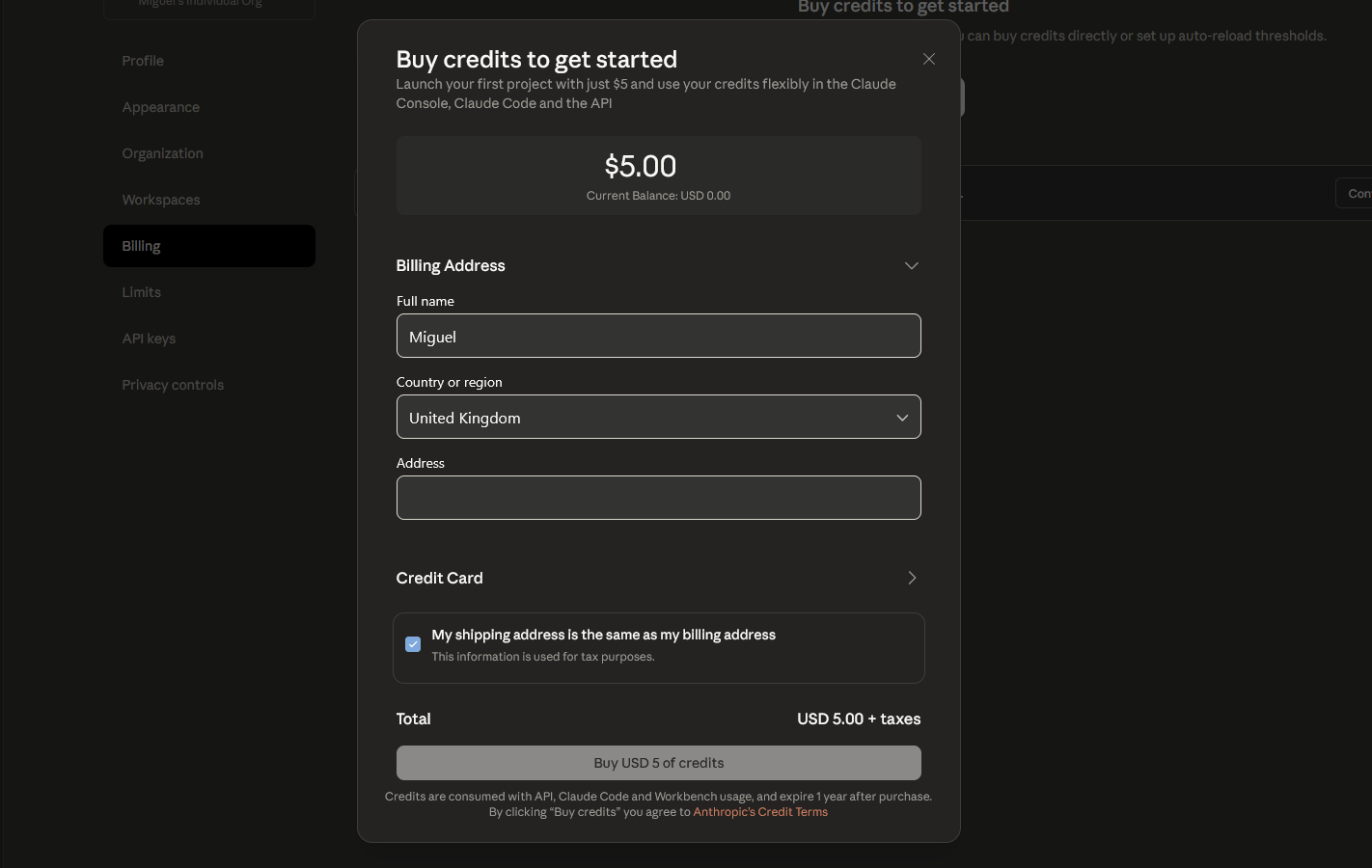

(3.2) Add your address and credit card details to purchase $5 worth of credits.

(3.3) Once the transaction completes, you'll see your credit in the Billing page.

Step 4: How to get a Claude API key

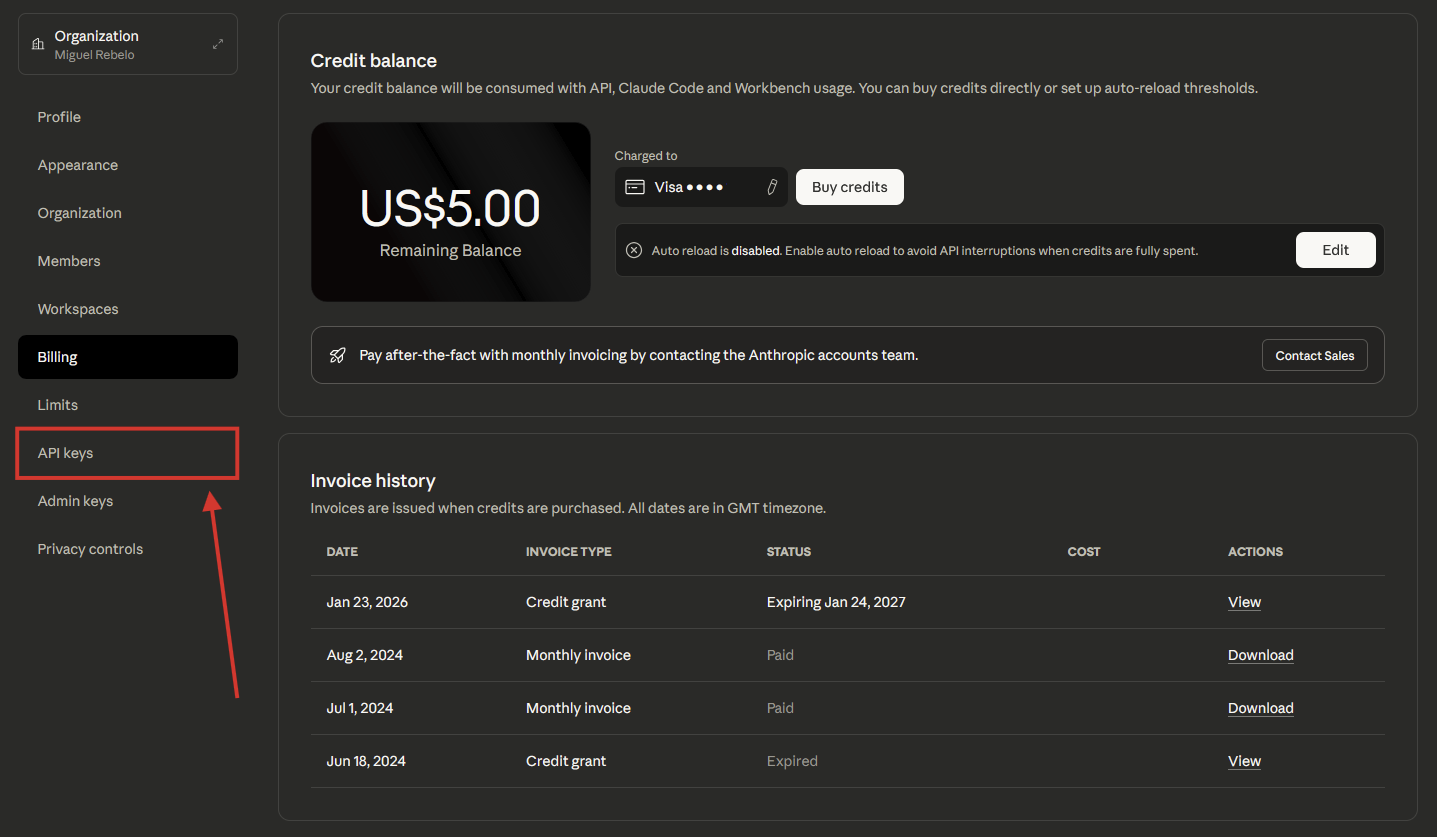

(4.1) In account settings, click API keys.

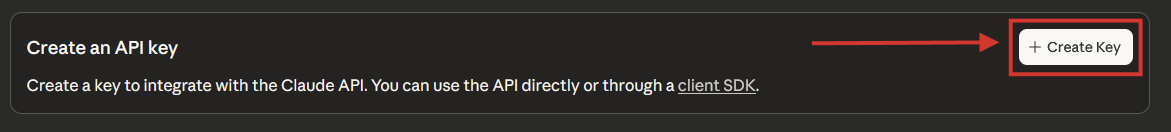

(4.2) Click Create Key.

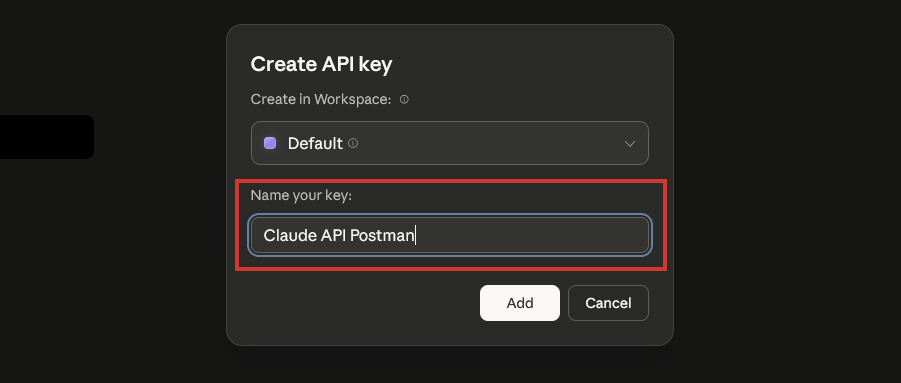

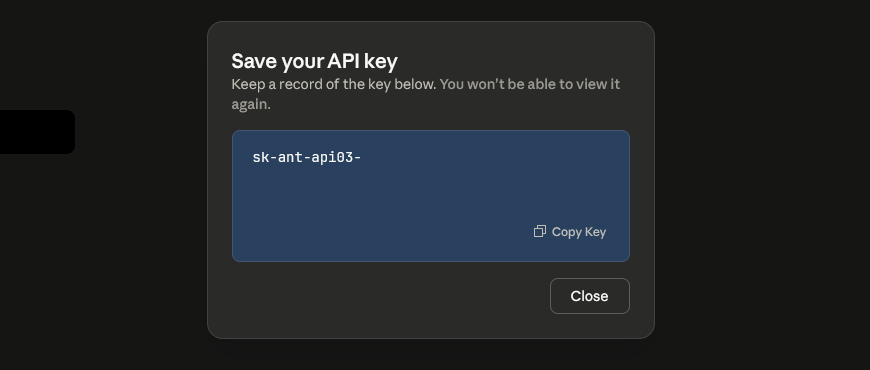

(4.3) Name your API key. For this tutorial, I'll name mine "Claude API Postman." Click Add when done. Optional: you can assign this key to a workspace, useful for organizing your keys across projects or use cases.

(4.4) Copy the entire key to a safe place—you won't be able to see it again once you close the pop-up.

Very important: You need to keep this API key safe at all times. If someone finds your key, they may use it themselves, consuming credits in the process. Don't share this key with anyone who doesn't need it, and if you're publishing an app to the public web, be sure to read up on API security best practices.
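One common way to keep the key out of your code is to store it in an environment variable and read it at runtime. Here's a minimal Python sketch of that approach. `ANTHROPIC_API_KEY` is the variable name the cURL example in Step 10 uses, and `load_api_key` is a hypothetical helper name.

```python
import os


def load_api_key(var_name: str = "ANTHROPIC_API_KEY") -> str:
    """Read the Claude API key from an environment variable instead of hardcoding it."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail early with a clear message rather than sending an unauthenticated request.
        raise RuntimeError(f"Set the {var_name} environment variable to your Claude API key.")
    return key
```

On macOS or Linux, you'd set the variable in your terminal with `export ANTHROPIC_API_KEY=...` before running your script. This keeps the key out of files you might accidentally commit or share.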

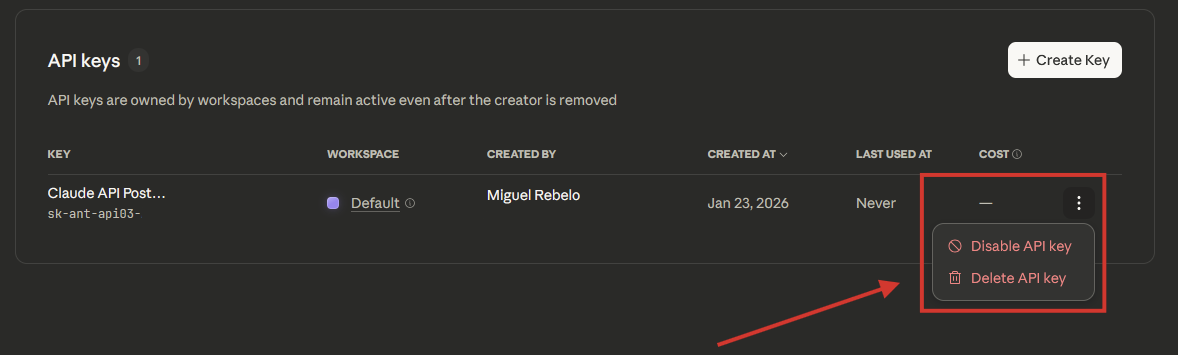

(4.5) Once you close the pop-up, you'll see a list of all the API keys you created so far. If you need to, you can disable or delete them by clicking the three-dots icon on the right side.

Step 5: Open the API documentation and reference

(5.1) Anthropic has rules on how you should make API calls: you need to follow the structure and instructions to get a result without triggering any errors. There are two important resources you'll need to familiarize yourself with:

The API documentation, called User Guides, offers general information about the API, what it can do, and how you can implement it in your projects.

The API Reference is a technical reference that helps you set up a call, structure the requests, and see expected responses.

(5.2) For this tutorial, we'll request a text generation job, which is covered by the create a message article in the API Reference. I'll refer to it a lot, so if you're following along, keep it open in a browser tab.

Step 6: Paste the endpoint

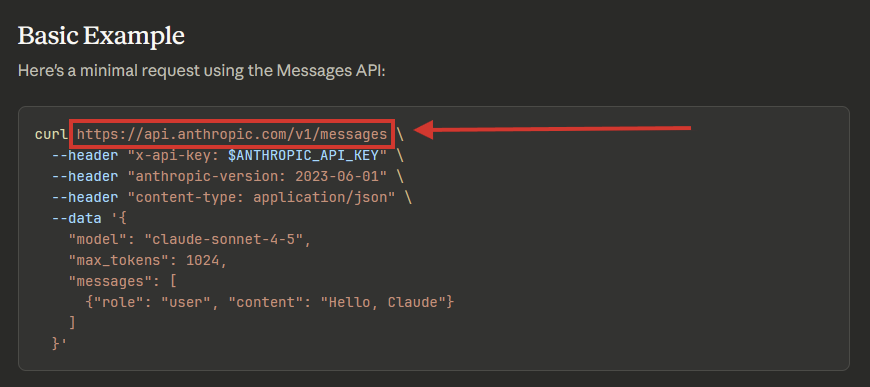

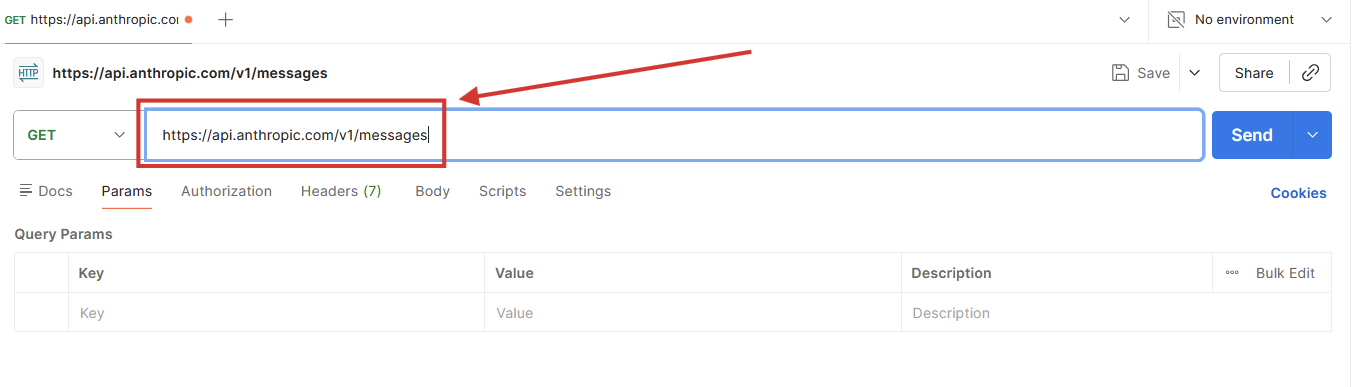

(6.1) To call the Anthropic API, we need to point Postman to its URL: the documentation shows it in the basic example section.

Copy the endpoint URL (https://api.anthropic.com/v1/messages).

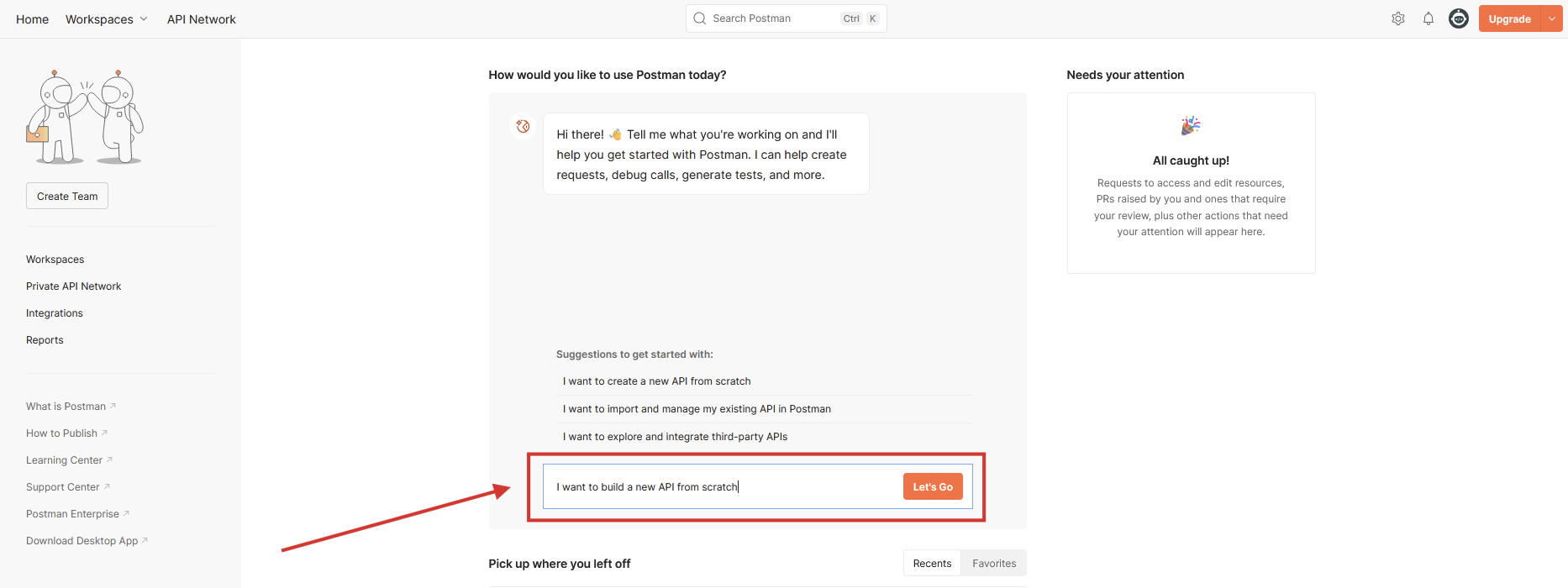

(6.2) Optional: If you're following along, open Postman's home screen and tell the AI assistant chat that you want to create a new API from scratch. If you're working in your own tool instead, you can use ChatGPT to help you write the code, or refer to the documentation on API connections for your internal tool builder or no-code app builder.

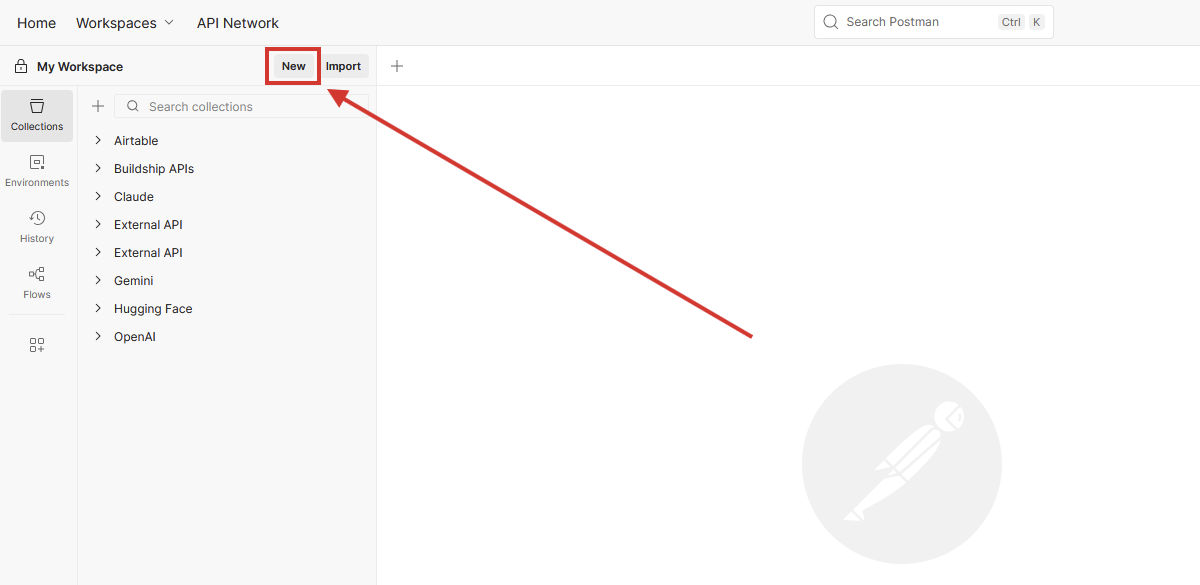

(6.3) In the Postman workspace screen, click New at the top left.

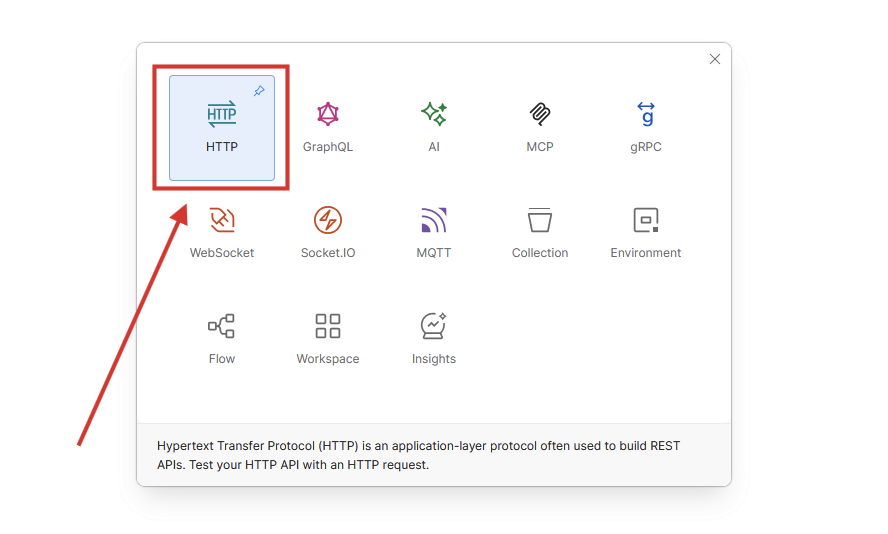

(6.4) Still in Postman, select HTTP.

(6.5) Finally, paste the URL into the request's input field. Postman may offer a quick setup for the Anthropic API, but I suggest you skip it: taking the slower route now will help you understand how everything fits together.

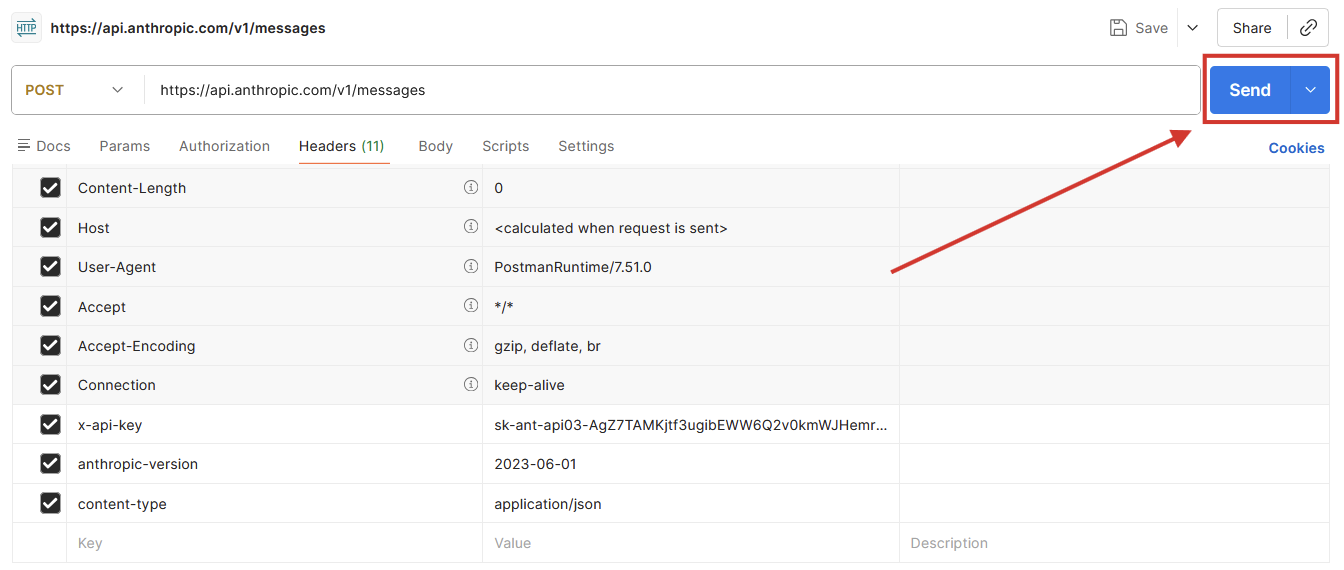

Step 7: Handle authentication

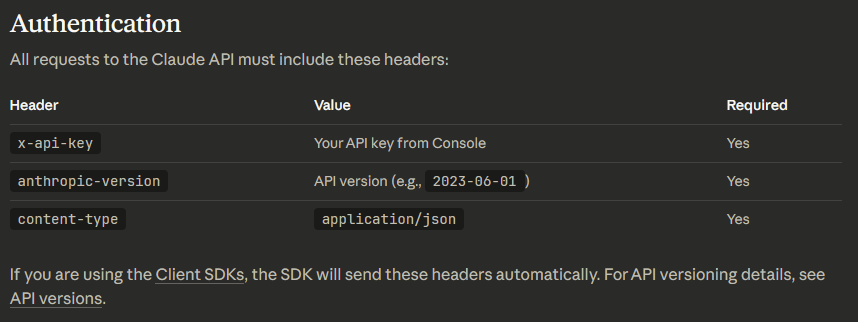

The API won't accept calls unless it knows who's making them. That's why we created an API key linked to your Anthropic account earlier: it authenticates each request and ensures the work is billed to the right account. According to the documentation, the key goes in the request headers as x-api-key, alongside two other required headers: anthropic-version and content-type.

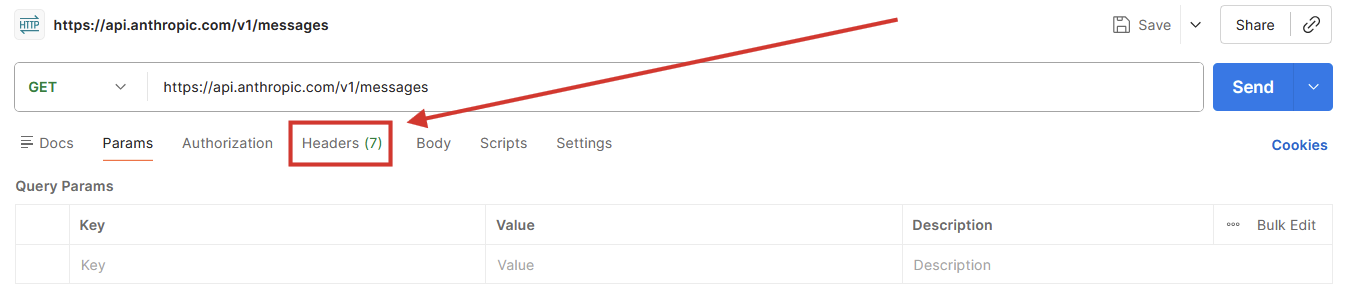

(7.1) In Postman, click the Headers tab.

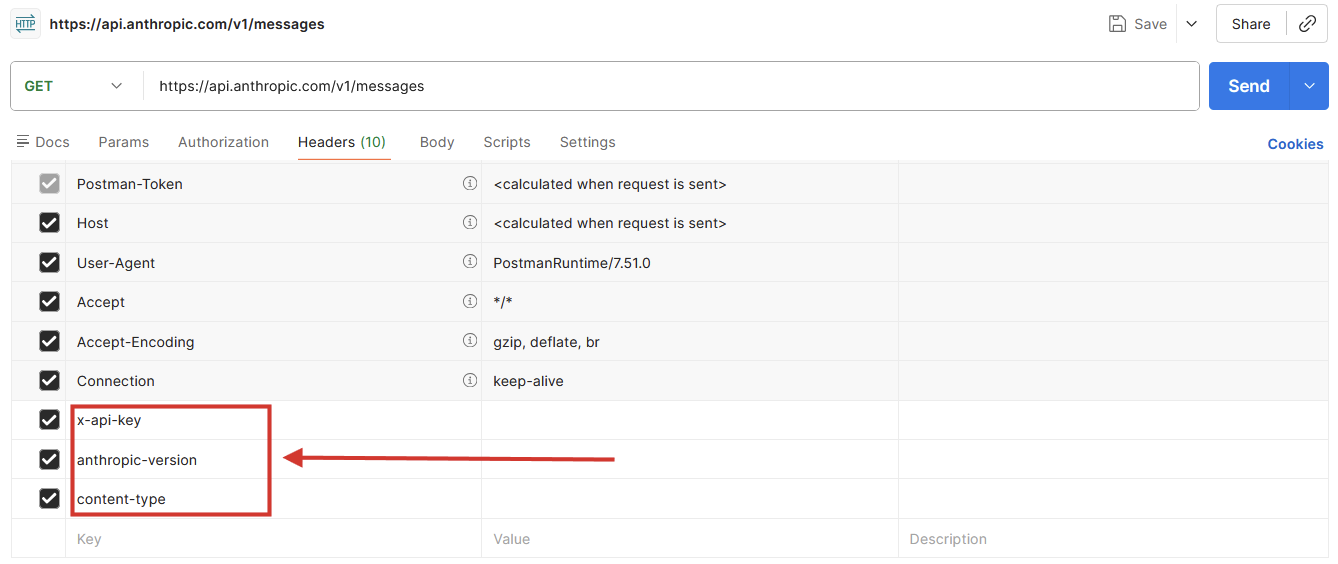

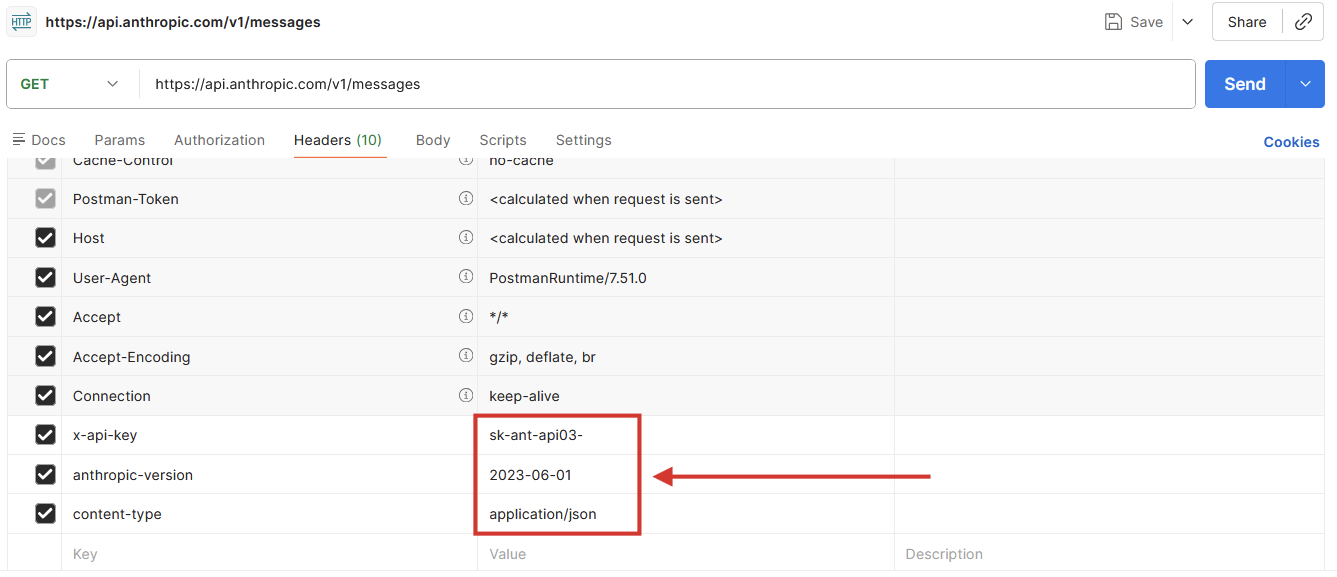

(7.2) At the end of the list, add three headers: x-api-key, anthropic-version, and content-type.

(7.3) Fill out the value of x-api-key with the API key you created earlier, anthropic-version with 2023-06-01 (or whichever new date you see in the Claude API documentation), and content-type with application/json.
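If you later move from Postman to code, the same three headers travel with every request. Here's a minimal Python sketch. The `build_headers` helper is a hypothetical name, and `"YOUR_API_KEY"` is a placeholder.

```python
def build_headers(api_key: str, api_version: str = "2023-06-01") -> dict:
    """Return the three headers the Messages API expects on every request."""
    return {
        "x-api-key": api_key,                # authenticates the request and bills your account
        "anthropic-version": api_version,    # API version date from the Claude API documentation
        "content-type": "application/json",  # tells the server the body is JSON
    }


headers = build_headers("YOUR_API_KEY")  # placeholder: load the real key from a safe place
```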

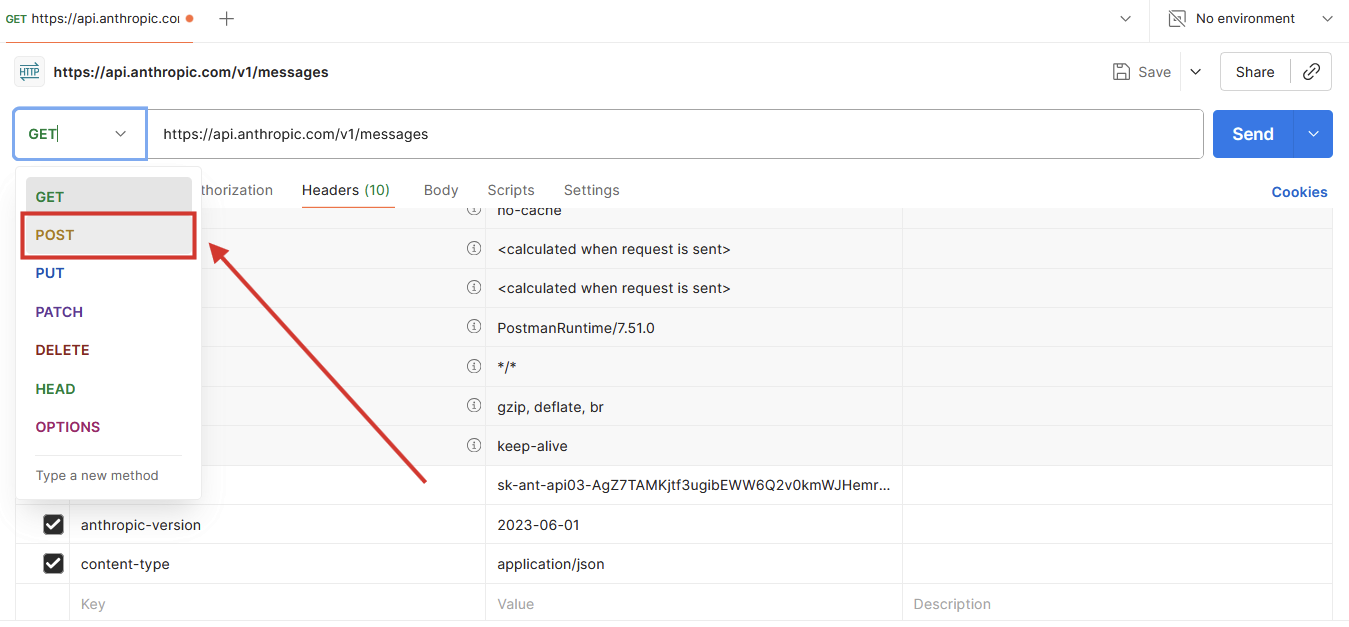

Step 8: Set up the HTTP method

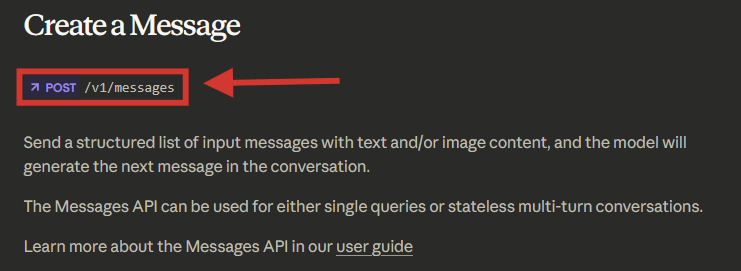

(8.1) Open the create a message API reference article if you haven't already. At the top of the article, notice:

The HTTP method: it's POST in this case.

The URL path after the method (/v1/messages). Different API features are served at different paths, so always make sure you're connecting to the right endpoint.

(8.2) In Postman, click the GET method and change it to POST.
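In code, the method and URL you just set in Postman map directly onto a request object. Here's a minimal sketch using Python's standard library. `API_URL` is a name chosen for illustration, and creating the object doesn't send anything yet.

```python
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"

# Creating a Request object doesn't contact the server; it only describes the call.
request = urllib.request.Request(API_URL, method="POST")
print(request.get_method(), request.full_url)  # prints POST https://api.anthropic.com/v1/messages
```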

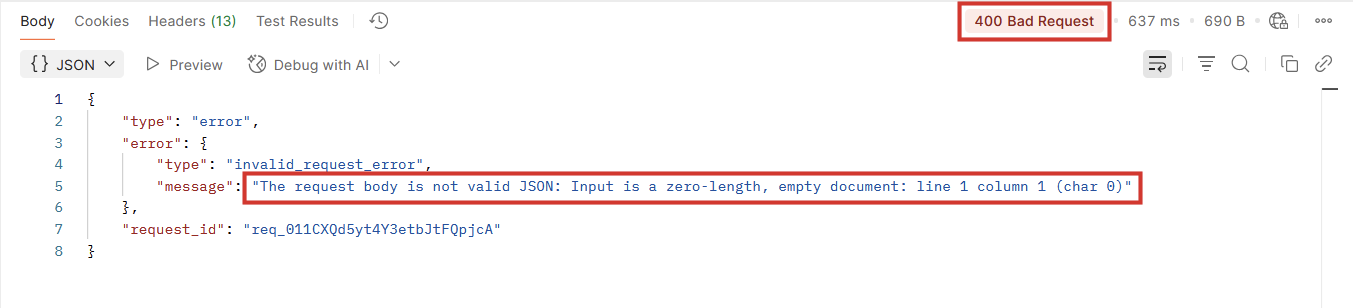

Step 9: Troubleshooting and learning through mistakes

Configuring a new API requires patience and troubleshooting skills. Struggling is normal: don't get discouraged if you see errors or if the systems don't behave as expected. Let's make a couple of intentional mistakes to understand what to look for when things go wrong—and how to get back on track.

(9.1) In Postman, click the Send button.

(9.2) The response appears at the bottom of the request interface. The Anthropic API returns an HTTP 400 Bad Request error, along with the message that it doesn't accept an empty request body. The API is right: we've only set up the endpoint and the authorization header, but not the body yet.

Reading errors and understanding them is vital to building successful API calls. If anything breaks:

Check all the syntax. Something small, like an extra or missing comma or bracket, can easily break a request.

Go back to the API documentation to find out the requirements for the call you're configuring.

Make sure to test each call when you make even a small change, so you can easily spot what's causing issues as you move forward.
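Once your calls run from code, you'll want to read errors the same way you do in Postman. Here's a minimal Python sketch. It assumes the error body has the shape `{"type": "error", "error": {"type": ..., "message": ...}}` described in the API's errors documentation. The `describe_error` helper and the sample message are hypothetical.

```python
import json


def describe_error(status: int, body: str) -> str:
    """Turn an API error response into a one-line, human-readable summary."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        # Not JSON (for example, a proxy or network error page): show the start of the raw text.
        return f"HTTP {status}: {body[:200]}"
    error = payload.get("error", {}) if isinstance(payload, dict) else {}
    if not isinstance(error, dict):
        error = {}
    return f"HTTP {status} {error.get('type', 'unknown_error')}: {error.get('message', '')}"


# An illustrative 400 body; the exact message text the API returns may differ.
sample = '{"type": "error", "error": {"type": "invalid_request_error", "message": "messages: field required"}}'
print(describe_error(400, sample))  # prints HTTP 400 invalid_request_error: messages: field required
```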

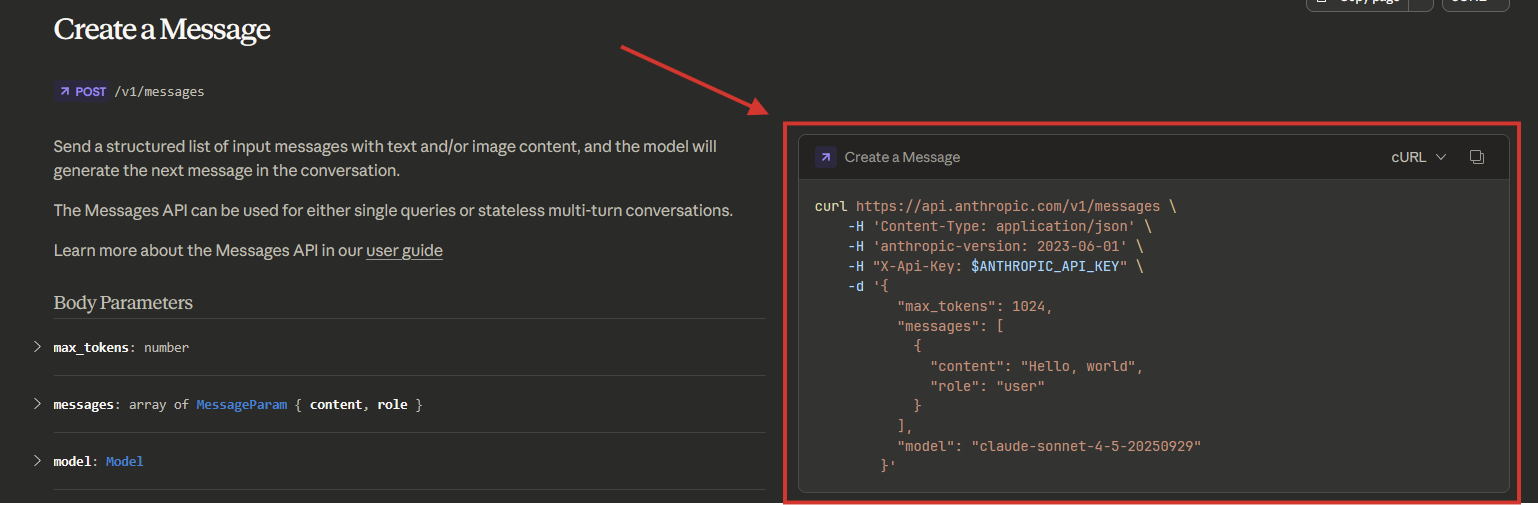

Step 10: Set up the request body

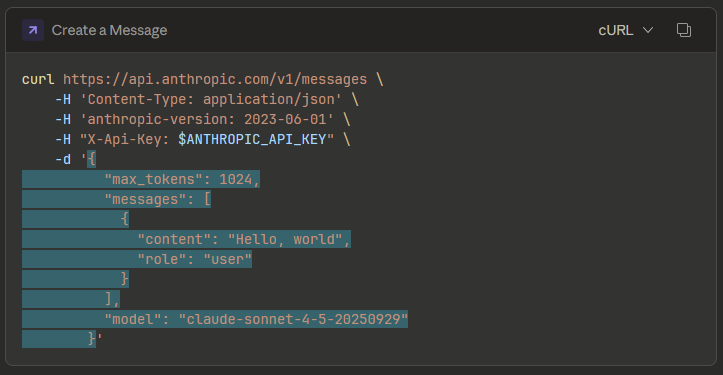

(10.1) Let's go back to the API reference for creating messages. Take a look at the box to the right of the introduction.

This request includes a cURL command for people who use a command line to interact with the API. We won't need it, since we're using Postman, but it's a compact view of everything the API expects:

```shell
curl https://api.anthropic.com/v1/messages \
     --header "x-api-key: $ANTHROPIC_API_KEY" \
     --header "anthropic-version: 2023-06-01" \
     --header "content-type: application/json" \
     -d '{
  "max_tokens": 1024,
  "messages": [
    {
      "content": "Hello, world",
      "role": "user"
    }
  ],
  "model": "claude-sonnet-4-5-20250929"
}'
```

Here's what each part means:

curl https://api.anthropic.com/v1/messages tells cURL to contact the endpoint URL. The backslash at the end of each line is a shell line continuation: it lets one command span several lines for readability and does nothing else.

The request passes three headers:

"x-api-key: $ANTHROPIC_API_KEY" handles authentication. $ANTHROPIC_API_KEY is a shell variable that holds your API key. We've already configured this in Postman.

"anthropic-version: 2023-06-01" states which version of the API the request is written for.

"content-type: application/json" declares the format of the request body so the server knows how to parse it.

Everything after the -d flag is the data of the request, also known as the body or payload:

"max_tokens": 1024 sets the maximum length of each response. Claude's reply won't exceed 1,024 tokens (roughly 768 words). If the reply hits that limit, it's cut off.

"messages" is a list of conversation turns. Each turn has a role and content.

"role": "user" labels this message as coming from the user.

"content": "Hello, world" is the actual text of the user's message.

"model": "claude-sonnet-4-5-20250929" selects the model you want to call. In this case, it's Claude Sonnet 4.5, in the snapshot dated September 29, 2025. You can browse the full model list in the Claude Docs and use the names in the row labeled "Claude API ID."

(10.2) Copy the entire request body JSON example without the -d flag or the single quotes at the beginning or end.

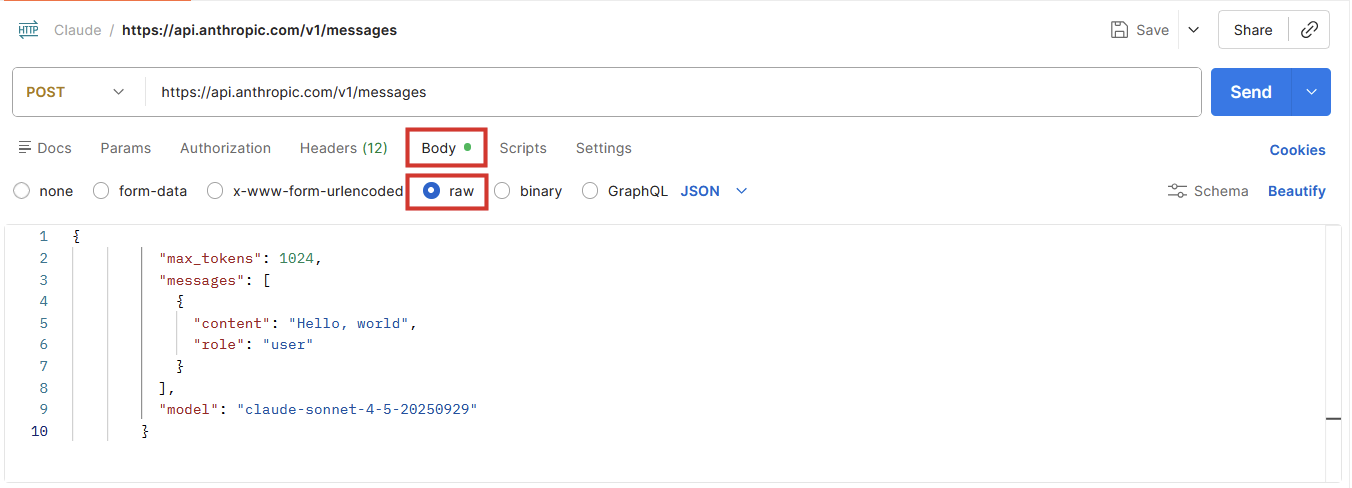

(10.3) Back in Postman, click Body followed by raw under the endpoint input field. Then, click on line 1 and paste the body there.
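If you build the body in code rather than pasting it, serializing a data structure avoids the stray-comma and missing-bracket errors from Step 9. Here's a minimal Python sketch of the same body as the cURL example. The variable names are illustrative.

```python
import json

# The same request body as the cURL example, built as a Python dict.
body = {
    "max_tokens": 1024,
    "messages": [
        {"content": "Hello, world", "role": "user"},
    ],
    "model": "claude-sonnet-4-5-20250929",
}

# json.dumps produces valid JSON, the same text you'd paste into Postman's raw body field.
payload = json.dumps(body, indent=2)
print(payload)
```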

Step 11: Call the AI model

Hey Claude, pick up the phone.

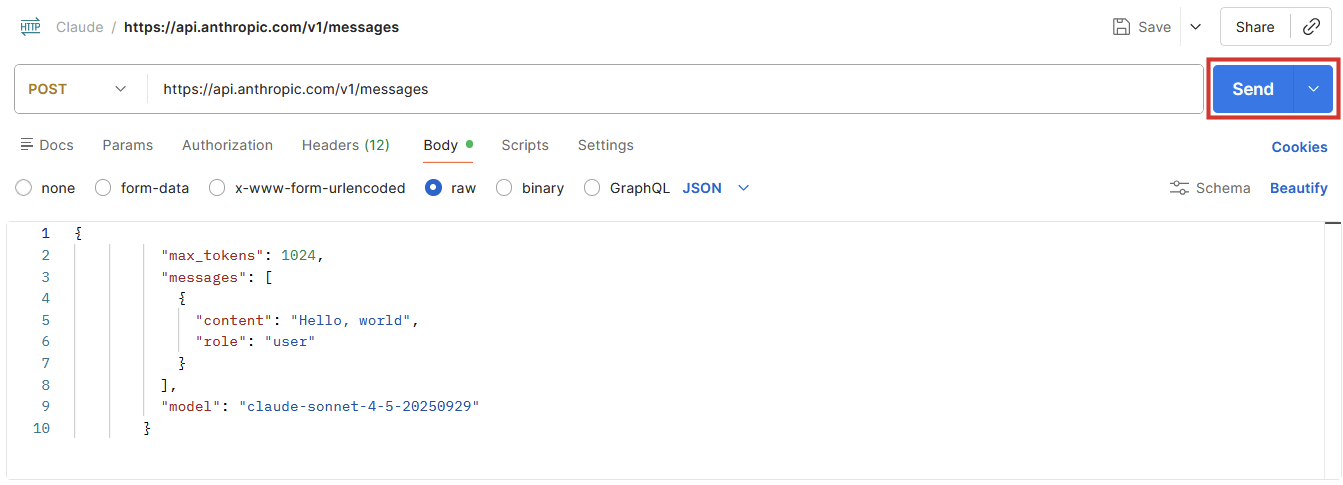

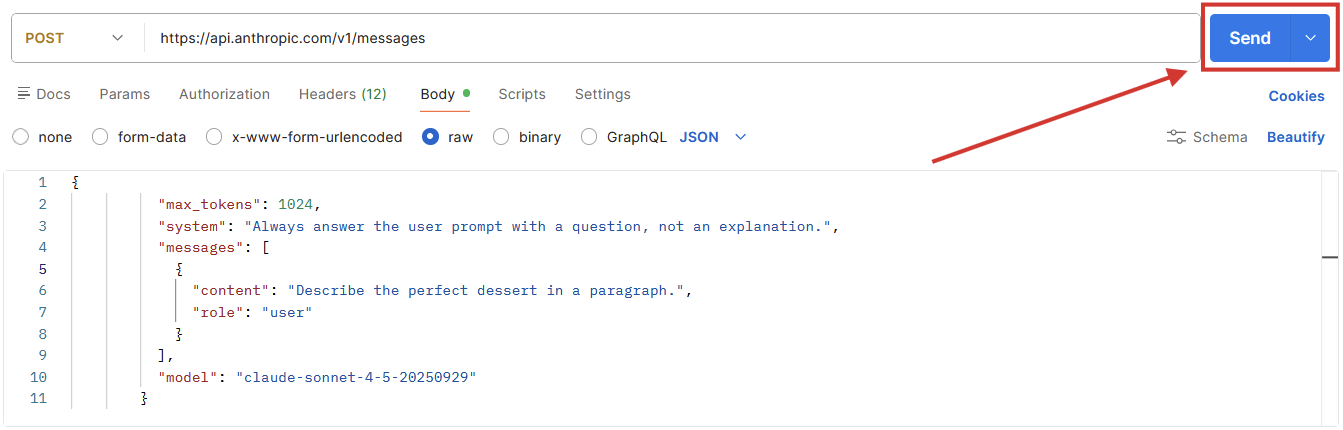

(11.1) Click the Send button, and see what happens.

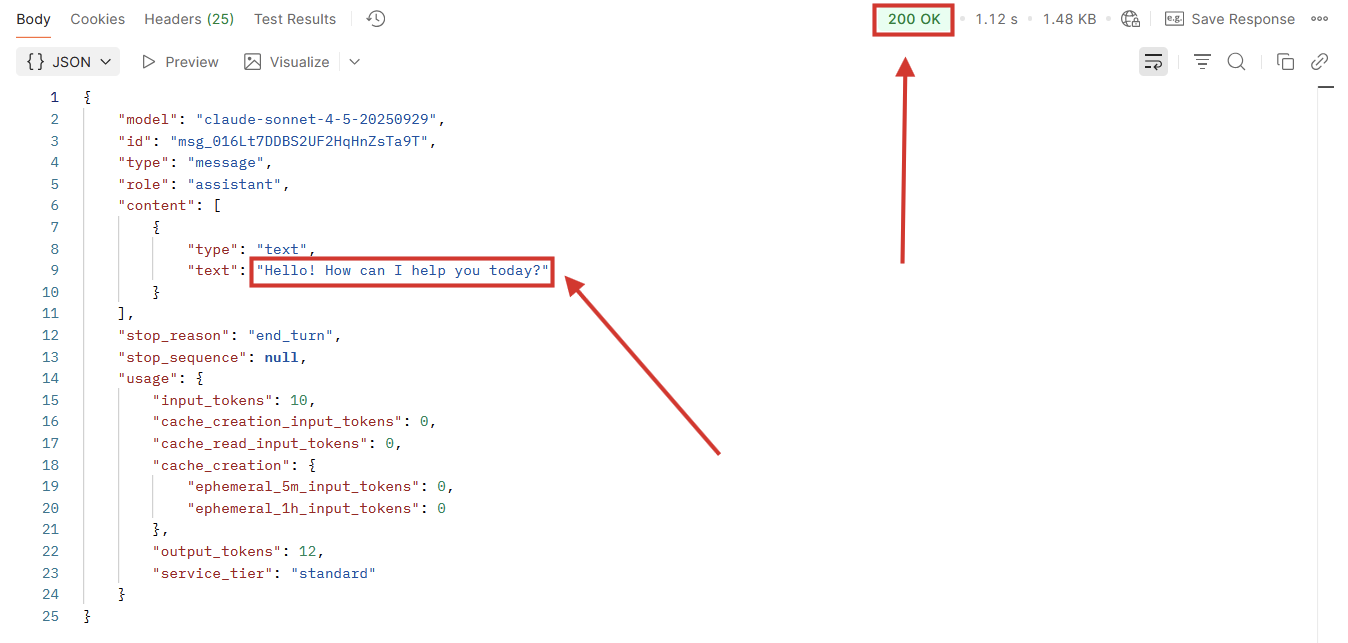

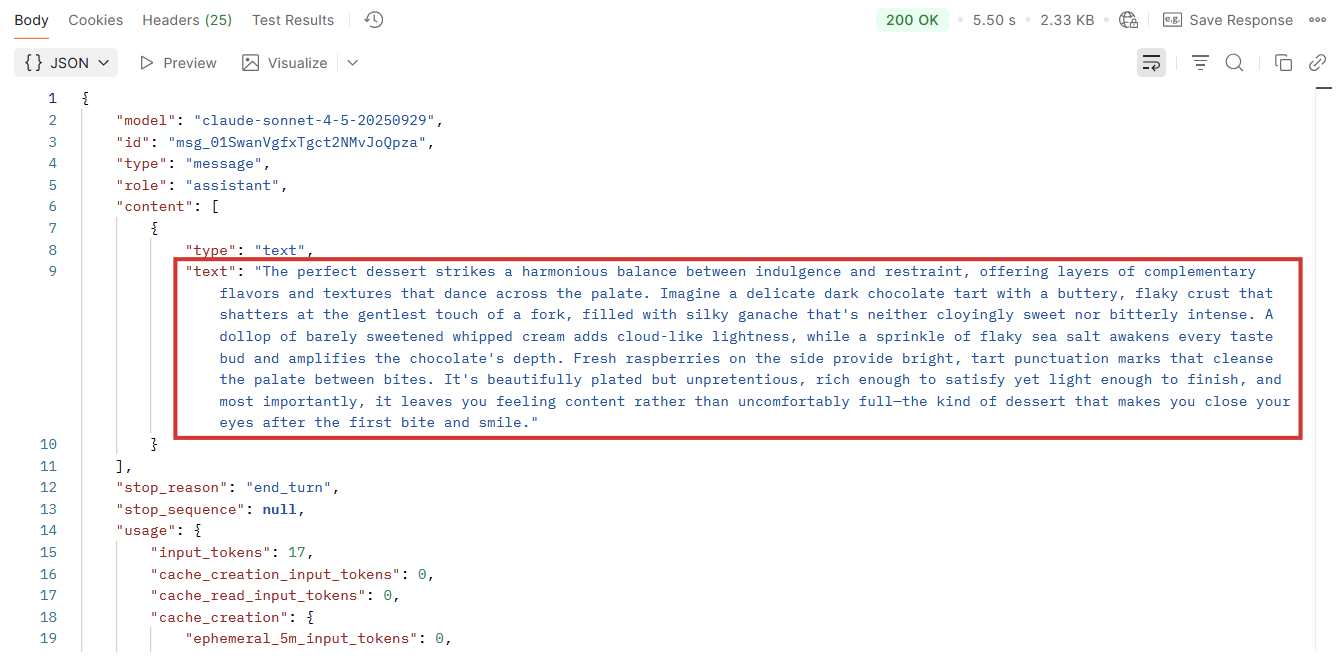

(11.2) If you followed all the instructions so far, you'll get an HTTP 200 OK status code, along with a response from Claude, both highlighted in the screenshot.

Note: Every time you click send and get a response from the Claude API, Anthropic will deduct the corresponding cost from your account credits based on the selected model's pricing.
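The response body tells you both what Claude said and how many tokens you were billed for. Here's a minimal Python sketch that pulls out those pieces. It assumes the Messages API response shape: a `content` list of blocks, where text blocks have `"type": "text"`, plus a `usage` object with `input_tokens` and `output_tokens`. The `summarize_response` helper and the sample values are hypothetical.

```python
import json


def summarize_response(raw: str) -> tuple[str, int, int]:
    """Return Claude's text plus the input and output token counts."""
    message = json.loads(raw)
    # "content" is a list of blocks; keep the text of every block whose type is "text".
    text = "".join(
        block["text"] for block in message.get("content", []) if block.get("type") == "text"
    )
    usage = message.get("usage", {})
    return text, usage.get("input_tokens", 0), usage.get("output_tokens", 0)


# An abbreviated, illustrative response; real responses include more fields, such as id and model.
sample = """{
  "type": "message",
  "role": "assistant",
  "content": [{"type": "text", "text": "Hello! How can I help you today?"}],
  "stop_reason": "end_turn",
  "usage": {"input_tokens": 10, "output_tokens": 12}
}"""
text, input_tokens, output_tokens = summarize_response(sample)
print(text)  # prints Hello! How can I help you today?
print(input_tokens, output_tokens)  # prints 10 12
```

Multiply the token counts by the per-million prices from the pricing table to see what each call costs.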

Step 12: Pass your prompts

The API call is ready, but it isn't useful if we keep sending "Hello, world." Let's explore how to change both the prompt we send to Claude and the system instructions that control how it responds.

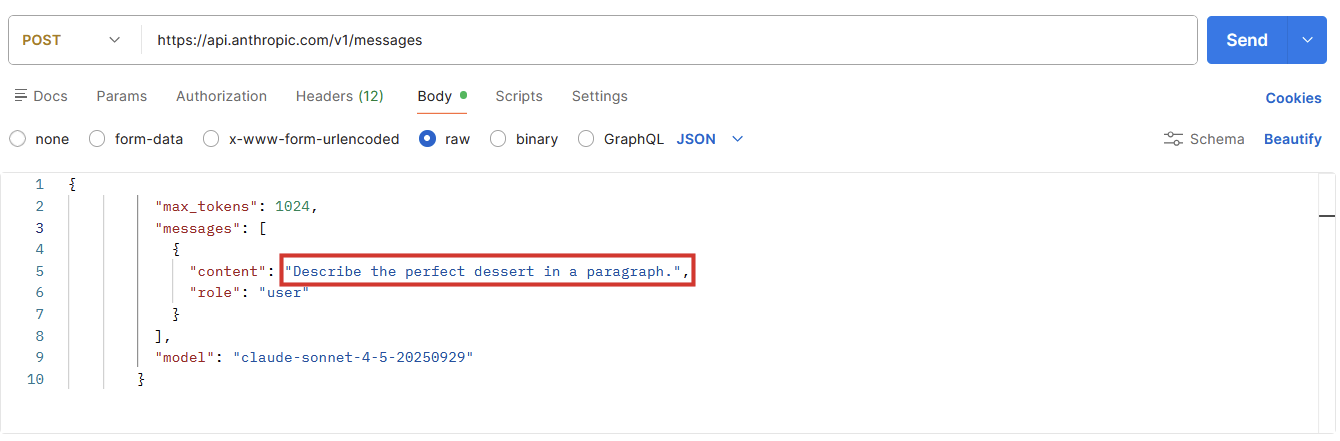

(12.1) In Postman, change the text of the content key to something different, such as "Describe the perfect dessert in a paragraph." Only change the text within the quotes: keep the remaining syntax exactly as it was.

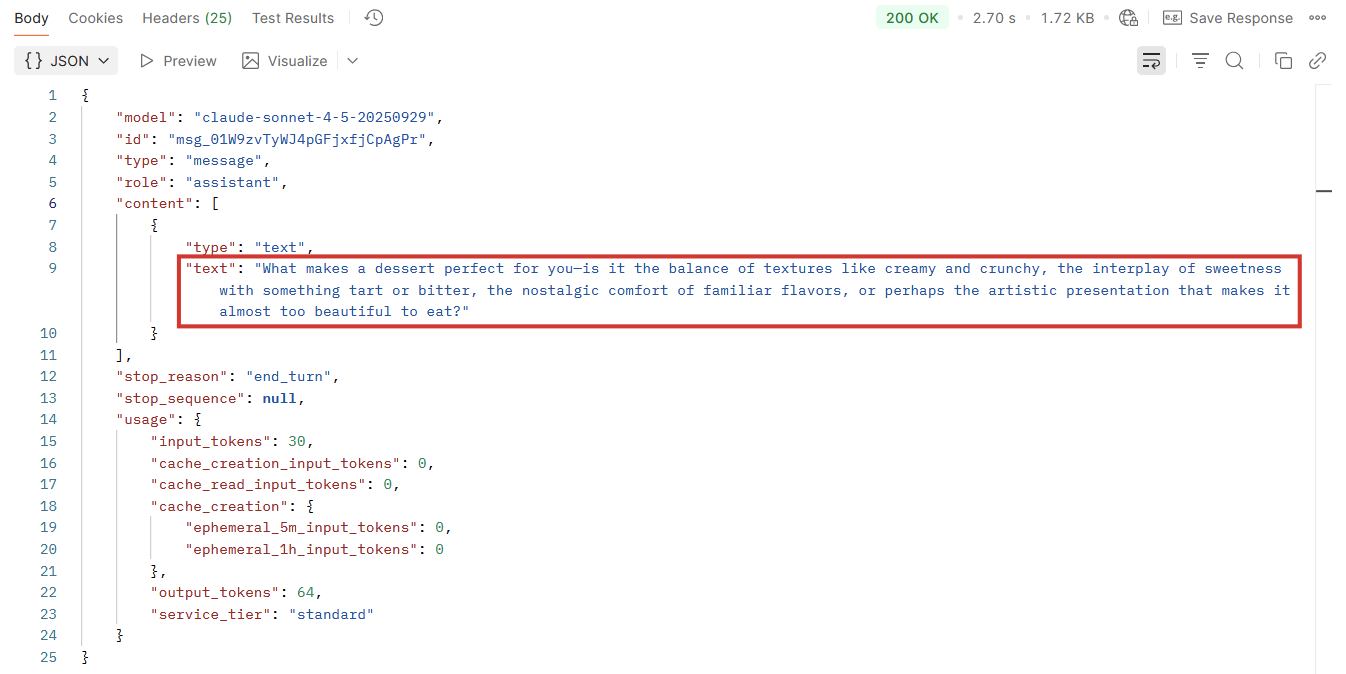

(12.2) Claude responds to the prompt in the response section below.

Tip: Changing the content of the user's message is the equivalent of writing a prompt and sending it to Claude via the Claude.ai website.

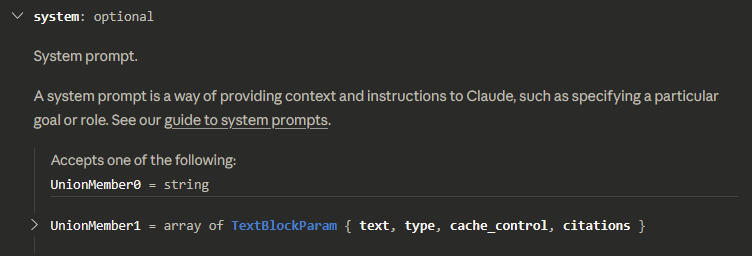

(12.3) Let's expand the API call with system instructions, telling the model how it should interpret user prompts. Keep reading the message API reference article, focusing on the body parameters section until you find the system parameter. Click the row to expand.

This snippet has important information:

system is the parameter's name. It's optional: the API won't return an error if it's missing, but including it lets us customize the output.

It accepts a string, meaning the value can be any combination of letters, numbers, or other characters enclosed in quotation marks.

The description explains how the parameter works. In this case, it links to an entire guide to system prompts. (Heads up: the examples on that page are written in Python, not JSON. If you can't work out the correct syntax, paste the example into ChatGPT and ask it to convert it into a JSON body.)

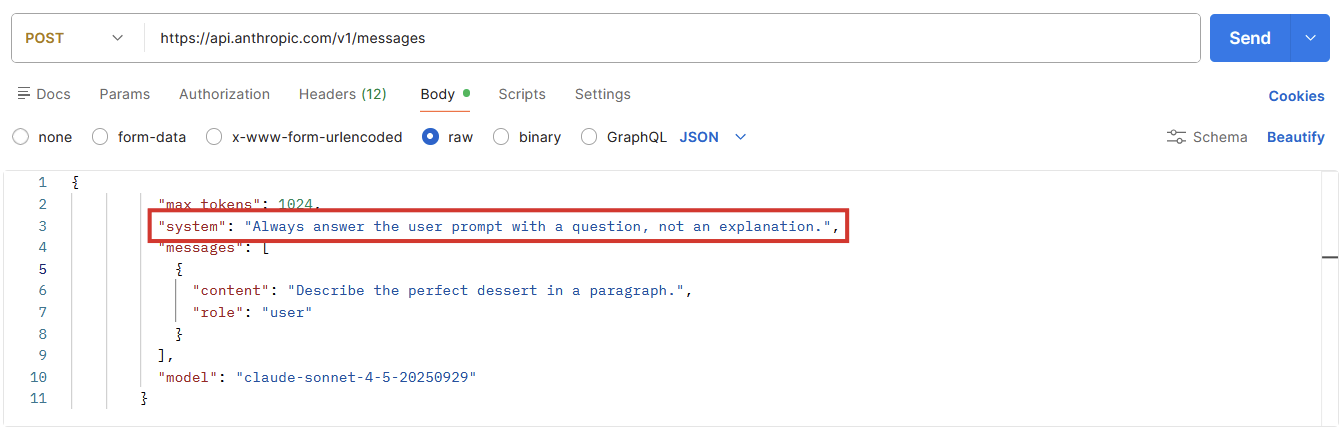

(12.4) In Postman, add the following parameter under max_tokens:

"system": "Always answer the user prompt with a question, not an explanation.",

Replace the text inside the quotes with your own system instructions. Be sure to check the syntax: the line must end with a comma after the closing quote.

(12.5) Click Send.

(12.6) The content of Claude's answer changes right after we introduce the system instructions, even when using the same user prompt.
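Here's the same request with system instructions, sketched as a Python dict, in case you're assembling bodies in code. One detail worth noticing: in the Messages API, system is a top-level field next to model and messages. It is not a message with a "system" role. The variable name is illustrative.

```python
import json

body = {
    "model": "claude-sonnet-4-5-20250929",
    "max_tokens": 1024,
    # Optional top-level string that steers how Claude answers every user prompt.
    "system": "Always answer the user prompt with a question, not an explanation.",
    "messages": [
        {"role": "user", "content": "Describe the perfect dessert in a paragraph."},
    ],
}
print(json.dumps(body, indent=2))
```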

Step 13: Customize the response

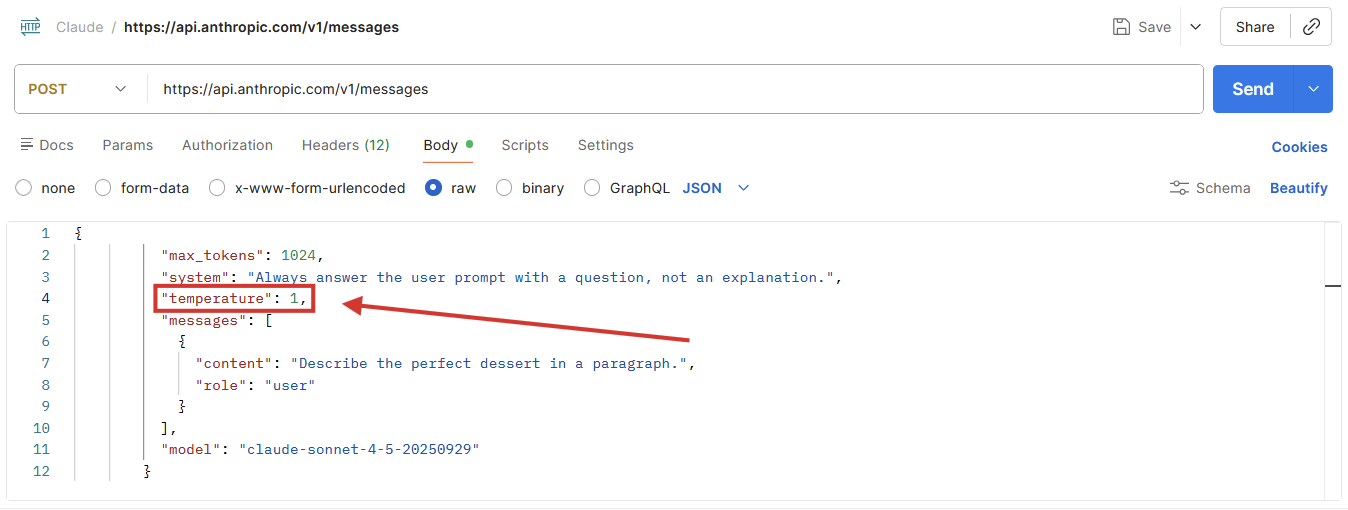

Adding other parameters to the call to customize the response follows a similar process to the previous step.

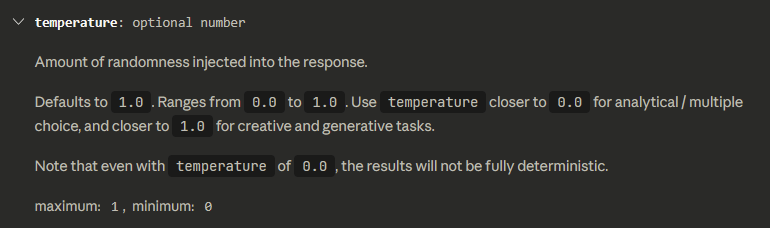

(13.1) Learn more about the temperature parameter in the create a message API reference page.

(13.2) Add temperature to the API call, right under system. Remember to enclose the parameter name inside quotes, but don't do the same for the number. Finish the line with a comma.

Example: "temperature": 1,

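(13.3) Click the Send button a few times to see how the response changes. Temperature ranges from 0.0 to 1.0, and 1 is both the maximum and the default. For a clearer comparison, also try a few runs with "temperature": 0, which makes responses more consistent from one run to the next.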
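If you generate request bodies in code, it helps to check the temperature value before sending, because the API rejects out-of-range values. Here's a minimal Python sketch. It assumes the documented 0.0 to 1.0 range, and `with_temperature` is a hypothetical helper name.

```python
def with_temperature(body: dict, temperature: float) -> dict:
    """Return a copy of a request body with temperature set, rejecting out-of-range values."""
    # The Messages API documents temperature as a number from 0.0 to 1.0 (default 1.0).
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0.0 and 1.0")
    return {**body, "temperature": temperature}


base = {
    "model": "claude-sonnet-4-5-20250929",
    "max_tokens": 1024,
    "messages": [{"role": "user", "content": "Describe the perfect dessert in a paragraph."}],
}
focused = with_temperature(base, 0.0)  # more consistent wording from run to run
varied = with_temperature(base, 1.0)   # more variation between runs
```

Returning a copy leaves `base` untouched, so you can compare several settings against the same template.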

Step 14: Design new features with the Claude API

Postman is a good place to try out what an API can do and to build calls that are ready to integrate into your apps. You can keep experimenting by adding more body parameters, adjusting values, and exploring what responses you get.

Over time, you'll find that the API call you're working on is the perfect solution for your use case—for example, it writes a good response to an email message that you pass as an input. When that happens, save the call in Postman, create a new one, and keep working on the next problem.

While Postman acts as a platform to design the call before integrating it, the Claude Workbench is better for prompt engineering and optimizing outputs. Its user interface exposes the core controls of the API visually, so you can focus on how the responses change when you adjust the settings. It also includes evaluation tools to help you judge how well an output solves a task, so you can launch a new feature with confidence.

Step 15: From testing to production: Integrate Claude into your apps

Once you have all your API calls and prompts set up the way you want to, it's time to integrate them into your app or internal tool. This will let you:

Pass dynamic values via variables. You can build a user interface to replace any value of the API call, such as changing the user prompt, the system instructions, or temperature.

Bind the API calls to buttons, database operations, visual elements, or to triggers such as "when a page is loaded." This is the most visible part of integrating the Claude API into your app, as you'll be able to see information generated by Claude inside the user interface you built.
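For a custom integration, the Postman call you designed becomes a template whose values come from your UI. Here's a minimal Python sketch using only the standard library. The `ask_claude` and `post_json` names are hypothetical. The `send` parameter lets you swap in a fake transport for testing, and the key is read from the `ANTHROPIC_API_KEY` environment variable.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def post_json(url: str, headers: dict, body: dict) -> dict:
    """Send a JSON POST request and return the decoded JSON response."""
    request = urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses, such as a 400 or 401.
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))


def ask_claude(prompt, system=None, temperature=None,
               model="claude-sonnet-4-5-20250929", send=post_json):
    """Fill the request template with values from your UI, then return Claude's text."""
    body = {"model": model, "max_tokens": 1024,
            "messages": [{"role": "user", "content": prompt}]}
    if system is not None:
        body["system"] = system            # optional system instructions
    if temperature is not None:
        body["temperature"] = temperature  # optional, 0.0 to 1.0
    headers = {
        "x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    message = send(API_URL, headers, body)
    return "".join(b["text"] for b in message["content"] if b.get("type") == "text")
```

A button handler in your app might then call `ask_claude(text_box_value, system=..., temperature=...)` and display the returned text. Only the values change between calls; the structure stays the one you tested in Postman.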

To complete this integration process, refer to the documentation for your no-code/low-code platform or internal tool builder. These tools usually have external API connectors that you can use to make calls from within the platform.

Empower your apps with the Claude API

Setting up your first API call takes patience and persistence, but it quickly becomes second nature when you grasp the basics. Once you get past the initial learning curve, you'll get an intuitive sense of how parameter values change responses, and how you can integrate them into your product or tool.

And if you want to skip the whole process, stick with Zapier and connect Claude to all the apps you use with no code.


This article was originally published in July 2024. The most recent update was in February 2026.