Generative AI has come a long way from the early days of nightmarish images and alien-ish misspellings. I have to drop my favorite examples from one of the early-days ChatGPT Facebook groups I joined.

(Sorry.)

AI image models have gotten a lot more impressive since then, and Google's latest, Gemini 2.5 Flash Image (also known as nano-banana because AI people), is now making waves. It solves a lot of the problems that have dogged AI image generation up until this point, and it's just super fun to play around with.

What is nano banana?

Nano-banana (officially, Gemini 2.5 Flash Image) is Google's latest AI image generation and editing model, specializing in photorealism and character consistency.

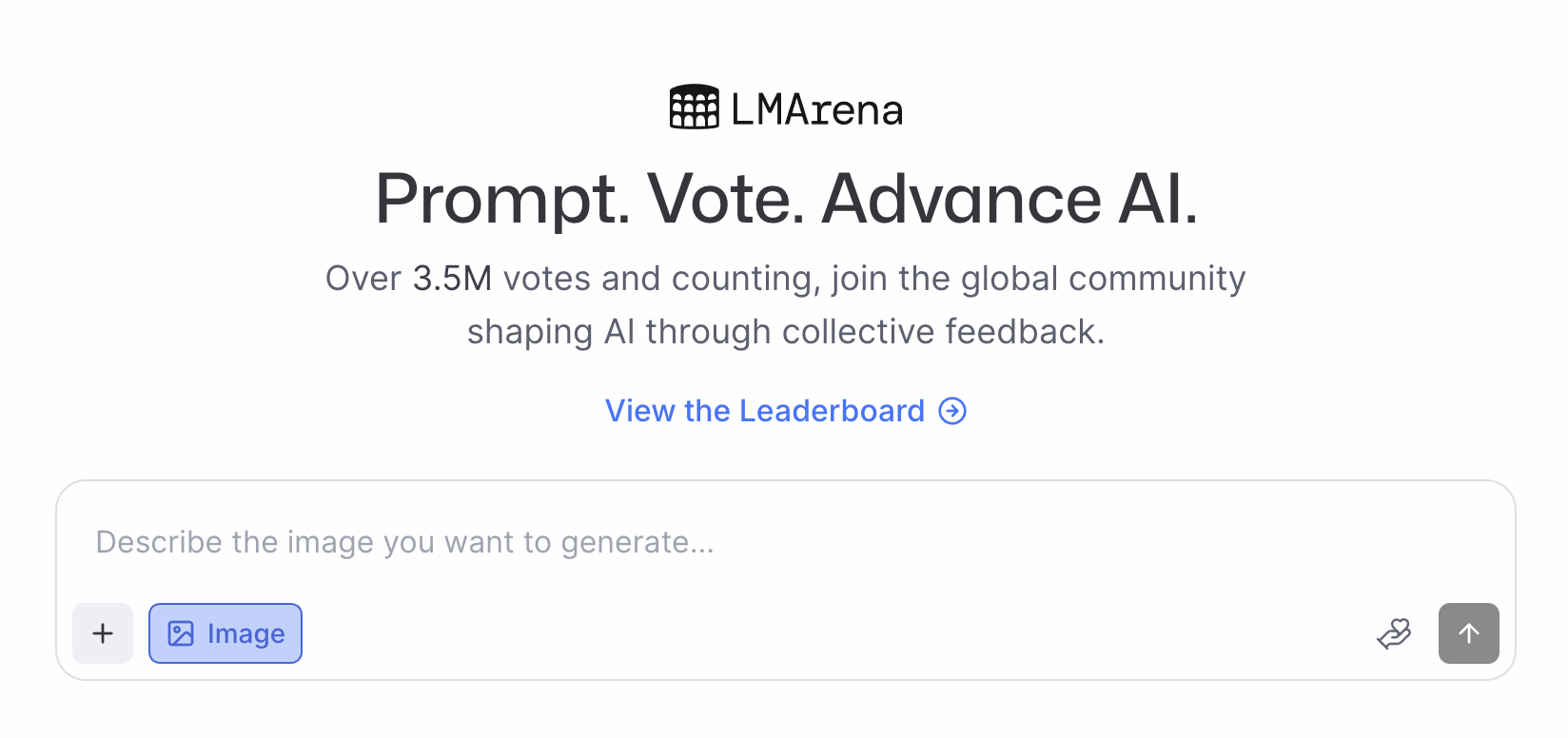

It had some stealth beginnings, which ramped up the hype. At first, it was only accessible via LMArena, a tool for testing the performance of top AI models against each other in anonymous "battles"—only revealing competing models' names after users vote on which result did a better job of addressing their query.

As users started to get excited by what they were seeing in LMArena, Google employees began acknowledging their claim to nano-banana with sneaky tweets featuring banana imagery.

https://t.co/C3fD2Evdyj pic.twitter.com/1zAgALY6od

— Simon (@tokumin) August 21, 2025

After getting everyone talking and keeping them guessing, on August 26, 2025, Google finally revealed its ties to the model.

Our image editing model is now rolling out in @Geminiapp - and yes, it’s 🍌🍌. Top of @lmarena’s image edit leaderboard, it’s especially good at maintaining likeness across different contexts. Check out a few of my dog Jeffree in honor of International Dog Day - though don’t let… pic.twitter.com/8Y45DawZBc

— Sundar Pichai (@sundarpichai) August 26, 2025

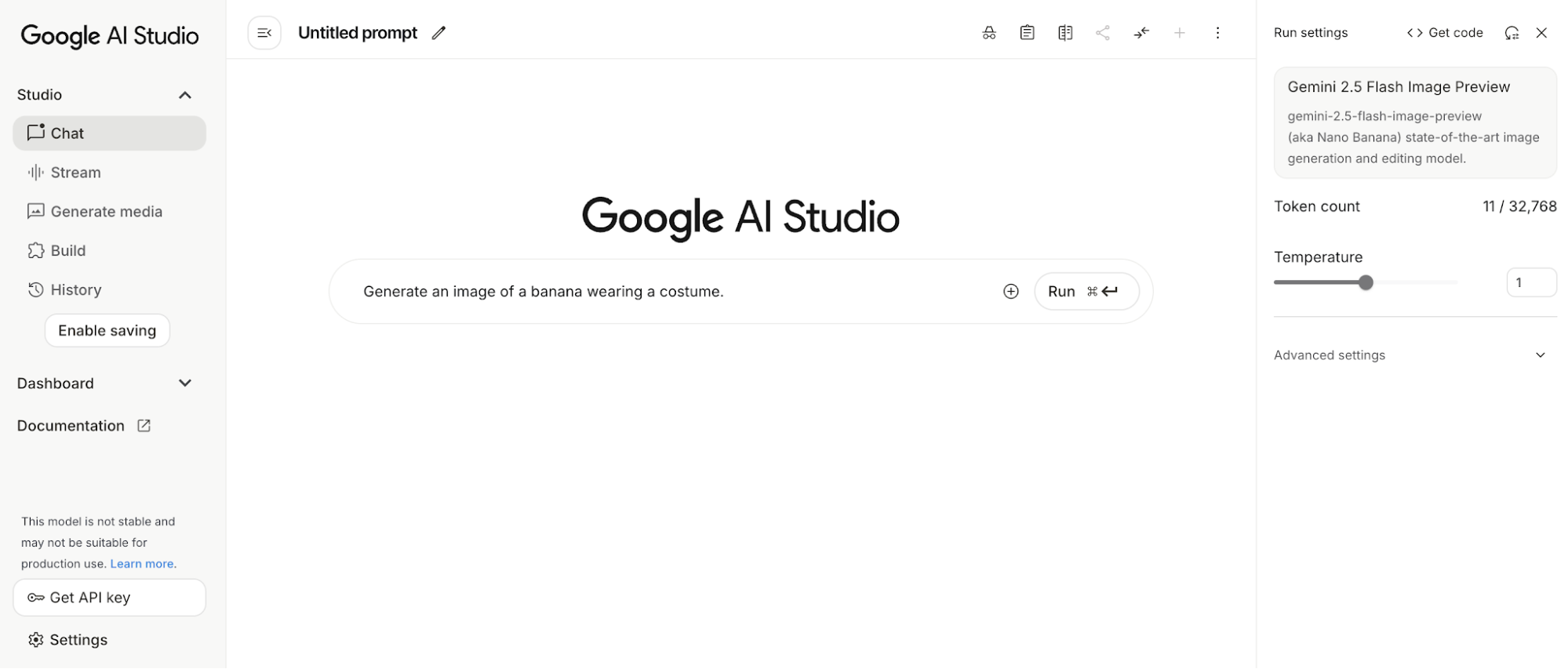

The nano-banana launch kicked off with a livestream, showcasing how to use it in Google AI Studio.

Where can you access nano banana?

As part of the announcement, Google granted more reliable access to nano-banana outside of chance encounters in LMArena.

Now, users can try it for themselves in Google products, including Gemini, Vertex AI, and AI Studio; you can also grab it via API access.

Other AI tools have begun integrating it into their offerings, too: OpenRouter uses it as its sole AI image generation model, and even Adobe Express and Firefly have integrated it. And since Zapier integrates with Google AI Studio and OpenRouter, you can integrate Gemini 2.5 Flash Image capabilities into all your workflows.

Beware of lookalikes trying to capitalize on the buzz, especially those that came out prior to Google laying official claims to nano-banana. For example, nanobanana.ai is not currently recognized by Google.

Why people are losing their minds over nano banana

So why is there so much buzz about nano-banana? After all, it's just one of many attempts by AI companies to figure out image generation.

For starters, it's new. Everyone loves a shiny new AI model.

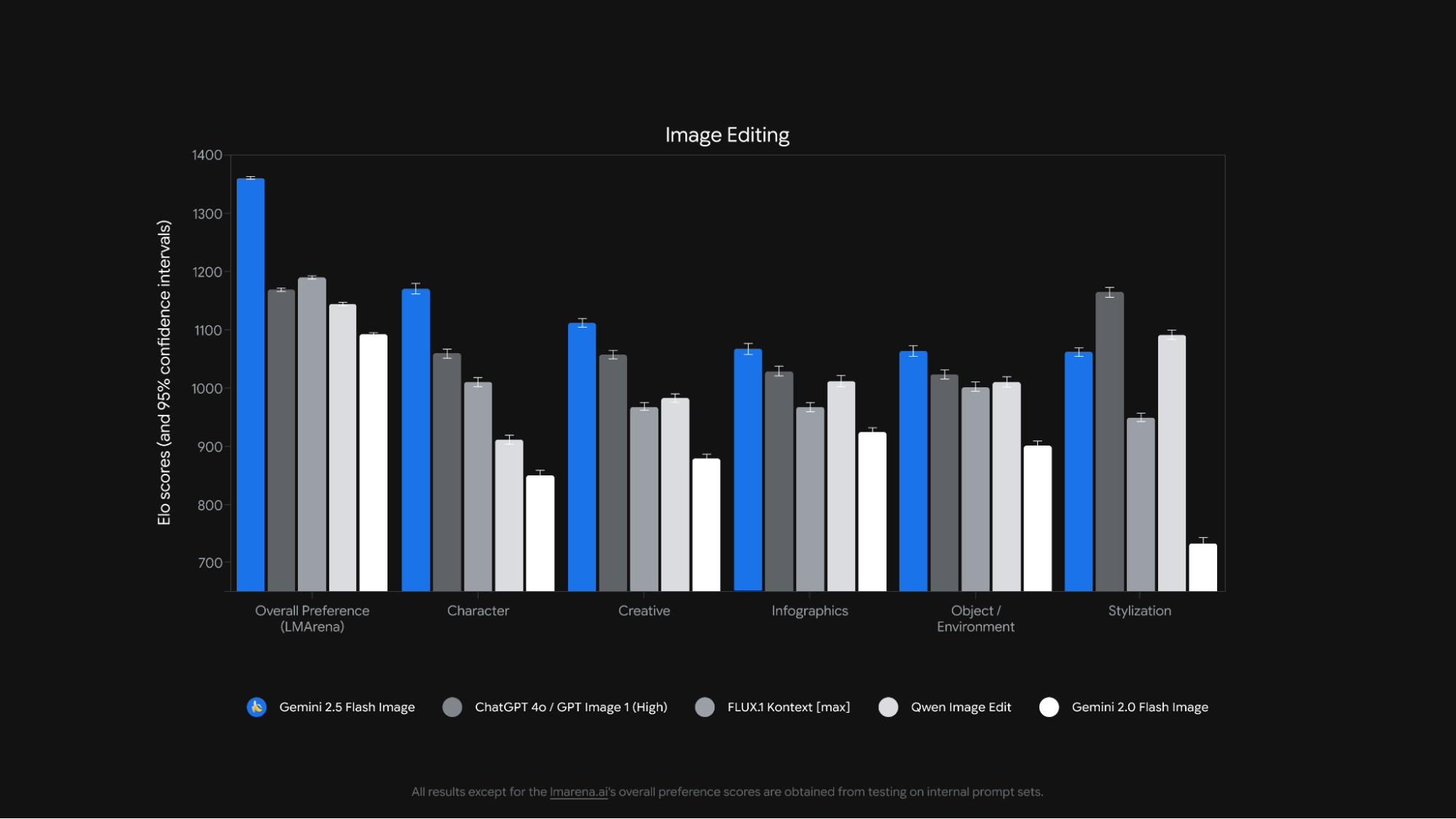

But it's more than that: it's consistently outperforming competitors on most major image generation benchmarks.

Early reviews are calling it a "Photoshop killer" because of users' ability to make image edits using natural language, rather than requiring mastery of a complex software interface. It's creating a new level of AI editing accessibility for us non-graphic designers.

Another powerful feature is its ability to maintain character and narrative consistency. Usually, when you play around with AI generation, creating consistency between responses (image or text) feels nearly impossible. That's because generative AI is nondeterministic: it can produce different outputs from the same input.

You can test Gemini 2.5 Flash's character consistency for yourself with the Past Forward template app.

Gemini 2.5 Flash Image also lets you seamlessly weave together elements from multiple images into one final generated image. Google Veo 3 (for video generation) has a similar feature called Ingredients to Video, but it's only available in the higher-priced Google AI Ultra plan. With nano-banana, the comparable feature for image generation isn't limited to a specific paid plan.

Test Gemini 2.5 Flash's ability to combine multiple elements in one image for yourself with the Home Canvas template app.

Finally, my tests, as well as those by other early users, produced solid results from the initial prompts, without the endless rounds of revisions that have seemingly become typical of AI image generation.

Of course, you still have to know how to effectively direct AI tools and write descriptive prompts to get solid results. I hesitate to call it a graphic design industry killer. It's just giving people (like generalist marketers) more tools to do simple things for themselves.

Nano banana pricing

Using Gemini 2.5 Flash Image via the API costs approximately $0.04 per generated image.
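To get a feel for what that per-image price means for a batch job, here's a rough back-of-the-envelope sketch. It assumes a flat rate of about $0.04 per image, as quoted above; the function names are hypothetical, and actual billing may differ, so treat the numbers as estimates only.

```python
# Rough cost estimate, assuming a flat ~$0.04 per generated image.
# The rate comes from the approximate figure quoted in this article;
# real billing may differ.
PRICE_PER_IMAGE_CENTS = 4


def estimate_cost_usd(num_images: int) -> float:
    """Return the approximate API cost in USD for num_images generations."""
    if num_images < 0:
        raise ValueError("num_images must be non-negative")
    # Work in whole cents to avoid floating-point drift.
    return (num_images * PRICE_PER_IMAGE_CENTS) / 100


def images_within_budget(budget_usd: float) -> int:
    """Return how many images fit in a budget at the assumed rate."""
    if budget_usd < 0:
        raise ValueError("budget_usd must be non-negative")
    budget_cents = round(budget_usd * 100)
    return budget_cents // PRICE_PER_IMAGE_CENTS


if __name__ == "__main__":
    print(estimate_cost_usd(250))      # cost of a 250-image batch
    print(images_within_budget(5.00))  # images you could generate for $5
```

At this assumed rate, a few hundred product mockups would cost about as much as a fancy coffee or two.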

I have the Google AI Pro plan ($19.99/month): it offers higher Google Drive storage limits (2 TB) and expanded access to AI features like this.

Credits are still a little fuzzy, but it seems like with Google AI Pro, you get 100 daily queries for the Gemini 2.5 Pro model, and specialized Google tools like Flow and Whisk have their own usage limits. There isn't necessarily a hard limit on tokens you can use in a month, but rather a "fair usage policy" with a daily query limit.

Can you use nano banana for free?

There is a free version of Google AI Studio you can use to access Gemini 2.5 Flash on a limited basis without a paid account.

Of course, the other free way to use nano-banana is by taking your chances of it coming up in your anonymous battles on LMArena.

How to create your first image with nano banana

Using nano-banana is simple: access Gemini 2.5 Flash in a connected Google product or external AI tool (I'm using Google AI Studio). Then, describe what you want to generate or upload an image or multiple images for editing. That's it.
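If you'd rather go the API route than use the AI Studio interface, the same "describe it, optionally upload an image" flow can be sketched as a plain HTTP request. This is a minimal sketch, not an official client: the model ID, endpoint URL, and response field names are assumptions based on the public Gemini API's `generateContent` format and may have changed, so check Google's current docs before relying on them. The helper names (`build_request`, `extract_images`, `send_request`) are hypothetical.

```python
import base64
import json
import urllib.request
from typing import List, Optional

# Assumptions: model ID and endpoint may differ from what Google currently serves.
MODEL_ID = "gemini-2.5-flash-image-preview"
API_URL = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    f"{MODEL_ID}:generateContent"
)


def build_request(prompt: str, image_bytes: Optional[bytes] = None,
                  mime_type: str = "image/png") -> dict:
    """Build a request body: a text prompt plus an optional image to edit."""
    parts = [{"text": prompt}]
    if image_bytes is not None:
        encoded = base64.b64encode(image_bytes).decode("ascii")
        parts.append({"inline_data": {"mime_type": mime_type, "data": encoded}})
    return {"contents": [{"parts": parts}]}


def extract_images(response: dict) -> List[bytes]:
    """Collect decoded image bytes from a response, skipping text parts."""
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            # Accept either key spelling, since JSON responses may use camelCase.
            blob = part.get("inlineData") or part.get("inline_data")
            if blob and "data" in blob:
                images.append(base64.b64decode(blob["data"]))
    return images


def send_request(payload: dict, api_key: str) -> dict:
    """POST the payload to the API (requires a valid key and network access)."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

To try it, you'd read a photo's bytes, call `build_request("Colorize this photo", photo_bytes)`, pass the result to `send_request` with your own API key, and write whatever `extract_images` returns to disk.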

Here are a few ways I had nano-banana edit my photos, and the results.

(Spoiler: things get a little weird as complex requests kick in.)

Test 1: I gave it an old family photo of my mom and her 9 (!) siblings and asked it to colorize it. The results were pretty sharp.

Then, I tried to push it to the limits:

I asked it to change the clothing color of any girl wearing yellow or tan to neon green. It missed one person and changed another person that I wasn't expecting it to change.

I asked it to change the lion painting to an elephant painting in the same style. It did a decent job.

I asked it to give everyone Mickey ears, but it missed the youngest kids.

Here's the end result with all of these changes:

Test 2: I had it make slight updates to a professional photo of myself, starting with changing my hair color to red:

I made a few more changes:

I asked it to add an alien walking in the background, like the one from Signs.

Then I asked it to move my top hand so the palm was facing up and holding a potato.

Gemini 2.5 Flash is still a little wonky (see: my three hands). It doesn't magically erase the fundamental issues with AI image generation, though it greatly improves upon them.

When I asked it to remove the background from my headshot, it came up with this wonky and inconsistent tiled mess, not an actual transparent image.

So, though many call it a Photoshop killer, it's not quite there. Despite its limitations, here are some more exciting examples to inspire you, via Google:

Our new native image generation and editing is state-of-the-art, and ranked #1 in the world. And we're rolling it out for free to everyone today.

You’ve got the tools. Now go bananas. Ideas & inspiration in the 🧵below. pic.twitter.com/mw7XyG5nes

— Google Gemini App (@GeminiApp) August 26, 2025

There really are so many potential use cases—here are some ideas (with specific examples curated by @markgadala):

eCommerce teams staging product photos in different situations and different backgrounds.

I’ve been testing nano-banana on my own designs with different lights, settings and styles and this tool feels like a game-changer for brand designers!

Here are 10 examples based on my projects

+ pros & cons pic.twitter.com/U7kv2cP4qW

— Daria_Surkova (@Dari_Designs) August 18, 2025

Social media content adjusted slightly for different channels and campaigns.

Gaming and video scenes: consistent character portraits and settings.

And just like that, a movie is made. All this needs now is motion. And then it'll be a Motion Picture, literally. nano-banana is next level. One shot, endless angles. It didn't just capture the scene; it captured the vibe and tone as well, even the colors. Insane model. pic.twitter.com/xDt7tCs4Pp

— WuxIA Rocks (@WuxiaRocks) August 19, 2025

Educational visuals for students with better accuracy that require fewer edits than other popular models.

Add nano banana directly to your workflows

One of the best parts about nano-banana isn't just playing around with it in Google AI Studio—it's bringing that same creative power directly into your day-to-day work. With Zapier, you can orchestrate nano-banana alongside all the other tools you already use, no manual copy-pasting required.

Because Zapier integrates with both OpenRouter and Google AI Studio, you can automatically pass prompts or data from your favorite apps straight into Gemini 2.5 Flash. That means you could generate product mockups when new items are added to your Shopify store, auto-edit headshots or team images whenever they're uploaded to Google Drive, or spin up on-brand visuals for marketing campaigns as soon as a new blog post is published in your CMS.

You can layer in logic, filters, and formatting so that nano-banana becomes just one step in a larger, fully automated creative process. If you're just starting to experiment, this is a low-effort way to test the waters and see how image generation can fit into your team's existing processes.

Learn more about how to automate Google AI Studio, or get started with one of these pre-made workflows.

Generate draft responses to new Gmail emails with Google AI Studio (Gemini)

Create a Slack assistant with Google AI Studio (Gemini)

Generate responses in OpenRouter for new emails in Gmail

Generate responses in OpenRouter from completed chatbot conversations in Zapier Chatbots

Zapier is the most connected AI orchestration platform—integrating with thousands of apps from partners like Google, Salesforce, and Microsoft. Use forms, data tables, and logic to build secure, automated, AI-powered systems for your business-critical workflows across your organization's technology stack. Learn more.

Should you use nano banana?

I personally haven't put much stock in AI image generation up until this point for many reasons (see: deranged dog breeds).

Part of this comes down to my personal feelings about it. I'm not confident that we can use image models trained on other people's creations in commercial projects without issues. And although Google integrated SynthID watermarking into Gemini 2.5 Flash to promote responsible and transparent use and help prevent misuse, I don't want to put myself in a position now that could get me or my clients in trouble in the future, or misuse the work of creators who aren't being fairly compensated for their inputs.

But what I can absolutely get behind is a tool like nano-banana (fine, Gemini 2.5 Flash Image), which empowers users to rework their own content in ways that previously required tools like Photoshop. This is a smart use and evolution of AI, and I, for one, am excited about the possibilities.
