Telling employees you're "all in" on AI is one thing. Knowing whether it's actually being used—and creating impact—is another. If you don't track adoption, you risk falling into the trap of vanity wins: a few flashy pilot projects that never make their way into day-to-day work.

To avoid that, you need clear, consistent ways to measure AI adoption. That means tracking both how widely employees are using AI and how deeply it's embedded into workflows across teams so you can be sure you're not mistaking hype for impact.

Here are four practical ways to measure AI adoption across your organization.

1. Percentage of active employee usage

One of the easiest and most telling ways to measure AI adoption is to look at the percentage of employees actively using AI tools to help them work faster and better. If the number's high, you know AI has officially made it out of the fun side project category and into actual day-to-day work. If it's low, you may still be in the phase where everyone thinks it's cute that ChatGPT can write haikus.

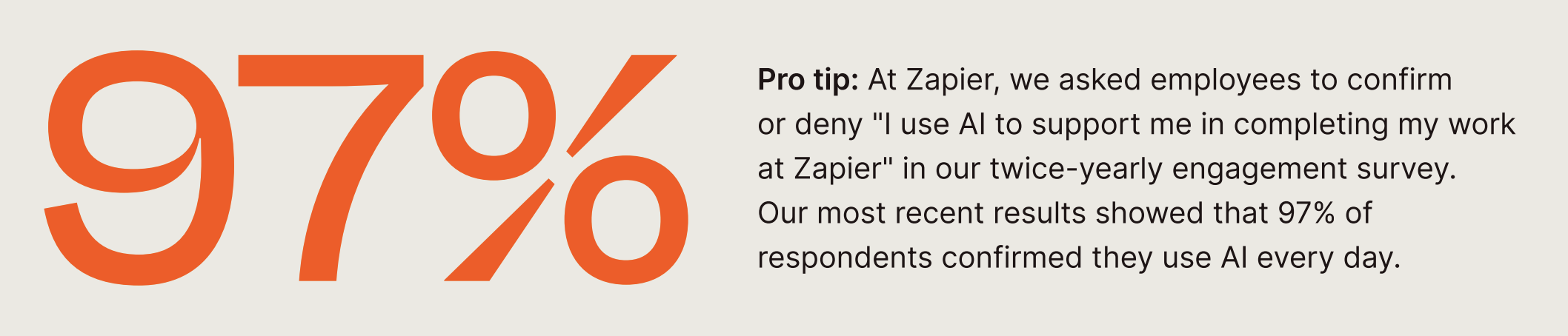

Of course, "high" and "low" are all relative and context-dependent. When Zapier first started tracking active AI adoption rates in late 2023, it came in at 63%. By the end of 2024, that number climbed to 77%. And more recently, it was hovering at 97%. So rather than benchmarking against some arbitrary percentage dictated by the internet, define what "high" means for your company and track your progress over time. The trend line matters more than the absolute number.

How to measure:

Monthly pulse surveys: Incorporate specific questions into your existing team surveys—like "Which AI tools did you use for work this week?" with checkboxes for tools you've made available to your team. At Zapier, we included this question in our employee engagement surveys.

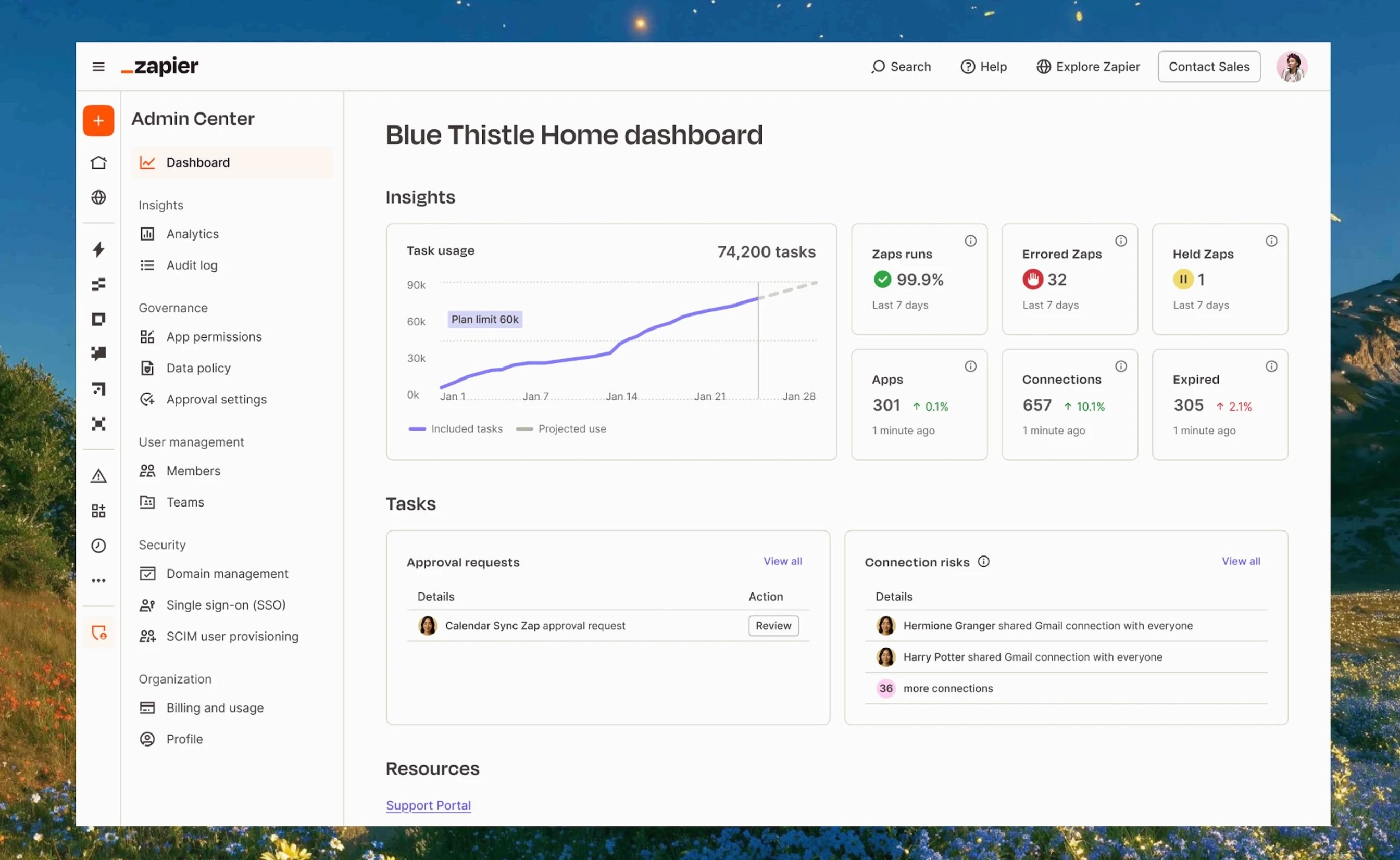

AI analytics dashboards: Most AI tools have admin dashboards that show active users, session frequency, and usage patterns. It's like checking your screen time report, but less depressing (hopefully). This is why enterprise accounts are valuable: they give you another lens on who's actually using AI—not just talking about it in Slack. For example, here's what an admin dashboard for Zapier looks like.
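Whichever source you rely on, the headline number is simple arithmetic: people who reported using at least one AI tool, divided by people who responded. Here's a minimal Python sketch of that calculation, assuming survey results exported as one record per respondent. The `SurveyResponse` shape, the field names, and the sample data are all hypothetical, not a real survey tool's export format.

```python
from dataclasses import dataclass


@dataclass
class SurveyResponse:
    employee_id: str
    tools_used: list[str]  # checkboxes the respondent ticked; empty if none


def active_usage_rate(responses: list[SurveyResponse]) -> float:
    """Percentage of respondents who reported using at least one AI tool."""
    if not responses:
        return 0.0
    active = sum(1 for r in responses if r.tools_used)
    return 100 * active / len(responses)


# Hypothetical sample data, not real survey results.
responses = [
    SurveyResponse("e1", ["ChatGPT"]),
    SurveyResponse("e2", []),
    SurveyResponse("e3", ["ChatGPT", "Zapier"]),
    SurveyResponse("e4", ["Zapier"]),
]

print(f"Active usage: {active_usage_rate(responses):.0f}%")  # Active usage: 75%
```

Keep in mind this measures respondents, not all employees. If only your most enthusiastic teams answer the survey, the rate will read higher than reality, which is one reason to cross-check it against admin dashboard numbers.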

2. Number of AI workflows deployed

I could use ChatGPT every day to mock up images of my dog in different Halloween outfits (all in the name of testing its AI image generation capabilities, of course), and that would technically count as active AI usage. But as much as it pains me to say it, seeing a hound dog dressed as a bowl of spaghetti and meatballs doesn't exactly support the business.

That's why it's important to go beyond just measuring who's logging in to AI tools. A clearer signal of adoption is the number of AI workflows actually deployed. Workflows are where experimentation turns into lasting value—automating lead routing in sales, drafting customer support replies, or streamlining reporting in ops. Tracking these workflows by department also helps you see where AI has become part of everyday operations and where there's still room to grow.

How to measure:

Centralized AI registry: Keep a single source of truth where leaders can log new AI use cases. It makes it easier to track growth over time and spot which departments are leaning in.

Self-reporting mechanisms: Build measurement into the tools teams already use. For example, set up a Slack bot that prompts employees to share which AI workflows they used that week. It's lightweight, quick, and captures activity that might otherwise fly under the radar.
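If your registry lives in a spreadsheet or a simple database, counting deployed workflows by department takes only a few lines. The sketch below assumes each registry entry records a workflow name, a department, and a status. Those field names, the "deployed" and "pilot" status values, and the sample entries are illustrative assumptions, not a prescribed schema.

```python
from collections import Counter

# Hypothetical registry entries, e.g. rows exported from a shared spreadsheet.
registry = [
    {"workflow": "Lead routing", "department": "Sales", "status": "deployed"},
    {"workflow": "Support reply drafts", "department": "Support", "status": "deployed"},
    {"workflow": "Weekly ops report", "department": "Ops", "status": "deployed"},
    {"workflow": "Deal summary emails", "department": "Sales", "status": "pilot"},
]


def deployed_by_department(entries: list[dict]) -> Counter:
    """Count workflows marked as deployed, grouped by department."""
    return Counter(e["department"] for e in entries if e["status"] == "deployed")


counts = deployed_by_department(registry)
for department, total in sorted(counts.items()):
    print(f"{department}: {total}")
```

Filtering on status keeps pilots out of the count, so the number reflects workflows that are actually running rather than ideas still being tested.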

3. Number of AI experiments launched

Experiments show that employees aren't just learning about AI—they're actively testing how it fits into real problems and workflows.

You don't need to log every tiny test, but keeping a pulse on how many experiments are launched each quarter gives you a sense of momentum. If the number is rising, adoption is spreading. If it's flat or declining, it may be time to step in with more training, better tooling, or fresh inspiration. Over time, you can also track how many experiments graduate into full workflows. That transition—from test to scaled process—is a strong marker of sustainable adoption.

How to measure:

Project management tags: Use tags like "AI experiment" in project management tools to easily track and filter AI-related pilots.

Hackathon participation: If you run internal hackathons or innovation weeks, track the number of AI-focused projects submitted. These events are a natural breeding ground for experiments. You can also set up a way to follow up on hackathon experiments: how many of them develop into consistent AI usage?
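Both signals described here, launches per quarter and the share of experiments that graduate into full workflows, can be computed from a simple export of tagged projects. This is a rough sketch that assumes each experiment has a launch date and a flag for whether it became a full workflow. The record layout, the `graduated` field, and the sample experiments are hypothetical.

```python
from collections import Counter
from datetime import date

# Hypothetical export of projects tagged "AI experiment".
experiments = [
    {"name": "Meeting notes summarizer", "launched": date(2025, 1, 20), "graduated": True},
    {"name": "Invoice triage", "launched": date(2025, 3, 3), "graduated": False},
    {"name": "FAQ chatbot", "launched": date(2025, 4, 14), "graduated": False},
    {"name": "Contract clause finder", "launched": date(2025, 6, 9), "graduated": False},
]


def quarter_label(d: date) -> str:
    """Map a date to a calendar-quarter label such as '2025-Q1'."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


def launches_per_quarter(items: list[dict]) -> Counter:
    """Count experiments launched in each calendar quarter."""
    return Counter(quarter_label(e["launched"]) for e in items)


def graduation_rate(items: list[dict]) -> float:
    """Percentage of experiments that became full workflows."""
    if not items:
        return 0.0
    return 100 * sum(1 for e in items if e["graduated"]) / len(items)


print(dict(launches_per_quarter(experiments)))
print(f"Graduation rate: {graduation_rate(experiments):.0f}%")
```

Recent experiments haven't had time to graduate yet, so a low rate for the latest quarter isn't necessarily a warning sign. Comparing quarters that are at least a few months old gives a fairer read.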

4. Rates of completion for AI training

Rolling out AI tools without training is like handing someone a power drill without explaining how and when to change the bits. Sure, they'll figure out how to turn it on. But will they know you have to flip it into reverse to swap out the flathead bit for the hole saw attachment that lets you carve a perfect circle through drywall? Speaking from experience, absolutely not. Instead, they'll keep drilling sad little holes and wonder why it's taking forever.

That's why tracking completion rates for your AI training programs matters. It's a simple way to see whether people are engaging with the resources you've put in place.

How to measure:

Learning management systems (LMS): Most LMS platforms have built-in reporting dashboards. They'll tell you exactly who completed training, how long it took, and where people dropped off. (If you notice everyone bailing halfway through Module 3, that's not on them—that's a signal to rework Module 3.)

Post-training surveys: A quick survey right after training helps you gauge whether employees found it useful. Ask questions like, "Do you feel confident applying what you learned to your work?" It adds color to the raw completion data.
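If your LMS lets you export progress data, you can reproduce the two numbers mentioned above yourself: the overall completion rate and the module where people stop. The sketch below assumes an export that maps each enrolled employee to the last module they completed. The data shape, the four-module course length, and the sample values are assumptions for illustration, not any particular LMS's format.

```python
from collections import Counter

TOTAL_MODULES = 4  # hypothetical course length

# Hypothetical LMS export: employee ID -> last module completed (0 = never started).
progress = {"e1": 4, "e2": 2, "e3": 4, "e4": 2, "e5": 0}


def completion_rate(progress: dict[str, int], total_modules: int) -> float:
    """Percentage of enrolled employees who completed every module."""
    if not progress:
        return 0.0
    finished = sum(1 for last in progress.values() if last >= total_modules)
    return 100 * finished / len(progress)


def drop_off_by_module(progress: dict[str, int], total_modules: int) -> Counter:
    """Count learners by the first module they did not complete."""
    return Counter(last + 1 for last in progress.values() if last < total_modules)


print(f"Completion rate: {completion_rate(progress, TOTAL_MODULES):.0f}%")
for module, learners in sorted(drop_off_by_module(progress, TOTAL_MODULES).items()):
    print(f"Stopped at module {module}: {learners}")
```

In this made-up data, two of the three people who didn't finish stopped at module 3, which is exactly the kind of cluster that suggests the module, not the learners, needs work.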

Make measurement part of the culture

Measuring AI adoption isn't about adding more bureaucracy or creating dashboards no one looks at. It's about understanding whether AI is making its way into the daily rhythm of your business and whether it's driving real value, not just hype.

That starts with giving teams the right tools to experiment and build. If employees are stuck juggling disconnected apps or shadow AI tools, you'll never get a clear read on what's working.

Zapier lets you orchestrate sophisticated, multi-step AI workflows across your tech stack—tying together data, automations, and approvals in a way that scales across departments. That visibility makes it easier to see how AI is being adopted, and where it's having an impact.

Start simple: track usage, workflows, experiments, and training. Then use those insights to double down on what's working and adapt where needed. Over time, these metrics give you a living picture of how AI is embedding into your culture so measurement isn't an afterthought, but part of how you grow.
