If you've ever been in the middle of an intense online game only to have your character freeze mid-action because the server didn't respond quickly enough, you've experienced latency in its purest, most infuriating form.

Latency is that invisible gap between when you do something (like clicking a link) and when you see the result (like a web page loading). And if you've ever rage-quit a video game, abandoned a shopping cart while waiting for the checkout page to load, or dissociated while staring at a spinning wheel, you know why it's not great for business.

This post will break down what latency is beyond "that thing that ruins everything," what causes latency, and most importantly, how you can reduce it.


What is latency?

Latency is the time delay between a user action in a network or application and the system's response. More commonly known as "lag" (a word that fills the hearts of gamers everywhere with pure rage), it measures how long it takes for a data packet to move from a sender (such as your computer) to a receiver (such as a server).

Let's say you're using Zapier to connect Trello and Slack because you're trying to get your life together. You want to receive a Slack DM whenever a new card gets created in Trello. Here's what happens behind the scenes:

Trello detects the new card (processing time).

Trello sends the data to Zapier (network travel time).

Zapier receives and processes the data (server processing time).

Zapier formats the message.

Zapier sends the message to Slack (more network travel).

Slack receives the message and displays it.

The total time from creating the Trello card to seeing the Slack notification represents the end-to-end latency of the workflow. If any one of those steps stalls (network hiccup, API throttling, step backlog), you'll notice a delay before the notification appears in Slack.
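If you want to see where that time goes, the idea is simple: time each step and add them up. Here's a minimal Python sketch of that idea (not how Zapier measures anything internally). The stage names loosely mirror the steps above, and each stage is a hypothetical placeholder that just sleeps; in practice you'd wrap real API calls.

```python
import time
from contextlib import contextmanager


class StageTimer:
    """Records how long each named stage of a pipeline takes, in milliseconds."""

    def __init__(self) -> None:
        self.durations_ms: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Store the elapsed wall-clock time for this stage in milliseconds.
            self.durations_ms[name] = (time.perf_counter() - start) * 1000

    def total_ms(self) -> float:
        # End-to-end latency is the sum of every stage's latency.
        return sum(self.durations_ms.values())

    def slowest(self) -> str:
        return max(self.durations_ms, key=self.durations_ms.get)


if __name__ == "__main__":
    timer = StageTimer()
    # Hypothetical stand-ins for the real steps; each one just sleeps briefly.
    with timer.stage("trello_detects_card"):
        time.sleep(0.01)
    with timer.stage("network_to_zapier"):
        time.sleep(0.03)
    with timer.stage("zapier_processing"):
        time.sleep(0.02)
    with timer.stage("network_to_slack"):
        time.sleep(0.03)
    for name, ms in timer.durations_ms.items():
        print(f"{name:22s} {ms:6.1f} ms")
    print(f"{'end-to-end':22s} {timer.total_ms():6.1f} ms (slowest: {timer.slowest()})")
```

Whichever stage dominates the total is the one worth fixing first.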

How is latency measured?

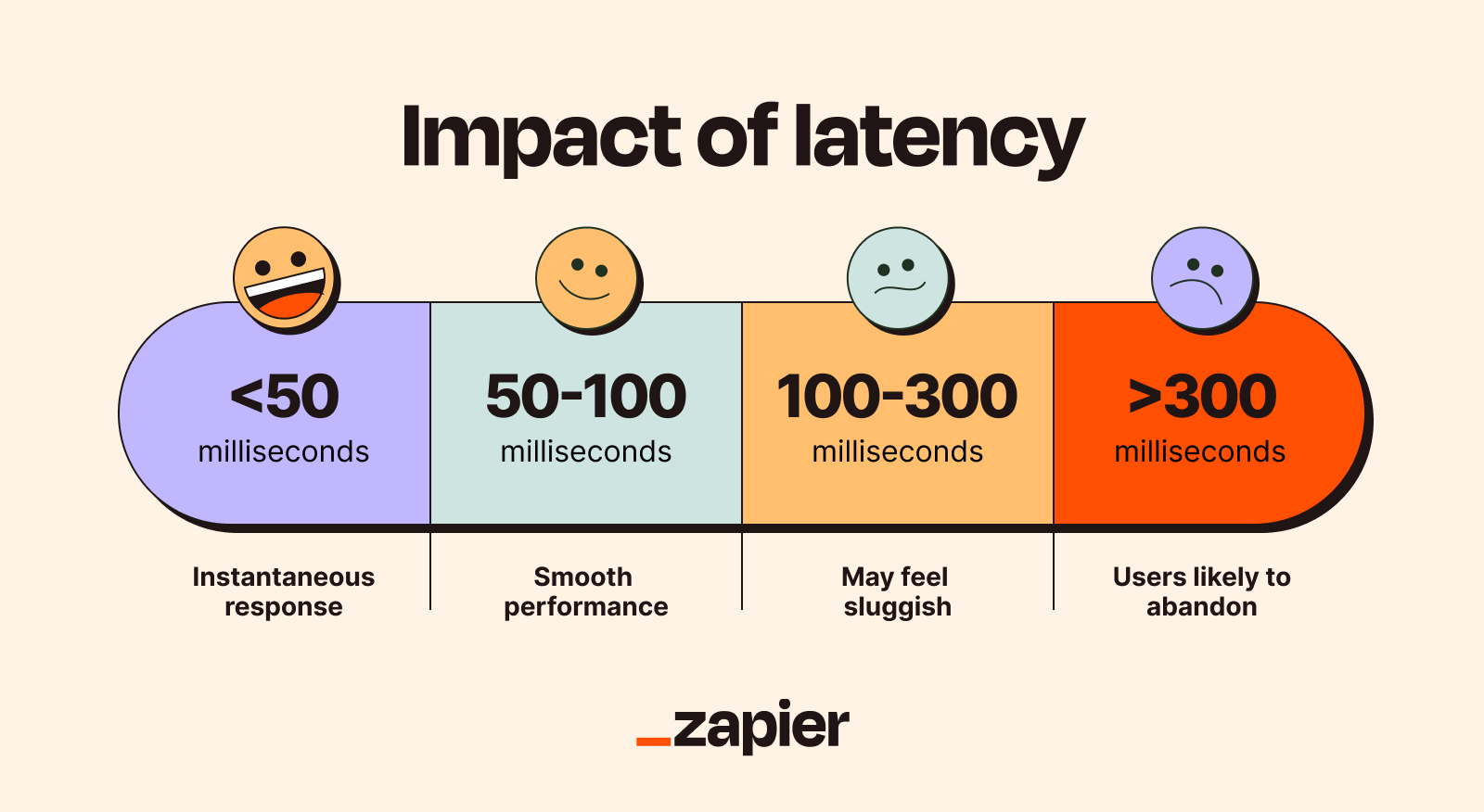

Latency is measured in milliseconds. To put this into perspective, 1,000 milliseconds is one second. So when we talk about 50ms of latency, we're discussing a delay of just 0.05 seconds. But don't let the small numbers fool you. Even tiny delays can make a big difference in performance.

Simply put, low latency is good. It means things are fast and responsive, which you absolutely need for video calls, competitive gaming, or any real-time service. High latency, by contrast, makes things feel sluggish and disconnected, even if you have a fast internet connection. The difference between low and high latency often comes down to just a few dozen milliseconds.

High latency doesn't have some universal magic number where everyone agrees, "Ok, this is officially terrible." But generally speaking, if you're noticing delays, that's your cue that something's not right.

| Use case | Good | Borderline | Poor |
|---|---|---|---|
| Web browsing | <100ms | 100-300ms | >300ms |
| Video calls and gaming | <50ms | 50-100ms | >100ms |
| APIs and automation | <250ms per hop | 250-500ms | >500ms |
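Those ranges translate directly into a lookup. This Python sketch just encodes the table's thresholds; the dictionary keys are made-up labels for the table's rows, and treating the exact boundary values (like 100ms for web browsing) as borderline is one reasonable reading of the ranges, not a standard.

```python
# Thresholds from the latency table, in milliseconds:
# (values below this are "good", values up to and including this are "borderline").
# For APIs and automation, the figures apply per hop.
THRESHOLDS_MS = {
    "web_browsing": (100, 300),
    "video_calls_and_gaming": (50, 100),
    "apis_and_automation": (250, 500),
}


def classify_latency(use_case: str, latency_ms: float) -> str:
    """Return "good", "borderline", or "poor" for a latency measurement."""
    good_below, borderline_up_to = THRESHOLDS_MS[use_case]
    if latency_ms < good_below:
        return "good"
    if latency_ms <= borderline_up_to:
        return "borderline"
    return "poor"


if __name__ == "__main__":
    for ms in (40, 80, 150):
        print(ms, classify_latency("video_calls_and_gaming", ms))
```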

Here are some common ways to measure latency:

Time to first byte (TTFB): This measures how long it takes for the first piece of data to reach you after making a request. TTFB includes DNS lookup time, connection establishment, and server processing time. It's like waiting for the first kernel of popcorn to pop—you know more is coming, but that first one sets the pace for everything else.

Round-trip time (RTT): Round-trip time is the total time it takes for a packet of data to travel from its source to its destination and back again. Because it captures delay in both directions, RTT reflects what a user actually waits through when they send a request and expect a reply.

Ping command: This simple tool measures the RTT to a specific server. It sends a small ICMP echo request (the "ping") and reports how long the echo reply (the "pong") takes to come back, giving you your current latency.
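You can approximate the first two measurements with nothing but Python's standard library. Two assumptions are worth calling out: this sketch estimates RTT by timing a TCP handshake instead of sending ICMP pings (which usually require admin rights), and it approximates TTFB by stopping the clock once the response's status line and headers arrive, over plain HTTP only.

```python
import http.client
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int = 443, samples: int = 3) -> list[float]:
    """Estimate RTT by timing TCP handshakes (SYN out, SYN-ACK back is about one round trip).

    If `host` is a name rather than an IP address, each sample also includes a DNS lookup.
    """
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection returns once the handshake completes.
        with socket.create_connection((host, port), timeout=5):
            results.append((time.perf_counter() - start) * 1000)
    return results


def ttfb_ms(host: str, port: int = 80, path: str = "/") -> float:
    """Approximate TTFB for a plain-HTTP GET request, in milliseconds.

    The timer covers DNS lookup, TCP setup, sending the request, and server processing.
    It stops after the status line and headers are read, slightly after the first byte.
    """
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # connects lazily, so DNS and TCP setup are timed too
        response = conn.getresponse()  # returns once the status line and headers arrive
        elapsed = (time.perf_counter() - start) * 1000
        response.read()                # drain the body so the connection closes cleanly
        return elapsed
    finally:
        conn.close()
```

For example, `tcp_connect_rtt_ms("example.com")` returns three handshake timings to port 443, and `ttfb_ms("example.com")` times a plain HTTP request to port 80. Pass an IP address instead of a hostname if you want to keep DNS lookup time out of the RTT numbers.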

Common causes of latency issues

All sorts of things can cause latency, but here are the most common culprits you'll run into:

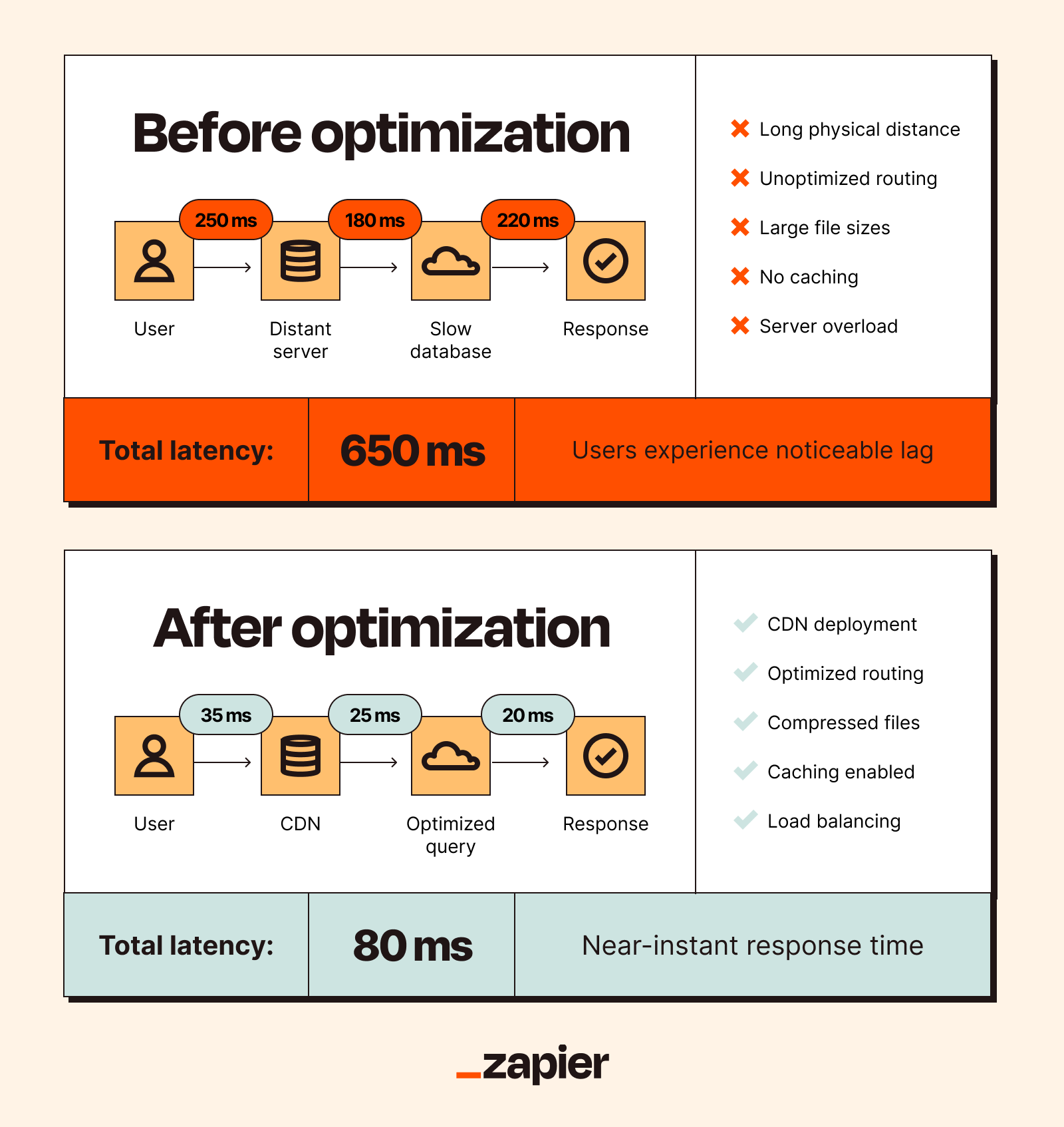

Data travel distance: Data moves fast (roughly two-thirds the speed of light through fiber optic cable), but the longer the physical distance between, say, a user and a server, the longer it takes to arrive. Just ask an Australian about their experience with latency.

Data congestion: When too much data is traveling through one path, it all gets delayed. Heavy traffic, especially at peak times of day, can cause congestion.

Data packet size: Data packets are like suitcases. Just like how dragging a giant suitcase up three flights of stairs is way harder than carrying a small overnight bag, bigger data packets take longer to process and transmit.

Hardware performance: Older or slower devices increase latency. This should be fairly intuitive, since you wouldn't expect your grandma's dusty Gateway to be as fast as a cutting-edge gaming rig.

Transmission medium: Data can travel over different physical media, and some are simply faster than others. Fiber optic cables, for example, offer faster speeds than copper wires or wireless networks.

DNS lookup time: Before your device can connect to a website or app, it has to translate the domain name (like zapier.com) into an IP address. That translation (called a DNS lookup) takes time. If DNS lookup is slow, you're delayed before the data transfer even starts.

Server processing: The server itself takes time to process your request. It has to look up data, run calculations, check permissions, and format a response. Just as a server at a restaurant might get overwhelmed during a lunch rush, a computer server can slow down during periods of high traffic.

Protocol overhead: A protocol is the set of rules machines use to talk to each other (like HTTP for websites). Some protocols require extra steps and formalities that add processing time, like the TCP and TLS handshakes that happen before a secure connection can send any data. That's great for reliability but tragic for speed.

Routing inefficiency: Data traveling through the internet can take different paths to reach its destination. Sometimes your data takes the scenic route through multiple servers and networks instead of the direct path, which adds to the lag.
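Several of these causes boil down to simple arithmetic. The sketch below estimates the floor set by distance, the time a payload of a given size takes to send, and the round trips handshakes add before any data moves. It assumes signals travel through fiber at about 200,000 km per second and ignores routing detours, queuing, and server processing, so real-world numbers will be higher.

```python
# Assumption: signals in optical fiber travel at roughly 200,000 km/s,
# about two-thirds the speed of light in a vacuum.
FIBER_SPEED_KM_PER_S = 200_000


def propagation_delay_ms(distance_km: float) -> float:
    """One-way travel time over fiber, ignoring detours, queuing, and processing."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000


def transmission_delay_ms(size_bytes: int, bandwidth_mbps: float) -> float:
    """Time needed to push every bit of a payload onto a link of the given bandwidth."""
    bits = size_bytes * 8
    return bits / (bandwidth_mbps * 1_000_000) * 1000


def setup_round_trips(tls_version: str = "1.3") -> int:
    """Round trips spent on handshakes before a new HTTPS connection can send a request."""
    tcp_handshake = 1
    # A full TLS 1.3 handshake takes one round trip; a full TLS 1.2 handshake takes two.
    tls_handshake = 1 if tls_version == "1.3" else 2
    return tcp_handshake + tls_handshake


if __name__ == "__main__":
    # Roughly the great-circle distance between New York and London; real cables run longer.
    one_way = propagation_delay_ms(5_570)
    rtt = 2 * one_way
    print(f"New York -> London, one way: {one_way:.1f} ms (round trip {rtt:.1f} ms)")
    # A 4 MB image over a hypothetical 20 Mbps link.
    print(f"4 MB over 20 Mbps: {transmission_delay_ms(4_000_000, 20):.0f} ms")
    print(f"Handshake overhead at that RTT (TLS 1.3): {setup_round_trips('1.3') * rtt:.0f} ms")
```

Even in this idealized case, distance alone costs about 28 ms each way between New York and London, and a new secure connection spends two round trips on handshakes before the first request goes out.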

How to reduce latency

Resolving latency issues involves optimizing both infrastructure and operational practices. Let's break down what that means.

Use content delivery networks

Content delivery networks (CDNs) are one of the most effective ways to reduce latency. A CDN is a network of servers distributed around the world. Instead of hosting your content in one place, a CDN caches your content on those distributed servers, which means users can access data from a server that's geographically closer to them.

Without a CDN, visitors to your website get data from your server (say, in New York), so a customer in London has to wait for data to travel from New York to England. With a CDN, that same user gets your content from a nearby CDN server in London. The data has a much shorter trip, which means lower latency and a faster, happier user.
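The core idea is "serve each user from the closest copy." Here's a minimal sketch that picks an edge server by great-circle distance. The server names and coordinates are hypothetical, and real CDNs usually steer traffic with DNS or anycast routing and measured latency rather than pure geography, so treat this as an illustration of the principle only.

```python
import math

# Hypothetical edge locations as (latitude, longitude), for illustration only.
EDGE_SERVERS = {
    "new-york": (40.7128, -74.0060),
    "london": (51.5074, -0.1278),
    "frankfurt": (50.1109, 8.6821),
    "singapore": (1.3521, 103.8198),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometers."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_edge(user: tuple[float, float]) -> str:
    """Return the name of the edge server closest to the user's location."""
    return min(EDGE_SERVERS, key=lambda name: haversine_km(user, EDGE_SERVERS[name]))


if __name__ == "__main__":
    london_user = (51.5, -0.12)
    print(nearest_edge(london_user))
```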

Implement operational practices

Server overload is a perpetual headache for network engineers. Their go-to fix is load balancing, which is deliberately spreading work across multiple servers and resources. This keeps any single server from getting overloaded.
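One common load-balancing strategy is "least connections": send each new request to whichever server is currently handling the fewest. Here's a minimal Python sketch with hypothetical server names; it leaves out the health checks and thread safety a real load balancer needs. (Round-robin, which simply rotates through servers, is an even simpler alternative.)

```python
class LeastConnectionsBalancer:
    """Routes each request to the server with the fewest active requests."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("need at least one server")
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() breaks ties by insertion order, so equally loaded servers are used in turn.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call this when a request finishes so the server's count goes back down.
        self.active[server] -= 1


if __name__ == "__main__":
    lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
    first_three = [lb.acquire() for _ in range(3)]
    lb.release("app-2")  # app-2 finished its request, so it's the least busy now
    print(first_three, lb.acquire())
```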

Request batching helps, too. Instead of sending many small requests one at a time, you can combine them into fewer, larger requests. This reduces the number of trips data has to make, and fewer trips mean lower latency.
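Batching mostly comes down to grouping records before you send them. This sketch assumes the API you're calling accepts a list of records in a single request; `send` is a hypothetical stand-in for that call. (Python 3.12 and later also ship `itertools.batched`, which yields tuples.)

```python
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Group items into lists of at most `size` elements."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: list[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # send whatever is left over as a final, smaller batch
        yield batch


def send_batches(records: Iterable[T], size: int, send: Callable[[list[T]], None]) -> int:
    """Call `send` once per batch instead of once per record; return the number of calls."""
    calls = 0
    for batch in batched(records, size):
        send(batch)
        calls += 1
    return calls


if __name__ == "__main__":
    calls = send_batches(range(250), 100, lambda batch: print(f"sending {len(batch)} records"))
    print(f"{calls} requests instead of 250")
```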

Other operational best practices to reduce latency include:

Database optimization: Efficient structure, indexing, and data organization prevent slow queries.

Network monitoring: Track latency trends to identify and fix issues.

Congestion minimization: Control data flow, space out requests, and schedule large transfers for off-peak hours.
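For network monitoring, percentiles tell you more than averages, because a handful of very slow requests can hide behind a healthy-looking mean. This sketch computes nearest-rank p50, p95, and p99 from a list of latency samples and flags when p95 crosses a threshold; the threshold is whatever your team decides, not a standard value.

```python
import math


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def latency_report(samples_ms: list[float], alert_p95_ms: float) -> dict:
    """Summarize latency samples and flag when the 95th percentile is too slow."""
    report: dict = {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}
    report["alert"] = report["p95"] > alert_p95_ms
    return report
```

For example, running `latency_report(samples, alert_p95_ms=300)` over a day's worth of request timings and charting the results over time is a simple way to spot latency creeping up before users start complaining.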

Reduce file sizes

Smaller files travel faster than larger files. It's that simple.

Image compression is particularly important. Most images can be compressed significantly without noticeable quality loss. I promise your users won't miss the 4 MB hero image of a stapler.

Code minification removes unnecessary characters, spaces, and comments from your code, which won't make your website look any different but will make it load faster.
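To make minification concrete, here's a deliberately naive CSS minifier. It strips comments and whitespace with regular expressions, which works for simple stylesheets but can mangle strings and unusual syntax; real build tools parse the CSS properly, so use this only to see the idea.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: removes comments and whitespace browsers don't need."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)           # trim spaces around braces, ; and ,
    css = re.sub(r":\s+", ":", css)                       # "color: red" -> "color:red"
    # The space before ":" is left alone because "a :hover" and "a:hover" mean different things.
    css = css.replace(";}", "}")                          # last semicolon in a block is optional
    return css.strip()


if __name__ == "__main__":
    source = """
    /* Page styles */
    body {
      color: red;
      margin: 0 auto;
    }
    """
    print(minify_css(source))
```

The output renders exactly the same in a browser; it's just fewer bytes to send.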

Minimize workflow steps

Not all latency lives in your servers. A lot of it is process latency. This could be the time lost when work sits in someone's inbox, waits for a manual export, or bounces between tools and teams before the next step even starts. Reducing those handoffs won't make an API respond faster, but it will shrink the end-to-end time from "something happened" to "someone acted on it."

Start by pinpointing areas where work gets passed between tools or people—like when Marketing exports leads from a form tool, uploads them to a CRM, and then messages Sales to follow up. Each handoff adds waiting, context switching, and opportunities for things to stall.

Zapier removes those gaps by automating the transitions so one step triggers the next immediately.

For instance, you could set up a workflow where every new lead from a Meta Lead Ad is automatically added to HubSpot, enriched with company data, and then sent to the right rep in your sales collaboration software. What used to be three separate handoffs now happens automatically, shaving minutes (or hours) of human-induced delay out of every response.

It admittedly takes some effort to find and remove those extra links in the chain, but if your latency problem is really a waiting-on-humans-and-handoffs problem, this is one of the highest-leverage fixes.
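Finding process latency starts with timestamps. If you can log when each step of a workflow happened, this sketch reports how long work sat between steps and which handoff was slowest. The step names and timestamps in the example are hypothetical.

```python
from datetime import datetime


def handoff_gaps(events: list[tuple[str, datetime]]) -> list[tuple[str, float]]:
    """Minutes that elapsed between each step and the step before it."""
    ordered = sorted(events, key=lambda event: event[1])
    return [
        (f"{prev_name} -> {name}", (ts - prev_ts).total_seconds() / 60)
        for (prev_name, prev_ts), (name, ts) in zip(ordered, ordered[1:])
    ]


def end_to_end_minutes(events: list[tuple[str, datetime]]) -> float:
    """Total time from the first step to the last one."""
    times = [ts for _, ts in events]
    return (max(times) - min(times)).total_seconds() / 60


if __name__ == "__main__":
    # Hypothetical timestamps for a manual lead-handling workflow.
    events = [
        ("lead_submitted", datetime(2024, 1, 8, 9, 0)),
        ("exported_from_form_tool", datetime(2024, 1, 8, 11, 30)),
        ("imported_to_crm", datetime(2024, 1, 8, 11, 45)),
        ("sales_notified", datetime(2024, 1, 8, 14, 0)),
    ]
    gaps = handoff_gaps(events)
    for step, minutes in gaps:
        print(f"{step:50s} {minutes:6.0f} min")
    slowest_step, slowest_minutes = max(gaps, key=lambda gap: gap[1])
    print(f"Slowest handoff: {slowest_step} ({slowest_minutes:.0f} min)")
    print(f"End to end: {end_to_end_minutes(events):.0f} min")
```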

Optimize network infrastructure

Your cables matter. Upgrading to high-speed wired connections, especially fiber optics, is one of the best ways to slash lag.

While you're at it, check the other basics:

Get a better ISP: A reliable internet service provider is non-negotiable.

Use modern routers: An old router can bottleneck even the fastest internet.

Prioritize your traffic: Enable your router's quality of service (QoS) settings. This lets you create a "fast lane" to make sure your video calls don't freeze just because someone else is downloading a huge file.

Spread out the work: Techniques like load balancing and edge computing distribute workloads to prevent any one server from getting slammed.
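At its core, QoS is a scheduling decision: when several packets are waiting, send the urgent ones first. Here's a toy strict-priority scheduler in Python to illustrate the idea. The traffic classes and priority numbers are made up, and your router's firmware implements QoS its own way.

```python
import heapq
import itertools

# Hypothetical traffic classes; a lower number means higher priority.
PRIORITY = {"video_call": 0, "web": 1, "bulk_download": 2}


class PriorityScheduler:
    """Strict-priority queue: always sends the most urgent packet first, FIFO within a class."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str, bytes]] = []
        self._arrival = itertools.count()  # tie-breaker that preserves arrival order

    def enqueue(self, traffic_class: str, packet: bytes) -> None:
        entry = (PRIORITY[traffic_class], next(self._arrival), traffic_class, packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self) -> tuple[str, bytes]:
        _, _, traffic_class, packet = heapq.heappop(self._heap)
        return traffic_class, packet

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    scheduler = PriorityScheduler()
    scheduler.enqueue("bulk_download", b"chunk-1")
    scheduler.enqueue("video_call", b"frame-1")
    scheduler.enqueue("web", b"page")
    scheduler.enqueue("video_call", b"frame-2")
    while scheduler:
        print(scheduler.dequeue())
```

Strict priority can starve low-priority traffic when the network is busy, which is why real equipment often uses weighted schemes that guarantee every class some share of the bandwidth.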

Build low-latency connections with Zapier

If you're sweating milliseconds for your end users, you should also be ruthless about the minutes (and hours) hiding inside your own workflows. A fast product still feels slow when alerts sit unassigned, approvals stall, or teams have to manually move data between systems before anything happens.

Zapier helps reduce process latency by orchestrating workflows across apps so steps run automatically, right when an event happens. That means fewer manual handoffs, fewer delays, and faster end-to-end response times, even when your underlying systems are already as optimized as they’re going to get.

Try Zapier for free, and orchestrate workflows that fire in seconds.
