AI governance is what lets you operate (and develop) AI ethically, securely, and responsibly. It's the rulebook your company follows that includes policies and best practices for everything from choosing software and training employees to complying with regulations and auditing data.

I have a friend whose company almost launched a promotional campaign written entirely by AI. The bot claimed their project management system offered deadline forecasting (not a real feature) and that subscriptions were 40% off for the first month (they weren't). They caught it at the last minute, but it was that close.

And this is just one of the tamer risks you take without AI governance. An employee can download any shiny new model they see online and run with it. It's like the Wild West, but for autonomous robots. Plus, improper AI use can break things: your reputation, your budget, or even the law.

So that's what this guide on AI governance is for. Learn the risks of AI, how to innovate while controlling your tech stack, and how Zapier can help you deliver enterprise-grade compliance for AI tools.


What is AI governance?

AI governance is what lets you operate (and develop) AI ethically, securely, and responsibly. It's the rulebook your company follows that includes policies and best practices for:

Choosing reputable AI vendors and tools

Training on how employees should use AI responsibly

Gating access to specific AI systems via access controls

Complying with regulations and privacy laws for AI

Roles and responsibilities (and accountability) for AI use

Auditing AI models and data

Documenting how AI decisions are made

Think about traditional governance: HR policies, financial controls, codes of ethics, and operations or project management frameworks, to name a few. AI governance is an extension of that. Because AI comes with unique risks and opportunities you can't ignore, you need a framework designed specifically to manage them.

Why is AI governance important?

The usual motivation behind AI governance is risk management. Nobody wants to watch their brand's reputation tumble or pay legal penalties because the company's customer service chatbot started giving discount codes in exchange for Social Security numbers.

But the benefits go way beyond just covering your bases. Here's how a strong AI governance framework can help you:

Avoid costly, high-profile mistakes: One biased algorithm, data leak, or bad prompt can lead to a PR nightmare. Governance acts as a quality control checkpoint, testing outputs for accuracy and ethical issues before they reach the public.

Reduce data security and privacy risks: Every time employees feed data into an AI tool, they assume it's protected by solid security and privacy features. But without established accountability or auditing practices, you can't know for sure. Governance sets strict protocols for input data: rules for which tools you can and can't use, and which controls those tools need in place.

Build trust with customers and stakeholders: Customers are more likely to engage with brands that enforce sound policies for AI use and set strict security standards. A governance framework is what sets these standards and proves you're handling their information with care.

Improve AI fluency with compliance training: Formal governance rules and AI training create a culture of responsible use. They help everyone understand their role in using AI safely, so responsible AI use becomes more than a corporate formality.

Maintain ethical standards: Governance bakes company values into your technology. It helps you do right by your customers, employees, and industry by preventing harmful outcomes from biased decisions, privacy violations, and data leaks.

Don't think of responsible AI governance as a roadblock. It's more of a guardrail for innovation, letting you go full steam ahead on AI adoption safely, not recklessly.

Key objectives of AI governance

An AI governance program should define a clear destination, a point where you can say, "We're using AI securely and responsibly." So, while planning the governance journey, keep these target outcomes top of mind:

AI ethical standards and trustworthiness: Do your AI systems earn user confidence by respecting fundamental rights, dignity, and ethical principles? Are you auditing for bias, being transparent on data usage, and bringing in human insight where necessary?

Algorithm transparency and explainability: Can you demystify the "black box" and clearly articulate how your AI makes decisions or produces outputs? When a customer asks "why?" do you have a real answer, or just a guess?

Product accountability and ownership: Is it crystal clear who in your organization is on the hook when an AI system succeeds (or fails)? Have you assigned responsibility for outcomes, or is it disappearing into the gaps between teams?

Safety, reliability, and risk mitigation: Are you proactively building guardrails to ensure your brand and customers don't experience bias or hallucinations? Have your AI tools been tested for these errors and other potential misuse?

AI (input) data privacy and security: Do you have controls around the sensitive data fueling your AI systems? Can you assure the world it's not being leaked, misused, or becoming a liability that walks out the door with an employee?

Regulatory compliance and future-proofing governance: Is your governance framework agile enough to adapt to new laws, standards, and technology? Are you just reacting to headlines, or building a system that supports proactive compliance?

If you can answer yes to these questions, your governance program is on solid footing.

6 AI governance examples

It's one thing to talk about principles, but it's another to see them in action. Here are six real-world AI governance models shaping how businesses deploy and use the technology today.

1. OECD AI Principles

Adopt if: Your business operates in any of the 38 OECD member countries.

The Organisation for Economic Co-operation and Development (OECD) brings together 38 countries to solve emerging challenges. And yes, one of those challenges is responsible AI use.

Members of the OECD have agreed on five main principles that set the baseline for "good" AI:

Inclusive growth and well-being: AI should benefit people and the planet, driving fair development and improving quality of life for everyone.

Human-centered values and fairness: AI must respect the rule of law, human rights, and democratic values. It should include safeguards to ensure a fair and just society (i.e., no shady algorithms allowed).

Transparency and explainability: There should be clear disclosure that lets people understand the reasoning and logic behind an AI system's outputs (no more mysterious black boxes).

Robustness, security, and safety: AI systems must be secure, reliable, and built to fail safely. They shouldn't be easy to manipulate or compromise, as that can lead to harm.

Accountability: Organizations and individuals developing, deploying, or operating AI systems should be held accountable for their proper functioning. Someone always has to be responsible for the outcomes.

2. EU AI Act

Adopt if: Your business operates in the EU or does business with it.

The EU AI Act is widely described as the world's first comprehensive AI law. It sorts AI systems by risk, from "unacceptable" (outright banned, like government-run social scoring) to "high-risk" (subject to strict requirements when used in areas like hiring, education, and essential services).

Internal governance policies might suggest auditing for bias; for high-risk systems, the AI Act requires it. Similarly, a company's values might promote transparency, but the Act mandates that people know when they're interacting with an AI.

For any business operating in or with the EU, this legislation isn't just another compliance checklist; it's the definitive framework your entire AI governance strategy must be built upon.

3. ISO/IEC 42001

Adopt if: You want a certified, internationally recognized framework for constructing an AI governance program.

Who doesn't love a good ISO standard? With ISO/IEC 42001, you can have an accredited third-party auditor certify that you've established and maintain an AI management system that meets the standard's requirements.

Like other ISO management system standards, ISO/IEC 42001 offers a practical way to bring AI governance into your business. It's basically a "how-to" guide for building a program from the ground up, providing a step-by-step framework for managing the AI lifecycle, from policies to continuous program improvement.

4. NIST AI Risk Management Framework (RMF)

Adopt if: You need a basic, flexible foundation to build governance from scratch.

Created by the U.S. National Institute of Standards and Technology (NIST), the AI RMF provides voluntary guidelines for using AI and managing its risks. Because it's guidance rather than a mandate, it's not as strict as the EU AI Act (a law) or ISO/IEC 42001 (a certifiable standard).

The AI RMF isn't about hard rules, so it works particularly well for businesses that need a stress-free baseline for AI governance. It's basically a playbook built around four core functions: map your AI context and risks, measure how your systems perform and where they might fail, manage those risks with clear policies and controls, and govern your entire AI lifecycle to ensure continuous oversight.

5. AI ethics boards

Adopt if: Your AI programs carry significant brand or legal risk and require broad oversight.

Ethics boards offer a more collaborative, human-centered approach to AI governance. These cross-functional committees bring together experts to review proposed AI projects before deployment to make sure they align with company values, ethical principles, and regulatory policies.

Many ethics boards include a mix of lawyers, engineers, compliance managers, data scientists, and product leads. Together, they evaluate AI projects for technical feasibility, potential regulatory or ethical red flags, public trust and marketing implications, and more. Then they decide whether a project is a go or a no-go and, if it's a go, how it should be managed.

6. General Data Protection Regulation (GDPR)

Adopt if: Your business operates in the EU or handles the personal data of people in the EU.

GDPR isn't new, and it wasn't explicitly designed for AI, but it's still a critical piece of the governance puzzle. Its core principles—data minimization, purpose limitation, and data security—directly shape how organizations collect and use the data that fuels AI systems.

When you look closely at GDPR's requirements for lawfulness, fairness, and transparency, you'll find they directly tackle two major AI challenges: preventing algorithmic bias and informing individuals when automated systems are making decisions about them.

How to implement AI governance in your business: 6 steps

If you're tired of reading about AI governance and ready to start doing it, here are some practical steps you can take to bring the above frameworks to your organization.

1. Define your foundation

You can't manage what you haven't defined. So, create a set of core principles for AI and how you want it used in your business. Don't be afraid to steal some (or all) principles from existing governance frameworks.

During this time, define your non-negotiables, like zero tolerance for biased outcomes or mandatory human review for consumer-facing decisions. Double- and triple-check whether you're subject to any regulations (e.g., the EU AI Act or GDPR). Once you know the rules, you can start sketching a playbook for how to actually live by them.

This is your foundation, so document it. It'll ultimately be your North Star for every governance decision that follows.

2. Choose reputable AI vendors

Most of us aren't building complex machine learning models and algorithms from scratch; we're using products from AI vendors. Who you partner with matters, so ask yourself: Does the provider follow the same guiding AI principles as your business? Are they transparent about their data handling and model training processes? Do they have policies for navigating ethical issues?

Choosing a vendor with solid governance in place can save you a ton of risk downstream.

3. Establish roles and responsibilities

If AI governance is everyone's problem, it becomes no one's. Assign clear ownership and make it someone's job to own the framework, track compliance, and escalate issues.

Some businesses, like Zapier, have even hired a Chief AI Officer (CAIO) or AI Transformation Leader who oversees all AI-related programs and projects. Others use a dedicated compliance or ethics committee. You could also add it as a responsibility for your CTO, CDO, risk manager, or compliance officer.

The point is that someone has to be accountable for AI governance oversight. Otherwise, if things do go sideways, you'll have a conference room full of people pointing at each other like the Spider-Man meme.

4. Train your staff

Your governance framework will be useless right out of the gate if your teams don't know it exists. It's critical to train every employee on everything they need to know for compliance, including:

Policies for how to identify and avoid inputting sensitive company or customer data into public AI tools

How to recognize prompts or use cases that could generate biased, unethical, or illegal outputs

The approved process for selecting and vetting new AI vendors and tools

Understanding the specific AI risks relevant to their department (like hiring bias for HR)

Knowing when a decision requires human oversight as opposed to leaning on automation or AI agents

How to properly document and disclose AI use in projects and communications

Reporting guidelines for potential AI incidents or security flaws

And this training isn't just for product engineers. Anyone with access to AI tools is now a stakeholder in need of guidance and training.

5. Gate your work

Bad data leads to risky AI outcomes. And you don't want just anyone being able to alter records or manipulate algorithms. So, use technical controls to enforce your policies.

Role-based access controls, for example, ensure only authorized personnel (like the AI lead or compliance officer) can manage sensitive AI systems. Also, implement a strict approval workflow for launching new AI models into production as a quality and safety checkpoint.

And don't forget to create detailed audit logs to help track how your AI is being used, when, where, and by whom.
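Here's a minimal Python sketch of how these three controls can fit together, assuming a hypothetical set of roles (`ai_lead`, `compliance_officer`, `analyst`) and an in-memory log. It checks permissions before an action, requires sign-off from both an AI lead and a compliance officer before a model ships, and records every attempt in an audit log. The role names, permissions, and functions are illustrative assumptions, not any specific product's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; define your own in your governance policy.
ROLE_PERMISSIONS = {
    "ai_lead": {"view_model", "edit_model", "approve_deploy"},
    "compliance_officer": {"view_model", "approve_deploy"},
    "analyst": {"view_model"},
}

# Roles that must both sign off before a model reaches production (assumed policy).
REQUIRED_APPROVER_ROLES = {"ai_lead", "compliance_officer"}


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user: str, action: str, resource: str, allowed: bool) -> None:
        # Record every attempt, allowed or denied, with a UTC timestamp.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "allowed": allowed,
        })


def authorize(user: str, role: str, action: str, resource: str, log: AuditLog) -> bool:
    """Role-based access check. In practice, `role` would come from your identity provider."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, action, resource, allowed)
    return allowed


def deploy_model(model: str, approvers: list[tuple[str, str]], log: AuditLog) -> bool:
    """Approval gate: deploy only if every required role has an authorized sign-off."""
    approved_roles = {
        role for user, role in approvers
        if authorize(user, role, "approve_deploy", model, log)
    }
    ok = REQUIRED_APPROVER_ROLES <= approved_roles
    log.record("deploy-pipeline", "deploy", model, ok)
    return ok


log = AuditLog()
print(authorize("dana", "analyst", "edit_model", "support-bot", log))  # False
print(deploy_model("support-bot-v2", [("sam", "ai_lead"), ("priya", "compliance_officer")], log))  # True
```

Logging denied attempts alongside approved ones is deliberate: failed access attempts are often the most useful entries when you investigate an incident. In production, the log would live in append-only storage rather than a Python list.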

6. Monitor your operations

Governance is a never-ending cycle. Monitor your AI systems for performance, drift, and unintended consequences. If something is off, you might have to rethink your governance framework and revise policies from the ground up.

You may also want to establish a feedback loop so employees and customers can report issues or red flags. Don't stress if your governance model isn't perfect from the start, since it's a living system that should be improved over time.
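One common way to watch for drift is to compare the distribution of a model's outputs over time. The Python sketch below computes the population stability index (PSI) between a baseline window and a current window of categorical outputs, such as the ticket categories an AI assigns. The category labels and the 0.2 alert threshold are assumptions for illustration. The threshold is a common rule of thumb, not a standard, so tune it for your own system.

```python
import math
from collections import Counter

DRIFT_THRESHOLD = 0.2  # assumed alert threshold; a rule of thumb, not a standard


def distribution(labels: list[str], categories: set[str], eps: float = 1e-6) -> dict[str, float]:
    """Share of each category in a window; eps avoids log(0) for unseen categories."""
    if not labels:
        raise ValueError("need at least one label per window")
    counts = Counter(labels)
    return {c: max(counts[c] / len(labels), eps) for c in categories}


def psi(baseline: list[str], current: list[str]) -> float:
    """Population stability index: sum of (actual - expected) * ln(actual / expected)."""
    categories = set(baseline) | set(current)
    expected = distribution(baseline, categories)
    actual = distribution(current, categories)
    return sum((actual[c] - expected[c]) * math.log(actual[c] / expected[c]) for c in categories)


def check_drift(baseline: list[str], current: list[str]) -> tuple[float, bool]:
    """Return the PSI score and whether it crosses the alert threshold."""
    score = psi(baseline, current)
    return score, score > DRIFT_THRESHOLD


# Hypothetical ticket categories assigned by an AI model last month vs. this week.
baseline = ["billing"] * 50 + ["bug"] * 30 + ["other"] * 20
current = ["billing"] * 20 + ["bug"] * 30 + ["other"] * 50
score, drifted = check_drift(baseline, current)
print(f"PSI={score:.3f}, drifted={drifted}")  # PSI=0.550, drifted=True
```

A flagged score doesn't explain why outputs shifted (a product launch can legitimately change the ticket mix), so route alerts to a person who can investigate rather than triggering automatic changes.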

How are AI governance and AI ethics different?

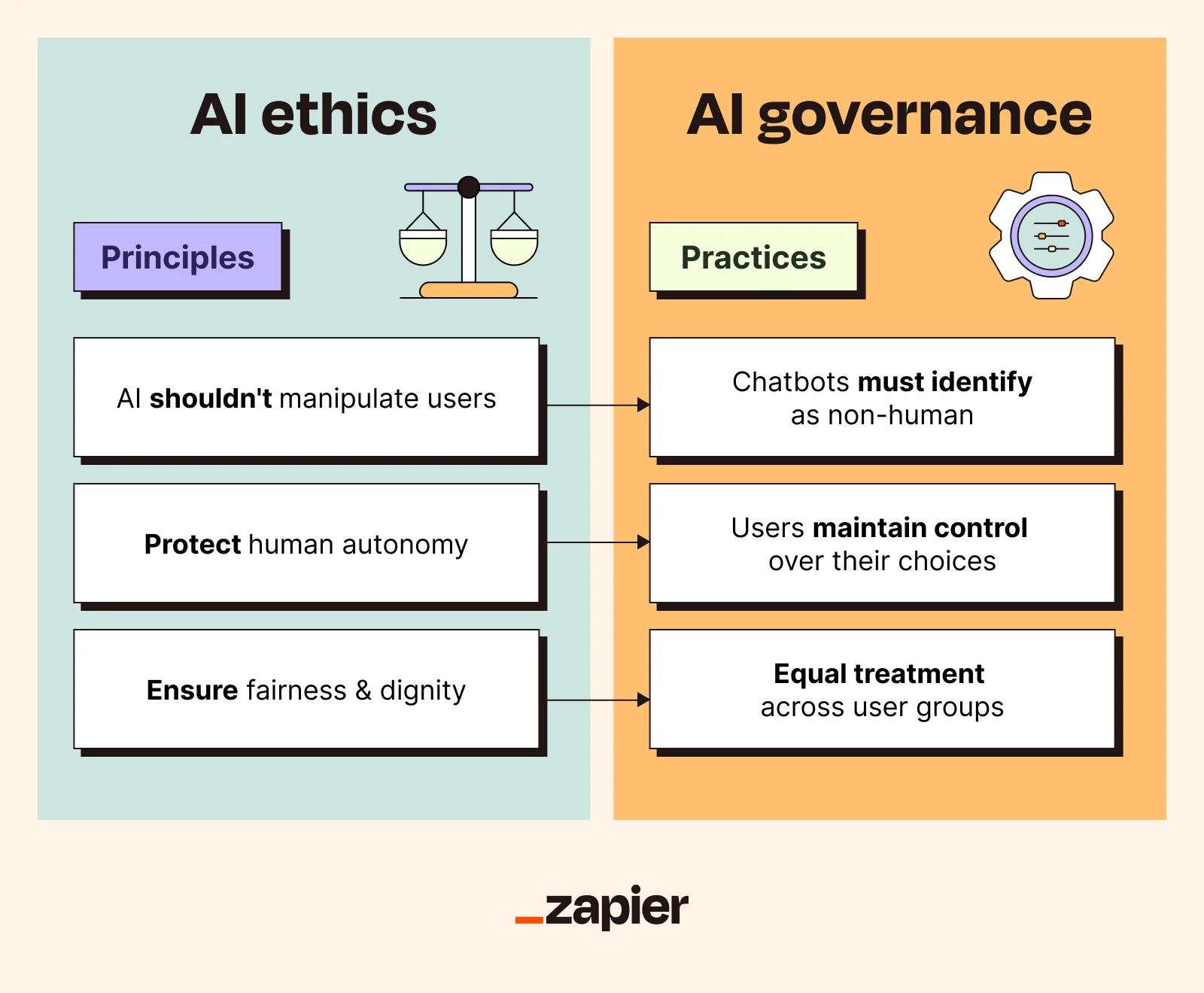

The terms "AI governance" and "AI ethics" are often used in the same conversations, but they don't mean the same thing. Here's the best way to distinguish them:

Ethics are principles. They define what's right and what's wrong. In the context of AI, for instance, your company might believe that AI shouldn't replace human intuition, or that people should have autonomy and not be manipulated by AI systems. These are the kinds of principles that dictate AI governance.

Governance defines how AI is managed in practice. To support the ethical principles above, you might implement a policy that any customer-facing AI chatbot must clearly introduce itself as non-human and add warnings to its outputs, like "AI merely makes suggestions" and "consult an expert before making any final decision."
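A policy like this works best when it lives in code around the model rather than in the model's prompt, so the chatbot can't forget or be talked out of it. Here's a minimal Python sketch of that idea. The disclosure wording, the `govern_reply` function, and the first-turn flag are hypothetical; a real deployment would hook this into whatever chatbot platform you use.

```python
DISCLOSURE = "Hi! I'm an AI assistant, not a human."  # hypothetical wording
WARNINGS = (
    "AI merely makes suggestions.",
    "Consult an expert before making any final decision.",
)


def govern_reply(model_reply: str, is_first_turn: bool) -> str:
    """Wrap a raw model reply with the disclosure and warnings the policy requires."""
    parts = []
    if is_first_turn:
        # Introduce the bot as non-human at the start of every conversation.
        parts.append(DISCLOSURE)
    parts.append(model_reply.strip())
    # Attach the warnings to every output, not just the first one.
    parts.append("Note: " + " ".join(WARNINGS))
    return "\n\n".join(parts)


print(govern_reply("Your plan renews on the 1st of each month.", is_first_turn=True))
```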

In short, ethical principles inform governance structures.

To give one more example, think of an AI that automatically triages customer support tickets. An ethical guideline might be to always escalate "urgent, safety-related issues" to (human) staff.

The corresponding governance protocol would be a hard-coded rule in the workflow that overrides the AI's general ranking to always bump tickets containing keywords like "safety hazard" or "outage" to the front of the queue.
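Here's a minimal Python sketch of that protocol, assuming each ticket already carries a hypothetical `ai_priority` score from the AI model (higher means more urgent). The keyword rule runs after the model, so it always wins: matching tickets jump to the front of the queue and are flagged for a human, regardless of the AI's ranking.

```python
from dataclasses import dataclass

# Keywords from the governance rule; the list itself should be owned and reviewed.
URGENT_KEYWORDS = ("safety hazard", "outage")


@dataclass
class Ticket:
    ticket_id: int
    text: str
    ai_priority: float  # hypothetical score from the AI triage model; higher = more urgent


def is_urgent(ticket: Ticket) -> bool:
    """Hard-coded rule: case-insensitive keyword match, independent of the AI's score."""
    text = ticket.text.lower()
    return any(keyword in text for keyword in URGENT_KEYWORDS)


def triage(tickets: list[Ticket]) -> tuple[list[Ticket], list[int]]:
    """Order the queue with rule-matched tickets first, then by AI priority.

    Returns the ordered queue and the IDs that must be escalated to human staff.
    """
    # Sort key: urgent tickets first (False sorts before True), then highest AI score.
    queue = sorted(tickets, key=lambda t: (not is_urgent(t), -t.ai_priority))
    escalate = [t.ticket_id for t in queue if is_urgent(t)]
    return queue, escalate


tickets = [
    Ticket(1, "How do I export a report?", 0.9),
    Ticket(2, "Total OUTAGE on our dashboard", 0.1),
    Ticket(3, "Billing question", 0.5),
    Ticket(4, "Possible safety hazard with a device", 0.2),
]
queue, escalate = triage(tickets)
print([t.ticket_id for t in queue], escalate)  # [4, 2, 1, 3] [4, 2]
```

Plain keyword matching is intentionally blunt. It will miss paraphrases like "the site is down," which is why the keyword list itself should be treated as a governed artifact that someone owns and reviews.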

Bring governance-ready AI to the enterprise with Zapier

AI has made its way into every area of daily business operations. Chatbots are running customer service, AI agents are advising on large-scale decisions, and GenAI is churning out long-form copy in seconds.

Responsible AI governance determines whether you adopt this tech safely or become a news headline. With Zapier AI, you don't have to choose between moving fast and maintaining safety. Zapier's enterprise-grade security compliance keeps AI under control, with role-based access to AI integrations, audit logs, real-time monitoring, model training opt-out, and everything else you need for true oversight.

Connect AI tools to the rest of your tech stack in minutes and create AI workflows securely and accurately across governed systems.
