Historical service metrics are essential to making good decisions for the future. This is how we used Thanos and Prometheus to store 130 TB of metrics, retain data for years, and return query results within a few seconds.

What we expect from our system

As infrastructure grows in complexity, we need more sophistication from our observability tools. The main requirements for modern monitoring systems are:

Availability: (be reliable with a high uptime)

Scalability: (be able to scale horizontally and handle the load)

Performance: (return results reasonably quickly)

Durability: (keep metrics for a long time)

How we built our system at Zapier

Many tools aim to meet these requirements, including Thanos, Grafana Mimir, VictoriaMetrics, and a range of commercial products. We solved the challenges with Prometheus Operator and Thanos.

Prometheus Operator simplifies the deployment and management of Prometheus on Kubernetes. Thanos is a long-term metrics platform that extends the basic capabilities of Prometheus with scalability, durability, and much better query performance.

There are a few motivations behind that tool selection:

We have been running our monitoring system for ages, and Thanos has proved to be one of the system's most durable components. We continue to trust this tool, and it pays us back with stability.

Prometheus Operator makes life much easier when running Prometheus in production. It's simple to configure and automatically handles changes.

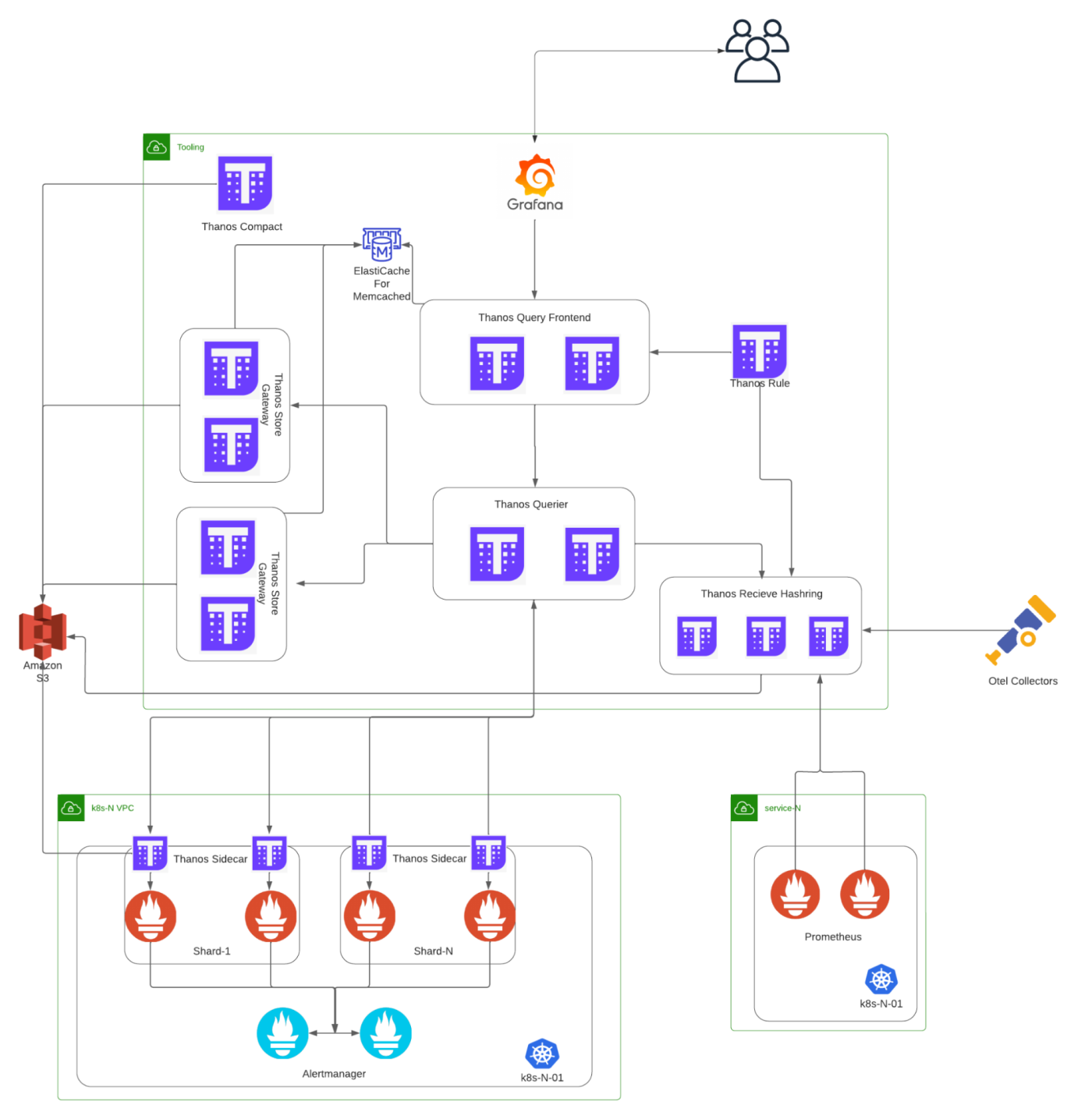

Here is our simplified architecture diagram with Thanos and Prometheus:

What we've learned

Thanos and Prometheus are robust systems that provide fast and reliable monitoring when you run them according to best practices. Here are the lessons we've learned that can significantly improve the performance, scalability, and availability of your monitoring system.

1. Caching

Caching is one of the most common ways to reduce response time. Thanos Query Frontend, which sits in front of Thanos Query and caches query results, is key to improving query performance.

Here are the settings that we use to serve our Thanos Query Frontend:

    query-frontend-config.yaml: |
      type: MEMCACHED
      config:
        addresses: ["host:port"]
        timeout: 500ms
        max_idle_connections: 300
        max_item_size: 1MiB
        max_async_concurrency: 40
        max_async_buffer_size: 10000
        max_get_multi_concurrency: 300
        max_get_multi_batch_size: 0
        dns_provider_update_interval: 10s
        expiration: 336h
        auto_discovery: true
    query-frontend-go-client-config.yaml: |
      max_idle_conns_per_host: 500
      max_idle_conns: 500
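As a rough sketch of how these two files can be wired in, the Query Frontend container could be started with arguments like the following. The mount path `/etc/thanos`, the downstream URL, and the 24h split interval are assumptions for illustration, not our production values.

```yaml
# Container args for Thanos Query Frontend (illustrative sketch).
args:
  - query-frontend
  # Hypothetical address of the Thanos Query service behind the frontend.
  - --query-frontend.downstream-url=http://thanos-query.monitoring.svc:10902
  # Split long range queries into smaller ones so results can be cached per interval.
  - --query-range.split-interval=24h
  # Response cache settings (the Memcached config shown above), assumed mount path.
  - --query-range.response-cache-config-file=/etc/thanos/query-frontend-config.yaml
  # HTTP client settings used when talking to the downstream querier.
  - --query-frontend.downstream-tripper-config-file=/etc/thanos/query-frontend-go-client-config.yaml
```

Splitting by interval matters for caching: a dashboard that repeatedly asks for the last 30 days can reuse the cached results for completed days and only recompute the most recent one.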

2. Metric downsampling

Do you need 4K resolution on an Apple Watch display? Most likely not. Similarly, you don't need to keep every metric at raw resolution forever. Resolution is the time interval between data points on your graphs. When you query a long time range, you are interested in the general view and trends, not in individual samples. Downsample and compact your data with Thanos Compactor.

Here are our Thanos Compactor settings:

    retentionResolutionRaw: 90d
    retentionResolution5m: 1y
    retentionResolution1h: 2y
    consistencyDelay: 30m
    compact.concurrency: 6
    downsample.concurrency: 6
    block-sync-concurrency: 60

Take care to ensure that Thanos Compactor is actually working! A running pod with no errors in its logs doesn't necessarily mean that compaction is happening. We found this out the hard way. Create alerts that track whether compaction and downsampling take place. You will be surprised how much they affect query performance.
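A minimal sketch of such alerts, written as a Prometheus rule file, might look like this. The metric names are the ones Thanos Compactor exposes in the versions we are aware of, so check them against your Compactor's `/metrics` endpoint. The job label `thanos-compact`, the time windows, and the severities are assumptions to tune for your setup.

```yaml
groups:
  - name: thanos-compact
    rules:
      # The Compactor halts itself on certain errors while the pod keeps running.
      - alert: ThanosCompactHalted
        expr: thanos_compact_halted == 1
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: Thanos Compactor has halted and is not compacting blocks.
      # No compaction produced a new block within the window (assumed 24h).
      - alert: ThanosCompactNoCompactions
        expr: sum(increase(thanos_compact_group_compactions_total[24h])) == 0
        for: 1h
        labels:
          severity: warning
        annotations:
          summary: Thanos Compactor has not compacted any blocks in the last 24 hours.
      # No downsampling attempts within the window (assumed 7d, since
      # downsampling only applies to larger, older blocks).
      - alert: ThanosCompactNoDownsampling
        expr: sum(increase(thanos_compact_downsample_total[7d])) == 0
        for: 1h
        labels:
          severity: warning
        annotations:
          summary: Thanos Compactor has not downsampled any blocks in the last 7 days.
      # The expressions above return nothing if the metrics are missing,
      # so also alert when the Compactor is not being scraped at all.
      - alert: ThanosCompactAbsent
        expr: absent(up{job="thanos-compact"} == 1)  # hypothetical job label
        for: 15m
        labels:
          severity: critical
        annotations:
          summary: Thanos Compactor is down or not being scraped.
```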

3. Keep your metrics in good shape

No matter how much you cache or downsample your data, too many metrics with high cardinality will kill your performance.

Our recommendation is simple: store only the metrics you actually use and discard the rest. We have disabled most of the Node Exporter collectors and keep just what we need.

Here is an example of our Node Exporter settings:

    --no-collector.arp
    --no-collector.bonding
    --no-collector.btrfs
    --no-collector.conntrack
    --no-collector.cpufreq
    --no-collector.edac
    --no-collector.entropy
    --no-collector.fibrechannel
    --no-collector.filefd
    --no-collector.hwmon
    --no-collector.infiniband
    --no-collector.ipvs
    --no-collector.nfs
    --no-collector.nfsd
    --no-collector.powersupplyclass
    --no-collector.rapl
    --no-collector.schedstat
    --no-collector.sockstat
    --no-collector.softnet
    --no-collector.textfile
    --no-collector.thermal_zone
    --no-collector.timex
    --no-collector.udp_queues
    --no-collector.xfs
    --no-collector.zfs
    --no-collector.mdadm
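Disabling collectors works when the exporter offers a switch. For exporters that don't, unwanted series can also be dropped at scrape time with `metric_relabel_configs`. The snippet below is only a sketch: the job name, the target, and the choice of metrics to drop are illustrative assumptions rather than our actual configuration.

```yaml
scrape_configs:
  - job_name: node-exporter                 # hypothetical job name
    static_configs:
      - targets: ["node-exporter:9100"]     # hypothetical target
    metric_relabel_configs:
      # Drop series whose metric name matches the regex before they are stored.
      # The regex is anchored, so it must match the whole metric name.
      - source_labels: [__name__]
        regex: "node_scrape_collector_.*|go_gc_.*"
        action: drop
```

Metric relabeling runs after the scrape, so the exporter still does the work of producing these series. Where a collector flag exists, disabling the collector is the cheaper option.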

4. Shard your long-term storage

We keep 130 TB of metrics stored in S3 and served by the Thanos Store.

This huge amount of data is challenging to serve with a single group of Thanos Store services responsible for scanning every TB of metrics.

The solution here is similar to databases. When a table is too big, just shard it! We have done the same with our S3 storage. We run three groups of Thanos Store instances, each serving a different time range of the blocks in our S3 bucket.

Here are our settings:

    # Sharded store covering 0 - 30 days
    thanos-store-1:
      "min-time": -30d
      "store.grpc.series-sample-limit": "50000000"

    # Sharded store covering 30 - 90 days
    thanos-store-2:
      "min-time": -90d
      "max-time": -30d
      "store.grpc.series-sample-limit": "50000000"

    # Sharded store covering > 90 days
    thanos-store-3:
      "max-time": -90d
      "store.grpc.series-sample-limit": "50000000"

5. Scaling and high availability

While we've talked a lot about performance, scalability and availability are often more important.

How can we scale Prometheus? Can we scale up replicas, then sit back and relax? Unfortunately, not quite. Prometheus is designed to be simple and to provide its core features reliably. Scaling and high availability aren't available out of the box.

There are a lot of discussions about scaling and sharding in the Prometheus Operator and other places. We would split these approaches into two main groups:

Manual scaling

Semi-automatic scaling

There are two ways to scale semi-automatically: the Prometheus Operator's hash-based sharding and Kvass, a horizontal auto-scaling solution. However, we opted for manual scaling.

The primary motivation for manual scaling was to split services from shared Kubernetes clusters into small independent clusters. Also, our team is actively working on adopting OpenTelemetry (OTel) Collectors. In the future, we may stop running Prometheus and instead push metrics directly to Thanos or another central monitoring system.

In our manual scaling approach, we run independent Prometheus shards, each responsible for a different service group.

For example:

We have Prometheus servers that scrape and manage the infrastructure metrics.

Other Prometheus servers scrape and manage Kafka metrics.

The ideal setup for us would be to run HA pairs of Prometheus for every service group or namespace. We're slowly moving toward that architecture, which has proven reliable and scalable.
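With the Prometheus Operator, a manually sharded setup with HA pairs could look roughly like the two resources below. This is a sketch under assumptions: the resource names, namespace, external label, and selector labels are hypothetical, and the Thanos sidecar and storage settings are left out for brevity.

```yaml
# One Prometheus per service group, each run as a pair of replicas.
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: infrastructure              # hypothetical name
  namespace: monitoring             # hypothetical namespace
spec:
  replicas: 2                       # HA pair: both replicas scrape the same targets
  replicaExternalLabelName: prometheus_replica
  externalLabels:
    shard: infrastructure           # hypothetical label identifying the shard
  serviceMonitorSelector:
    matchLabels:
      prometheus: infrastructure    # only ServiceMonitors with this label are picked up
---
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: kafka                       # hypothetical name
  namespace: monitoring
spec:
  replicas: 2
  replicaExternalLabelName: prometheus_replica
  externalLabels:
    shard: kafka
  serviceMonitorSelector:
    matchLabels:
      prometheus: kafka
```

Because both replicas in a pair collect the same data, the query layer has to deduplicate it. Thanos Query does this when it is told which label distinguishes replicas, for example with `--query.replica-label=prometheus_replica`.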

Conclusion

We're sure there are many other good suggestions and tips for improving the monitoring system. We found these five recommendations to be the most critical and effective. As the Pareto principle suggests, we believe these five recommendations make up 80 percent of the impact on the performance and reliability of our monitoring system.

We continue to improve and grow. We set the next metric milestone for our monitoring system to 240 TB of metrics per year. The new goal has new challenges and solutions. Stay tuned for updates from our future monitoring journeys!