How to Set Up TravisCI-like Continuous Integration with Docker and Jenkins

Lately, I've been working on a number of improvements to our continuous integration setup here at Zapier. It's a delicious combination of Jenkins, Docker, and the GitHub commit status API that anyone can accomplish with a little work!

The Vision

Before delving into our continuous integration (CI) setup, it's important to note that the Zapier engineering team follows what is known as GitHub Flow. There's no super formal procedure here, just some simple ground rules:

- Long-running features are developed on feature branches

- Small changes that can ship at any time are pushed directly to develop

- When features are finished, a pull request is opened to develop for any ad hoc review before merging

- The develop branch is merged to master via pull request in preparation for deployment

- Deployments can happen many times a day! So whenever something is pushed to develop, it should be a "potentially shippable" feature!

With these details in mind, we wanted our tests to run whenever a pull request is opened and whenever a new commit is added to an open pull request.
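As a rough sketch of that trigger rule (and not how any particular plugin implements it), the decision can be written as a small predicate over GitHub's `pull_request` webhook payload. GitHub reports an `action` of `opened` for a new pull request and `synchronize` when commits are pushed to an existing one. The function name `should_run_tests` and the choice to also trigger on `reopened` are assumptions made purely for illustration.

```python
# Hypothetical helper: decide whether a GitHub `pull_request` webhook
# event should kick off a test run.

# "opened" = a new PR, "synchronize" = new commits pushed to an open PR.
# Including "reopened" is an assumption, not something the post states.
TRIGGER_ACTIONS = {"opened", "synchronize", "reopened"}


def should_run_tests(event: dict) -> bool:
    """Return True if this webhook payload should trigger a test run."""
    pull_request = event.get("pull_request") or {}
    return (
        event.get("action") in TRIGGER_ACTIONS
        and pull_request.get("state") == "open"
    )
```

Events such as `closed` or `labeled` fall through and return `False`, so only the two cases we care about (plus the assumed `reopened`) start a build.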

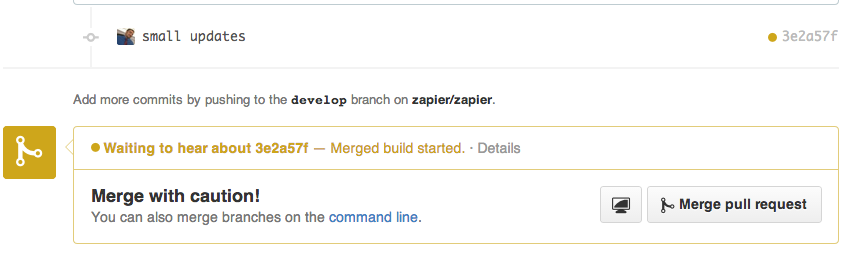

Jenkins actually works quite well here. Coming from a Java background, I had a ton of experience with Jenkins, so getting it set up and running our tests was easy enough. But how could we have tests run on every pull request and update the pull request the same way Travis CI does? Enter GitHub Pull Request Builder.

GitHub Pull Request Builder

This is a pretty sweet Jenkins plugin that will trigger a job off of opened pull requests. Once it is configured for a project, open a pull request and you'll see this indication on GitHub that tests are running.

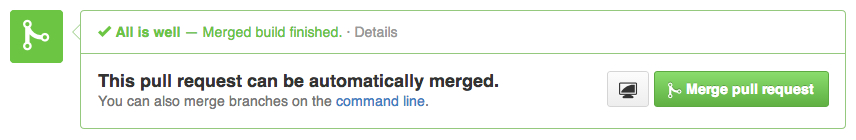

If they pass, all is green!

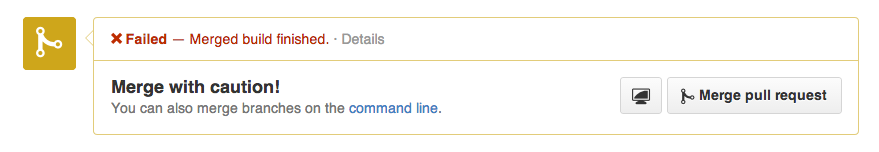

If they fail though, you'll see a warning.

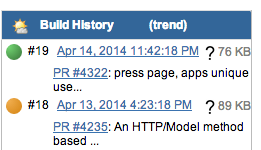

The "Details" link in both screenshots links back to the passing or failed test run. Also, the Jenkins job for this project keeps track of the build history for each pull request, with a handy link back to the PR.
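The plugin takes care of all this for you, but it helps to know what is going on underneath: GitHub exposes a commit status endpoint (`POST /repos/{owner}/{repo}/statuses/{sha}`) that accepts a `state` of `pending`, `success`, `failure`, or `error`, plus a `target_url` that becomes the "Details" link. Here is a minimal sketch of building such a request by hand. The helper name `build_status_request`, the `ci/jenkins` context label, and the description text are illustrative assumptions, not what the plugin actually sends.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"
VALID_STATES = {"pending", "success", "failure", "error"}


def build_status_request(repo, sha, state, build_url, token):
    """Build (but do not send) a commit status request for one commit.

    `repo` is "owner/name"; `build_url` is where "Details" should point.
    """
    if state not in VALID_STATES:
        raise ValueError(f"unknown state: {state!r}")
    payload = {
        "state": state,
        "target_url": build_url,
        "description": f"Build {state}",  # assumed wording
        "context": "ci/jenkins",          # hypothetical label
    }
    return urllib.request.Request(
        f"{GITHUB_API}/repos/{repo}/statuses/{sha}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"token {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would actually send it. Keeping the construction separate from the sending makes the payload easy to inspect without touching the network.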

All good here! Next, how can we get builds to run in an isolated fashion?

Docker

We've been using Docker at Zapier for a number of things since its initial release at PyCon, and we love it! For me, Docker and Jenkins have been a perfect fit. I've been working with CI servers in some fashion since 2007, and in my experience the CI server becomes, over time, a hodgepodge of system libraries, special applications, and different language installations needed for the various jobs running on it. It's especially nasty when you have a CI box with applications that depend on the same native library but need different, incompatible versions of it!

With our current setup, almost all applications are run through Docker containers. Tests for our Python and Node.js applications all run within a container. Further, one of our Django applications even has a Docker image that comes complete with Redis and MySQL running inside of it. Gone are the days of having various gemsets, virtualenvs, and other nightmares installed on our Jenkins server. Now we just have Jenkins and Docker, with each application repository containing a Dockerfile that defines the image it needs to run in.

The Setup

In the root of our project we have a single Dockerfile that defines everything the application needs installed to run, and typically we define a CMD to be the default test command to run. For example, here's the Dockerfile for one of our run-of-the-mill Flask applications.

A dedicated Jenkins job builds an image from this Dockerfile. We keep the image building as a separate task because it's time-consuming. Once an image is built, containers can be run from it over and over, and the startup time is practically nil!

We then define a job to run tests. Each project typically has two test jobs: one that runs tests on each pull request and another that runs tests periodically. While their setups differ somewhat, the core task of running tests is the same. We just execute a shell command like the following.

This runs a container with /project mounted to the workspace directory on the Jenkins server and copies the test artifacts out of it afterward. We can then have Jenkins publish the test results, coverage reports and all of that good stuff.
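To make those two steps concrete, here is a small sketch that builds the corresponding `docker run` and `docker cp` invocations as argument lists. It is an approximation under stated assumptions rather than our exact job: the helper names, the image and container names, and the artifact path are all hypothetical. Only the `/project` mount point comes from the description above.

```python
def docker_test_command(image, workspace, container_name):
    """argv that runs the image's default CMD with the workspace at /project."""
    return [
        "docker", "run",
        "--name", container_name,        # named so artifacts can be copied later
        "-v", f"{workspace}:/project",   # mount the Jenkins workspace
        image,
    ]


def copy_artifacts_command(container_name, src, dest):
    """argv that copies `src` out of the (stopped) container into `dest`."""
    return ["docker", "cp", f"{container_name}:{src}", dest]


if __name__ == "__main__":
    # Hypothetical values; a real job would take these from Jenkins.
    run_cmd = docker_test_command(
        "myapp-tests", "/var/lib/jenkins/workspace/myapp", "myapp-pr-build"
    )
    cp_cmd = copy_artifacts_command("myapp-pr-build", "/tmp/test-results", "./test-results")
    print(" ".join(run_cmd))
    print(" ".join(cp_cmd))
```

Each list can be handed to `subprocess.run` as is. The container is deliberately not started with `--rm`, since `docker cp` needs the stopped container to still exist when the artifacts are copied out.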

As I mentioned previously, we also have a separate Jenkins job with a similar setup that runs tests against the master branch periodically. This ensures that projects with no activity for a prolonged period of time don't wind up in a state where engineers can't even run the tests when they pull the project down to work on it.

Shipyard

The final piece of the puzzle is Shipyard. When we first started using Docker with Jenkins, one annoying thing that kept happening was old images and containers being left behind, taking up precious disk space and other system resources. This usually meant ssh'ing into the CI server to clean up containers. So we installed Shipyard on the CI server to provide a more graphical interface for managing containers.

Using the Jenkins Sidebar Plugin, we can link directly to the Shipyard interface with a Docker icon so engineers can quickly navigate to it if need be! ;)
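For comparison, the kind of manual cleanup that used to require an ssh session might look roughly like the sketch below: list the IDs of exited containers with `docker ps`, then remove each one with `docker rm`. This is a scripted alternative to the Shipyard approach described above, not something Shipyard itself does, and the helper names are hypothetical.

```python
def list_exited_command():
    """argv that prints the IDs of all exited containers, one per line."""
    return ["docker", "ps", "--all", "--quiet", "--filter", "status=exited"]


def remove_commands(ps_output):
    """Turn the output of the listing command into one `docker rm` per ID."""
    container_ids = ps_output.split()
    return [["docker", "rm", container_id] for container_id in container_ids]
```

Feeding the output of the first command into `remove_commands` yields the argument lists to run. Empty output simply produces no commands, so the script is safe to run on a clean box.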

Alternative Approaches

Of course, this "Do It Yourself" type of setup isn't for everyone, and while the Docker + CI ecosystem is still young, it is growing. If you use Jenkins, there is a Docker Plugin, though it is a little immature at the time of this writing. There is also Drone CI, which in my opinion is a serious contender in the space.

If you're using Docker in your continuous integration setup, we'd love to hear how you've accomplished it!
