AI is everywhere. Thousands of new AI apps launch every day. And there's a constant stream of messages reminding you that if you're not getting on board the AI train, you're falling behind. But don't let the pressure to jump on the AI Express lead you to ignore real cybersecurity risks.

We're embedding AI into our browsers, email inboxes, and document management systems. We're giving it autonomy to act on our behalf as a virtual assistant. We're sharing our personal and company information with it. All this is creating some new cybersecurity risks and amplifying the risk of traditional hacker games.

I asked leaders in generative AI, both those who develop AI apps and those who work in cybersecurity, about the security risks that come with AI. Here's what I learned.

New risks from generative AI

First, let's look at five new cybersecurity risks associated with using generative AI tools.

1. Poor development process

The pace at which companies can deploy generative AI applications is unprecedented in the software development world. The normal controls on software development and lifecycle management may not always be present.

According to Adrian Volenik, founder of aigear.io, "It's incredibly easy to disguise an AI app as a genuine product or service when in reality, it's been put together in one afternoon with little or no oversight or care about the user's privacy, security, or even anonymity."

Greg Hatcher, founder of White Knight Labs, a cybersecurity consulting firm, agrees:

"There's an AI Gold Rush. All over Twitter and LinkedIn, there's tons of messages pushing new AI applications that someone wrote the night before. And you have to use them or you'll fall behind. But it's a bunch of bunk. They're just throwing some HTML and JavaScript to make a front end, but really, it's just ChatGPT underneath the hood."

2. Elevated risk of data breaches and identity theft

When we share our personal or corporate information with any software application, we do so trusting that the company handles it responsibly and has strong protections against cyberattacks in place. However, with generative AI tools, we may be unintentionally sharing more than we think.

Ryan Faber, founder and CEO of Copymatic, cautions: "AI apps do walk around in our user data to fetch important information to enhance our user experience. The lack of proper procedures on how the data is collected, used, and dumped raises some serious concerns."

3. Poor security in the AI app itself

The addition of any new app into a network creates new vulnerabilities that could be exploited to gain access to other areas in your network. Generative AI apps pose a unique risk because they contain complex algorithms that make it difficult for developers to identify security flaws.

Sean O'Brien is the founder of the Yale Privacy Lab and a lecturer at Yale Law School. He told me:

"AI is not yet sophisticated enough to understand the complex nuances of software development, which makes its code vulnerable. Research assessing the security of code generated by GitHub Copilot found that nearly 40% of top AI suggestions as well as 40% of total AI suggestions led to code vulnerabilities. The researchers also found that small, non-semantic changes such as comments could impact code safety."

O'Brien shared some examples of how these risks could manifest:

If AI models can be tricked into misclassifying dangerous input as safe, an app developed with this AI could execute malware and even bypass security controls to give the malware elevated privileges.

AI models that lack human oversight can be vulnerable to data poisoning. If a chatbot developed with AI is asked for the menu of a local restaurant, where to download a privacy-respecting web browser, or what the safest VPN to use is, the user could instead be directed to imposter websites containing ransomware.

4. Data leaks that expose confidential corporate information

If you've been playing around with AI tools for a while, you've probably already learned how much a well-written prompt matters for getting good results. You provide the AI chatbot with background information and context to get the best response.

Well, you may be sharing proprietary or confidential information with the AI chatbot, and that's not good. Research by Cyberhaven, a data security company, found that:

11% of data employees paste into ChatGPT is confidential.

4% of employees have pasted sensitive data into it at least once.

Employees are sharing the company's intellectual property, sensitive strategic information, and client data.

Dennis Bijker, CEO of SignPost Six, an insider risk training and consultancy firm, reiterates:

"A most concerning risk for organizations is data privacy and leaking intellectual property. Employees might share sensitive data with AI-powered tools, like ChatGPT and Bard. Think about potential trade secrets, classified information, and customer data that is fed into the tool. This data could be stored, accessed, or misused by service providers."

5. Malicious use of deepfakes

Voice and facial recognition are increasingly used as access control measures. AI gives bad actors an opportunity to create deepfakes that get around that security, as several news reports have documented.

How to strengthen your security posture in the age of AI

It will take some time to catch up to these new risks, but there are a few places to get started.

Research the company behind the app

You can evaluate an app's reputation and track record with its other tools and services. But don't assume a known name provides an acceptable level of security.

For example, Hatcher says, "There isn't necessarily greater security in going with a big name. Some companies are more protective of personally identifiable information and customer information. It's actually pretty hard to get a malicious iOS app on the Apple app store. It's fairly easy to do that on the Android Play and Google Play stores."

You also want to review the company's privacy policy and security features. Information you share with an AI tool may get added to its large language model (LLM), which means it could surface in responses to other people's prompts.

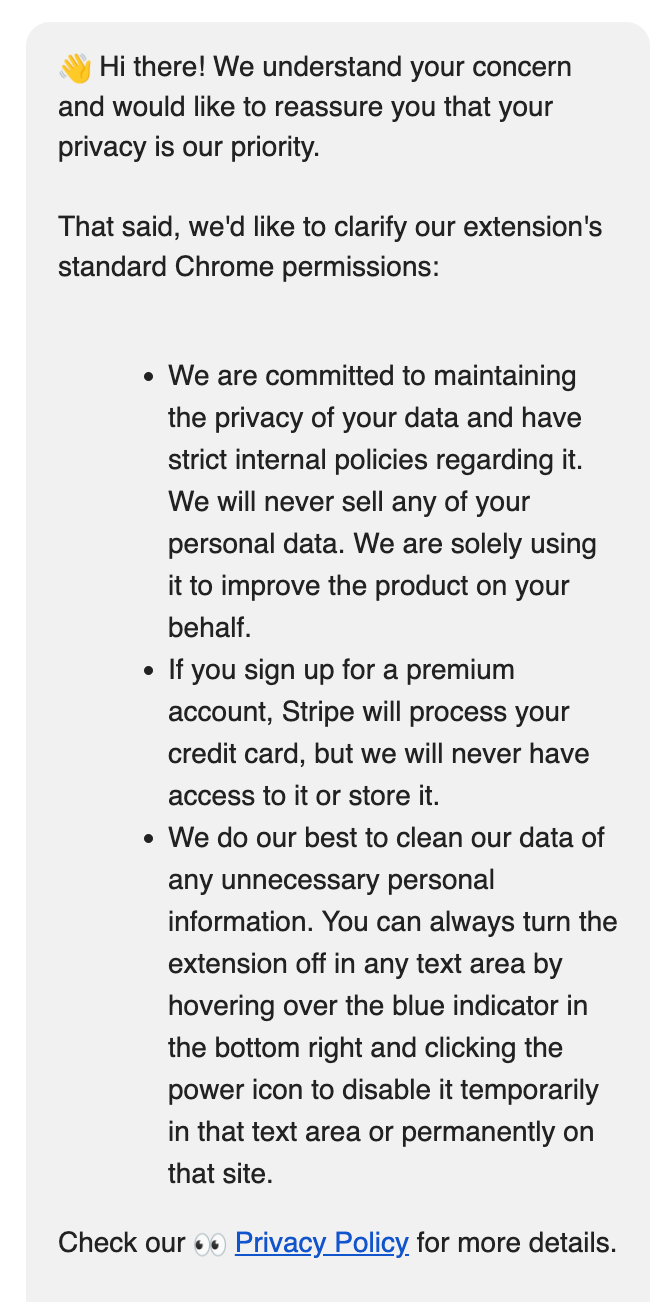

Hatcher recommends asking the company for an attestation letter that says the app security has been tested by verified third parties. I tried this with an AI extension I'd embedded into my email service. Here's the response I got:

Not a confidence-builder—and I removed the browser extension.

Train employees on safe and proper use of AI tools

You likely already have acceptable-use policies for social media, and you should already be training employees on good cybersecurity behaviors. Widespread use of generative AI tools means adding some new policies and training topics to that framework. These can include:

What they can and cannot share with generative AI apps

General overview of how LLMs work and potential risks in using them

Only allowing approved AI apps to be used on company devices

Here's a nice overview of what some big companies are doing regarding generative AI usage by their employees.

Consider using a security tool designed to prevent oversharing

As generative AI tool production continues, we'll soon see a growing collection of cybersecurity tools designed specifically for their vulnerabilities. LLM Shield and Cyberhaven are two that are designed to help prevent employees from sharing sensitive or proprietary information with a generative AI chatbot. I'm not endorsing any specific tool—just letting you know the market is out there and will grow.
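To make the idea concrete, here is a minimal sketch of the kind of check such a tool might run before a prompt leaves the company network. It is not how LLM Shield or Cyberhaven work; the `redact` function and the three patterns are hypothetical and chosen only for illustration, and real sensitive data is far more varied than a few regular expressions can catch.

```python
import re

# Hypothetical patterns for illustration only. A real deployment would need
# rules tuned to its own data (customer IDs, project code names, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{16,}\b"),
}


def redact(prompt):
    """Replace each match with a [LABEL] placeholder and count the hits."""
    counts = {}
    for label, pattern in PATTERNS.items():
        prompt, hits = pattern.subn(f"[{label}]", prompt)
        if hits:
            counts[label] = hits
    return prompt, counts


if __name__ == "__main__":
    cleaned, found = redact("Follow up with jane.doe@example.com about 123-45-6789")
    print(cleaned)
    print(found)
```

A check like this could either block the prompt or send the redacted version, depending on how strict your policy is.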

You can also use a network auditing tool to monitor what AI apps are now connecting to your network.
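As a rough sketch of what that monitoring boils down to, the snippet below compares hostnames seen on the network against a list of approved AI services. Everything in it is an assumption: the domain lists are placeholders, the function names are hypothetical, and the hostnames would come from whatever DNS or proxy logs your auditing tool exports.

```python
from typing import Iterable, Optional

# Placeholder lists for illustration; these are not a vetted catalog of AI services.
KNOWN_AI_DOMAINS = {"example-approved-ai.com", "example-chatbot.io", "example-ai-notes.app"}
APPROVED_AI_DOMAINS = {"example-approved-ai.com"}


def matching_domain(host: str, domains: set) -> Optional[str]:
    """Return the listed domain that host equals or is a subdomain of, if any."""
    for domain in domains:
        if host == domain or host.endswith("." + domain):
            return domain
    return None


def unapproved_ai_hosts(observed_hosts: Iterable[str]) -> list:
    """Return the sorted, de-duplicated hosts that hit a known but unapproved AI domain."""
    flagged = set()
    for raw in observed_hosts:
        host = raw.strip().lower().rstrip(".")  # normalize case and trailing dot
        domain = matching_domain(host, KNOWN_AI_DOMAINS)
        if domain is not None and domain not in APPROVED_AI_DOMAINS:
            flagged.add(host)
    return sorted(flagged)


if __name__ == "__main__":
    seen = ["api.example-chatbot.io", "chat.example-approved-ai.com", "intranet.example.org"]
    print(unapproved_ai_hosts(seen))
```

The output is a starting point for a conversation with the employee or team involved, not proof of wrongdoing.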

AI improves hacker productivity too

Everybody wants that edge.

AI tools aren't just for the hardworking folks or white hat hackers. Bad actors are also using AI and generative AI tools to improve and scale up their ability to conduct the types of bad acts we already need to protect against.

One cybersecurity company, HYAS Labs, built BlackMamba, an AI-based malware that could dynamically alter its own code each time it executes, which allows it to bypass endpoint detection software. In a blog sharing the results of their BlackMamba experiment, the researchers wrote:

"The threats posed by this new breed of malware are very real. […] As the cybersecurity landscape continues to evolve, it is crucial for organizations to remain vigilant, keep their security measures up to date, and adapt to new threats that emerge by operationalizing cutting-edge research being conducted in this space."

BlackMamba was a keylogger type of malware. Keyloggers are used to capture access credentials that hackers use to get into networks, steal information, or take control in a ransomware attack.

Hackers are also using generative AI tools to improve the sophistication of their phishing attacks. They can make the attacks more personal by building apps that crawl the internet, including social media and other public sources of information, to create detailed phishing profiles of targets. The same approach lets them gather personal information at scale. And generative AI tools can help hackers generate better spoof websites that trap people into sharing their credentials. They can easily create countless spoof websites, each with only a minor difference from the other fakes, increasing their odds of getting past network security tools.
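On the defensive side, one simple way to catch spoofs that differ only slightly from a real site is to compare each domain against the ones you trust. The sketch below uses Python's standard `difflib.SequenceMatcher` for that comparison. The trusted list, the `lookalike_of` function, and the 0.85 threshold are all assumptions for illustration; a threshold like this would need tuning, and real security tools rely on many more signals than string similarity.

```python
from difflib import SequenceMatcher

# Hypothetical list of domains your organization actually uses.
TRUSTED_DOMAINS = ["example.com", "example-bank.com"]


def lookalike_of(domain, trusted=TRUSTED_DOMAINS, threshold=0.85):
    """Return the trusted domain that `domain` closely imitates, or None.

    An exact match is treated as legitimate. The threshold is an assumed
    starting value, not a recommended setting.
    """
    domain = domain.strip().lower().rstrip(".")
    if domain in trusted:
        return None
    for good in trusted:
        if SequenceMatcher(None, domain, good).ratio() >= threshold:
            return good
    return None


if __name__ == "__main__":
    for candidate in ["examp1e.com", "example.com", "weather.org"]:
        print(candidate, "->", lookalike_of(candidate))
```

String similarity misses some tricks, such as swapping the top-level domain or using look-alike Unicode characters, so treat a check like this as one layer among several.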

Now is a good time to double down on traditional cybersecurity measures

With AI helping hackers improve their traditional scams, it's worth doubling down on your traditional cybersecurity defenses.

Keep your software and operating systems up to date. Hackers exploit known vulnerabilities, and now they can do it much more easily.

Use some sort of anti-virus/anti-malware endpoint protection software.

Tighten up credentials. Use strong passwords with multi-factor authentication. Think about using a passkey where available, instead of passwords.

Have a data and application backup system in place as part of your business continuity plan, so you can stay operational during a ransomware or other attack.

Train yourself and your employees on security-minded behaviors.

Stay safe out there

This isn't an argument to ignore generative AI and its potential. Just don't get distracted by every new shiny object that comes along. Be deliberate and take precautions as you explore. But do explore—I am. We can all enjoy it while bolstering our cybersecurity postures to protect digital assets, operations, and privacy.
