Research Report

The future of AI transformation in 2026

Exclusive trends and insights from enterprise leaders

84%

are confident they'll prove AI ROI this year

74%

say AI is the last budget they'd cut

43%

are investing $5M+ to get there

Introduction

AI is no longer experimental—it’s embedded across enterprise systems, workflows, and decision-making. Now, a new challenge has emerged: enterprises can integrate AI, but scaling it responsibly, measurably, and at pace is still difficult.

This report examines the next chapter of enterprise AI: the transformation from adoption to orchestration. It explores how leaders assess their maturity, build governance into every layer of automation, develop AI fluency across the workforce, and set higher ROI standards.

Our findings come from 200 enterprise leaders (CIOs, CTOs, VPs, and Directors of IT, Engineering, Operations, and Data) across the U.S., Canada, and Europe. These leaders are shaping AI strategy, governance, and performance inside their enterprise organizations. Their collective perspectives offer a dual view: the boardroom’s strategic intent for AI contrasted with the operational reality for teams leveraging AI at scale.

AI’s future isn’t just about faster adoption—it’s about smarter systems, stronger accountability, and clearer proof of value. This report reveals what enterprise AI maturity will really look like in 2026 and how leaders plan to get there.

Chapter 1

How enterprises plan to scale AI maturity in 2026

AI maturity is accelerating—but only a quarter of companies are close to full orchestration

25%

of leaders expect full AI orchestration by 2026

43%

of leaders expect to reach agentic AI

30%

of leaders will focus on task-level automation

Enterprises are advancing through distinct stages of AI maturity, but progress is uneven across the journey.

The maturity model follows three distinct stages:

AI-powered workflows. Automation improves discrete tasks within individual functions.

Agentic AI. Autonomous systems work together across functions and operate with minimal human input.

Scaled orchestration. AI is the connective layer across an entire enterprise, embedding governance, visibility, and coordination across end-to-end processes.

By 2026, leaders expect to be spread widely across that curve:

25% anticipate reaching full-scale orchestration, where AI functions as a governed operating system for the business

43% expect to reach the agentic AI stage, linking systems and workflows with limited human supervision

30% will still focus on task-level automation within individual functions

2% report having no maturity target at all

Maturity has become the clearest signal of enterprise readiness: the question is not whether an organization is using AI, but how deeply AI is embedded in its structure.

This new data exposes the growing chasm between the automation and orchestration stages. Most enterprises are still building that connective tissue—consolidating data, workflows, and governance—and only a small minority have taken steps to operationalize AI across the organization.

Leaders trust AI with internal processes long before leveraging it for customer-facing workflows

Enterprise leaders trust AI to manage these business-critical workflows

We asked leaders which business-critical workflows they'll allow AI to manage without human intervention, and the top three answers spanned security and identity management, finance and procurement, and customer communications.

40% — Security and identity management

31% — Finance and procurement

25% — Customer communications

Most (71%) enterprise leaders are confident AI will fully manage internal, rules-driven workflows, such as identity management and procurement, by 2026. However, many are still hesitant to forgo the human touch when it comes to customer communications and tasks.

Only 4% of leaders say they'll always need a layer of human approval for all workflows. So, nearly all enterprises agree that AI will eventually manage some functions independently (with a few guardrails in place, of course).

When asked to forecast which business-critical workflows AI will manage without human intervention, leaders pointed to:

Security and identity management (40%). Managing user provisioning and access permissions, where rules and controls are clearly defined.

Finance and procurement (31%). Handling approvals, invoices, and vendor onboarding under structured, policy-based hierarchies.

Customer communications (25%). Managing escalations and service updates, where automation supports response consistency but still requires oversight to protect customer trust.

Precision and accountability still define the outer limits of trust

98%

of leaders believe that some business-critical workflows should never be fully automated

83%

say AI error rates must stay at 5% or below for high-stakes operations

When asked which workflows they expect AI systems to never manage, leaders most frequently said:

29% — Legal and compliance approvals

30% — Sensitive workforce actions

26% — Finance or budget decisions

That growing confidence doesn’t translate to blind autonomy. Leaders may be willing to let AI execute governed, rule-based work, but they are equally clear about what workflows still need human judgment.

98% of leaders believe that some business-critical workflows should never be fully automated, underscoring that human oversight remains the ultimate safeguard against potential risks.

When asked which workflows they expect AI systems will never manage, leaders most frequently said:

Legal and compliance approvals (29%). Requiring human review to ensure accountability and adherence to regulatory standards.

Sensitive workforce actions (30%). For HR tasks that require interpersonal judgment and ethics, including hiring, promotions, and layoffs.

Finance or budget decisions (26%). Involving strategic prioritization and trade-offs that depend on human context and discretion.

Only 2% of leaders believe every workflow could eventually run without human involvement.

Additionally, most enterprise leaders acknowledge that AI is fallible. 83% of enterprise leaders say AI error rates must stay at 5% or below for high-stakes operations, proving that trust in automation is conditional.

AI earns leaders’ trust through measurable precision, not assumed competence.

As AI becomes embedded, expectations for accuracy only increase—greater adoption brings stricter accountability, not relaxed oversight. In effect, trust grows only as governance deepens.
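The 5% ceiling described above can be expressed as a simple gate. The following is a minimal sketch rather than anything drawn from the survey: the `WorkflowStats` type, the `within_error_budget` function, and the sample counts are hypothetical, and the 5% figure is the only value taken from the report.

```python
from dataclasses import dataclass

# Ceiling cited by 83% of surveyed leaders for high-stakes operations.
MAX_ERROR_RATE = 0.05


@dataclass
class WorkflowStats:
    """Hypothetical run counts for one AI-managed workflow."""
    name: str
    runs: int
    errors: int

    @property
    def error_rate(self) -> float:
        # Treat a workflow with no runs as unproven rather than error-free.
        return self.errors / self.runs if self.runs else 1.0


def within_error_budget(stats: WorkflowStats, ceiling: float = MAX_ERROR_RATE) -> bool:
    """Return True when the observed error rate is at or below the ceiling."""
    return stats.error_rate <= ceiling


# Illustrative numbers only; they are not survey data.
provisioning = WorkflowStats("access provisioning", runs=1000, errors=30)
invoicing = WorkflowStats("invoice approval", runs=400, errors=32)

for wf in (provisioning, invoicing):
    status = "within budget" if within_error_budget(wf) else "needs human review"
    print(f"{wf.name}: {wf.error_rate:.1%} -> {status}")
```

In this sketch a workflow that has never run is treated as failing the check, which reflects the report's point that trust follows measured precision rather than assumed competence.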

Visibility, not volume, determines who scales responsibly

A lot of stuff in work is pretty like ambiguous, ill-defined and very much on the front lines... it’s actually pretty hard for senior management to just be like, this is what you should be doing with AI. Cause frankly, you don’t even know what people are doing day to day.

40%

say visibility is the most critical capability

33%

cite workflow errors as the first warning sign

30%

point to governance gaps when AI falters

Leaders view transparency as the foundation for responsible AI scale. 40% rank end-to-end visibility and observability as the most critical capability—outpacing coordination across AI agents (22%), traceability of data sources and decisions (17%), and optimization for performance and cost (13%). This emphasis on full visibility underscores a broader shift toward transparency over speed or efficiency as the defining advantage in responsible AI.

Leaders understand that scale without visibility is risk, not progress. Sustainable maturity depends on whether enterprises can monitor, audit, and explain how AI makes decisions in real time. Visibility, not volume, will determine who scales responsibly.

When AI programs falter, the failures are systemic, not cultural. 33% of leaders cite workflow errors, 30% governance gaps, and 29% stakeholder-trust erosion as leading warning signs, while only 8% point to workforce strain. So, AI success is being measured less by enthusiasm and more by integrity: the stability, governance, and traceability of the systems themselves.

CASE STUDY

How Grammarly slashed lead sync errors 87% with Zapier

With Zapier, I can just block off an hour and figure it out myself. We’ve been able to scale our operations while staying lean—Zapier lets us do more without needing more people.

The challenge

With 1,500+ employees and millions of users worldwide, Grammarly’s Marketing and Support Ops teams struggled with numerous manual processes across systems that didn't communicate with each other. Lead data syncing was error-prone and time-consuming, while support agents spent hours manually transferring data between tools, which took time away from actually helping customers.

The solution

With a little help from Zapier, Marketing Ops automated lead routing from LinkedIn ads directly to their CRM, while Support Ops eliminated manual data entry by auto-tagging conversations in Intercom and streamlining dev escalations. And they built these systems themselves—no waiting on Engineering to get around to it.

The results

87% reduction in lead sync errors across paid campaigns

31% improvement in plan efficiency for marketing operations

6 hours per day saved for the Support Ops team by removing manual data entry

Dev escalations handled instantly with automated communication

Over 90% CSAT due to faster, more reliable support workflows

Chapter 2

How AI is redefining the enterprise workforce

AI transformation is driving workforce expansion, not contraction

71%

say AI will reshape their teams

61%

are hiring AI Automation Specialists

51%

plan to redeploy employees into new roles (vs. 21% reducing headcount)

71% of enterprise leaders say AI will reshape teams through redeployment or new hiring. More than half of all leaders (51%) plan to move employees into newly defined roles, while another 20% expect to increase headcount to capitalize on AI opportunities. Only 21% anticipate reductions, and 8% foresee no change. This pattern suggests that AI transformation is expanding organizational capacity, not shrinking it.

Enterprises with embedded AI are using automation to restructure teams, not shrink them. Leaders who say AI is mission-critical are about 2X more likely to anticipate workforce growth, compared to those who say AI is still limited but expanding in their organization.

The picture is clear: most enterprises are reorganizing, not downsizing.
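The arithmetic behind the 71% figure can be checked directly. This is a minimal sketch that uses only the shares quoted above; the variable names are illustrative, and the approximate respondent counts assume the percentages apply to the full sample of 200 leaders, which the report does not state explicitly.

```python
# Shares of leaders by expected workforce change, as quoted in the report.
workforce_plans = {
    "redeploy into new roles": 51,
    "increase headcount": 20,
    "reduce headcount": 21,
    "no change": 8,
}

# "Reshape" combines redeployment and new hiring.
reshape_share = (
    workforce_plans["redeploy into new roles"]
    + workforce_plans["increase headcount"]
)

# Assumption: percentages are of the full sample of 200 leaders.
SAMPLE_SIZE = 200
approx_counts = {
    plan: round(SAMPLE_SIZE * pct / 100)
    for plan, pct in workforce_plans.items()
}

print(f"Reshape share: {reshape_share}%")
print(f"Total of all answers: {sum(workforce_plans.values())}%")
print(approx_counts)
```

The four answers account for all respondents, so the 51% who plan to redeploy are a share of every leader surveyed, not only of the 71% who expect teams to be reshaped.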

AI transformation is reshaping how work gets done and by whom. Employees are moving from manual execution into higher-value roles that focus on oversight, enablement, and system governance. AI and automation are increasingly absorbing repetitive tasks, so human contributions are shifting toward judgment, design, and orchestration—the functions that define the next era of enterprise leadership.

When asked which level is most at risk from AI, leaders ranked entry-level roles as No. 1, followed by middle managers. The exposure comes not from redundancy, but reinvention. They anticipate a shift in how early-career employees build experience and how managers add value as automation takes on more routine tasks.

AI fluency is the new employee benchmark

Leaders are split on whether AI fluency will affect employee promotions and pay in 2026, with 46% saying it will and 54% saying it won't. AI fluency is now a defining signal of readiness: the ability to operate confidently and responsibly within AI-driven systems. As automation becomes a shared layer across functions, fluency isn’t just about productivity; it’s about compliance, accountability, and credibility.

Workforce priorities underscore that shift. Almost two-thirds (65%) of leaders plan to hire an AI Automation Specialist or equivalent by 2026, followed closely by an AI Platform Engineer (64%).

Typically, hiring for AI-focused roles follows a prioritized order:

AI Automation Specialist or equivalent. Embedded in functions to design and maintain AI-driven workflows

AI Platform Engineer or equivalent. Builds and maintains infrastructure for AI applications and orchestration

AI Automation Manager or equivalent. Oversees AI operations, governance, and alignment across teams

Chief AI Officer or equivalent. Executive ownership of AI vision and strategy

Only 6% of leaders don't have any plans to add AI-specific positions to their headcount.

Together, these roles reflect a decisive transition from isolated pilots owned by technical teams to formalized roles that connect AI policy, governance, and execution across the enterprise. These specialized positions show that AI is no longer an experimental function within a company; it’s an organizational discipline that demands sustained oversight, cross-team accountability, and measurable outcomes.

How to build your AI team

Not sure how to recruit employees with these specialized AI skills?

Check out examples of job descriptions and hiring tips in our guide to building an AI transformation team.

The positive and negative impacts of AI

69%

prioritize upskilling over new hires

14%

are hiring AI specialists instead

61%

expect improved employee well-being thanks to AI

Leaders believe AI will positively impact the workforce. 61% expect that'll manifest as increased employee well-being and mobility.

This optimism reflects a major reframing of AI’s role inside enterprise organizations: leaders see automation not as a threat to headcount, but as a release valve for repetitive work that frees people to focus on analysis, creativity, and decision-making.

When asked what workforce strategies would be most important for their organizations to capture value from AI, 69% of leaders cite employee upskilling and AI enablement.

This is far ahead of hiring specialists (14%), redesigning roles to reduce burnout (9%), or linking financial incentives to AI fluency (7%). Enterprises are optimizing for consistency so teams can engage with AI systems safely and effectively, regardless of function or role.

You need to run a hackathon and teach every single person in your team that they can take whatever it is—it’s Claude Code, it’s Lovable, it's Cursor... and we took our recruiting team and had them build apps. And they were just shocked... people have a mental block of ‘I’m not a builder,’ and you’ve got to unblock it for them, and you’ve got to give them that chance.

Curiosity matters a lot.

And the most curious teams are gonna win.

You could be an A+ at your current job and I just don’t know if that job is going to be the same, or if it’s going to exist in five years.

Andrew Bialecki

Co-Founder and CEO

37%

cite inconsistent AI adoption across teams as the top negative impact

53%

say managers can oversee 10–25 AI workflows

29%

say governance complexity is the second-largest negative impact

Still, progress is uneven. When asked which negative impacts they expect AI will create in their workforce, leaders point first to inconsistent adoption across teams (37%), followed by governance complexity (29%). Uneven maturity and movement across functions have become the hidden friction in AI transformation, slowing scale even as enthusiasm grows. The data implies that leadership alignment and governance are the biggest determinants of success.

That fragility shows up in how managers define their AI management limits. 53% of leaders believe a manager can effectively oversee 10–25 AI-driven workflows or agents, while 17% say more than 25 is possible, and 30% believe fewer than 10 is ideal.

Leaders who report AI as mission-critical are 2.2X more likely to believe managers can effectively oversee 25+ AI-driven workflows or agents.

In sum, AI fluency, leadership accountability, and organizational alignment now define workforce readiness. The most mature enterprises aren’t merely automating—they’re building AI fluency as infrastructure, ensuring humans are the connective tissue powering AI orchestration across their organizations.

It’s less about the process and the methodology and the transfer of information and selling a product and talking about a product. I think all that transfer of information is going to go to AI and agents. Then, [workers can focus on] creating connections to other humans.

Amanda Kahlow

CEO and Founder

How to prepare your workforce for AI orchestration

As automation absorbs coordination and reporting, managers shift from supervising tasks to orchestrating systems: owning system health, exception handling, and team enablement around governed AI.

According to enterprise leaders:

71% expect AI to reshape teams through redeployment or new hiring.

Entry-level roles will be most impacted by AI.

53% say a manager can oversee 10–25 AI workflows or agents; leaders with mission-critical AI programs are 2.2X more likely to forecast 25+.

46% plan to link pay and promotions to AI fluency.

69% of leaders cite employee upskilling and AI enablement across teams as the top strategies for capturing AI value.

1

Build AI fluency across every role

Develop an AI learning program that gives employees the tools to build their AI competency.

Make AI fluency a core performance metric for all roles.

💡 AI fluency signals readiness. When every team understands how to manage and escalate AI outcomes, orchestration becomes sustainable.

2

Equip managers to oversee AI systems

Define AI management as a core competency.

Set expectations: treat 10–25 AI workflows or agents per manager as the average.

Expand only with proven visibility and governance maturity.

💡 A manager’s actual span of control will soon be measured in systems, not headcount.

3

Redeploy talent toward AI execution

Audit where human effort still drives repetitive tasks and coordination.

Transition those roles into AI enablement and oversight, empowering employees to leverage AI to execute.

💡 Redeployment isn’t reduction—it’s repositioning human judgment where it matters most.

Preparing for AI orchestration starts with people, not platforms.

Enterprises that upskill their workforce, equip managers to oversee AI systems, and redeploy talent into enablement roles end up orchestrating their orgs from the inside out.

To design the systems and structures that make this possible, talk to an AI orchestration expert today. We’ll help you translate governance, visibility, and workforce fluency into an orchestration model that scales safely.

Chapter 3

What’s on the AI governance and compliance horizon

The top governance priorities

Priority 1

Human-in-the-loop

Priority 2

Real-time error monitoring

Priority 3

Audit logs and versioning

Human-in-the-loop approvals emerged as the leading governance priority for 2026, selected by 71% of leaders—well ahead of real-time error monitoring, audit logs and version history, and data lineage tracking. This finding highlights that human oversight remains crucial to the responsible adoption of AI and achieving competitive differentiation.

When asked which governance capabilities will be most critical as a competitive advantage in 2026, leaders prioritized them in the following order:

Human-in-the-loop approvals

Real-time error monitoring

Audit logs and version history

Provenance and data lineage tracking

Role-based access controls (RBAC)

Single Sign-On (SSO) and SCIM

These priorities confirm a decisive shift toward governance mechanisms that keep humans in the loop and offer real-time visibility. Enterprises are emphasizing oversight embedded in workflow design—not added after the fact. As teams weave AI into daily operations, the priority is to build visibility, traceability, and human-verified intervention into every critical decision point.

Few expect to reach full governance by 2026

4%

expect to achieve full AI governance by 2026

59%

foresee only partial or patchy oversight

71%

say human-in-the-loop approval is the top governance priority

When asked how close their organizations expect to be to full AI governance by 2026—where all AI use is covered and shadow AI is eliminated—leaders paint a picture of steady but incomplete progress.

Only 4% of leaders expect to achieve full AI governance in 2026. Another 26% anticipate governance being “strong” in 2026, with nearly all AI use governed and only minor gaps remaining. A further 59% foresee only partial coverage or patchy oversight, evidence that most organizations will still be managing isolated governance gaps in the near term. Although the majority anticipate governance remaining a struggle in 2026, leaders from enterprises where AI is mission-critical are almost 9X more likely to expect their organizations to achieve comprehensive AI governance by 2026.

The takeaway: governance maturity is advancing, but unevenly. Enterprises are tightening oversight, but full coverage—where every model, workflow, and decision path is governed end-to-end—remains aspirational.

Leaders are pragmatic: they know what “comprehensive” governance looks like, but recognize that eliminating shadow AI will require more time, investment, and cultural alignment.

This uneven progress highlights a tension: as enterprises scale AI, the governance perimeter must expand in lockstep. The organizations that close this gap first will define the next frontier of responsible AI leadership.

I have a simple rule: Use AI as a partner, not a publisher. That means I don’t default to AI for high-level thinking or strategy. It’s my lived experience that’s given me a unique perspective to make important decisions, and it’s my authentic voice that people know and trust. Right now, delegating repetitive, low-risk tasks is a no-brainer. AI sorts my emails. Voice dictation helps me work faster. But by the time I finish writing this sentence, AI will have improved. Maybe in a year I’ll laugh at how conservative I was being. The line is definitely shifting.

Milly Tamati

Founder

Governance goes from a burden to a strategic necessity

70%

view governance as a strategic differentiator

52%

expect AI governance to fracture markets in 2026

48%

foresee AI governance unifying markets in 2026

Enterprise leaders have hit a turning point in how they think about AI oversight. Most (70%) leaders view AI governance as a strategic differentiator, compared to 30% who still see it primarily as a compliance burden. For most, compliance is no longer a static requirement but a competitive capability—one that enables faster and safer innovation.

As AI scales, governance is being recast as a business advantage: the framework that lets enterprises move boldly while staying within the guardrails. Effective governance is becoming synonymous with trust at scale—providing the visibility that enables speed without risk.

However, optimism about governance’s potential doesn’t erase structural complexity. More than half (52%) of leaders expect AI governance to fracture markets in 2026, with different standards creating barriers across regions. Only 48% foresee AI governance unifying markets, with consistent rules driving consolidation and interoperability.

Governance may be strategic, but it’s also splintering—it's now shaped as much by geography as by technology. For multinational enterprises, success will depend on navigating this regulatory patchwork while maintaining consistent internal standards.

I’m seeing companies wrestle with ownership and accountability. GDPR and the AI Act add pressure in the UK and EU, but the real challenge is internal. If AI creates an output end-to-end, who owns it? Teams need clear standards for data handling, approval flows, and how AI contributes to IP. The organizations that nail this [will] move faster and build trust faster.

Charlie Hills

Co-Founder

How AI governance fits into enterprise budgets

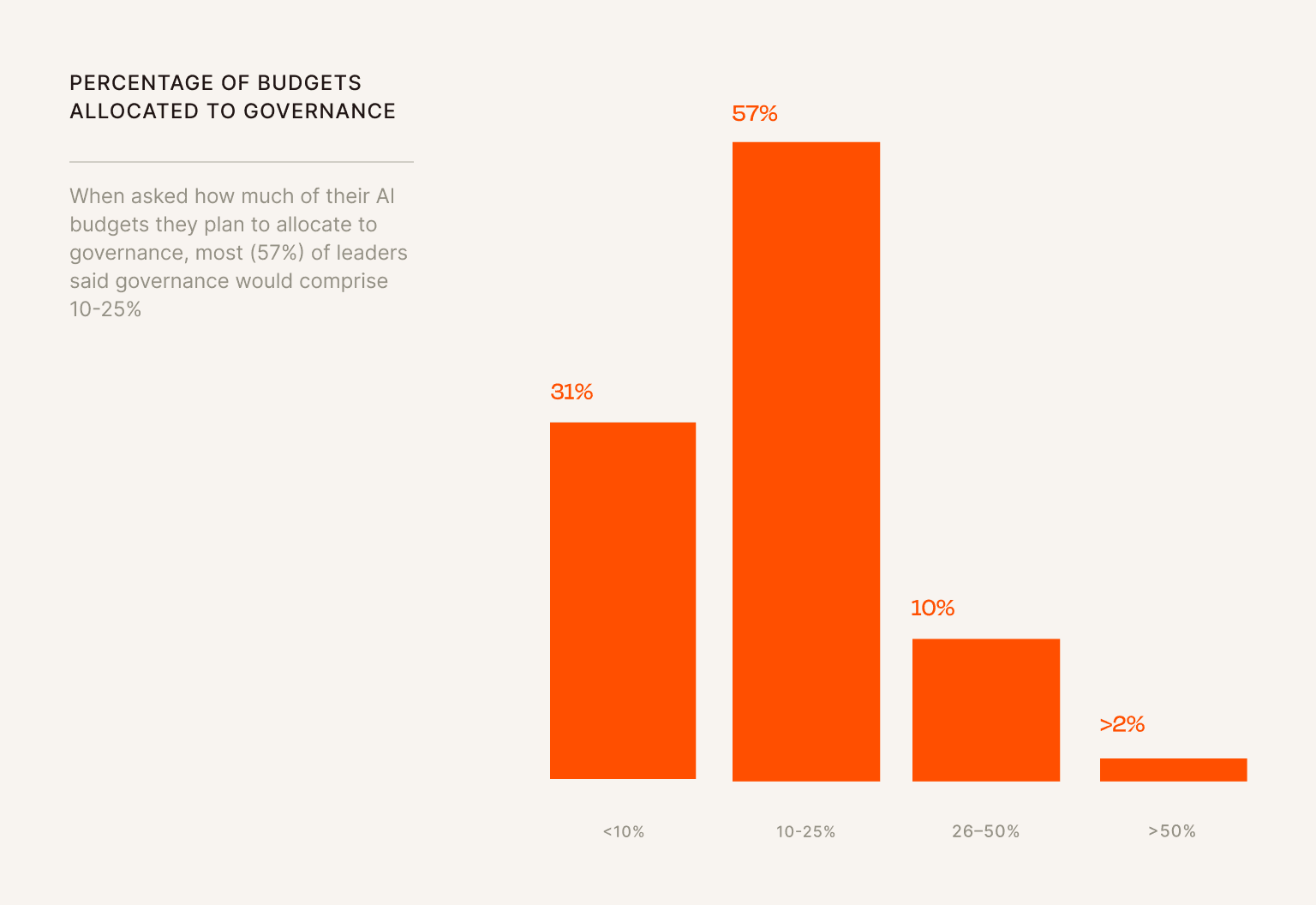

57% of enterprise leaders expect to dedicate 10–25% of their total AI budgets to governance and compliance, signaling that oversight is now an established part of AI investment planning.

Most organizations are planning moderate, structured funding for governance—embedding oversight into the broader economics of AI transformation. Governance is no longer a reactive cost center; it’s becoming a standard operating expense for scaling responsibly.

The enterprises treating governance as a core investment, rather than a regulatory checkbox, will be the ones that convert trust into a lasting competitive advantage.

4 steps to scale AI without losing control

The challenge

As AI expands across the enterprise, the risk isn’t lack of ambition—it’s scaling without visibility. Governance, fluency, and observability have become the new control layers that keep AI measurable, accountable, and aligned to business outcomes.

Enterprise AI maturity depends on more than scale—it depends on systems that are auditable, observable, and governed by design. Leading organizations treat trust as infrastructure, so they embed identity, access, and compliance controls into every workflow.

To see how orchestration can unify governance, visibility, and ROI across your architecture, talk to an AI orchestration expert today.

1


Operationalize governance

Only 4% of enterprise leaders expect their organizations to achieve full AI governance in 2026, but those who describe AI as mission-critical are almost 9X more likely to predict they will reach comprehensive oversight.

What to do next:

Establish a cross-functional AI governance program (or council) with clear escalation paths and authority over risk exceptions.

Define what "strong governance" means in your architecture—which workflows are governed, which are auditable, and where shadow AI remains.

2


Prioritize observability

40% of leaders rank end-to-end observability as the most critical capability for responsibly scaling AI in their organizations, outpacing both cost and speed. 83% say AI error rates must remain ≤5% in high-stakes workflows.

What to do next:

Set clear performance standards for every AI system—what accuracy, speed, and transparency look like when it’s working as intended.

Integrate monitoring and access controls into the tooling your teams already use (e.g., identity, logging, observability stacks) so performance and permissions stay visible in one place.

When issues arise, ensure they’re flagged and handled through the same processes you use for any critical system.
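A performance standard like the ones above can be expressed as a simple check. The sketch below is illustrative only: it assumes each workflow reports a run count and a failure count, and it borrows the 5% ceiling from the survey figure for high-stakes workflows. The class name, function name, workflow names, and numbers are all hypothetical.

```python
from dataclasses import dataclass

# Assumption: 5% mirrors the survey figure for high-stakes workflows.
MAX_ERROR_RATE = 0.05


@dataclass
class WorkflowStats:
    name: str
    total_runs: int
    failed_runs: int
    high_stakes: bool

    @property
    def error_rate(self) -> float:
        # Workflows that have not run yet report a rate of zero.
        return self.failed_runs / self.total_runs if self.total_runs else 0.0


def workflows_over_threshold(stats, max_error_rate=MAX_ERROR_RATE):
    """Return names of high-stakes workflows whose error rate exceeds the ceiling."""
    return [
        s.name
        for s in stats
        if s.high_stakes and s.error_rate > max_error_rate
    ]


# Made-up sample data for illustration.
sample = [
    WorkflowStats("invoice-approval", total_runs=400, failed_runs=12, high_stakes=True),
    WorkflowStats("contract-review", total_runs=200, failed_runs=15, high_stakes=True),
    WorkflowStats("meeting-summaries", total_runs=100, failed_runs=20, high_stakes=False),
]

print(workflows_over_threshold(sample))  # ['contract-review']
```

In practice, the flagged names would feed whatever alerting or incident process the organization already uses for critical systems, rather than a print statement.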

3


Extend oversight

When asked which governance capabilities will be most critical for competitive advantage in 2026, leaders most frequently selected human-in-the-loop approvals.

What to do next:

Build workflows that give humans the ability to review, override, or escalate AI-driven decisions.

Define thresholds for human review. Use data sensitivity, regulatory exposure, and business risk to determine where oversight must be mandatory.

Implement audit trails, version history, and dashboards that make human approvals traceable and defensible.
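One way to make review thresholds concrete is a small routing rule. The sketch below is a minimal illustration, not a prescribed policy: the 0 to 3 scoring scales, the default limits, and the example decisions are all assumptions, and a real program would set them with its governance council.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Decision:
    description: str
    data_sensitivity: int  # hypothetical scale: 0 (public) to 3 (highly sensitive)
    regulated: bool        # True if the decision has regulatory exposure
    business_risk: int     # hypothetical scale: 0 (negligible) to 3 (severe)


def route(decision: Decision, sensitivity_limit: int = 2, risk_limit: int = 2) -> Route:
    """Require human review when any single factor meets its threshold."""
    if decision.regulated:
        return Route.HUMAN_REVIEW
    if decision.data_sensitivity >= sensitivity_limit:
        return Route.HUMAN_REVIEW
    if decision.business_risk >= risk_limit:
        return Route.HUMAN_REVIEW
    return Route.AUTO_APPROVE


# Made-up examples for illustration.
examples = [
    Decision("tag a support ticket", data_sensitivity=0, regulated=False, business_risk=1),
    Decision("issue a refund above policy limit", data_sensitivity=1, regulated=False, business_risk=3),
    Decision("export customer records", data_sensitivity=3, regulated=True, business_risk=2),
]

for d in examples:
    print(f"{d.description}: {route(d).value}")
```

Treating the factors as independent triggers, rather than averaging them into one score, keeps a single high-risk dimension from being diluted by low scores elsewhere.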

4


Align investment

57% of leaders plan to allocate 10–25% of AI budgets to governance and compliance by 2026. 70% of leaders view AI governance as a strategic differentiator, rather than a compliance burden.

What to do next:

Treat governance, observability, and measurement as infrastructure. These are long-term investments, not overhead.

Prioritize funding for initiatives that can demonstrate governed workflows, clear audit trails, and measurable system reliability.

Reallocate pilot budgets toward production-ready oversight tools that make AI impact visible to technical and business leaders alike.
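To see what the 10–25% band means in dollar terms, the arithmetic can be sketched as follows. The $5 million total is only an example input, and the function name is hypothetical.

```python
def governance_budget_range(total_ai_budget, low_share=0.10, high_share=0.25):
    """Return the (low, high) governance allocation for a total AI budget.

    The default shares reflect the 10-25% band reported in the survey.
    """
    if total_ai_budget < 0:
        raise ValueError("total_ai_budget must be non-negative")
    return total_ai_budget * low_share, total_ai_budget * high_share


# Example input only: a $5 million annual AI budget.
low, high = governance_budget_range(5_000_000)
print(f"${low:,.0f} to ${high:,.0f}")  # $500,000 to $1,250,000
```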

Chapter 4

How much enterprises spend to see AI returns

Leaders place big bets on AI as a strategic necessity

The majority (84%) of leaders are confident that they will have solid proof of AI ROI to influence budget decisions in 2026, and 74% of enterprise leaders say AI budgets would be among the last to be cut during a downturn, confirming that AI has graduated from discretionary tech to essential infrastructure.

For most organizations, AI has entered the must-maintain category of enterprise investment—viewed as foundational to continuity and competitiveness. But leaders who identify AI as “limited but expanding” are almost twice as likely to categorize AI as a “first to cut” expense, signaling a mindset divide between emerging and mature programs.

Yet, leaders still recognize that AI is growing and experimental. They plan accordingly. Only 6% of leaders expect the majority or all of their AI initiatives to have proven, measurable ROI by 2026. But leaders who identified AI as mission-critical are 3.8X more likely to expect a majority of initiatives to deliver measurable ROI by 2026.

Expectations for AI payoffs are split: 54% of enterprise leaders predict that fewer than half of their AI initiatives will show measurable ROI by 2026, while 46% are more optimistic, expecting most to deliver clear returns. This suggests that leaders remain bullish about expanding AI in their organizations but are only somewhat optimistic about how often they can tie those results to financial outcomes.

We measure [AI] value through efficiency and output, not just cost savings. If we cut a review cycle from three days to one day while maintaining quality, that’s value, too.

The payoff is not replacing people, it is letting them do more of what clients pay for.

AJ Eckstein

Founder and CEO

Unlocking AI investment lies in internal proof points

88%

say internal proof unlocks more investment

54%

prioritize measurable productivity gains

79%

will only tolerate a 25% loss or less on AI bets

88% of enterprise leaders say internal proof signals—financial savings, risk reduction, or productivity gains—are the triggers most likely to unlock more AI investment.

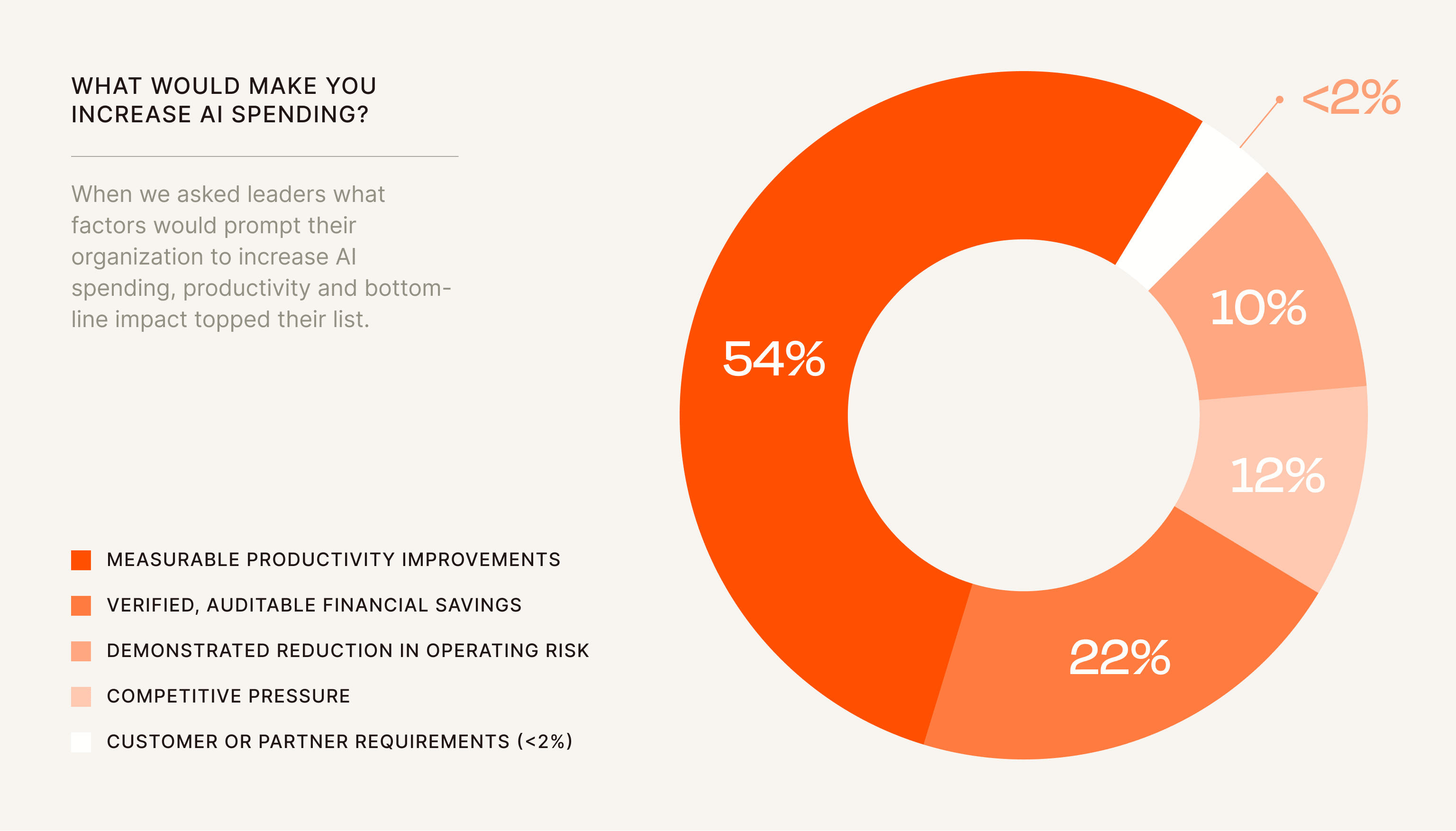

When asked what would most likely prompt their organization to increase AI spending, leaders prioritized:

Measurable productivity improvements (54%)

Verified, auditable financial savings (22%)

Demonstrated reduction in operating risk (12%)

Competitive pressure (10%)

Customer or partner requirements (2%)

Productivity gains top this list with the majority (54%) of executives seeking validation that their AI initiatives are improving their output efficiency.

Leaders are signaling a disciplined approach to risk. 79% of leaders say their organizations will only tolerate losses of 25% or less on AI investments in pursuit of long-term growth. Another 14% insist on breaking even, while only 7% would accept losses greater than 25%.

This pattern reveals a pragmatic mindset when it comes to growth. Enterprise leaders expect to invest strategically but not recklessly—accepting modest short-term trade-offs in pursuit of a more durable advantage. Accountability, not experimentation, is now the hallmark of AI investment.

In 2026, I expect ROI to become even more specific. Instead of saying “AI helped,” we will point to individual agents or automations that cut handoff time, improve conversion, or remove friction in a single journey. The proof will come from clear gains tied to the exact workflows that matter.

Charlie Hills

Co-Founder

ROI will be measured by outcomes and funded at enterprise scale

When asked how they plan to measure AI ROI in 2026, enterprise leaders pointed squarely toward business performance, with 45% ranking tangible business outcomes as the most important metric. The emphasis has shifted from tracking productivity metrics to demonstrating financial and operational impact that can be quantified at the board level.

Leaders ranked their top AI ROI indicators as:

Tangible business outcomes (pipeline acceleration, conversion improvement, churn reduction)

FTE-equivalent savings (workforce reduction)

Workforce efficiency gains (time saved, fewer manual tasks)

Workforce adoption rates (broader employee usage of AI tools)

Tool or SaaS consolidation savings

Governance or risk improvements (fewer compliance incidents, stronger security posture)

That focus on outcomes is being backed by real investment.

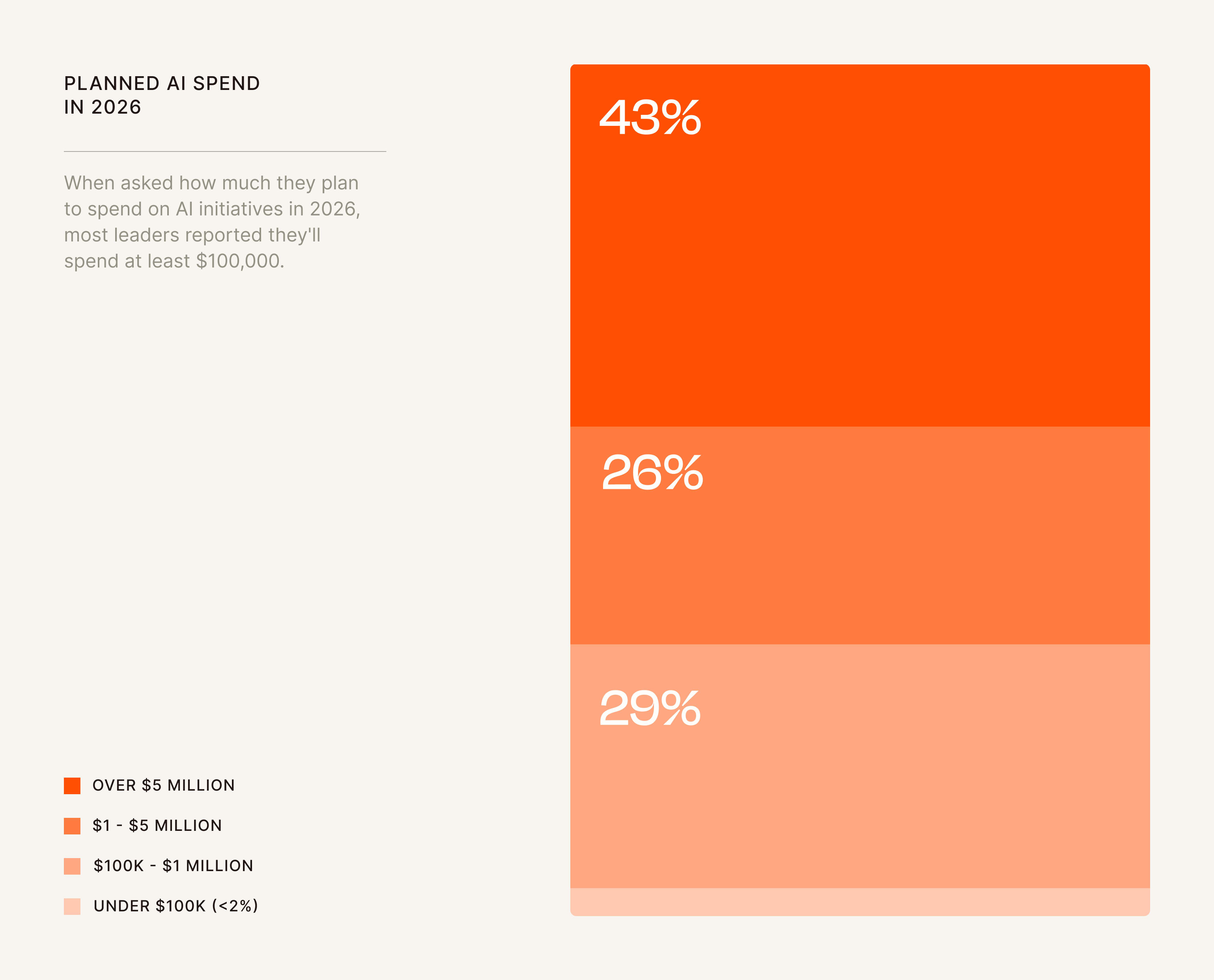

Taken together, 69% of enterprises plan to invest $1 million or more in AI over the next year, and the majority of those plan to spend over $5 million. This is a definitive signal that AI has moved from pilot experimentation to program-level infrastructure.

Budgets now mirror ambition. Enterprises are funding AI at the scale required to demonstrate measurable ROI. The era of proof-of-concept spending is closing; the next phase of AI maturity will be defined by outcomes that can be audited, compared, and sustained.

What’s an AI experiment or project of yours that failed, and what learnings from that will you take into 2026?

“I built a complicated system that connected ChatGPT, Zapier, Slack, Google Docs, and a bunch of other custom webhooks. It looked incredible on paper, but it broke constantly due to systems that weren’t ready. The lesson I’m taking into 2026 is to start with the smallest possible wins. Automate the smaller percent of tasks that remove the biggest bottleneck. Build the next step only when the previous one is proven.”

Adam Stewart

Digital Marketing Consultant, Digital Bond Marketing

“Every project I’ve seen fail has had one common issue: messy or incomplete data. AI can only work with the information it is given, so unorganized data slows everything down, creates extra work, and stops projects from growing. It is also the part no one wants to talk about, but it is the first thing that determines whether a project succeeds.”

Kate Marshall

Founder, The Gr.ai

“One AI experiment that didn’t fully deliver for us was an automated creator post-draft review system. The goal was to have AI evaluate creator draft content by pulling in the campaign brief, checking for key messages, do’s and don’ts, and flagging compliance issues. The technical part worked. But where it failed was in creative judgment, the part that actually determines whether the post will perform. AI couldn’t assess “taste” or cultural fit. The lesson we’re taking into 2026 is that AI can support the process, but can't own it.”

AJ Eckstein

Founder and CEO, Creator Match

“I've been meaning to build a system to fully automate my newsletter, where it will do the research, interview the individuals, create images, gather screenshots, and pull it together into my email marketing software. I was too ambitious with this endeavor—[I] should have just broken it down into multiple steps.”

Kushank Aggarwal

Co-Founder, Prompt Genie

“The one area where we didn’t fully realize expected gains in 2025 is AI-driven content generation automation, especially video/image workflows. We made progress, but the end-to-end pipeline didn’t reach the consistency and quality we wanted. Key learning for 2026: treat creative automation as a workflow + quality-gates problem, not a one-shot model choice. And keep iterating until it’s dependable at scale.”

“The biggest thing I found is that AI assistants, like Zapier’s Copilot, or vibe coding tools like Zite and Lovable, are super powerful. The biggest mistake I’ve made is giving them too much to do at once or being too general. Even when I get specific but still ask for too much, the results are fine, but not great. So, now I break things down into smaller pieces and build one part at a time.”

Philip Lakin

Sr. AI Marketing Manager, Zapier

Survey methodology

Publication date: October 23, 2025 | Total respondents (N): 200

We conducted this research with verified B2B respondents screened for senior technical leadership roles with direct responsibility for AI, automation, IT, or engineering strategy. All participants held Director-level or above positions within mid to large-sized organizations across the United States, Canada, and Europe.

Audience

The survey focused on Director+ technical leaders responsible for leading AI and automation initiatives:

C-Level executives (CIO, CTO, CDO, CISO, etc.): 24.5%

VP: 27%

Director: 48.5%

Company size

500–999 employees: 14 respondents

1,000–4,999 employees: 60 respondents

5,000+ employees: 126 respondents

Primary functions represented

IT Operations / Infrastructure: 38%

Security / Compliance / Risk: 4%

Data / Analytics: 37%

Engineering / Platform: 21%

Geography

United States: 69.5%

Europe: 27.5%

Canada: 3%

Ready to join the AI Leaders Lab?

AI transformation takes more than handing out ChatGPT licenses—it requires rethinking how people, processes, and technology work together.

That’s why we created a monthly AI Leaders Lab: an invite-only session designed for leaders like you who are shaping the future of work.

This 60-minute conversation is small by design. We hand-pick a select group of AI leaders so every participant can contribute, exchange ideas, and walk away with new perspectives. If you can’t make it, let us know—we’ll open your seat to another leader.

What you’ll experience:

A roundtable discussion tailored to the group’s top priorities

Peer-to-peer connection through interactive breakouts

Fresh insights, data, and real-world patterns from Zapier’s AI team

Practical takeaways you can apply immediately to accelerate AI adoption

Spark viral AI adoption in your org, build habits and infrastructure that endure beyond the hype, and shift AI from a project into a company-wide culture. Seats are limited—reserve yours now to be part of this exclusive conversation.

Scale your AI ROI without scaling your risk

Ready to turn AI into a powerful competitive advantage at scale? Zapier’s AI orchestration platform gives you everything you need to maximize your AI adoption by connecting tools, deploying agents, and scaling intelligent systems across your business.

Connect every app, AI model, and workflow. Securely integrate with thousands of tools using advanced authentication and data handling.

Automate complex workflows easily. Build, test, and scale AI-driven systems using no-code, low-code, or full-code—whatever your teams need.

Deploy intelligent systems across the org. Connect AI to the workflows your teams rely on—sales, support, IT, ops, marketing, and more.

Control AI at scale with IT-grade governance. Prevent shadow AI, enforce permissions, and keep AI use auditable, visible, and compliant.

Research Reports • 2026
