The sudden rise of apps powered by artificial intelligence (AI) means there are a lot of new technical buzzwords being thrown around. If you want to make sense of things, you need to know not only about AI but also about the different kinds of AI (like artificial narrow intelligence [ANI], artificial general intelligence [AGI], and artificial superintelligence [ASI]) and the different techniques used to create them (like machine learning and deep learning).

While it would take an entire dictionary to fully describe the nuances of every new bit of jargon, I can at least break down the most important ones. Let's dive in.

What is artificial intelligence?

As a specific technical term, artificial intelligence is really poorly defined. Most AI definitions are somewhere between "a poor choice of words in 1954" and a catchall for "machines that can learn, reason, and act for themselves," and they rarely dig into what that means.

This is why other marginally more descriptive terms like ANI, AGI, and ASI have become more prevalent. It's much easier to conclude that ChatGPT is an artificial narrow intelligence—"an AI system that's designed to perform specific tasks"—than to quibble over where it falls on the line between Clippy and Data.

But there's one other definition worth knowing, too: artificial intelligence is also the name of the branch of computer science dedicated to studying, developing, and researching the techniques necessary to create artificial intelligence.

Most of the time when we're discussing AI, we're using it as the nebulous term for machines that can, to some degree or another, "think." But when we're comparing AI to machine learning, it's the scientific field of study we're interested in.

(Yes, all this is confusing. I sometimes find it hard to follow along, and it's my job to understand it. I promise things will make sense eventually.)

What is machine learning?

Machine learning is a subfield of artificial intelligence.

Instead of computer scientists having to explicitly program an app to do something, they develop algorithms that let it analyze massive datasets, learn from that data, and then make decisions based on it.

Let's imagine we're writing a computer program that can identify whether something is "a dog" or "not a dog." One way to do that would be to write a series of rules that the app could use to identify dogs:

Does it have four legs?

Does it have two ears (floppy or standy-uppy)?

Does it have two eyes?

Does it have a waggy tail?

Is it playing fetch?

And so on and so on.

Of course, this style of program has major problems. What happens if a dog has one floppy ear and one standy-uppy ear? Or what happens if it's wearing a cute pirate hat? We might be able to write enough rules that our app could successfully identify whether or not something was a dog most of the time—but there would always be something we forgot.
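To make the problem concrete, here's a minimal sketch of what that rule-based approach might look like in Python. The `Animal` fields and the `is_dog_by_rules` function are names I've made up for illustration, and the rules are just the checklist above written as code.

```python
from dataclasses import dataclass


@dataclass
class Animal:
    """What our hypothetical app can see in a photo."""
    visible_legs: int
    visible_ears: int
    visible_eyes: int
    waggy_tail: bool
    playing_fetch: bool


def is_dog_by_rules(animal: Animal) -> bool:
    """Return True only if every hand-written rule passes."""
    return (
        animal.visible_legs == 4
        and animal.visible_ears == 2
        and animal.visible_eyes == 2
        and animal.waggy_tail
        and animal.playing_fetch
    )


typical_dog = Animal(4, 2, 2, waggy_tail=True, playing_fetch=True)
# The pirate hat hides both ears and the eye patch hides one eye.
dog_in_pirate_hat = Animal(4, 0, 1, waggy_tail=True, playing_fetch=False)

print(is_dog_by_rules(typical_dog))        # True
print(is_dog_by_rules(dog_in_pirate_hat))  # False
```

The second result is the "something we forgot": the dog is still a dog, but the rules have no way to know that.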

With machine learning, we have a better option. Instead of writing explicit rules, we would write an algorithm that allowed the app to make its own rules. (In classic machine learning, we'd probably give it a few suggestions like "look at the ears" and "count the legs," but we'd still leave it mostly to its own devices.) Then we could show it thousands of photos of "dogs" and thousands of photos of "not dogs," and let it figure out how to tell them apart.

Given enough good data, it would come up with its own secret criteria that it could use to give a percentage certainty that a photo was "a dog" or "not a dog." We wouldn't ever need to know exactly what it was looking for—just that it could successfully identify dogs with enough accuracy to be useful.
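As a rough illustration of "letting the app make its own rules," here's a toy sketch that learns from labeled examples instead of following a checklist. Everything in it is an assumption on my part: the three features, the six invented examples, and the choice of logistic regression (one simple classic technique among many). A real system would learn from thousands of photos, not six hand-typed rows.

```python
import math

# Invented toy data: [has_four_legs, has_waggy_tail, has_fur] -> 1 for "dog", 0 for "not dog".
EXAMPLES = [
    ([1, 1, 1], 1),  # dog
    ([1, 1, 1], 1),  # another dog
    ([0, 1, 1], 1),  # three-legged dog
    ([1, 0, 1], 0),  # cat
    ([1, 0, 0], 0),  # table
    ([0, 0, 0], 0),  # flower
]


def predict(weights, bias, features):
    """Return the model's certainty (0 to 1) that the features describe a dog."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=2000, learning_rate=0.5):
    """Fit weights with plain gradient descent; no dog rules are written by hand."""
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in examples:
            error = predict(weights, bias, features) - label
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        n = len(examples)
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


weights, bias = train(EXAMPLES)
print(f"dog photo:    {predict(weights, bias, [1, 1, 1]):.0%} dog")
print(f"flower photo: {predict(weights, bias, [0, 0, 0]):.0%} dog")
```

The learned `weights` are the model's "secret criteria": numbers it settled on by itself, which we never had to choose or even look at.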

Of course, this is all grossly simplified, but it's still a fair representation of what goes on under the hood. Tools built using these kinds of techniques are why your smartphone is able to identify the objects and animals in your photos, and why AI art generators like DALL·E 2 know what things are.

What is machine learning used for?

Machine learning is one of the most common implementations of AI in the real world. Some examples of machine learning you've probably encountered are:

Recommendation algorithms on Amazon, Netflix, and other websites

Spam filters in Gmail and other email apps

Fraud detection for your credit card, bank, and other financial services

Automatic quality control in manufacturing

Machine learning types

In general, there are three types of machine learning:

Supervised learning uses structured, labeled datasets. Models developed with supervised learning are able to make predictions and categorize things.

Unsupervised learning uses unlabeled datasets. Models developed with it are able to detect patterns and cluster things by their distinguishing characteristics.

Reinforcement learning is a process where a model learns by trial and error: its outputs are scored, whether by an automated reward signal, a human, or another AI designed to rank them, and it adjusts itself to earn better scores.

What kind of learning is most appropriate depends on what kind of data the developers have to work with, and what end result they're going for.
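Supervised learning looks like our "dog" and "not dog" labels. To give a feel for the unsupervised kind, here's a minimal sketch that splits unlabeled numbers into two groups. The data (some invented animal weights in kilograms) and the `two_means` helper are assumptions for illustration; it's a stripped-down, one-dimensional version of the well-known k-means clustering idea, not a production algorithm.

```python
def two_means(values, iterations=10):
    """Split numbers into two clusters without ever being told any labels."""
    # Start the two cluster centers at the extremes of the data.
    centers = [min(values), max(values)]
    labels = []
    for _ in range(iterations):
        # Assign each value to whichever center is closer.
        labels = [
            0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            for v in values
        ]
        # Move each center to the average of the values assigned to it.
        for k in (0, 1):
            members = [v for v, label in zip(values, labels) if label == k]
            if members:
                centers[k] = sum(members) / len(members)
    return labels, centers


# Invented weights in kg: three small animals and three larger ones, unlabeled.
animal_weights = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
labels, centers = two_means(animal_weights)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Nobody told the program which animals were "small" or "large." It found the two groups from the numbers alone.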

So that's machine learning, but let's dig a little…deeper.

What is deep learning?

Deep learning is a subfield of machine learning.

While structured datasets (like our imaginary "dog-not-dog" dataset) have their uses, they're incredibly expensive to produce and, as a result, pretty limited in size. It would make everything a lot easier if we could give a computer program some raw data (not split into "dog" and "not dog"), and let it work everything out for itself. Which is exactly what deep learning algorithms allow us to do.

Instead of relying on human researchers to add structure, deep learning models are given enough guidance to get started, handed heaps of data, and left to their own devices.

GPT, for example, wasn't given nicely structured training data: it was allowed to comb through millions of gigabytes of raw text and draw its own conclusions. The rough model that this created isn't the version that powers ChatGPT and all the other GPT-based tools, but it forms the basis of it. OpenAI has just done a lot of additional training, fine-tuning, and tweaking to make sure it doesn't dredge up the worst of the internet.

What are neural networks?

Artificial neural networks (ANNs) are a kind of computer algorithm loosely modeled on the human brain, and they're typically created using machine learning or deep learning.

An ANN consists of layers of "nodes," which are based on neurons. There's an input layer, an output layer, and one or more hidden layers, where most of the computation happens. (A deep neural network is one with multiple hidden layers.)

Each node has a threshold value and connects onward to nodes in the next layer, and each of those connections has a weight. When the threshold value is exceeded, the node triggers and sends data on to the next set of nodes; if the threshold value isn't exceeded, it doesn't send any data. The weight determines how important a signal from a particular node is in triggering other nodes, and in most instances, data can only "feed forward" through the neural network.
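Here's a minimal sketch of a single node doing exactly that. The function name `node_fires` and all the numbers are made up for illustration, and real networks usually use smoother functions than a hard on/off threshold.

```python
def node_fires(inputs, weights, threshold):
    """Add up the weighted incoming signals and fire only past the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Three incoming signals, with made-up weights saying how much each one matters.
weights = [0.6, 0.3, 0.2]

print(node_fires([1, 0, 1], weights, threshold=0.5))  # 1 (0.6 + 0.2 exceeds 0.5)
print(node_fires([0, 1, 0], weights, threshold=0.5))  # 0 (0.3 does not)
```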

Let's go back to our "dog" and "not dog" app. All our machine learning has generated a neural network that's capable of identifying what is and isn't a dog. It's likely that in one of the node layers, there will be a node for floppy ears, another node for standy-uppy ears, and another for particularly triangular ears (along with thousands more nodes to identify other specifics about the image).

When we give it a photo of a cute dog with floppy ears, the floppy ear-identifying node's threshold will be met, and it will send a signal on to the next node in the sequence. If the waggy tail-identifying node, spots-identifying node, and four legs-identifying node are also triggered, then the neural network will output a strong "dog" signal. On the other hand, if we give the neural network a photo of some flowers, almost none of the dog-identifying nodes will trigger, so the model will output a strong "not a dog" signal.
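The walkthrough above can be sketched as a tiny feed-forward network. To be clear about the assumptions: I've set every weight and threshold by hand, the four "evidence" scores stand in for whatever earlier layers would extract from a real photo, and a trained network's nodes rarely map this neatly onto human ideas like "floppy ears."

```python
def step(total, threshold):
    """A node fires (1) only when its weighted input exceeds its threshold."""
    return 1 if total > threshold else 0


def layer(inputs, weight_rows, thresholds):
    """Run every node in a layer; each row of weights belongs to one node."""
    return [
        step(sum(x * w for x, w in zip(inputs, row)), threshold)
        for row, threshold in zip(weight_rows, thresholds)
    ]


# Hidden layer: one node each for floppy ears, waggy tail, spots, and four legs.
HIDDEN_WEIGHTS = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
HIDDEN_THRESHOLDS = [0.5, 0.5, 0.5, 0.5]

# Output layer: a single "dog" node that weighs up the hidden nodes.
OUTPUT_WEIGHTS = [[0.3, 0.3, 0.2, 0.2]]
OUTPUT_THRESHOLDS = [0.5]


def classify(evidence):
    """Feed evidence forward through both layers and name the result."""
    hidden = layer(evidence, HIDDEN_WEIGHTS, HIDDEN_THRESHOLDS)
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_THRESHOLDS)
    return "dog" if output[0] else "not a dog"


floppy_eared_dog = [0.9, 0.8, 0.7, 0.9]  # strong evidence for all four traits
flowers = [0.1, 0.0, 0.3, 0.0]           # almost no dog-like evidence

print(classify(floppy_eared_dog))  # dog
print(classify(flowers))           # not a dog
```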

Again, this is a vastly simplified—but not entirely wrong—description of what goes on inside a lot of AI-based tools. Except they have a lot more nodes than we can easily explain in this way. GPT-3, for example, has 96 layers of nodes and 175 billion parameters.
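If you're wondering what a "parameter" is, it's generally one of the learned numbers inside the network, like a weight. As a rough sketch, here's how you might count them for a small, fully connected network, assuming one weight per connection and one extra learned number (a bias) per node. The `count_parameters` helper is my own, and GPT-3's layers are structured differently, so this only shows how the numbers add up, not how its 175 billion is derived.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feed-forward network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight for every connection between layers
        total += n_out         # one bias for every node in the receiving layer
    return total


# 4 input nodes, one hidden layer of 8 nodes, and 1 output node.
print(count_parameters([4, 8, 1]))  # 49
```

Even this toy network needs 49 numbers, which gives a sense of how quickly the count grows as layers get wider and deeper.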

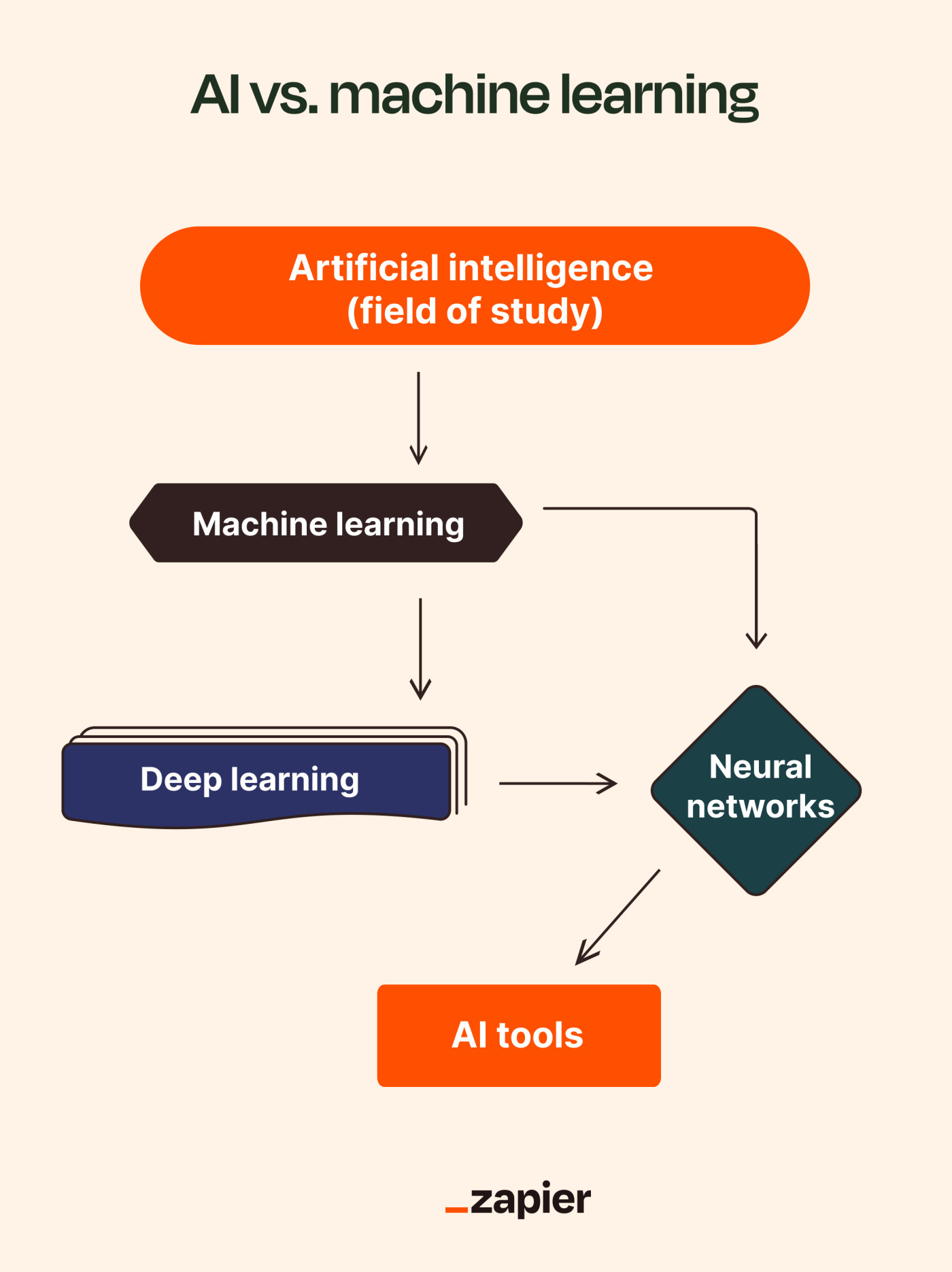

AI vs. machine learning…and then some

If you can only remember one thing from all this, here it is:

Modern artificial intelligence-based tools generally rely on neural networks, which are created using deep learning, an advanced technique from machine learning, a subfield of the computer science discipline that is also called artificial intelligence.

I hope you're not sorry you asked.
