Google is now fully in its "Gemini era," so buckle up and get ready for some confusing rebrandings. Gemini is the name Google gave to the current-generation family of multimodal AI models it launched last year, but in typical Google fashion, it's now also applying the name to basically everything else.

It can get a touch confusing since, by my reckoning, Google has:

Google Gemini, a family of multimodal AI models. This is what Google uses in its own apps, but developers can integrate it in their apps, too.

Google Gemini, a chatbot that runs on the Gemini family of models. (This is the chatbot that used to be called Bard.)

Google Gemini, an upcoming replacement for Google Assistant that will presumably integrate with the chatbot—but we don't know for sure yet.

Gemini for Google Workspace, the AI features integrated across Gmail, Google Docs, and the other Workspace apps for paying users.

And a few more Geminis that I'm sure I'm missing.

All of these new Geminis are based around the core family of multimodal AI models, so let's start there.

What is Google Gemini?

Google Gemini is a family of AI models, like OpenAI's GPT. They're all multimodal models, which means they can understand and generate text like a regular large language model (LLM), but they can also natively understand, operate on, and combine other kinds of information like images, audio, videos, and code.

For example, you can give Gemini a prompt like "what's going on in this picture?" and attach an image, and it will describe the image and respond to further prompts asking for more complex information.
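For developers, a prompt like that is a single request that mixes modalities. Here's a minimal Python sketch of the request body, assuming the Gemini API's REST format: a `contents` list whose `parts` can hold both `text` and a base64-encoded image under `inline_data`. The function name `build_image_prompt` is made up for illustration, and the code only builds the payload; it doesn't send anything.

```python
import base64
import json


def build_image_prompt(question: str, image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Build a generateContent-style request body pairing a question with an image.

    Assumes the Gemini API REST format: one "content" holding a list of "parts",
    with the image sent inline as base64 text under "inline_data".
    """
    return {
        "contents": [
            {
                "parts": [
                    {"text": question},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            # Raw image bytes must travel as base64-encoded text in JSON.
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ]
            }
        ]
    }


if __name__ == "__main__":
    placeholder_image = b"\xff\xd8\xff\xe0"  # first bytes of a JPEG header, not a real photo
    body = build_image_prompt("What's going on in this picture?", placeholder_image)
    print(json.dumps(body, indent=2))
```

Because the text and the image sit side by side in the same `parts` list, the model receives them as one prompt rather than as two separate inputs.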

Because we've now entered the corporate competition era of AI, most companies are keeping pretty quiet on the specifics of how their models work and differ. Still, Google has confirmed that the Gemini models use a transformer architecture and rely on strategies like pretraining and fine-tuning, much as other major AI models do.

Like GPT-4o, OpenAI's latest model, Google Gemini was trained on images, audio, and video at the same time as it was trained on text. Gemini's ability to process them isn't the result of a separate model bolted on at the end: it's baked in from the beginning.

In theory, this should mean Google Gemini understands things in a more intuitive manner. Take a phrase like "monkey business": if an AI is just trained on images tagged "monkey" and "business," it's likely to just think of monkeys in suits when asked to draw something related to it. On the other hand, if the AI for understanding images and the AI for understanding language are trained at the same time, the entire model should have a deeper understanding of the mischievous and deceitful connotations of the phrase. It's ok for the monkeys to be wearing suits—but they'd better be throwing poo.

By training all its modalities at once, Google claims that Gemini can "seamlessly understand and reason about all kinds of inputs from the ground up." For example, it can understand charts and the captions that accompany them, read text from signs, and otherwise integrate information from multiple modalities. While this set Gemini apart when it first launched last year, both Claude 3 and GPT-4o now offer many of the same multimodal features.

The other key distinction that Google likes to draw is that Google Gemini has a "long context window." This means a prompt can include more information, both to better shape the model's responses and to give it more material to work with. Right now, Gemini 1.5 Pro has a context window of up to a million tokens, and Google will soon expand that to two million tokens. A million tokens is apparently enough for a 1,500-page PDF, so you could theoretically upload a huge document and ask Gemini questions about what it contains.
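To put those numbers in perspective: if a million tokens covers about 1,500 pages, that works out to roughly 667 tokens per page. The Python sketch below uses that ratio, an assumption taken from the figures above rather than anything Google publishes as a rule, to estimate whether a document fits in a given context window. Real token counts depend on the tokenizer, the language, and how much of each page is text versus images.

```python
import math
from fractions import Fraction

# Ratio implied by the figures above: ~1,500 pages per 1,000,000 tokens.
# Assumption for illustration only; real counts vary with the tokenizer and content.
TOKENS_PER_PAGE = Fraction(1_000_000, 1_500)  # exactly 2000/3, about 667


def estimated_tokens(pages: int) -> int:
    """Estimate the tokens a document would use, rounding up to stay conservative."""
    return math.ceil(pages * TOKENS_PER_PAGE)


def max_pages(context_window: int) -> int:
    """Roughly how many pages fit in a context window of the given size."""
    return math.floor(context_window / TOKENS_PER_PAGE)


def fits_in_context(pages: int, context_window: int, prompt_tokens: int = 0) -> bool:
    """Check whether the document plus your question fits in the window."""
    return estimated_tokens(pages) + prompt_tokens <= context_window


if __name__ == "__main__":
    for window in (1_000_000, 2_000_000):
        print(f"{window:,} tokens ≈ {max_pages(window):,} pages")
```

Using `Fraction` keeps the arithmetic exact, so a 1,500-page document lands on exactly one million tokens instead of drifting because of floating-point rounding. By the same estimate, a two-million-token window would hold about 3,000 pages.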

Google Gemini models come in multiple sizes

The different Gemini models are designed to run on almost any device, which is why Google is integrating Gemini absolutely everywhere. Google claims that its different versions are capable of running efficiently on everything from data centers to smartphones.

Right now, Google has the following Gemini models.

Gemini 1.0 Ultra

Gemini 1.0 Ultra is the largest model, designed for the most complex tasks. In LLM benchmarks like MMLU, Big-Bench Hard, and HumanEval, it outperformed GPT-4, and in multimodal benchmarks like MMMU, VQAv2, and MathVista, it outperformed GPT-4V. It's still undergoing testing and is due to be released this year.

Gemini 1.5 Pro

Gemini 1.5 Pro offers a balance between scalability and performance. It's designed to be used for a variety of different tasks and has a context window of up to two million tokens. It's the main Gemini model that Google is deploying across its applications. A specially trained version of it is used by the Google Gemini chatbot (formerly called Bard).

Gemini 1.5 Flash

Gemini 1.5 Flash is a lightweight, fast, cost-efficient model designed for high-frequency tasks. It's less powerful than Gemini 1.5 Pro, but it's cheaper to run and still has a context window of up to one million tokens.

Gemini 1.0 Nano

Gemini 1.0 Nano is designed to operate locally on smartphones and other mobile devices. In theory, this would allow your smartphone to respond to simple prompts and do things like summarize text far faster than if it had to connect to an external server. For now, Gemini Nano is only available on the Google Pixel 8 Pro and powers features like smart replies in Gboard.

Each Gemini model differs in how many parameters it has and, as a result, how good it is at responding to more complex queries as well as how much processing power it needs to run. Unfortunately, figures like the number of parameters any given model has are often kept secret—unless there's a reason for a company to brag.

To complicate things further, Pro and Flash are part of the Gemini 1.5 series of models, while Ultra and Nano are still part of Gemini 1.0. Presumably, they'll both be updated at some point this year.

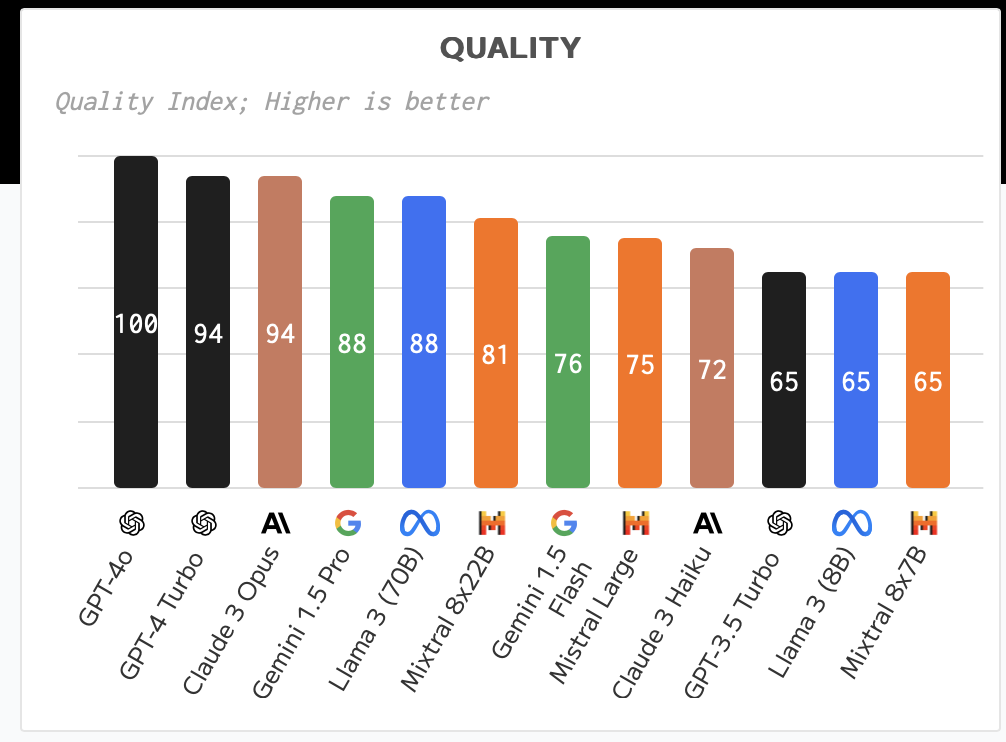

How does Google Gemini compare to other LLMs?

We're now reaching the point where directly comparing AI models is starting to feel irrelevant. The best models from OpenAI, Anthropic, and Google are all incredibly powerful—and how you fine-tune and employ them is now significantly more relevant than what model you choose.

Similarly, the trade-offs between speed and power are becoming more and more important. Google Gemini Ultra appears to be one of the most powerful AI models yet developed, but there's a reason Google is pushing Gemini Pro, Flash, and Nano. Only in a few exceptional edge cases will Ultra's extra power be worth its extra cost and computing overhead.

With that said, the various benchmarks suggest that Gemini 1.5 Pro is slightly behind the best proprietary models, like GPT-4o and Claude 3 Opus, and on a level with the best open models, like Llama 3 70B and Mixtral 8x22B. Gemini 1.5 Flash, meanwhile, is slightly ahead of the less powerful proprietary models, like Claude 3 Haiku and GPT-3.5 Turbo.

As Gemini 1.0 Ultra and 1.0 Nano aren't widely available yet, their performance is harder to compare, but it can be extrapolated from what Google has said in the past. Ultra was competitive with GPT-4 when it was announced last year, so whatever version is still in training is presumably still close to the state-of-the-art models. Nano, on the other hand, is designed to operate efficiently on devices, so it will be significantly worse at benchmarks but probably pretty useful in the real world.

How does Google use Gemini?

Google claims Gemini is now integrated with all its "two-billion user products," which I take to mean that it's used in all Google's services that have two billion or more users. That would probably be Google Search, Android, Chrome, YouTube, and Gmail at a minimum, but Google is also integrating Gemini elsewhere.

Google Gemini (the chatbot). The most obvious place that Google deploys Gemini is with the chatbot-formerly-known-as-Bard. It's now also called Gemini and is more of a direct ChatGPT competitor than a replacement for Search.

Google One. The $20/month Google One AI Premium plan gets you access to more advanced models as well as Gemini in Gmail, Docs, and other Google apps.

Google Search. Search is also going to get a lot of Gemini-powered updates. AI Overviews are basically quick answer boxes for more complex queries. Soon, you'll be able to ask Google for simpler or more detailed summaries of information, and you'll even be able to use Search to plan multi-day trips and meals.

Google Workspace. Google's enterprise version of Workspace is also starting to get lots of handy Gemini-powered features—though most of them are locked away behind an extra $20/user/month Gemini subscription.

Project Astra. Project Astra is Google's vision of what future multimodal AI agents could be like. The whole project is built on top of Gemini models. After Google essentially faked a much-hyped Gemini Ultra launch video last year, it was careful to describe the latest Astra demo as a real-time, single-take demonstration.

And then there are countless other places where Google is either using Gemini or planning to. One of the most exciting is that it will soon be built directly into Google Chrome. When Google's CEO, Sundar Pichai, says Google is in its Gemini era, he really means it.

Google Gemini is designed to be built on top of

In addition to using Gemini in its own products, Google also allows developers to integrate Gemini into their own apps, tools, and services.

Almost every app now seems to be adding AI-based features, and many of them are using OpenAI's GPT, DALL·E, and other APIs to do it. Google wants a piece of that action, so Gemini is designed from the start for developers to build AI-powered apps and otherwise integrate AI into their products. Google's big advantage is that it can offer Gemini alongside its cloud computing, hosting, and other web services.

Developers can access Gemini 1.5 Pro and 1.5 Flash through the Gemini API in Google AI Studio or Google Cloud Vertex AI. This lets them further train Gemini on their own data and build powerful tools, just as developers have already been doing with GPT.
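As a rough illustration of what API access looks like, here's a minimal Python sketch that prepares a plain text-generation call (not fine-tuning) against the Gemini API's public REST endpoint, the one Google AI Studio API keys work with. Vertex AI uses different endpoints and authentication, so this wouldn't apply there as written. The helper names and the `GEMINI_API_KEY` environment variable are hypothetical, and the `v1beta` endpoint and model IDs (`gemini-1.5-pro`, `gemini-1.5-flash`) reflect the API at the time of writing and may change.

```python
import json
import os
import urllib.request

# Gemini API REST root (assumption: the v1beta endpoint used with Google AI Studio keys).
API_ROOT = "https://generativelanguage.googleapis.com/v1beta/models"


def build_generate_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Prepare, but don't send, a generateContent POST for the given model."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        f"{API_ROOT}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Pull the first candidate's text out of a generateContent response."""
    return response["candidates"][0]["content"]["parts"][0]["text"]


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # hypothetical variable name
    if key:
        req = build_generate_request("gemini-1.5-flash", "Summarize what a context window is.", key)
        with urllib.request.urlopen(req) as resp:
            print(extract_text(json.load(resp)))
    else:
        print("Set GEMINI_API_KEY to send a real request.")
```

In this sketch, switching between `gemini-1.5-pro` and `gemini-1.5-flash` is just a different `model` argument, which is how you'd trade some capability for speed and cost.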

How to access Google Gemini

The easiest way to check out Gemini is through the chatbot of the same name. If you subscribe to a Gemini plan, you'll also be able to use it throughout Google's other apps.

Developers can also test Google Gemini 1.5 Pro and 1.5 Flash through Google AI Studio or Vertex AI. And with Zapier's Google Vertex AI and Google AI Studio integrations, you can access the latest Gemini models from all the apps you use at work. Here are a few examples to get you started.

Send prompts to Google Vertex AI from Google Sheets and save the responses

Start a conversation with Google Vertex AI when a prompt is posted in a particular Slack channel

Send prompts in Google AI Studio (Gemini) for new or updated rows in Google Sheets

Label incoming emails automatically with Google AI Studio (Gemini)

Zapier is the leader in workflow automation, integrating with 6,000+ apps from partners like Google, Salesforce, and Microsoft. Use interfaces, data tables, and logic to build secure, automated systems for your business-critical workflows across your organization's technology stack.


This article was originally published in January 2024. The most recent update was in May 2024.