We've all seen a lot of AI-generated videos floating across social media. Most are dead internet theory at worst, cute at best. Of course, there are some that are scary good, but until recently, they were resource-intensive to develop, in terms of time, tokens, or even the hardware required.

Google has decided to change that. It's been launching AI offerings for every major category (think Firebase Studio for vibe coding), and Veo is its answer to AI-generated videos. And it's impressive.

Interestingly—in a side-eye kind of way—some of its training data is from YouTube. Google hasn't necessarily elaborated on what that means precisely, but do with that what you will.

In any case, Veo 3 seems to be a major breakthrough: you can now include audio generation alongside video generation, in a way that doesn't look like your videos are defying the laws of physics.

After spending time testing it myself, I can say it's a significant leap forward—though it still has plenty of quirks as this technology finds its footing.

What is Google Veo?

Google Veo is a family of AI video generation models that can create videos from text prompts or from static images. The latest model, Veo 3, includes native audio generation alongside video (the previous model, Veo 2, produced silent clips).

That native audio, real-world physics simulation, and advanced prompt understanding are what make Veo 3 stand out from other AI video generators. In comparison, other AI video generators, like Sora and Runway, don't have native audio functionality (yet).

Google Veo 3 at a glance

Veo 3 is really impressive—folks are already using it to overhaul their marketing strategies. Before we dive into how it works and what it can do, here's a quick glance at what it does well and where it still needs some love.

Google Veo pros:

Native audio and video generation with natural-sounding speech and background noise or music

Realistic physics simulation for elements like water, fabric, and light

Excellent cinematic camera controls and scene composition

Advanced prompt understanding, especially for interaction cues

Multiple input options (text, image, frames)

Integrated in Flow and Gemini, with an intuitive interface (especially in Flow)

Constantly improving and already ahead of competitors like Runway or Sora

Google Veo cons:

Limited in length to 8 seconds

Inconsistent character continuity across scenes, even with detailed prompts

Prompt interpretation varies, making repeatable outputs hard

Limited text accuracy in visual elements (e.g., miswritten words)

Some bugs and crashes when combining shots or switching between modes

Visible watermarks unless you pay for Ultra ($249.99/month)

Veo 3 has native audio generation alongside video generation

Native audio generation is Veo 3's headline feature, and it's impressive…when it works. In one experiment, for example, I was trying to create an obnoxious movie trailer. The voice was OK, but it didn't have the loud, energetic, thunderous quality I wanted.

But overall, I found that the speech patterns feel natural, not robotic, and environmental sounds blend well with the visuals. Here's an example of something I made.

The background music is also nice, but here's where I hit a limitation: it's difficult to fit both meaningful dialogue and cinematic music within the 8-second clip limit (which is currently a limitation across all available plans).

In videos without voice, the music fills the space beautifully, but when you need both dialogue and music, something has to give. For now, you might want to iron out the voice first and add the music you want after.
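If you want a rough sanity check before spending a generation, you can estimate how long a line of dialogue takes to say and see what's left for music. This is only a planning sketch, not anything Veo or Flow provides: the function names and the 150 words-per-minute speaking rate are my own assumptions, and only the 8-second cap comes from the limit described above.

```python
# Rough planning aid for the 8-second clip cap. Not a Veo or Flow feature.
CLIP_LIMIT_SECONDS = 8.0          # clip cap described in this article
ASSUMED_WORDS_PER_MINUTE = 150.0  # assumption: a typical conversational pace


def estimate_speech_seconds(dialogue: str,
                            words_per_minute: float = ASSUMED_WORDS_PER_MINUTE) -> float:
    """Estimate how long a line takes to say, using a simple word count."""
    word_count = len(dialogue.split())
    return word_count * 60.0 / words_per_minute


def fits_in_clip(dialogue: str, music_only_seconds: float = 0.0) -> bool:
    """True if the estimated speech plus any music-only time fits in one clip."""
    total = estimate_speech_seconds(dialogue) + music_only_seconds
    return total <= CLIP_LIMIT_SECONDS


line = "This summer, one editor will rewrite everything you know about deadlines."
print(round(estimate_speech_seconds(line), 1))    # 11 words -> 4.4
print(fits_in_clip(line, music_only_seconds=3.0))  # True
print(fits_in_clip(line, music_only_seconds=4.0))  # False
```

At that assumed pace, an 8-second clip holds about 20 spoken words with no room left for music on its own, which matches how tight it felt in practice.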

The physics in Veo 3 videos actually make sense

From all the videos I've seen made with it, Veo 3 excels at water physics, fabric movement, and lighting reflections. It handles complex scenarios like "rain on glass" or "smoke dispersal" more convincingly than competitors.

And during my testing, the physics felt pretty believable. It's not perfect, as you can see in the video above, but people moved naturally, clothing behaved correctly, and lighting looked realistic.

And Google knows it—you'll see this plastered all over its marketing.

Maintaining character consistency across scenes is tricky with Veo 3

Google markets character continuity as a key differentiator. There are two main features designed to help maintain character appearance across multiple shots:

Jump to helps create consistency by bringing a specific detail, like a character, into new videos.

Extend expands upon what's happening in the current scene.

Of course, neither feature is perfect.

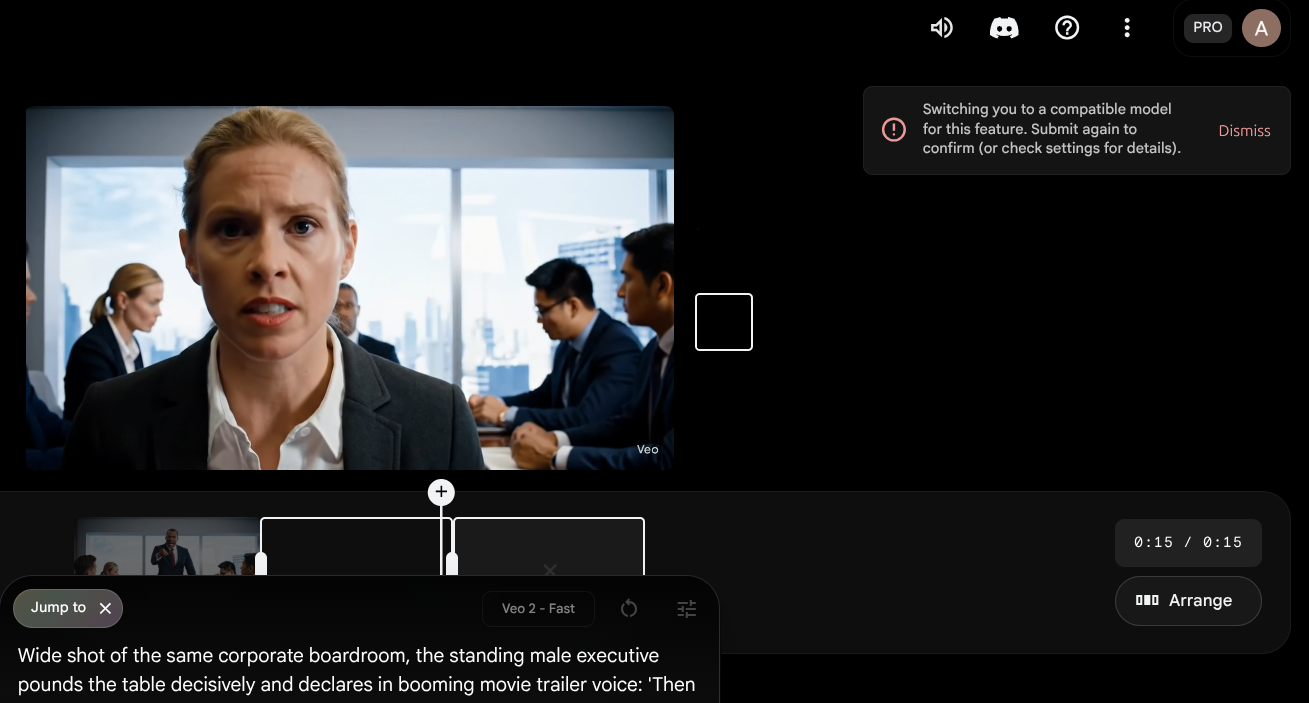

In my first test, I started with Veo 3 Fast (which allows text-to-video) for the initial shot. When I tried using the Jump to feature to generate the second shot, Veo 3 Fast wasn't compatible: it automatically switched to Veo 2 Fast, which lost the audio entirely.

So I switched to Veo 3 Quality (which allows frame-to-video) for the second shot and added a third shot with another prompt using Veo 3 Quality. Neither of the Veo 3 Quality outputs included audio. And when I tried to add the third shot to my scene, the video got corrupted and I received the error: "Something Went Wrong."

Overall, throughout my tests, separate prompts for different shots made it nearly impossible to achieve character consistency. Even with hyper-detailed descriptions, Veo 3 interpreted them as creative suggestions rather than strict requirements, giving me completely different actors and settings.

It's still early days here, and what I was trying to do was complex, so I'm not surprised it didn't nail it. But Veo getting this right feels like it's probably just a few small tweaks away.

Veo 3 offers professional controls and quality, but it requires a lot of hand-holding

The camera work was superb in my tests. Veo 3's cinematic language understanding delivers excellent composition and movement quality.

I got that same quality for single-person shots, but whenever I was dealing with multiple people in a video, I had to be a lot more prescriptive. For example, in my initial tests, people in meetings were looking toward the camera instead of at the person talking.

A little awkward.

I tried to fix this by adding explicit interaction cues to Veo prompts like "looking directly at him with concerned expressions" and "maintain eye contact with the presenter throughout." The results were...medium.

Veo 3 is nuanced in its prompt adherence, but it doesn't interpret the same prompt consistently

Veo 3 really seemed to understand nuanced prompts and delivered results that matched my vision. But there's a limitation (the same one you'll find with most large language models, too): Veo 3 doesn't interpret the same prompt consistently.

Running identical prompts multiple times can yield surprisingly different results, making it difficult to get consistent outputs for professional workflows that require exact replication.

You can see the characters changing throughout, and when I capitalized the word "foundation," things got even wonkier.

Veo 3 works with multiple input methods

Veo 3 offers several ways to create videos:

Text-to-video: This is the most widely tested and praised input method, and it's what I used for most of my video generation tests.

Image-to-video: My testing with this gave mixed results, but when it works, it's pretty neat. Note that this currently uses Veo 2 Fast, so there's no audio.

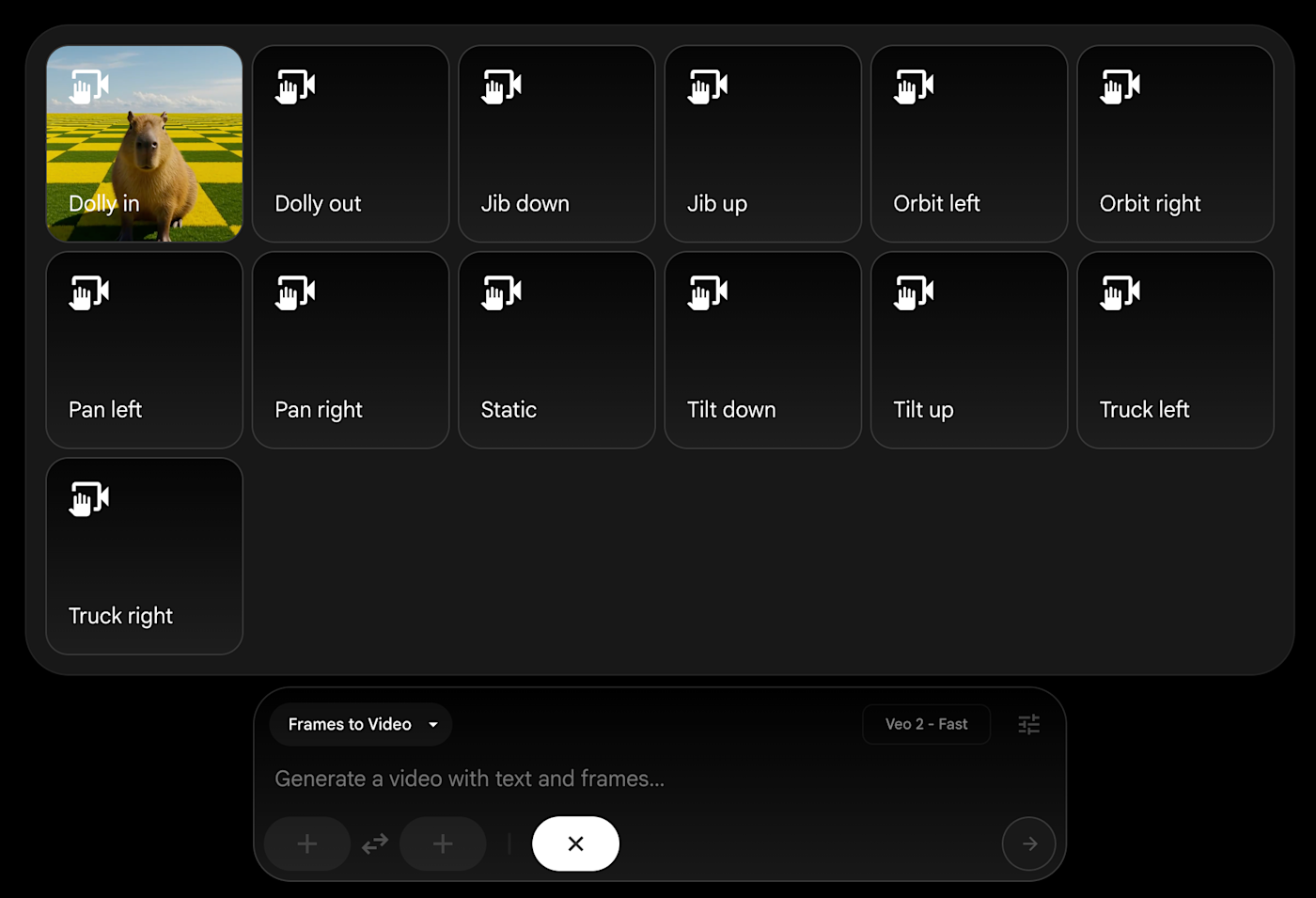

Animating my headshot with Google Veo

Frames-to-video: This one has limited Veo 3 compatibility, as I experienced firsthand. You have to use Veo 2 or Veo 3 Quality, which can create workflow complications. But it includes access to camera controls, which allows precise shot direction (e.g., wide, close-up, tracking shots).
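To keep track of which input method pairs with which model, it can help to write the combinations down as a small lookup table. The sketch below only records what I ran into during testing, as described above. The dictionary layout and helper names are my own, the model lists may be incomplete, and the compatibility itself may change as Flow is updated.

```python
# Input methods and the models they worked with in this article's tests.
# This is a snapshot of one tester's experience, not an official matrix.
INPUT_MODES = {
    "text-to-video": {"models": ["Veo 3 Fast"], "audio": True},
    "image-to-video": {"models": ["Veo 2 Fast"], "audio": False},
    # Frames-to-video outputs had no audio in my tests, even on Veo 3 Quality.
    "frames-to-video": {"models": ["Veo 2", "Veo 3 Quality"], "audio": False},
}


def models_for(mode: str) -> list[str]:
    """Return the models this article saw working for an input method."""
    return INPUT_MODES[mode]["models"]


def modes_with_audio() -> list[str]:
    """Return the input methods that produced audio in these tests."""
    return [mode for mode, info in INPUT_MODES.items() if info["audio"]]


print(models_for("frames-to-video"))  # ['Veo 2', 'Veo 3 Quality']
print(modes_with_audio())             # ['text-to-video']
```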

Where can you access Veo 3?

Veo 3 is available in both the Gemini chatbot and Flow, Google's AI filmmaking app. Personally, I found it easier to use in Flow.

It's also more accessible in Flow because Gemini gives Google AI Pro subscribers only 10 trial Veo 3 videos, while Flow offers 100 generations per month for the same plan. (More on pricing in a bit.)

Flow is also purpose-built for video creation with professional tools like:

Camera controls for precise shot direction

Scene-building capabilities

Project management and organization

Here's a video clip example with camera controls.

There are also some geographic limitations to be aware of, specifically that Flow isn't available throughout the EU yet. You can use Veo 2 in the EU via Gemini only. Otherwise, it's available in 70+ countries, including the US, Canada, Australia, the UK, and India.

Google Veo pricing

You'll need to access Google Veo through a broader Google subscription plan, specifically:

Google AI Pro ($19.99 per month) gives you 1,000 monthly AI credits. In Flow, each generation costs 100 credits with Veo 3 Quality, 20 credits with Veo 3 Fast, and 10 credits with Veo 2 Fast.

Google AI Ultra ($249.99 per month) gives you 12,500 monthly AI credits, early access to new features, and no visible watermarks. It's notably the only plan that doesn't include watermarks (paying Pro customers aren't super happy about it).

Ultra subscribers also get access to Ingredients to Video. This feature lets you add individual elements (characters, objects, backgrounds) separately and combine them into scenes for better consistency across shots.
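To see how far each plan's credits stretch, here's a quick back-of-the-envelope calculation. It reads the per-model credit figures listed for Flow as the cost of a single generation, and it assumes Ultra is charged the same per-generation cost as Pro. Both are assumptions on my part, and the dictionary and function names are purely illustrative.

```python
# Monthly credits per plan, as listed in this article.
MONTHLY_CREDITS = {"Google AI Pro": 1_000, "Google AI Ultra": 12_500}

# Assumption: these are the credits charged per generation in Flow,
# and they are the same on both plans.
CREDITS_PER_GENERATION = {"Veo 3 Quality": 100, "Veo 3 Fast": 20, "Veo 2 Fast": 10}


def generations_per_month(plan: str, model: str) -> int:
    """How many full generations a plan's monthly credits cover for one model."""
    return MONTHLY_CREDITS[plan] // CREDITS_PER_GENERATION[model]


for plan in MONTHLY_CREDITS:
    for model in CREDITS_PER_GENERATION:
        print(f"{plan}: {generations_per_month(plan, model)} x {model}")
```

Under those assumptions, Pro works out to 10 Veo 3 Quality, 50 Veo 3 Fast, or 100 Veo 2 Fast generations a month, which lines up with the 100 Flow generations mentioned earlier.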

How to create your first video with Veo 3

Once you're subscribed to the Google AI Pro or Ultra plan, head over to Flow (which I recommend) or use the Gemini app (though I had a hard time getting it to work there).

Here's how to get started with Google Veo in Flow:

Click in the prompt field, and describe your scene in detail. Include specifics: setting, characters, actions, and camera angles.

For dialogue, use quotes, e.g., "Character says 'specific dialogue here.'"

Add interaction cues, e.g., "looking directly at each other" or "nodding in agreement."

Specify audio, like background music type, environmental sounds, and so on.

After you set everything up according to your preferences, generate the video, and wait for the end result, which is generally available within a few minutes.
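If you write a lot of prompts, the steps above can be captured in a small template so you don't forget a piece. This is just a sketch of one way to assemble the text you paste into Flow. The `build_prompt` helper, its parameters, and the exact sentence layout are hypothetical, and nothing here calls Veo or any Google API.

```python
from typing import Iterable, Optional, Tuple


def build_prompt(
    scene: str,
    dialogue: Iterable[Tuple[str, str]] = (),
    interaction_cues: Iterable[str] = (),
    audio: Optional[str] = None,
) -> str:
    """Assemble scene, quoted dialogue, interaction cues, and audio into one prompt."""
    sentences = [scene.strip().rstrip(".") + "."]
    # Dialogue goes in quotes, following the "Character says '...'" pattern.
    for speaker, line in dialogue:
        sentences.append(f"{speaker} says '{line}'")
    cues = [cue.strip() for cue in interaction_cues if cue.strip()]
    if cues:
        sentences.append("Characters are " + ", ".join(cues) + ".")
    if audio:
        sentences.append("Audio: " + audio.strip().rstrip(".") + ".")
    return " ".join(sentences)


prompt = build_prompt(
    scene="Wide shot of a small meeting room, three coworkers at a table, slow push-in",
    dialogue=[("The presenter", "We shipped on time.")],
    interaction_cues=["looking directly at the presenter", "nodding in agreement"],
    audio="quiet room tone, soft upbeat background music",
)
print(prompt)
```

Keeping the pieces separate also makes it easier to change one element at a time, like the audio description, while leaving the rest of the prompt alone.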

Here are a few tips:

Avoid using all caps for emphasis. It confuses audio generation.

Be extremely specific about character interactions to avoid the camera-staring I mentioned earlier.

For multi-shot sequences, embrace scene changes rather than fighting for consistency.

Test different variations of the same prompt to find what works.
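The all-caps tip is easy to check automatically before you hit generate. Here's a minimal sketch that flags fully capitalized words and offers a lowercased version. The helper names are my own, and the check is deliberately blunt: it also flags legitimate acronyms, so treat the output as something to review rather than apply blindly.

```python
import re

# Matches whole words made of two or more capital letters, e.g. "FOUNDATION".
# Note: this also catches real acronyms such as "CEO".
ALL_CAPS_WORD = re.compile(r"\b[A-Z]{2,}\b")


def find_all_caps(prompt: str) -> list[str]:
    """Return every fully capitalized word found in the prompt."""
    return ALL_CAPS_WORD.findall(prompt)


def soften_all_caps(prompt: str) -> str:
    """Lowercase fully capitalized words, leaving everything else untouched."""
    return ALL_CAPS_WORD.sub(lambda match: match.group(0).lower(), prompt)


draft = "The narrator says 'Build on a strong FOUNDATION.'"
print(find_all_caps(draft))    # ['FOUNDATION']
print(soften_all_caps(draft))  # The narrator says 'Build on a strong foundation.'
```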

And reminders on a few limitations:

Every video is capped at 8 seconds, which severely limits storytelling possibilities. You can't develop complex narratives or showcase detailed processes.

Google AI Pro users get visible watermarks on all generated content. Only Ultra subscribers ($249.99 per month) avoid this branding.

When I provided a frame containing a profit and loss statement, Veo 3 added text errors like "Expensestes" instead of "Expenses." The AI struggles with accurate text generation within scenes. That said, so did OpenAI's early image models—I'm sure this won't be an issue for long.

Give Google Veo a spin for AI video generation

Here's my very flawed—but still impressive—final cut using Google Veo with Flow.

Veo isn't ready for prime time start-to-finish projects, but the premise and early tests are certainly promising. And taking the quirks for a spin now will give you an idea of how to refine and speed up the video creation process down the line as the technology catches up.
