Google has been in its "Gemini era" for more than a year now, and while the confusing rebrandings have slowed, everything else continues to improve at a rapid pace. Gemini is the name Google gave to its current generation family of multimodal AI models, but in typical Google fashion, it also applies to basically everything else that's related to AI.

It can get a touch confusing since, by my reckoning, Google has:

Google Gemini, a family of multimodal AI models. This is what Google uses in its own apps and to power AI features on its devices, but developers can integrate it in their apps, too.

Google Gemini, a chatbot that runs on the Gemini family of models. (This is the chatbot that used to be called Bard.)

Google Gemini, a replacement for Google Assistant that's rolling out to Android smartphones, Wear OS watches, Android Auto, and Google TV.

Gemini for Google Workspace, the AI features integrated across Gmail, Google Docs, and the other Workspace apps for paying users.

And a few more Geminis that I'm sure I'm missing.

All of these new Geminis are based around the core family of multimodal AI models, so let's start there.

What is Google Gemini?

Google Gemini is a family of AI models, like OpenAI's GPT. They're all multimodal models, which means they can understand and generate text like a regular large language model (LLM), but they can also natively understand, operate on, and combine other kinds of information like images, audio, videos, and code.

For example, you can give Gemini a prompt like "what's going on in this picture?" and attach an image, and it will describe the image and respond to further prompts asking for more complex information. Similarly, if you give it a load of data, it can generate a graph or other visualization; or it can help you interpret charts, read signs, or translate menus.
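To make the "prompt plus image" idea concrete, here's a minimal sketch of what such a request body could look like if you were calling the Gemini API's `generateContent` method directly. The helper name `build_image_prompt` and the placeholder image bytes are my own illustration, not anything from Google, and the code only builds the payload without sending it.

```python
import base64
import json


def build_image_prompt(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a generateContent-style body with one text part and one inline image part."""
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            # Inline images travel as base64-encoded strings.
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ]
            }
        ]
    }


# Placeholder bytes standing in for a real image file (hypothetical).
fake_image = b"\x89PNG\r\n\x1a\n"
body = build_image_prompt("What's going on in this picture?", fake_image)
print(json.dumps(body, indent=2))
```

The point is simply that the text and the image sit side by side as "parts" of the same prompt, which is what lets a multimodal model reason over both at once.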

Because we're now deep in the corporate competition era of AI, most companies are keeping pretty quiet on the specifics of how their models work and differ. Still, Google has confirmed that the Gemini models use a transformer architecture and rely on strategies like pretraining and fine-tuning, much as other major AI models do. The larger Gemini models have also shifted to a mixture-of-experts approach, which allows them to operate more efficiently with larger parameter counts.
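Google hasn't published how its mixture-of-experts layers are built, so treat the following as a toy illustration of the general idea rather than anything Gemini-specific. A small router scores every expert, only the top few actually run, and their outputs are blended. The experts, scores, and function names here are all made up for the example.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    # Renormalize so the selected experts' weights sum to 1.
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Four toy "experts"; in a real model each would be a feed-forward sub-network.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
# Hypothetical router scores for one token; a real router computes these from the token itself.
gate_scores = [0.1, 2.0, 2.0, -1.0]

print(route_top_k(gate_scores))
print(moe_forward(3.0, experts, gate_scores))
```

With `k=2`, only two of the four experts do any work for this input. That's the efficiency argument in miniature: the total parameter count can grow while the compute spent per token stays roughly flat.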

The latest Gemini models hit all the state-of-the-art bases. While other model families have caught up, Google pioneered long context windows with Gemini. This means that a prompt can include more information to better shape the responses the model is able to give and what resources it has to work with. Right now, every current model in the Gemini family has at least a one million token context window. That's enough for multiple long documents, large knowledge bases, and other text-heavy resources. If you have to parse a complicated contract, you could upload the whole document to Gemini and ask questions about it, as long as it fits within that window. This is also useful if you're building a retrieval-augmented generation (RAG) pipeline, though your API costs would be very high if you actually used the full context window in production.
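If you want a quick sanity check on whether a pile of documents would fit in a one million token window, a back-of-the-envelope estimate is usually enough. The sketch below assumes roughly four characters per token, which is a common rule of thumb for English text and not Gemini's actual tokenizer. The function names and the answer reserve are also just illustrative.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000  # the window size quoted above
CHARS_PER_TOKEN = 4  # rough heuristic for English text; an assumption, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents, question, reserve_for_answer=8_000):
    """Return (fits, estimated_prompt_tokens) for a set of documents plus a question."""
    used = sum(estimate_tokens(d) for d in documents) + estimate_tokens(question)
    return used + reserve_for_answer <= CONTEXT_WINDOW_TOKENS, used


# Stand-in for a long contract; real text would come from your own files.
contract = "lorem ipsum " * 50_000
fits, used = fits_in_context([contract], "What are the termination clauses?")
print(fits, used)
```

For anything close to the limit, you'd want an exact count from the API's own token counting rather than a heuristic like this.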

Similarly, Google has added reasoning abilities to the latest Gemini models, Gemini 2.5 Pro and Gemini 2.5 Flash—though it calls it "thinking." This makes them more capable of working through hard logic problems, accurately understanding scientific information, and generating code.

Google Gemini models come in multiple sizes

The different Gemini models are designed to run on almost any device, which is why Google is integrating them absolutely everywhere. Google claims that its different versions are capable of running efficiently on everything from data centers to smartphones.

Each Gemini model differs in how many parameters it has and, as a result, how good it is at responding to more complex queries as well as how much processing power it needs to run. Unfortunately, figures like the number of parameters any given model has are often kept secret—unless there's a reason for a company to brag.

Right now, Google has the following Gemini models—though this is changing rapidly.

Gemini 2.5 Pro

Gemini 2.5 Pro is Google's most advanced model yet. It has a 1M token context window and is capable of reasoning. It's especially good at coding and responding to complex prompts. It's currently available as a preview through the API and Gemini chatbot.

Gemini 2.5 Flash

Gemini 2.5 Flash is designed to be a fast and cost-efficient reasoning model. It has a 1M token context window. It's flexible and intended for use in a wide variety of applications, from text summarization to chatbots to data extraction. It's currently available as a preview through the API and Gemini chatbot.

Gemini 2.0 Flash

Gemini 2.0 Flash is still the most widely available Gemini model. It powers the Gemini chatbot, Gemini for Google Workspace, and lots of other features. While it's no longer state-of-the-art, it's still a very powerful everyday model. It will presumably be replaced by Gemini 2.5 Flash as soon as it's out of preview.

Older Gemini models

In addition to the state-of-the-art Gemini 2.5 models, there are a few other Gemini models worth noting:

Gemini 1.0 Ultra. Gemini Ultra was the largest and most powerful model in the original Gemini lineup. It was never widely released, though there are persistent rumors that it will get an upgrade.

Gemini 1.5 Pro and 1.5 Flash. These are two widely available Gemini models. As of right now, they're still available through Gemini's API, so some apps built on top of Gemini rely on them.

Gemini 1.0 Nano. A small model designed for on-device operations, it seems to have been supplanted by Flash but may well be brought back at some point.

How does Google Gemini compare to other LLMs?

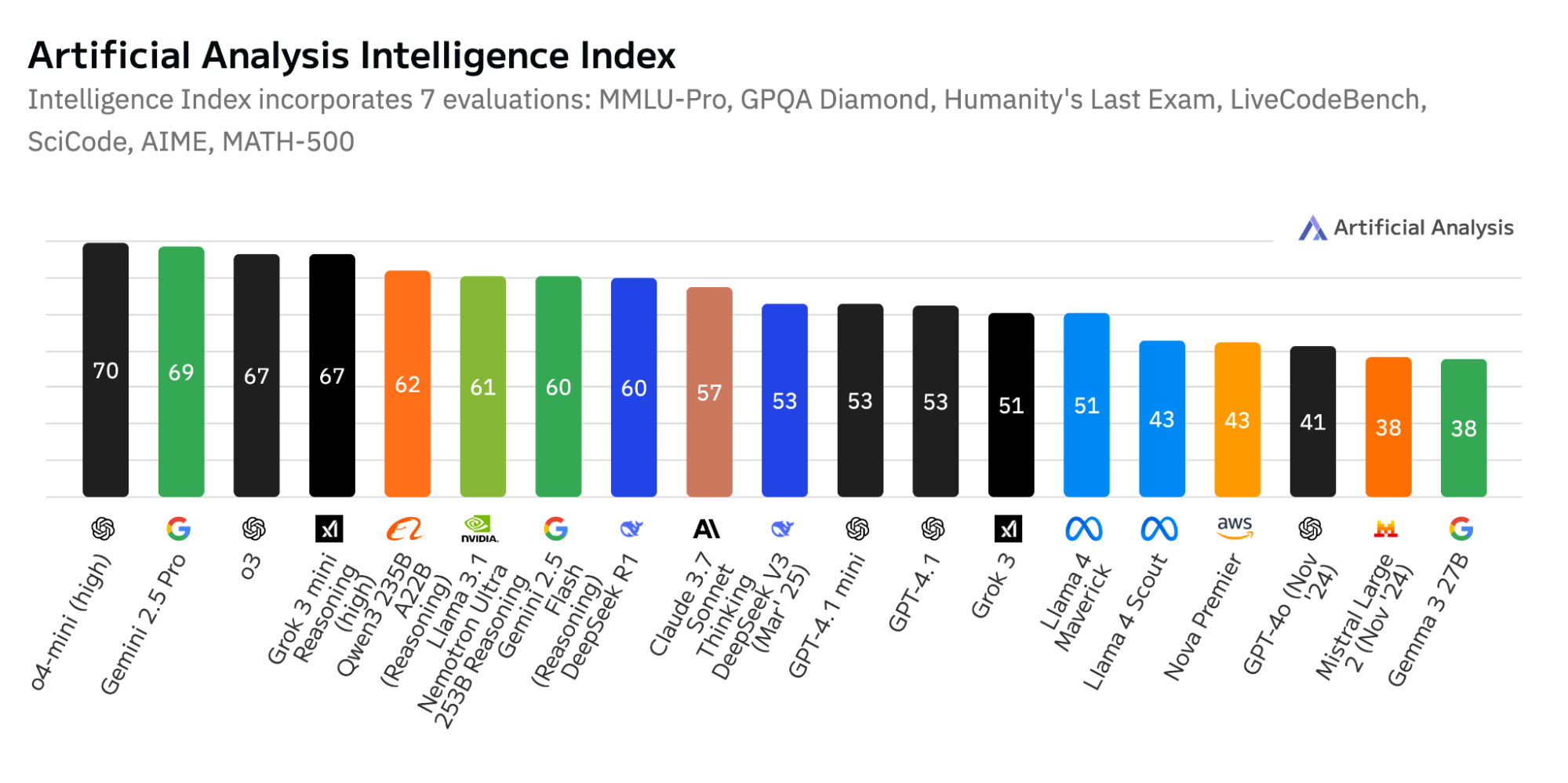

We've reached the point where directly comparing AI models is increasingly irrelevant. Eighteen research labs have now produced GPT-4 equivalent models. The best models from OpenAI, Anthropic, Meta, Google, and a number of other companies are all incredibly powerful, and how you fine-tune and employ them is now significantly more relevant than which model you choose.

Similarly, the trade-offs between speed and power are becoming more and more important. Gemini 2.5 Pro is one of the most powerful AI models yet developed, but it costs 8 to 25 times as much as Gemini 2.5 Flash per million tokens, depending on whether you need reasoning and how much of the context window is required.
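To see how a price gap like that plays out per request, you can run the arithmetic yourself. The per-million-token prices below are made-up placeholders, not Google's actual rates, so swap in the current figures from the pricing page before drawing any conclusions. The `Pricing` class and `request_cost` helper are likewise just for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price in dollars per one million tokens."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of a single request at the given per-million-token prices."""
    return (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000


# PLACEHOLDER prices for illustration only; these are not real Gemini prices.
larger_model = Pricing(input_per_million=1.00, output_per_million=10.00)
smaller_model = Pricing(input_per_million=0.10, output_per_million=0.50)

# A hypothetical request: a long document in, a short summary out.
input_tokens, output_tokens = 200_000, 2_000
big = request_cost(larger_model, input_tokens, output_tokens)
small = request_cost(smaller_model, input_tokens, output_tokens)
print(f"larger: ${big:.4f}  smaller: ${small:.4f}  ratio: {big / small:.1f}x")
```

Because input and output tokens are priced separately, the ratio shifts with the shape of your workload, which is why a single "X times more expensive" number never tells the whole story.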

With that said, on the various benchmarks, Gemini 2.5 Pro is currently second only to OpenAI's o4-mini (high), while Gemini 2.5 Flash is firmly in the top 10 and ahead of models like Claude 3.7 Sonnet and GPT-4.1. These leaderboards change rapidly, but as of May 2025, the Gemini 2.5 models are some of the best available. They're likely to remain competitive with the best equivalent models for at least the next few months.

Despite a slow start, Google has got its AI mojo back.

How does Google use Gemini?

Google has integrated or plans to integrate Gemini basically everywhere. The rollout is taking a while because Google has so many different products that all need to be updated—and some of them don't lend themselves to AI. But let's go through the major Gemini-powered tools:

Google Gemini (the chatbot). The most obvious place that Google deploys Gemini is with the chatbot-formerly-known-as-Bard. It's also called Gemini and is more of a direct ChatGPT competitor than a replacement for Search. It has a deep research mode, can search the web, and integrates with other apps. You can even customize it with a feature called Gems. If you're deep in Google's ecosystem, it's a great tool.

Google Workspace. The other area where Gemini is incredibly prominent is Google's Workspace apps like Gmail, Docs, and Sheets. You need to be a Business Standard subscriber ($14/user/month) to get the full power of Gemini across all the different apps, but it can do a lot. Zapier has a full breakdown of all Gemini for Workspace can do, but some of the highlights are summarizing emails in Gmail and files in Google Drive, generating charts and tables in Sheets, and taking notes and translating in Google Meet calls.

Google One. For non-business users, the $20/month Google One AI Premium plan gets you access to more of Gemini's most advanced models and features in the chatbot as well as Gemini in Gmail, Docs, and other Google apps.

Google Search. Search is going to keep getting a lot of Gemini-powered updates. Its AI Overviews are basically quick answer boxes for more complex queries. And AI Mode (available to some users in Labs) offers more of an actual AI search engine, like Perplexity.

Android Auto and Gemini for Google TV. Both products are due to get Gemini updates later this year.

Android. Gemini integration continues to roll out for Google's smartphone operating system.

Everywhere else. Google has committed hard to AI, and after a few bad years, it's finally caught up with its competitors. Expect to see Gemini in every app Google can add it to—at least until there's another name change. It's even coming to Chrome, though this feature has been teased for a while without yet seeing the light of day.

Google Gemini is designed to be built on top of

In addition to using Gemini in its own products, Google also allows developers to integrate Gemini into their own apps, tools, and services.

It seems that almost every app now is adding AI-based features, and many of them are using OpenAI's models or Meta's Llama models to do it. Google wants a piece of that action, so Gemini is designed from the start for developers to be able to build AI-powered apps and otherwise integrate AI into their products. Google's big advantage is that developers can do this through its existing cloud computing, hosting, and other web services.

Developers can access previews of Gemini 2.5 Pro and 2.5 Flash, as well as Gemini 2.0 Flash and other models, through the Gemini API in Google AI Studio or Google Cloud Vertex AI. This allows them to further train Gemini on their own data and build powerful tools, much as developers have already been doing with GPT.
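For a sense of what a basic call to the Gemini API involves, here's a rough sketch that builds a text-only request using nothing but Python's standard library. It assumes the public REST endpoint and the `x-goog-api-key` header used with Google AI Studio keys. The `build_request` helper and the `YOUR_API_KEY` placeholder are mine, and the request is constructed but deliberately not sent. In practice, most developers would reach for Google's official SDKs instead.

```python
import json
import urllib.request

# Base URL for the Gemini API (Google AI Studio); Vertex AI uses different endpoints.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but don't send) a text-only generateContent request."""
    url = f"{API_BASE}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


req = build_request(
    "gemini-2.0-flash",
    "Summarize this support ticket in one sentence.",
    "YOUR_API_KEY",  # placeholder; use a real key from Google AI Studio
)
print(req.get_method(), req.full_url)

# To actually send it (needs a valid key and network access):
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)
#     print(reply["candidates"][0]["content"]["parts"][0]["text"])
```

Swapping models is just a matter of changing the model name in the URL, which makes it easy to test the same prompt against Flash and Pro.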

How to access Google Gemini

The easiest way to check out Gemini is through the chatbot of the same name. If you subscribe to a Gemini plan, you'll also be able to use it throughout Google's various apps.

Developers can also test Google Gemini 2.5 Pro, 2.5 Flash, and other models through Google AI Studio or Vertex AI. And with Zapier's Google Vertex AI and Google AI Studio integrations, you can access the latest Gemini models from all the apps you use at work. Here are a few examples to get you started, or you can learn more about how to automate Google AI Studio.

Send prompts in Google Vertex AI every day using Schedule by Zapier

Classify texts in Google Vertex AI when new or updated rows occur in Google Sheets

Generate draft responses to new Gmail emails with Google AI Studio (Gemini)

Create a Slack assistant with Google AI Studio (Gemini)

Zapier is the most connected AI orchestration platform—integrating with thousands of apps from partners like Google, Salesforce, and Microsoft. Use interfaces, data tables, and logic to build secure, automated, AI-powered systems for your business-critical workflows across your organization's technology stack. Learn more.

This article was originally published in January 2024. The most recent update was in May 2025.