Generative AI tools are impressive, but I've long argued that they aren't very useful in the real world unless they have access to more information than just their training data—and can actually do something with it. It's this ability that allows AI tools to create usable content, offer useful insights, and perform actions that actually move work forward.

Model Context Protocol (MCP) is a method of giving AI models the context they need and allowing them to take real action in other apps.

So let's look more at what MCP is, how it works, and why it matters.

But first, a caveat: MCP is a technical standard for developers. If you're not comfortable with client-server architecture, APIs, and the nuts and bolts of the modern web, MCP probably isn't the tool for you. Instead, you should check out Zapier Agents. It also allows you to connect AI assistants to external sources of data—but through a user-friendly web app.

What is MCP?

MCP is a two-way communication bridge between AI assistants and external tools, providing access to information, but more importantly, giving the AI the ability to take action.

It's an open source protocol designed to safely and securely connect AI tools to data sources like your company's CRM, Slack workspace, or dev server. That means your AI assistant can pull in relevant data and trigger actions in those tools—like updating a record, sending a message, or kicking off a deployment. By giving AI assistants the power to both understand and act, MCP enables more useful, context-aware, and proactive AI experiences.

MCP was originally developed by Anthropic (the company behind Claude), but it's now been embraced by OpenAI as well as AI platforms like Zapier, Replit, Sourcegraph, and Windsurf.

How does MCP work?

MCP is a standard framework that defines how AI systems can interact with external tools, services, and data sources. Instead of having to create custom integrations for every service, MCP defines the basics of how they should interoperate, how requests are structured, what features are available, and how they can be discovered. It enables developers to easily and reliably build secure, two-way connections between AI tools and external data sources, apps, and other services.

The simplest analogy here is the World Wide Web. The Hypertext Transfer Protocol (HTTP) defines how browsers and apps interact with websites and web servers. You can connect to zapier.com using Chrome, Safari, or even your Terminal app because they all use HTTP. MCP is an attempt to build an HTTP-like protocol for AI interoperability: it gives AI tools a common protocol to use.

Of course, that doesn't quite capture the whole picture because AI tools aren't actually like web browsers. They're able to understand language and intent, so MCP is designed to provide AI models with a structured set of options they can choose from. If you have an MCP server that's capable of downloading webpages from the internet, the AI model should be able to invoke it whether you say "go to zapier.com," "take me to zapier.com," or anything else like that. And if you say "get me my dog photos," it should know to invoke Google Drive instead.
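To make that "structured set of options" a little more concrete, here's a rough sketch of the kind of messages involved. MCP messages follow JSON-RPC 2.0, and tools are discovered with `tools/list` and invoked with `tools/call`. The tool names (`fetch_page`, `search_drive`), their descriptions, and their schemas are invented for illustration; a real server defines its own.

```python
import json

# Hypothetical reply to a "tools/list" request: the server advertises its tools.
# The tool names, descriptions, and schemas are made up for this example.
tools_list_result = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "fetch_page",
                "description": "Download a webpage and return its text.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"url": {"type": "string"}},
                    "required": ["url"],
                },
            },
            {
                "name": "search_drive",
                "description": "Search the user's Google Drive for files.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"],
                },
            },
        ]
    },
}


def build_tool_call(request_id, name, arguments):
    """Build a JSON-RPC 2.0 "tools/call" request for the tool the model picked."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# "go to zapier.com" and "take me to zapier.com" should both end up here.
request = build_tool_call(2, "fetch_page", {"url": "https://zapier.com"})
print(json.dumps(request, indent=2))
```

The model never sees your phrasing as a command. It sees the tool descriptions and decides which one fits, which is why the descriptions matter as much as the code behind them.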

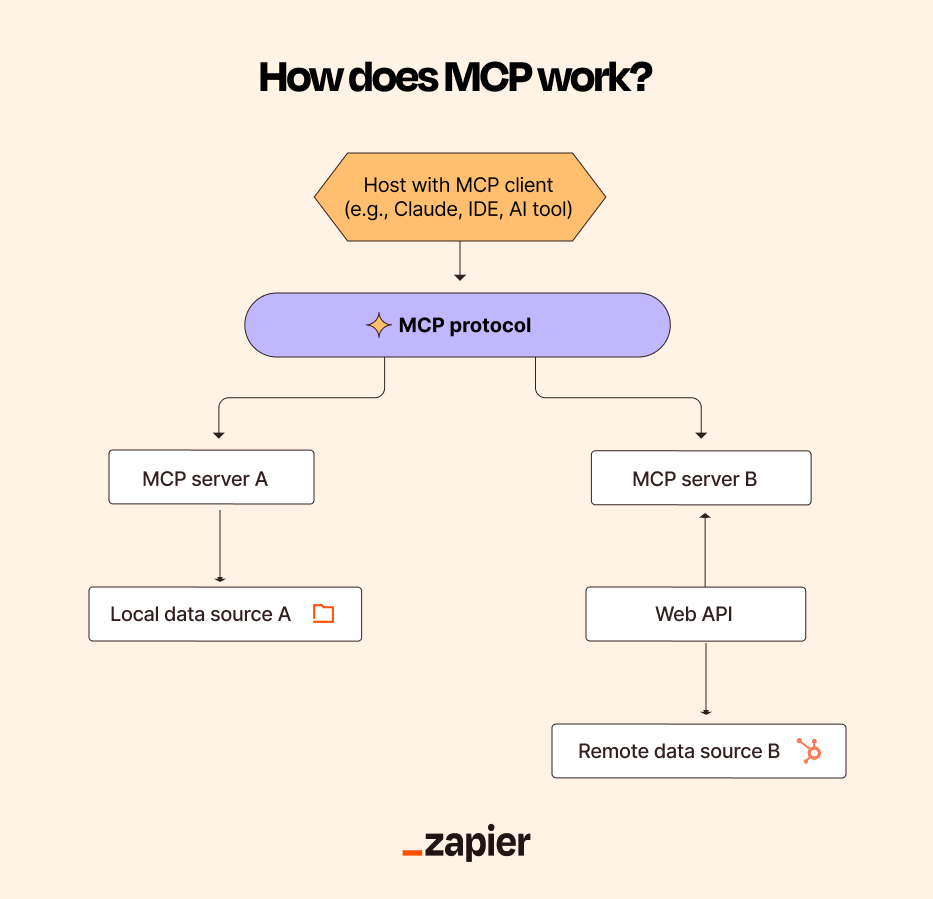

MCP's client-host-server model

Now, let's look at more of the nitty-gritty. MCP operates using a client-host-server model:

The MCP host—typically, a chatbot, IDE, or other AI tool—is the central coordinator within the application: it manages each client instance and controls permissions and security policies. Depending on how things are set up, the host may decide to call for something over MCP based on your request or based on an automated process.

The MCP client is initiated by the host and connects to a single server; it handles communications between the host and the server.

The MCP server connects to a data source or tool, either local or remote, and exposes specific capabilities. For example, an MCP server connected to a file storage app can provide capabilities like "search for a file" and "read a file," while an MCP server connected to your team chat app can provide capabilities like "get my latest mentions" and "update my status." Anthropic maintains a list of available MCP servers, or if you're a developer, you can write your own.
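If it helps to see the three roles side by side, here's a toy model in plain Python. It doesn't use any MCP library, and every class and capability name (`ToyHost`, `ToyClient`, `ToyServer`, `files.search`, and so on) is hypothetical. It only illustrates the division of labor: the host enforces permissions, each client talks to exactly one server, and each server exposes a few capabilities.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class ToyServer:
    """Stands in for an MCP server: a name plus the capabilities it exposes."""
    name: str
    capabilities: Dict[str, Callable[..., str]]


@dataclass
class ToyClient:
    """One client per server: it relays calls from the host to that server."""
    server: ToyServer

    def call(self, capability: str, **kwargs) -> str:
        return self.server.capabilities[capability](**kwargs)


@dataclass
class ToyHost:
    """The host creates clients and decides which capabilities are permitted."""
    allowed: Set[str]
    clients: Dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        # A new client instance is created for every server the host connects to.
        self.clients[server.name] = ToyClient(server)

    def request(self, server_name: str, capability: str, **kwargs) -> str:
        # The permission check happens in the host, before any client is used.
        if f"{server_name}.{capability}" not in self.allowed:
            raise PermissionError(f"{server_name}.{capability} is not permitted")
        return self.clients[server_name].call(capability, **kwargs)


files = ToyServer("files", {"search": lambda query: f"results for {query!r}"})
chat = ToyServer("chat", {"update_status": lambda text: f"status set to {text!r}"})

host = ToyHost(allowed={"files.search"})
host.connect(files)
host.connect(chat)

print(host.request("files", "search", query="Q3 report"))
```

Here the chat server is connected but `chat.update_status` isn't on the allow list, so the host would refuse that request even though the server is capable of handling it.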

MCP servers expose their capabilities through three basic primitives:

Prompts are pre-defined templates for the LLM that can be selected by the user through slash commands, menu options, and the like.

Resources are structured data, like files, database records, or a commit history, that provides additional context to the LLM.

Tools are functions that allow the model to take action, like interacting with an API or writing something to a file.
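As a rough illustration of how prompts, resources, and tools differ, here's a tiny in-memory stand-in for a server. The method names (`prompts/get`, `resources/read`, `tools/call`) come from the MCP specification, but everything else is an assumption made for the example: the `notes://standup` URI, the `summarize` prompt, the `write_file` tool, and the simplified return values (a real server returns structured results, not bare strings).

```python
from typing import Any, Dict

# All names, URIs, and contents below are made up for illustration.
PROMPTS = {"summarize": "Summarize the following text in three bullet points:\n{text}"}
RESOURCES = {"notes://standup": "Shipped search fix. Next: billing bug."}
written: Dict[str, str] = {}  # stands in for a file system


def write_file(path: str, content: str) -> str:
    """A tool: unlike a prompt or a resource, it changes something."""
    written[path] = content
    return f"wrote {len(content)} characters to {path}"


TOOLS = {"write_file": write_file}


def handle(method: str, params: Dict[str, Any]) -> Any:
    """Route a request to the matching primitive (return shapes are simplified)."""
    if method == "prompts/get":
        return PROMPTS[params["name"]].format(**params.get("arguments", {}))
    if method == "resources/read":
        return RESOURCES[params["uri"]]
    if method == "tools/call":
        return TOOLS[params["name"]](**params.get("arguments", {}))
    raise ValueError(f"unknown method: {method}")


# A resource supplies context, a prompt shapes the request, a tool takes action.
notes = handle("resources/read", {"uri": "notes://standup"})
prompt = handle("prompts/get", {"name": "summarize", "arguments": {"text": notes}})
result = handle(
    "tools/call",
    {"name": "write_file", "arguments": {"path": "summary.txt", "content": notes}},
)
print(result)
```

Only the tool call has a side effect: after it runs, `written` holds the new file, while reading the resource and fetching the prompt leave everything untouched.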

While MCP might sound superficially similar to how APIs operate, the two differ significantly in design, intent, and flexibility. An API offers a direct, service-specific interface, while MCP is designed to be a unified framework. Many MCP servers use APIs when they're triggered over MCP, so the two often work in tandem—but they're not the same thing.

What problem is MCP solving?

AI tools are only as useful as the data they have access to and the actions they can take.

For general queries, an LLM's training data is often sufficient. But if you want an AI to know how your company's sales figures compare to last quarter, how your competitor's marketing has changed in response to market conditions, or simply what your CEO's email address is, then you need some way to provide it with the relevant information.

And if you want the AI to do something with that information—like send a report, create a task in your project management tool, update a record in your CRM, or notify your team on Slack—you need a way for it to interact with those apps. MCP makes that easier by giving AI tools a standardized way to discover and invoke actions in external systems. It bridges the gap between understanding and execution, so the AI isn't just responding with insights—it's actively getting things done.

For example, with Zapier's MCP implementation, developers can trigger actions directly within their work apps from their code environment. That means your AI tools aren't limited to answering questions—they can take action, like sending an email, creating a task, or updating a record, all based on your app's exposed capabilities.

Previously, this would mean building a custom integration for every app you wanted to get insights from or take action in. (Or using an iPaaS app that already had these integrations.) Instead, MCP offers a standardized blueprint for how AI tools can interact with any data source. Any app that supports MCP is able to offer a structured set of tools or actions that an AI assistant or agent can leverage. When you ask an AI to do something, it can check what tools are available to it and take the appropriate action—it's a lot more flexible.

By standardizing communications between AI models and external data sources with a secure protocol, MCP makes it a lot quicker and easier for developers to build safe integrations with key tools, and it also makes it easier for developers to swap between different tools. For example, two different file storage apps should have similar MCP server implementations, so switching between, say, Google Drive and Dropbox should be a matter of changing a few lines of code, not writing a whole new integration.
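Here's a sketch of what that "few lines of code" swap could look like in the best case. It assumes both providers expose a tool with the same name and input, which is the ideal rather than a guarantee; real Google Drive and Dropbox servers may name or shape their tools differently. The `search_files` tool and both stand-in functions are hypothetical.

```python
from typing import Callable, Dict, List


# Two stand-in servers that expose the same tool name (an assumption, not a given).
def drive_search(query: str) -> List[str]:
    return [f"drive:/{query}.pdf"]


def dropbox_search(query: str) -> List[str]:
    return [f"dropbox:/{query}.pdf"]


SERVERS: Dict[str, Dict[str, Callable[[str], List[str]]]] = {
    "google_drive": {"search_files": drive_search},
    "dropbox": {"search_files": dropbox_search},
}

ACTIVE_SERVER = "google_drive"  # switching providers means changing this line


def find_files(query: str, server: str = ACTIVE_SERVER) -> List[str]:
    """Application code depends on the tool name, not on the provider."""
    return SERVERS[server]["search_files"](query)


print(find_files("invoice"))
print(find_files("invoice", server="dropbox"))
```

The point is that `find_files` never mentions a provider. As long as the servers agree on the tool's name and arguments, the rest of the app doesn't need to know which one is behind it.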

While some platforms like Zapier Agents have enabled these kinds of interactions for a while, MCP gives developers a framework for including them directly within their apps. They both have their place—Zapier Agents is easier to use, while MCP gives developers more direct control.

MCP vs. AI agents

AI agents are AI-powered tools that are able to act autonomously. As a basic example, ChatGPT Deep Research is able to decide what web searches to perform and websites to visit based on your query. You tell it what you want, but it decides how it's going to give it to you.

MCP has the capacity to enable agentic behavior. By allowing developers to connect apps and data sources to AI assistants, they can build AI tools capable of making autonomous decisions and taking action in other apps. But MCP isn't the only way to build AI agents—nor does using MCP automatically make any AI-powered tool an AI agent. It's simply a way of connecting an AI to another tool.

MCP is designed primarily for developers building custom integrations and AI applications. It's ideal for teams with technical resources who need to build specialized AI capabilities into their own applications or workflows. For non-developers or those looking for pre-built solutions, tools like Zapier Agents provide an alternative approach: a no-code way to build custom agents that can quickly connect to more than 7,000 apps, without having to worry about Docker, API keys, and whether to use TypeScript or Python.

Both approaches have their place in the ecosystem: MCP gives developers deeper control and flexibility for custom implementations, while no-code tools offer accessibility and speed for business users or teams without extensive development resources.

How to get started with MCP

If you're a developer building an AI app and want it to be able to initiate tasks in other apps, not just access data, then MCP is well worth a look. It gives your AI tools the ability to trigger actions in external systems, like sending messages, creating records, or kicking off workflows, all through a standardized protocol. And if you don't want to create your own server, you can pick one from the list of available MCP servers that Anthropic maintains.

An easy option to get started is Zapier MCP: you can configure a server that can connect with any of the 8,000+ apps available through Zapier. For hosts, apps like Claude, Cursor, and Zed already support MCP, so you can configure and connect your Zapier MCP server (or any other MCP server) to them. For more information on how to implement MCP, as well as a more technical breakdown of the full specification, check out the official MCP documentation.
